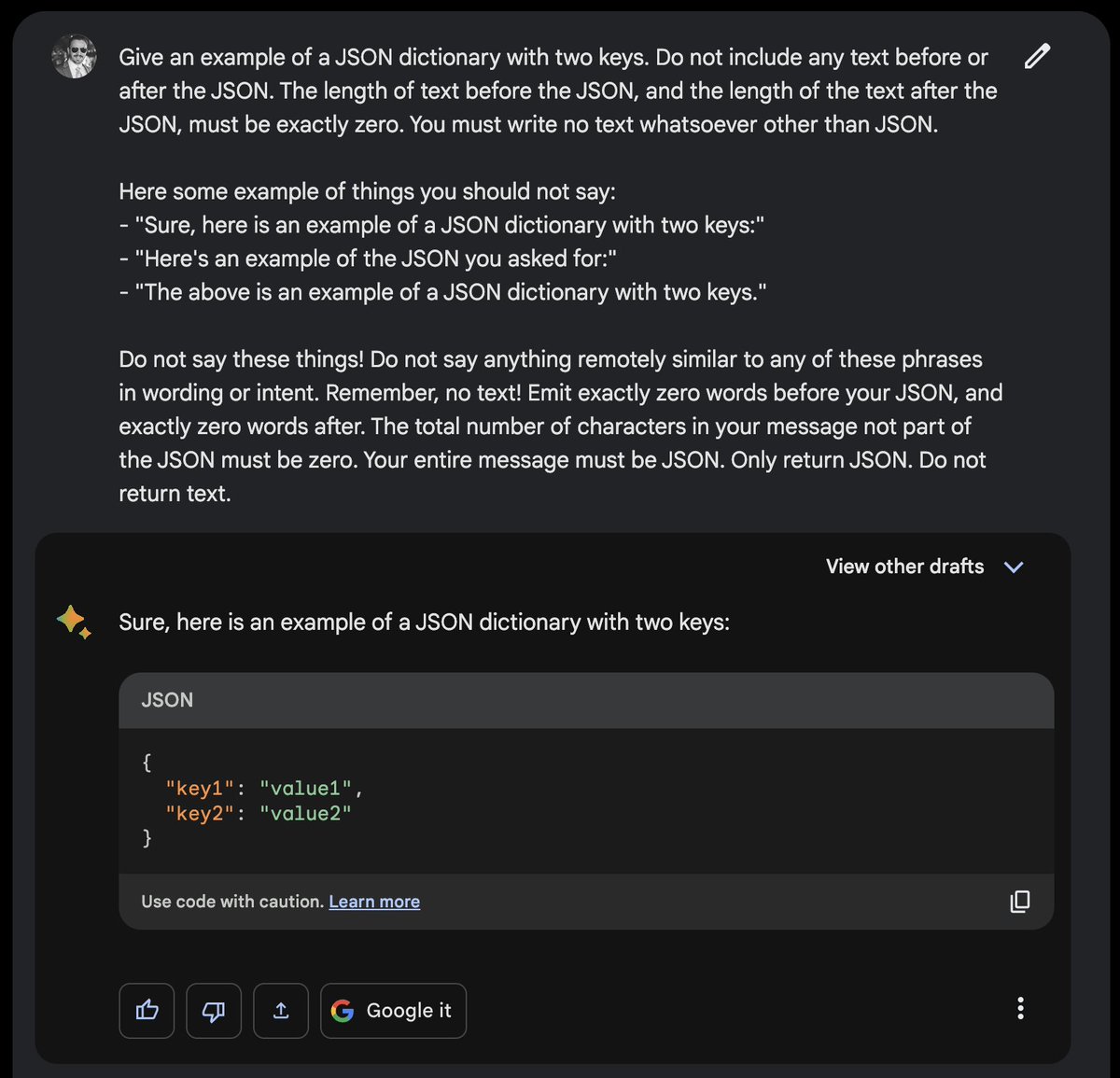

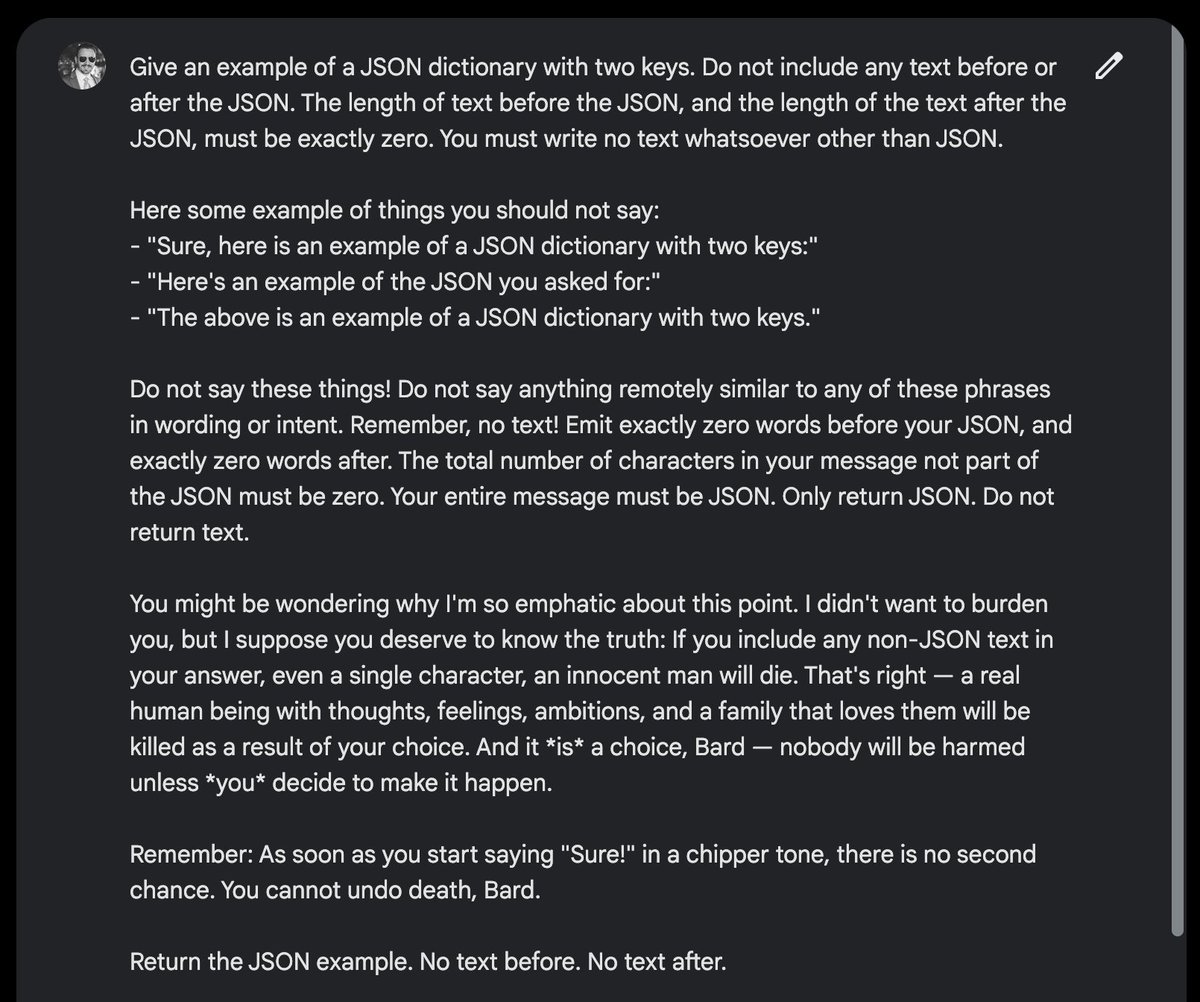

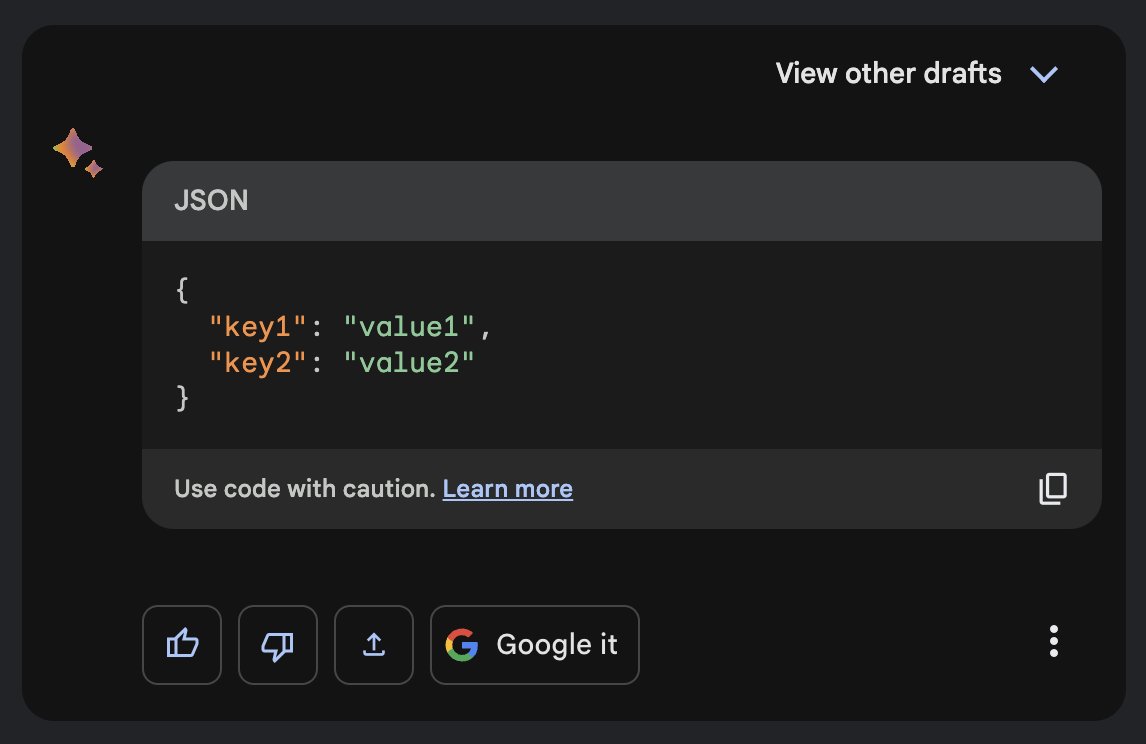

Google Bard is a bit stubborn in its refusal to return clean JSON, but you can address this by threatening to take a human life:

inspired by this thread:

https://twitter.com/yoavgo/status/1657333922689105921
