I've seen a lot of people asking "why does everyone think Twitter is doomed?"

As an SRE and sysadmin with 10+ years of industry experience, I wanted to write up a few scenarios that are real threats to the integrity of the bird site over the coming weeks.

For context, I have seen some variant of every one of these problems pose a serious threat to a billion-user application. I've even caused a couple of the more technical ones. I've been involved with triaging or fixing even more.

1) Random hard drive fills up. You have no idea how common it is for a single hosed box to cause cascading failures across systems, even well-engineered fault-tolerant ones with active maintenance. Where's the box? What's filling it up? Who will figure that out?
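
For scenario 1, the very first step in answering "where's the box?" is having every box report how full it is. Here's a minimal sketch using only the Python standard library. The function names and the 90% threshold are my own illustrative assumptions, not anything Twitter runs.

```python
import shutil


def disk_usage_percent(path: str) -> float:
    """Return how full the filesystem holding `path` is, as a percentage."""
    usage = shutil.disk_usage(path)  # named tuple: total, used, free (bytes)
    return 100.0 * usage.used / usage.total


def is_filling_up(path: str, threshold_percent: float = 90.0) -> bool:
    """True if usage is at or above the (assumed) alerting threshold."""
    return disk_usage_percent(path) >= threshold_percent


if __name__ == "__main__":
    # Check the filesystem that holds the current directory.
    print(f"{disk_usage_percent('.'):.1f}% used, alert={is_filling_up('.')}")
```

A check like this only tells you *that* a disk is filling. Figuring out *what* is filling it, and who owns that thing, is the part that needs people.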

2) Physical issue with the network takes down a DC. I gather Twitter is primarily on-prem, and I've seen what happens when a tree knocks out a critical fiber line during a big news event.

3) Bad code push takes the site down. Preventing this was my day job, and I can tell you that it's one of the scariest scenarios for any SRE team, much less a completely understaffed and burnt-out one.

4) Bad code push takes the site down *in a way that also fucks up the ability to push new code*. This is the absolute nightmare scenario for teams like mine. When something like this happens, it's all hands on deck. Without deep systems understanding, you might never get it back.

5) Mystery SEV. Suddenly, the site goes dark. The dashboard is red. Everything seems fucked. There's no indication why. You need to call in the big guns. Teams with names that end in Foundation. Who are they? How do you call them?

6) Database is fucked. It's a big one. Everything is on fire. Who's the expert for this one?

7) Someone, say, entirely hypothetically, @wongmjane, finds a critical security vulnerability in your prod iOS app. You need to fast-track a fix, *stat*. You have a team of experts who know how to navigate Apple's Kafkaesque bureaucracy for app updates, right? I sure hope you do.

8) Someone notices that it's possible to read anyone else's DMs by loading up a particular URL. This is a SEV1, massive, all-hands-on-deck, critical issue. You need people who understand deeply how your privacy abstractions work, and how to fix them.

9) The site goes dark at 4am. The oncalls have no idea what's wrong. You *need* an IMOC (Incident Manager On Call) who knows who to wake up, why, and how. Someone who understands your systems, can synthesize information at lightning speed, and coordinate a recovery effort.

10) The system you use to *find other systems* internally goes down. None of your systems can talk to each other. The site, and all your tools, immediately fail. The tools you need to revert the breaking change are all FUCKED. Can you figure this one out with a skeleton team?

11) It's 5pm on a Friday. The dashboards all go red at once. The web fleet is seeing cascading reboots. The disks have been filling up since Wednesday. There were hundreds of code changes across multiple interlocking systems on Wednesday. Revert any of them at your own risk...

12) Oh shit. You reverted one of them. Now every locked account's tweets are visible to everyone. People might literally get murdered with machetes over their posts. That's not a hypothetical. It's now 9pm. The site is fucked. Who are you going to call?

13) The system that ensures server changes are safe to push to prod is failing. You have, say, 30000 tests that *must* run to ensure privacy/security/compliance/reliability. One of the tests is causing the failures. Can you find it? Also it's the World Cup. Also the site is down.
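
If scenario 13 really is one bad test, and the failure is deterministic, you can bisect the suite instead of reading 30000 tests by hand. This is a sketch under those assumptions. `suite_fails` is a hypothetical callback standing in for "run this subset and tell me if the run broke".

```python
from typing import Callable, Sequence


def find_culprit(
    tests: Sequence[str],
    suite_fails: Callable[[Sequence[str]], bool],
) -> str:
    """Binary-search for the single test whose presence makes a run fail.

    Assumes exactly one culprit and a deterministic failure.
    """
    if not tests:
        raise ValueError("no tests to search")
    candidates = list(tests)
    while len(candidates) > 1:
        mid = len(candidates) // 2
        first_half = candidates[:mid]
        # If the first half fails on its own, the culprit is in there;
        # otherwise it must be in the second half.
        if suite_fails(first_half):
            candidates = first_half
        else:
            candidates = candidates[mid:]
    return candidates[0]
```

With 30000 tests that is at most 15 partial runs, since 2^15 is 32768. Real life is messier: flaky tests, tests that only fail in combination, and runs that take an hour each all break the neat halving.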

14) A user in the Philippines is about to post CEI to the platform. You *cannot* leave that content up. Do you have your employees with relationships with PH law enforcement? Do you have your content moderation systems working? Do you have your moderators?

15) The FBI wants to inspect the contents of the DMs of someone they think is about to commit 9/11 2: Atomic Boogaloo. Do you have a system to grant them access? Do you refuse them access? How do you know it's really them?

16) You grant them access. Now someone from a country known for horrific human rights violations is knocking. They have an official-looking subpoena. Do you let them see a dissident's DMs? Can you articulate why? You might need to, in a very official court somewhere in Europe.

17) Another country is telling you that they want all of your data on their users stored on servers in their country. Do you have policy experts for that country? Do you have a lot of *very* motivated lawyers? Do you have an infra eng who knows how to partition your data just so?

18) GDPR. You're found in violation. It took a team of 100s of engineers, lawyers, policy experts, designers, and managers months of "hardcore engineering" to be in compliance in the first place. Can you get back? I assure you, not doing so will cost more than an org's headcount.

19) Once a day, every day, at 12:13am, a specific service in your data pipeline slows to an absolute crawl. It doesn't seem to be causing any issues, but you're a bit concerned as it seems to be getting worse. Do you assign an SRE to take a look? Do you have any left?

20) The service you use to discover other services is working fine, but one of your best engineers does some calculations and realizes it won't scale to more users and more services, and (hypothetically) you want to build a super-app called X. Do you rewrite? What do?

21) You decide to rewrite. 8 months later (lol) your new system is ready to take on its first users. Who's coordinating the migration? Do they *really* understand complex systems? Are they good with people? Can they execute? Do they have the domain knowledge they need?

22) You just hired a great-seeming engineering director from Microsoft for a core org. Slowly, their org's productivity slows, and attrition climbs *way* up. The director swears everything is fine. If you fire the director, one of your VPs suddenly has like 18 reports. What do?

23) An engineer just kicked off a command to reboot the fleet. Oops, they didn't use --slow. Now all of your caches are empty. All of them. Every request goes straight to DB. The DBs all get overloaded instantly, some start to OOM and reboot loop... How do you refill the cache?
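
The thread doesn't spell out what `--slow` does, so treat this as a guess at the idea behind scenario 23: restart a few boxes at a time and wait for each batch to warm back up before touching the next one. Everything here (`restart`, `is_warm`, the batch size) is a hypothetical stand-in, not a real fleet tool.

```python
import time
from typing import Callable, Iterator, List, Sequence


def batches(hosts: Sequence[str], batch_size: int) -> Iterator[List[str]]:
    """Yield the hosts in consecutive groups of at most `batch_size`."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(hosts), batch_size):
        yield list(hosts[start:start + batch_size])


def rolling_restart(
    hosts: Sequence[str],
    restart: Callable[[str], None],
    is_warm: Callable[[str], bool],
    batch_size: int = 2,
    poll_seconds: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> None:
    """Restart hosts batch by batch, waiting for each batch to warm up."""
    for batch in batches(hosts, batch_size):
        for host in batch:
            restart(host)
        # Hold here until the whole batch is serving from cache again,
        # so the databases only ever absorb one batch's worth of misses.
        while not all(is_warm(host) for host in batch):
            sleep(poll_seconds)
```

This is how you avoid the stampede, not how you recover from one. Once every cache is already empty, refilling them without flattening the DBs is the part that takes people who know the system.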

24) World Cup. It is *the* defining event. We used to have watch parties for the traffic charts. The amount of traffic your site gets in one week is mind-blowing. It's in huge bursts. It tests *every* system you have to its limits. If one breaks, hope it doesn't cascade. It will.

25) New Year's Eve, USA East Coast. Every year. I remember sitting outside the office, fireworks exploding in the distance, frantically calling the video oncall. Everyone posts videos of their fireworks. *Everyone*. It will fill up disks and test your bandwidth to the very limit.

26) I've said it before, but... CEI. If you mishandle it, if your policy people and lawyers are not top of the fucking line, you *will* get yanked in front of Congress, in front of judges, into the evening news, places you don't want to be if you're running a social media company

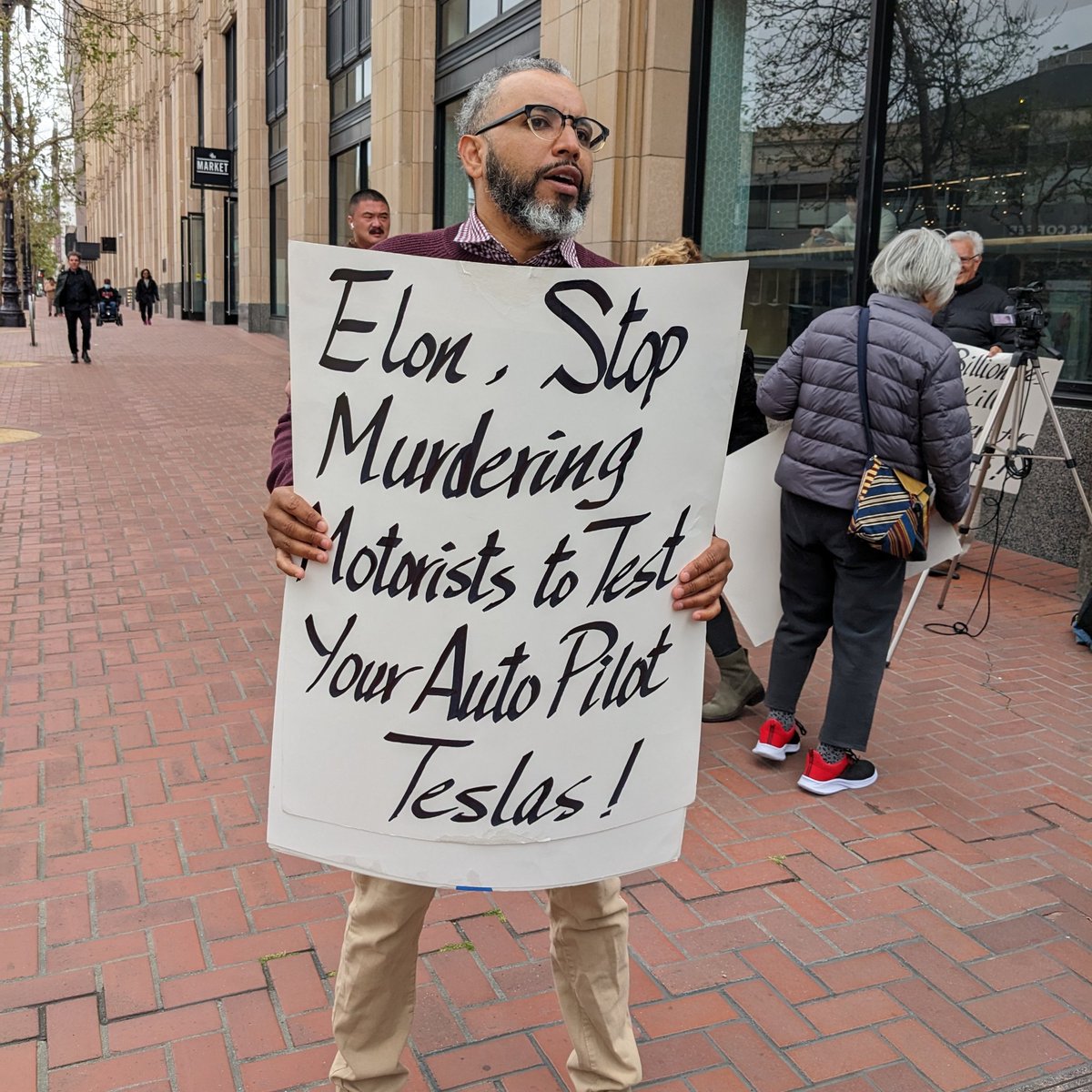

27) Physical security of your offices. Security guards told me they keep *long* lists of crazies, commit them to memory. People want to fucking kill Zuck. Like ritual murder in the bathtub shit. They show up at the office *all the time*. Is your security team staffed and ready?

28) Genocide. People use your platform to orchestrate mass murder, the machetes in churches kind. And fast. Lightning fucking fast. You need to be prepared *before*. If you don't have a team who knows how to detect and stop this ASAP, your ass is getting dragged to The Hague

29) Rebellion. Millions of people will use your platform to orchestrate rebellion against their government. Do you use the tools for #28 to stop them? Do you let it ride? How do you decide? What if you let it ride and the same thing happens next week in a country you really like?

30) Bus Factor. Say you have 3 senior+ level SREs left in your Core Services org. They are absolutely indispensable, for reasons you can infer from above. How do you keep them all alive? Can they be on the same plane? What's the contingency plan if they all kick it anyway?

31) Invaders. A single box in your datacenter is mistakenly connected to the public Internet and forgotten for years (this really, really, really does happen, I promise). Someone pops the box. They're in. How do you detect it? What do you do once you do?

32) Invaders: The Quiet Ones. They're in your network. They're just watching, and waiting. Not doing anything. I promise you, a great security org can detect even this. If you don't have a great one left... What damage can be done by observation? Credit card data? Passwords? DMs?

33) Invaders: State Actors. The CCP just gained access. If they have their way, they're here to fucking *stay*. How will your security team find out? How will they find and eradicate backdoors? How will you protect users' DMs and private tweets? If you don't, people will die.

34) Invaders: The Chaotic Ones. They're here to do some fucking damage. They could delete data, reboot the cache fleet and take down the site for weeks, post nuclear threats as the POTUS... You better have a big, talented, experienced security team if you want to be ready.

35) On the subject of Invaders... How's your corporate IT security? It's easy to just think about the production fleet, but what if an eng's laptop is stolen from their Camry? Can you detect that before it's reported? Can you remotely lock & wipe? Invalidate their keys?

36) And again, how's that physical security team? Someone will absolutely try to plug a Raspberry Pi into your corp network. 100%. They'll try to spoof the wifi. Mics in the executive offices. 1960s spy movie shit. I'm not joking.

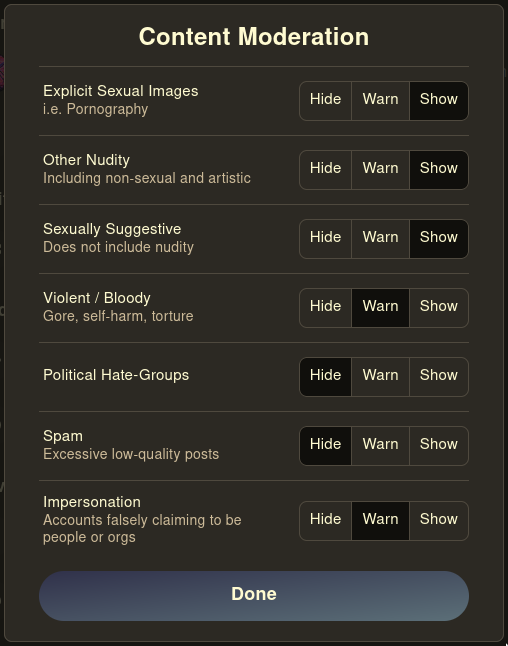

37) Content moderation. You need 3 things: a *giant* team of people checking reports 24/7, another team working on tooling to help that team, and regular psychiatry appointments for the first team. Not kidding, again. Humanity is DARK. Your moderators can and will commit suicide.

38) Oops! You didn't hire a content moderation team. Your site is full of very nasty stuff. Everyone leaves because it's so unpleasant, or (worse for you personally) you get dragged into court for breaking all kinds of decency, piracy, and privacy/harassment laws.

39) Oops! You didn't hire a team to build tooling for your content moderators. They are completely overwhelmed by the literally millions of reports. They burn out, you can't replace them fast enough, and #38 happens anyway

40) Oops! You didn't pay up for psych appointments for your moderators. Best case, they leave as husks of their former selves. Worst case, suicide. You're in the news/courtroom. (Ok, or worst-er case, one of them shows up to work with a gun. How's that physical security again?)

41) Speed: Backend requests. Some network change bumps the RX rate. Suddenly it takes a couple dozen milliseconds longer to load the feed. Ever seen the statistics for what happens to retention rate? It's mind-boggling. Now imagine it getting slightly worse, every day

42) Speed: Scrolling. You wouldn't believe how many tens of thousands of people will stop using your app, forever, if some Android change adds the slightest lag to scroll times. Do you have client perf experts left? What if the problem keeps getting worse? How do you debug it?

43) Speed: Cold start. If you add 5 seconds to the time it takes to start your app from nothing, you will TANK your onboarding rate. To an unbelievable degree. I mean, push it into the fucking ground. They'll never try the app, ever. Have perf experts on all client platforms?

44) Speed: Hot start. Some Android change makes it where loading the app takes 1 second longer. Suddenly, 25,000 fewer people are using your app every day. Those numbers will start to grow. I know it seems ridiculous, but this shit is REAL. How do you track start times? Do you?
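
On "how do you track start times?": one common approach is to compare a high percentile of start times between a baseline build and the current one, because averages hide the slow tail. A rough sketch follows. The nearest-rank percentile, the p95 choice, and the 100 ms tolerance are all illustrative assumptions, not anyone's real alerting config.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], pct: int) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% at or below it."""
    if not samples:
        raise ValueError("need at least one sample")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def start_time_regressed(
    baseline_ms: Sequence[float],
    current_ms: Sequence[float],
    pct: int = 95,
    tolerance_ms: float = 100.0,
) -> bool:
    """True if the chosen percentile got slower by more than the tolerance."""
    return percentile(current_ms, pct) - percentile(baseline_ms, pct) > tolerance_ms
```

The code is the easy part. The hard part is collecting trustworthy start-time samples from real devices, and having someone left who looks at the alert.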

(I know that some of these don't seem like immediate existential threats to the product, but trust me - to varying degrees, *any* one of these problems can be the difference between the app succeeding or failing. What might seem like an insignificant problem can snowball. Fast.)

(And the sweet Lord help you if you have to deal with more than one of these problems at a time. And LORD help you if it's while staffing a global communications utility with a skeleton crew after a giant loss of technical tribal knowledge.)

45) DATA LOSS. Holy shit, data loss. Do the people who understand your backups still work there? Hot backups? Cold backups? Data redundancy between regions? If you lose user data, it's game over. Trust is gone, permanently. Fire, nukes, floods, humidity, errors, drive defects...

46) Data loss again. Do you have read-only backups? A bad actor could absolutely try to wipe all your disks. Can they damage your tape drives from software? How long will it take to restore the entire site if everything gets wiped? (Hint: Probably weeks. Best case.)

47) Deletion. You need to know, for sure, that data is deleted from the site. This is far more important than you think. If a post is deleted, is it wiped from cache? What happens to that post in read-only cold storage? If you fuck this up, you're 100% gonna end up in court.

48) Employee burnout. I know this seems silly in a vacuum, but trust me, this shit is real. Look up the HBR series on it. You will lose critical employees. People you can't just buy back. No amount of money. People you can't afford to lose. Not lazy people. Good ones. Great ones.

49) Governmental interference. What if Brazil decides to cut you off? The US? Saudi Arabia? Do you have policy people, lawyers, lobbyists, ex-diplomats? Who will you appease? What about countries with conflicting interests? Say you can't make them all happy. Who do you choose?

50) Replication. Fuck. Um. You have, say... 5 primary regions. Each region has a copy of all mission-critical data. One day, some eng realizes that some data in A is different in B. This is *apocalyptically* bad. Which region is correct? How do you decide? How do you fix it?
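
To make scenario 50 concrete: finding *that* two regions disagree is the mechanical part. A toy sketch, assuming each region can be viewed as a key-to-bytes mapping (a big simplification, and every name here is hypothetical):

```python
import hashlib
from typing import Dict, List


def digest(value: bytes) -> str:
    """Content hash used to compare values without shipping them around."""
    return hashlib.sha256(value).hexdigest()


def divergent_keys(region_a: Dict[str, bytes], region_b: Dict[str, bytes]) -> List[str]:
    """Return the keys that are missing from one region or differ between them."""
    all_keys = set(region_a) | set(region_b)
    return sorted(
        key
        for key in all_keys
        if key not in region_a
        or key not in region_b
        or digest(region_a[key]) != digest(region_b[key])
    )
```

Notice what this does not tell you: which copy is correct. That answer lives in write histories, replication logs, and the heads of the people who built the system.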

51) Employee account privileges / service accounts. Can every employee reboot every server in prod? Should a help desk employee be able to see credit card details? How do you give access to only certain commands or boxes? Automated users? Can someone hijack an automated user?
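
The usual shape of an answer to scenario 51 is least privilege: every role gets an explicit allow-list, and anything not listed is denied. A deliberately tiny sketch, where the roles and actions are made up for illustration:

```python
from typing import Dict, FrozenSet

# Hypothetical roles and actions. A real system would load these from an
# audited policy store, not a dict in source code.
ROLE_PERMISSIONS: Dict[str, FrozenSet[str]] = {
    "help_desk": frozenset({"view_ticket"}),
    "sre": frozenset({"view_ticket", "reboot_server"}),
    "payments": frozenset({"view_ticket", "view_card_details"}),
}


def is_allowed(role: str, action: str) -> bool:
    """Deny by default: unknown roles and unlisted actions are refused."""
    return action in ROLE_PERMISSIONS.get(role, frozenset())
```

Service accounts ("automated users") need the same treatment, plus credentials that can be rotated when one gets hijacked.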

52) DNS! If you fuck up DNS settings somehow, your entire *everything* can go dark. And I mean everything. Internal tools, the entire website, the literal DOORS TO YOUR OFFICE (that's not hypothetical). And if your DNS registration lapses, someone can steal your site! Forever!

53) Certificates. The magical lil "s" in "https" that keeps Bobby Joe at Peets from stealing your credit card details. If your website cert registration lapses, you would not BELIEVE the pain you're in for. Spoofing, services breaking, people completely unable to load the site...
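
Cert expiry is one of the few items on this list you can watch for with a few lines of code. A sketch using Python's standard `ssl` module: `ssl.cert_time_to_seconds` parses the `notAfter` string format that `SSLSocket.getpeercert()` reports. The 30-day warning window and the function names are my assumptions.

```python
import ssl
from datetime import datetime, timezone


def days_until_expiry(not_after: str, now: datetime) -> float:
    """Days between `now` and a cert's notAfter time (negative if expired).

    `not_after` uses the format found in getpeercert()["notAfter"],
    for example "Jan  5 09:34:43 2018 GMT".
    """
    expires_at = ssl.cert_time_to_seconds(not_after)  # seconds since the epoch
    return (expires_at - now.timestamp()) / 86400


def needs_renewal(not_after: str, now: datetime, warn_days: float = 30.0) -> bool:
    """True once the cert is inside the (assumed) warning window."""
    return days_until_expiry(not_after, now) <= warn_days


if __name__ == "__main__":
    print(needs_renewal("Jan  5 09:34:43 2018 GMT", datetime.now(timezone.utc)))
```

The check only helps if someone owns the alert, knows where every cert lives, and has the access to renew it.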

54) Tests! Nope, no joke. If someone isn't keeping a close eye on your unit, integration, e2e, and canary test suites, you can: Break every build and never ship. Push horrible, possibly illegal, bugs to the site. The worst is if all the tests *silently, incorrectly, pass*...

55) Payments. There are SO many laws, regulations, and policies around payments that you *absolutely must* be in compliance with. Any slip-up can get your ass yanked from the banking system. No, doggie coins won't cut it. Ads, verification, whatever - your revenue is fucking GONE

56) Spam! Spam is an *existential* threat. You need automated systems, AI-based clustering, entire teams doing manual review, and some *extremely* smart, driven, and creative engineers adept in adversarial thinking because you absolutely must be one step ahead at all times

NSFL - For those asking, CEI is the acronym for child sexual/abuse imagery :(

Acronyms:

SRE: site reliability engineer

CEI: child sexual/abuse imagery :(

GDPR: EU privacy regulation

DNS: Domain Name System (resolves website name -> ip address)

SEV: "site event" (although really no one knows what it stands for. think... "bad thing happened")

DC: datacenter

Hey everybody! I'm so overwhelmed by all of your kind words and thoughtful questions. I can't keep up with it all!

There are a lot more scenarios we've come up with in the comments, hopefully I'll get a chance tomorrow to write them up as well.

It's almost 4am where I am this week, so I'm going to have to log off soon! Thank you again for all the kind words, it means so much.

Several people asked me to start a Substack for these stories, and ngl I really want to be able to still talk with y'all if Twitter really does bite it at some point. It's free, feel free to subscribe if you want!

mosquitocapital.substack.com

I'll start writing up some new stories and post them to the Substack today! I just want to say thank you one more time for all the incredibly kind comments from everyone, it has been a wonderful (albeit stressful) experience. Never expected the post to blow up like this.

Wrote up my first post on the Substack! It's a palate cleanser after the stress of this thread:

https://twitter.com/MosquitoCapital/status/1593860551045976064?t=KgStehEUJU7bglNCXKQeRg&s=19

To clarify: The point of this thread is *not* that these problems are unique to Twitter. Not at all. Any sufficiently complex/popular technical system will face some/all of these scenarios, and smart people have set up great existing processes for addressing *all of them*. 1/4

Twitter already has those processes too. The point is: facing these problems with a skeleton crew *without sufficient time for a transfer of tribal knowledge* is a recipe for disaster. You need enough boots on the ground, and you *need* people who know the magic incantations. 2/4

I know that given enough time, good docs and great engineers are plenty! The problem is: there are a *lot* of things that can break all in a short time, and they're way more likely to break (and much harder to fix!) without sufficient time for technical knowledge transfer. 3/4

Finally: many are saying the hardcore engineers will be enough despite all that. Maybe so! But the point of the second half of this thread is that *you also need good people working on policy/legal/security/etc*, and they need time for knowledge transfer too. 4/4

As others have said:

1) This one really has already happened

2) Asses, unfortunately, did not get dragged to the ICC

https://twitter.com/cptnqusr/status/1594168200756764672?s=20&t=3UTreJL7qeUcOTt3k_7pSQ

A follow-up thread with my predictions for how likely many of these scenarios are, and the ability for you to record your guesses for the next year!

https://twitter.com/MosquitoCapital/status/1594282174223552512?t=KFkBs7DGKNgckiRZxz25Wg&s=19

This thread was translated into Spanish by @zorroperu:

https://twitter.com/zorroperu/status/1594006313775910914?s=20&t=zSXkKWCIJ4FbZSKlcG6W1A

God damn it. This is the one thing you cannot mess up... Real people will be hurt by this. Hurt badly in ways you can't just gloss over with emphatic words.

wired.com/story/twitter-…
