Thread: After @icsjournal's "APIcalypse" issue & #IC2S2 2019, it's clear many are asking what the future holds for social media data. After working on privacy & data access at FB for > a year, I have thoughts. Thread ends w/ a little-known source for FB page data, so read to the end.

In 2011, @seanjwestwood & I ran an (IRB-approved) study using Facebook's Graph API to analyze participants' entire ego network on the fly, have strong vs. weak ties endorse an experimental post (stimulus), then re-render participants’ News Feeds. Those days are over.

The API was meant for developers to build on top of the social graph, but approvals were friendly to researchers. The scope of the data was startling & thankfully Sean had the foresight to delete data beyond what was necessary for analysis & publication.

Times have changed - OPM, MyLife, Equifax, Cambridge Analytica. Privacy issues are difficult to anticipate & secure, especially for complex & relational data. & CA showed the world that APIs are open to nefarious schemes and basic research alike.

Data privacy is in the air in newsrooms & capitals across the world. As privacy advocates devise strategies and work to protect people from identity theft, scams, information & other abuse, often social scientists advocating for research aren't at the table.

In the wake of these cross currents, privacy legislation & regulation present challenges for research communities. No company can shrug off a $5 bn fine for being too permissive w data—data collected via APIs in the name of social science research.

What's more, under GDPR, meaningful, socially beneficial independent social science research often maps to the generally prohibited processing of "3rd party sensitive category data collected without consent." iapp.org/news/a/how-gdp…

Now the GDPR's research exemption carves out a protected legal space for that! BUT--only if originally *collected for research purposes.* The data everyone cares about was collected for business. What's left? Case-by-case opt-in--severe confounds, overhead, paperwork, etc.

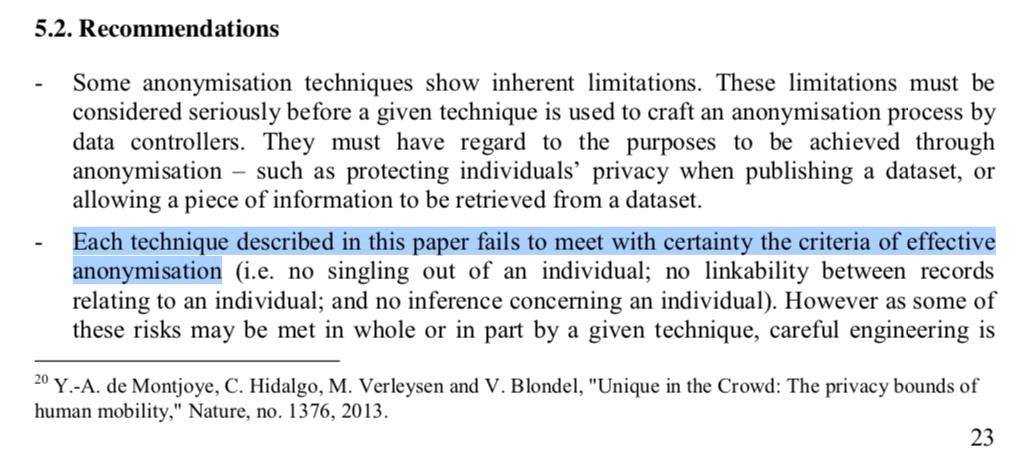

Another path is if the data in question are anonymous. BUT GDPR does not define anonymity the same way that, say, HIPAA does (PII removed). Instead, data is anon if it cannot "reasonably" be re-identified. Sounds great, until you get into details pdpjournals.com/docs/88197.pdf

Differential privacy provides a theoretical framework showing that firm provable guarantees can be made. BUT they are probabilistic. What does "reasonably protected from reidentification" mean if it is always possible to some degree?
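For reference, here is the standard textbook statement of that guarantee. It is not spelled out in the thread, and the symbols ($M$ for the randomized mechanism, $D$ and $D'$ for two datasets differing in one person's records, $S$ for any set of outputs) are the usual notation rather than anything specific to this discussion. A mechanism $M$ is $\varepsilon$-differentially private if, for all such $D$, $D'$ and all $S$:

$$
\Pr[M(D) \in S] \;\le\; e^{\varepsilon} \, \Pr[M(D') \in S]
$$

Smaller $\varepsilon$ means the output distributions with and without any one person are harder to tell apart. The bound is on a ratio of probabilities, which is why the protection is a matter of degree rather than an absolute.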

GDPR was not drafted to make things difficult for social media researchers. Rather, it may not have been crafted with this kind of research in mind. We are now grappling with likely unintended consequences.

What can be done? Global companies follow applicable law & regulation. Researchers need a seat at the policy making table to incentivize corporate sharing for basic research. May be even as simple as removing some of the downside risk.

People making decisions need to understand the societal value of social science. So keep sending work to @monkeycageblog, @MisOfFact, weigh in on Twitter, talk to journalists & present to policy-makers.

And learn about privacy - as social scientists we don't have the training to deeply understand the issues & participate in the debate. So read up on differential privacy.

Briefly: johndcook.com/blog/2018/11/0…, more here privacytools.seas.harvard.edu/files/privacyt… and finally cis.upenn.edu/~aaroth/Papers….

Unfortunately, DP introduces noise, requires hard-to-come-by expertise & is suitable for answering only a limited number of questions. The US Census has worked on DP for nearly a decade & assumes that it is not currently feasible for the ACS census.gov/content/dam/Ce…
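To make the noise and the "limited number of questions" concrete, here is a minimal sketch of the Laplace mechanism for counting queries, assuming basic sequential composition (the epsilons of separate queries add up). The function names are hypothetical and for illustration only; this is not how the Census Bureau or any particular DP library implements it.

```python
import random


def laplace_noise(scale, rng):
    # The difference of two i.i.d. exponentials with mean `scale`
    # is distributed as Laplace(0, scale).
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def noisy_count(true_count, epsilon, rng):
    # A counting query changes by at most 1 when one person is added or
    # removed (sensitivity 1), so the Laplace scale is 1 / epsilon.
    return true_count + laplace_noise(1.0 / epsilon, rng)


def per_query_epsilon(total_epsilon, num_queries):
    # Assumption: basic sequential composition, so a fixed total budget
    # is split evenly across the queries asked.
    return total_epsilon / num_queries


def expected_abs_error(epsilon):
    # The mean absolute value of Laplace(0, b) is b; here b = 1 / epsilon.
    return 1.0 / epsilon


if __name__ == "__main__":
    rng = random.Random(0)  # seeded only so the demo is repeatable
    total_budget = 1.0
    for k in (1, 10, 100):
        eps = per_query_epsilon(total_budget, k)
        print(k, "queries -> expected abs error per count:", expected_abs_error(eps))
    print("one noisy release of a count of 1000:", noisy_count(1000, 1.0, rng))
```

Under these assumptions, the expected error per count grows in proportion to the number of questions asked of the same data, which is the trade-off behind the limits above.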

And if you’ve read this far to the bitter end, here’s a tip. If you've been negatively affected by the pages API restrictions (e.g., https://twitter.com/TristanHotham/status/1158659238069252096) apply for CrowdTangle access via @SocSciOne here socialscience.one/rfp-crowdtangle. It doesn't have everything, but it has a lot.
