You can always rely on @nonprofitssay to tell the hard truths, and it turns out that this particular one is backed up by research. Thread:

https://twitter.com/nonprofitssay/status/1160934071955935232

Earlier this year I highlighted a study from @CEPData and @Eval_Innovation that revealed the futility of many foundations' evaluation efforts. The numbers from that report are eye-popping, but it's admittedly only one study. How much stock to put in it?

https://twitter.com/iandavidmoss/status/11135548873281167381/

Fortunately, there are other studies out there. And on the whole, they strongly reinforce the point that people with influence over social policy simply don't read or use the vast majority of the knowledge we produce, no matter how relevant it is. 2/

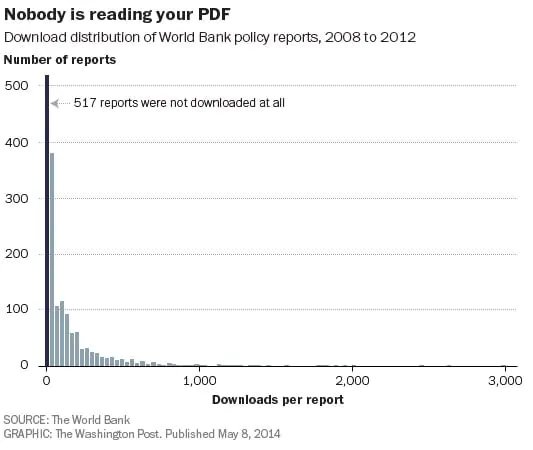

One of my favorite factoids of all time comes from a study the @WorldBank conducted on its own policy papers several years ago. The methodology was simple: researchers just counted the number of times each paper had been downloaded between 2008 and 2012. documents.worldbank.org/curated/en/387… 3/

They found that three-quarters of these papers, each one representing likely hundreds of hours and thousands of dollars of investment on the institution's part, were downloaded fewer than 100 times. Nearly a third had never been downloaded EVEN ONCE! Not even by their authors! 4/

This was all chronicled in a Washington Post piece by @_cingraham with the unforgettable title, "The Solutions to All Our Problems May Be Buried in PDFs Nobody Reads." Touché! washingtonpost.com/news/wonk/wp/2… 5/

Research that asks policymakers and philanthropists about their reading habits tells a similar story. I wrote last year about the @Hewlett_Found's "Peer to Peer" study, which surveyed more than 700 foundation professionals in the United States.

https://twitter.com/iandavidmoss/status/9634364537023897606/

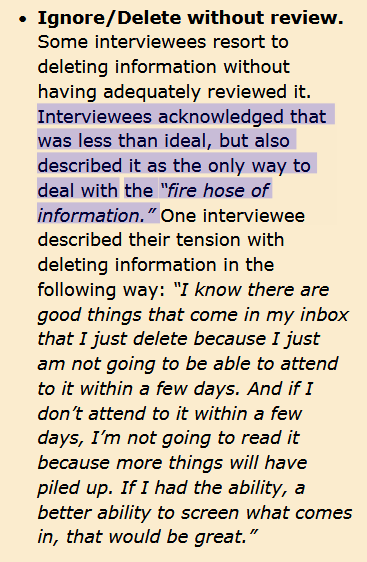

Funders responding to that survey report being completely overwhelmed with information, to the point where some of them just delete emails announcing new reports and studies without even skimming them first to see if they’re relevant. 7/

In a study of over 1600 civil servants in Pakistan and India by @EPoDHarvard, policymakers "agreed that evidence should play a greater role in decision-making" but acknowledged that it doesn't. washingtonpost.com/news/monkey-ca… 8/

According to the study, the issues are structural. "Few [respondents] mentioned that they had trouble getting data, research, or relevant studies. Rather, they said...that they had to make decisions too quickly to consult evidence and that they weren’t rewarded when they did." 9/

And the topline finding of an @EHPSAprog/@INASPinfo study looking at HIV policymakers in eastern and southern Africa is that "policymakers value evidence but they may not have time to use it." ehpsa.org/critical-revie… 10/

What about front-line practitioners? A US survey found doctors generally don't follow research relevant to their practice area, and when research comes out that challenges the way they do their work, they expect their medical associations to attack it. washingtonpost.com/news/monkey-ca… 11/

The UK's @EducEndowFoundn conducted an RCT to test strategies to get evidence in front of schoolteachers. They tried online research summaries, magazines, webinars, conferences. None of these methods had any measurable effect on student outcomes. educationendowmentfoundation.org.uk/news/light-tou… 12/

It's important to note that none of this is news to the people whose job it is to generate and advocate for the use of evidence. In my experience, the vast majority know this is a huge problem and have their own stories to tell. 13/

For example, this report from the 2017 Latin American Evidence Week @evidencialatam decried the "operational disconnect [that] makes it impossible for evidence generated at the implementation level to feed into policy (and programme) design." onthinktanks.org/articles/latin… 14/

Or consider the 125 social sector leaders interviewed by @Deloitte @MntrInstitute's Reimagining Measurement initiative, who identified “more effectively putting decision-making at the center” as the sector’s top priority for the next decade. www2.deloitte.com/us/en/pages/mo… 15/

The consistent theme in all these readings: it's really tough to get policymakers and other people in power to use evidence, especially when it challenges their beliefs. Very, very rarely will evidence on its own actually influence the views or actions of influential people. 16/

What's really astounding about this is how much time, money, and attention we spend on evidence-building activities for apparently so little concrete gain. I mean, we could just throw up our hands and say, "well, no one's going to read this stuff anyway, so why bother?" 17/

But we don't. Instead, it's probably not an exaggeration to say that our society invests millions of hours and billions of precious dollars toward generating knowledge about the social sector...most of which will have literally zero impact. 18/

So we are either vastly overvaluing or vastly undervaluing evidence. We need to get it right. Because those are real resources that could be spent elsewhere, and the world is falling apart around us while we get lost in our spreadsheets and community engagement methodologies. 19/

Don't get me wrong. I believe in science. We can learn so much just from better understanding and connecting the work we've already done! At the same time, there's so much more we could be doing to ensure we get bang for our evidence buck. E.g.: cep.org/why-your-hard-… 20/

Thanks for listening. Sometime soon I'll post about what people have done to try to increase evidence use, and what seems to work. /fin
