1/ The “Auditing Radicalization Pathways” study is worth looking at. arxiv.org/abs/1908.08313

They use comments to track users' pathways across time. This is clever, and it's a great way to get at the real movement of viewers between channels. However...

2/ I read the paper and came away baffled that it is considered evidence supporting the rabbit-hole/alt-right-radicalization narrative. Let me show you the important parts.

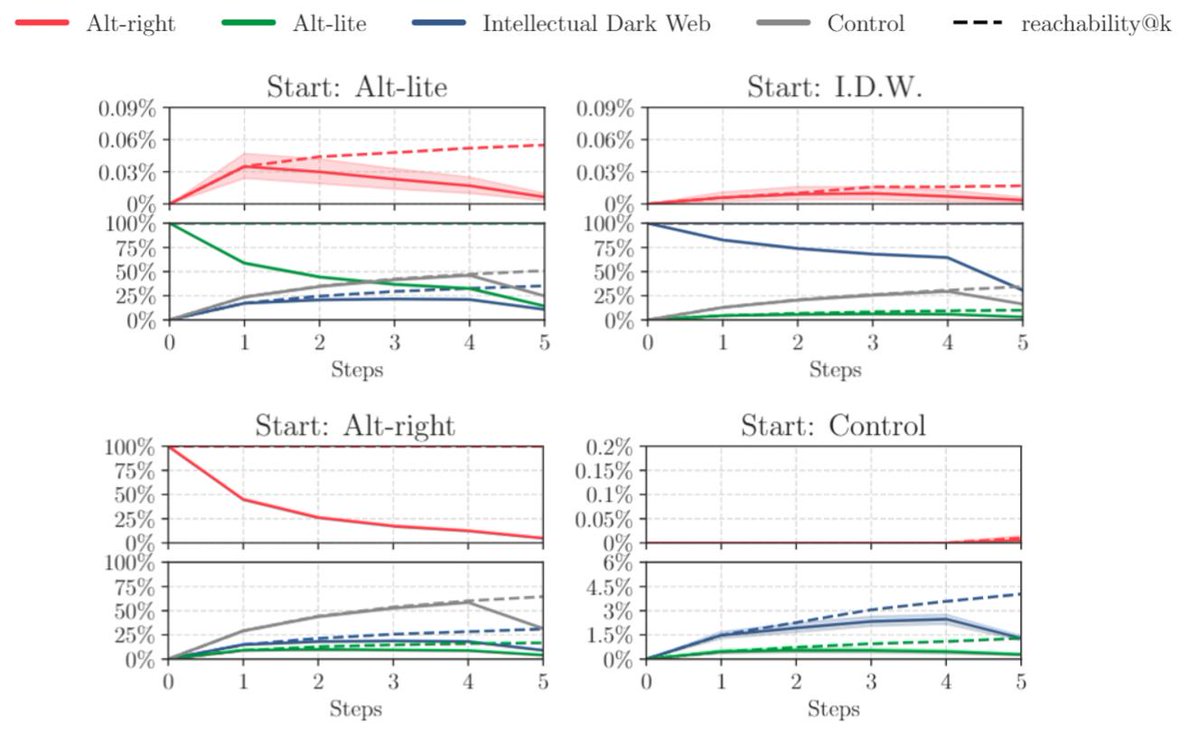

3/ This shows that users who commented exclusively on IDW channels are more likely than the control group to comment on alt-right channels later on.
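
To make the metric concrete, here is a minimal sketch of how a comment-based migration rate could be computed. It is not the paper's pipeline: it assumes a hypothetical data layout of `(user, period, category)` tuples, one per comment, and the function names and toy data are invented for illustration. The idea is to take the users who commented only on one category in an earlier period and count what share of them comment on the target category later.

```python
from collections import defaultdict

def exclusive_commenters(comments, period, category):
    """Users whose comments in `period` were all on `category` channels."""
    cats_by_user = defaultdict(set)
    for user, p, cat in comments:
        if p == period:
            cats_by_user[user].add(cat)
    return {user for user, cats in cats_by_user.items() if cats == {category}}

def migration_rate(comments, source, target, start, end):
    """Share of `source`-exclusive commenters in period `start` who comment
    on a `target` channel in some later period up to and including `end`."""
    cohort = exclusive_commenters(comments, start, source)
    if not cohort:
        return 0.0
    migrated = {
        user for user, p, cat in comments
        if user in cohort and start < p <= end and cat == target
    }
    return len(migrated) / len(cohort)

# Made-up toy data: (user, period, channel category), one tuple per comment.
comments = [
    ("a", 1, "IDW"), ("a", 2, "Alt-right"),
    ("b", 1, "IDW"), ("b", 2, "IDW"),
    ("c", 1, "Control"), ("c", 2, "Control"),
    ("d", 1, "Control"), ("d", 2, "Alt-right"),
    ("e", 1, "Control"),
]

print(migration_rate(comments, "IDW", "Alt-right", 1, 2))      # 0.5
print(migration_rate(comments, "Control", "Alt-right", 1, 2))  # 1 of 3 users
```

Note that this only measures flow out of one cohort into one target category; it says nothing about flow in the opposite direction.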

4/ @JeffreyASachs called this 4% movement (vs. 1% for the control) a “high % of people”, and author @manoelribeiro called it “consistent migration” to the alt-right. Decide for yourself if that is fair.

I do believe this result corresponds to reality tho...

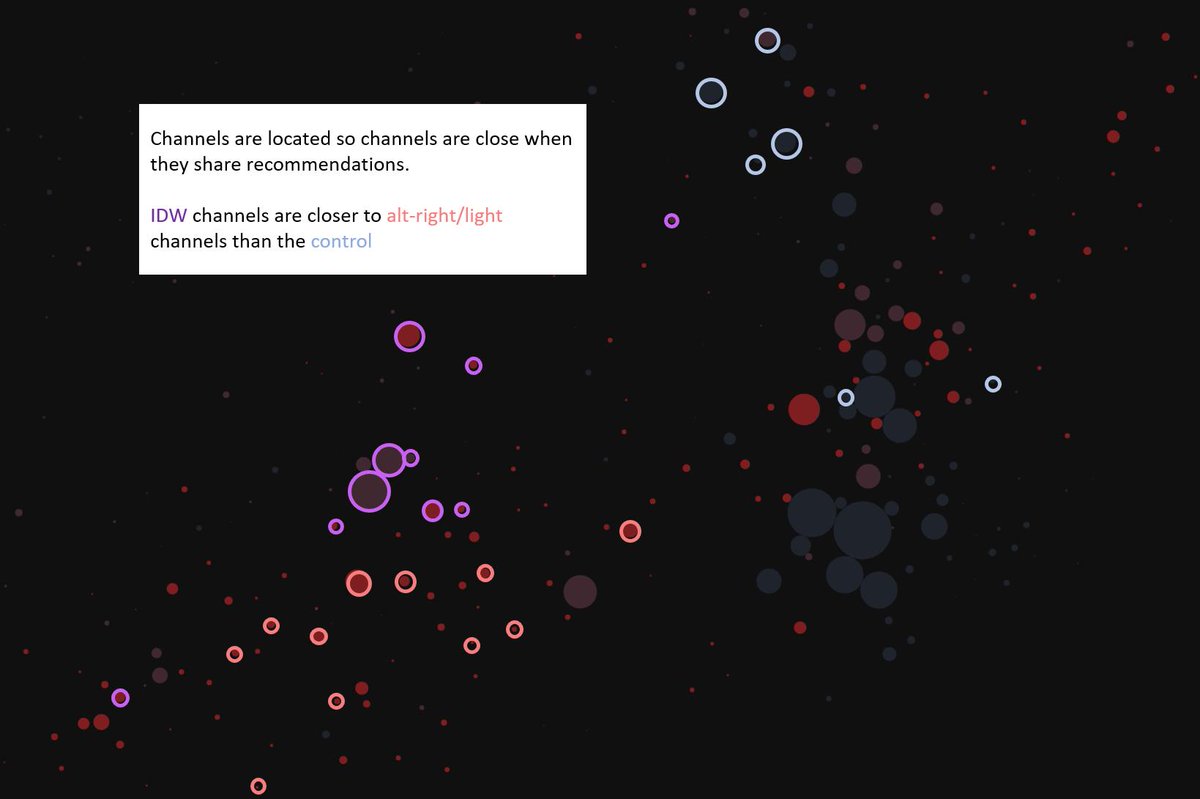

5/ In my model of an ideological movement, viewers are more likely to migrate to channels/politics close to them in the ideological/recommendation landscape.

I have highlighted the large channels that match across our datasets, showing how close the IDW, alt-right and control channels are to each other.

6/ This doesn't contradict their take. But the study doesn't look at the other obvious directions of movement that would support or refute the alt-right rabbit-hole theory. For example, these movements aren't part of their results: ...

7/

Left -> Far-left: Do mainstream left channels (e.g. Vox, Wired) “infect” people and move them to far-left YouTube?

Alt-right -> IDW: At what rate are alt-right users un-“infected” onto IDW channels? Is the movement stronger in one direction than the other?
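
Both questions come down to measuring flow in each direction between a pair of categories. A minimal sketch, assuming each user has already been reduced to a hypothetical `(earlier_category, later_category)` pair; the function names and the toy data below are invented for illustration and are not from the paper.

```python
from collections import Counter

def directional_rates(transitions):
    """Map each (source, target) pair to the share of users who started in
    `source` in the earlier period and ended in `target` in the later one."""
    transitions = list(transitions)
    pair_counts = Counter(transitions)
    origin_totals = Counter(src for src, _ in transitions)
    return {
        (src, dst): n / origin_totals[src]
        for (src, dst), n in pair_counts.items()
    }

def net_drift(rates, a, b):
    """Rate a->b minus rate b->a. Positive means a net per-viewer drift
    from a towards b; negative means the drift runs the other way."""
    return rates.get((a, b), 0.0) - rates.get((b, a), 0.0)

# Made-up toy data: one (earlier, later) category pair per user.
transitions = (
    [("IDW", "Alt-right")] * 1 + [("IDW", "IDW")] * 3
    + [("Alt-right", "IDW")] * 2 + [("Alt-right", "Alt-right")] * 2
)
rates = directional_rates(transitions)
print(rates[("IDW", "Alt-right")])           # 0.25
print(rates[("Alt-right", "IDW")])           # 0.5
print(net_drift(rates, "IDW", "Alt-right"))  # -0.25
```

The same functions cover the Left -> Far-left question with different category labels. Per-viewer rates and raw head-counts can tell different stories when the categories differ in size, so a fair comparison would report both.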

8/ Until these other movements are compared, the rabbit-hole claim is not evidenced either way.

The next interesting result is a random walk: simulate many viewers starting in each of the channel categories, have them follow recommendations at random, and see where they end up after X steps.
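
A minimal Monte Carlo sketch of that procedure at the category level. The `TRANSITIONS` table holds invented placeholder probabilities, not the paper's recommendation data, and walking between three categories rather than individual channels is a simplification; `random_walk` and `simulate` are hypothetical names.

```python
import random
from collections import Counter

# Placeholder numbers, NOT from the paper: TRANSITIONS[c][d] is the assumed
# chance that a recommendation shown on a `c` video points to a `d` video.
TRANSITIONS = {
    "Alt-right": {"Alt-right": 0.2, "IDW": 0.4, "Control": 0.4},
    "IDW": {"Alt-right": 0.05, "IDW": 0.55, "Control": 0.4},
    "Control": {"Alt-right": 0.0, "IDW": 0.1, "Control": 0.9},
}

def random_walk(start, steps, rng):
    """Follow `steps` randomly chosen recommendations from category `start`."""
    category = start
    for _ in range(steps):
        options = TRANSITIONS[category]
        category = rng.choices(list(options), weights=list(options.values()))[0]
    return category

def simulate(start, steps, walkers=10_000, seed=0):
    """Share of simulated viewers ending in each category after `steps`."""
    rng = random.Random(seed)
    ends = Counter(random_walk(start, steps, rng) for _ in range(walkers))
    return {cat: ends[cat] / walkers for cat in TRANSITIONS}

print(simulate("Alt-right", steps=10))
```

Because each step depends only on the current category, this is a Markov chain, so the same end distribution could also be computed exactly by repeatedly multiplying the start distribution by the transition matrix; simulating walkers just mirrors the "many viewers" framing.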

9/ If you start from the alt-right, almost 100% of viewers end up watching non-alt-right videos. This shows the algorithm’s influence: it leads away from the alt-right towards IDW/control, the opposite of how this study is being represented.

10/ Final thoughts. I would dispute some of the channels that have been classified as IDW (e.g. Sargon of Akkad), but I don’t think that would change the results. The use of moralistic language like “infection” is unnecessary and is evidence that their bias is leaking into this work.

11/ It's a shame, because I really like some of these methods. I'm planning to mimic some of this and fix the problems I'm complaining about.

12/ One more thought. The "missing movement" problem in this paper is glaring. Consider a hypothetical study: "Planes are problematic because they move people to New Zealand." Imagine only looking at flights into NZ and never even mentioning the flights out.
