#IDATHAINSIGHTS

- a probability has to be a value between 0 and 1

- the probabilities of all possible outcomes must sum to 1

en.m.wikipedia.org/wiki/Probabili…

en.wikipedia.org/wiki/Softmax_f…
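A minimal sketch of how softmax turns arbitrary raw scores into values that meet the two conditions listed earlier (each value between 0 and 1, all values summing to 1). The function name, the max-subtraction trick, and the three example scores are illustrative assumptions, not taken from the thread.

```python
import math


def softmax(logits):
    """Map arbitrary real-valued scores to values in (0, 1) that sum to 1."""
    # Subtracting the max avoids overflow in exp() and does not change the result.
    shift = max(logits)
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw scores for three classes (illustrative values only).
scores = [2.0, 1.0, -1.0]
probs = softmax(scores)

print(probs)
print(sum(probs))
```

Meeting both conditions only makes the outputs look like probabilities; whether they match observed frequencies is a separate question.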

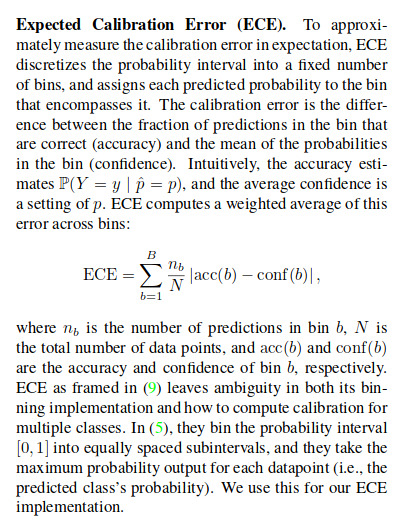

arxiv.org/pdf/1706.04599…

openaccess.thecvf.com/content_CVPRW_…

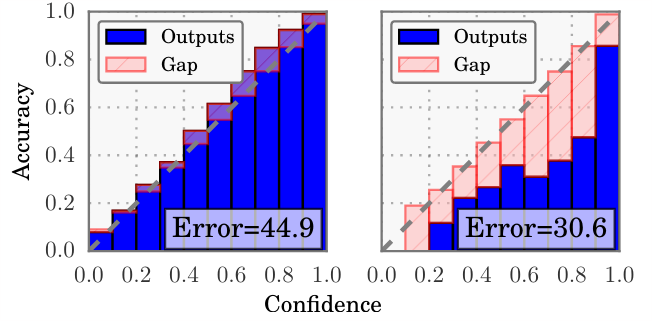

Discrimination: how well the scores separate the classes.

Calibration: whether those scores can be interpreted probabilistically.
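The two definitions can be told apart on a toy example. The sketch below is an illustration under stated assumptions: the data are made up, AUC stands in for discrimination, and a binned expected calibration error (ECE) stands in for calibration; neither metric choice comes from the thread. Cubing the scores keeps their ranking, so discrimination is unchanged, while the scores stop matching the observed positive rates.

```python
def auc(labels, scores):
    """Fraction of (positive, negative) pairs ranked correctly; ties count half."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def expected_calibration_error(labels, scores, n_bins=5):
    """Weighted average gap between mean score and positive rate in each bin."""
    bins = [[] for _ in range(n_bins)]
    for y, s in zip(labels, scores):
        idx = min(int(s * n_bins), n_bins - 1)  # a score of 1.0 goes in the last bin
        bins[idx].append((y, s))
    ece = 0.0
    for b in bins:
        if not b:
            continue
        mean_score = sum(s for _, s in b) / len(b)
        pos_rate = sum(y for y, _ in b) / len(b)
        ece += len(b) / len(labels) * abs(mean_score - pos_rate)
    return ece


# Made-up data: (score, number of positives, number of negatives).
# Each score equals the positive rate of its group, so it is calibrated by construction.
groups = [(0.2, 2, 8), (0.5, 5, 5), (0.8, 8, 2)]
labels, calibrated = [], []
for score, n_pos, n_neg in groups:
    labels += [1] * n_pos + [0] * n_neg
    calibrated += [score] * (n_pos + n_neg)

# A strictly increasing distortion: same ranking, different values.
distorted = [s ** 3 for s in calibrated]

auc_cal, auc_dist = auc(labels, calibrated), auc(labels, distorted)
ece_cal = expected_calibration_error(labels, calibrated)
ece_dist = expected_calibration_error(labels, distorted)

print("AUC:", auc_cal, auc_dist)
print("ECE:", ece_cal, ece_dist)
```

Both score sets separate the classes equally well, but only the first can be read as "about 80% of the examples scored 0.8 are positive".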

tv.vera.com.uy/video/55289

References: 📃

drive.google.com/file/d/1j7lykM…

scikit-learn.org/stable/modules…

cs231n.stanford.edu