New result published by @SpringerNature has proven mathematically that homeopathy works.

I had to tweet about this paper.

Homeopathy is often thought to be "natural medicine." In truth, it's not medicine at all. Homeopathy is actually a remarkable delusion: the idea is that you take a substance and dilute it in water until there are no molecules of the substance left.

en.wikipedia.org/wiki/Homeopathy

The "memory" of the substance is supposed to heal you.

"Water has memory! And whilst its memory of a long lost drop of onion juice is infinite, it somehow forgets all the poo it's had in it..." -- @timminchin in his beat poem, Storm

So, people who take "homeopathic medicines" are taking pills with basically zero chance of containing an active ingredient. In fact, the more diluted, the MORE effective homeopaths think the treatment is.

Here's a daredevil drinking homeopathic bleach.

They even mark the packages with things like "30X potency" to make the more diluted stuff sound better. The difference between 30X and 10X is that 30X has been diluted EVEN MORE.

en.wikipedia.org/wiki/Homeopath…
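
To put numbers on those labels: on the X (decimal) scale each step is a 1:10 dilution, so nX means a total dilution of 10^n. Below is a back-of-envelope sketch, assuming (hypothetically, and very generously) that a dose traces back to a full mole of the original substance. `expected_molecules` and `first_potency_below_one` are illustrative names, not anything from the paper.

```python
# Avogadro's number: molecules per mole.
AVOGADRO = 6.02214076e23


def expected_molecules(x_potency: int, moles_start: float = 1.0) -> float:
    """Expected molecules left after x_potency serial 1:10 dilutions.

    Assumes (hypothetically) the dose contains the fraction 10**-x_potency
    of an original sample of moles_start moles.
    """
    return moles_start * AVOGADRO / 10 ** x_potency


def first_potency_below_one(moles_start: float = 1.0) -> int:
    """Smallest X potency at which less than one molecule is expected."""
    x = 0
    while expected_molecules(x, moles_start) >= 1.0:
        x += 1
    return x


if __name__ == "__main__":
    for x in (10, 30):
        print(f"{x}X: {expected_molecules(x):.3g} molecules")
    # prints "10X: 6.02e+13 molecules" then "30X: 6.02e-07 molecules"
    print(first_potency_below_one())
```

Under that assumption, anything past 24X is expected to contain less than one molecule of the original substance, and a 30X dose about six ten-millionths of one.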

Insane. Here's a video of James Randi explaining homeopathy...

and him "overdosing" on homeopathic sleeping pills.

(If you don't know Randi, check out the great documentary about him, An Honest Liar)

So it's no big surprise that studies, and then reviews of those studies, find nothing. There are so many reviews that you need a review of reviews. Homeopathy does no better than a placebo, because it *is* just a placebo.

ncbi.nlm.nih.gov/pmc/articles/P…

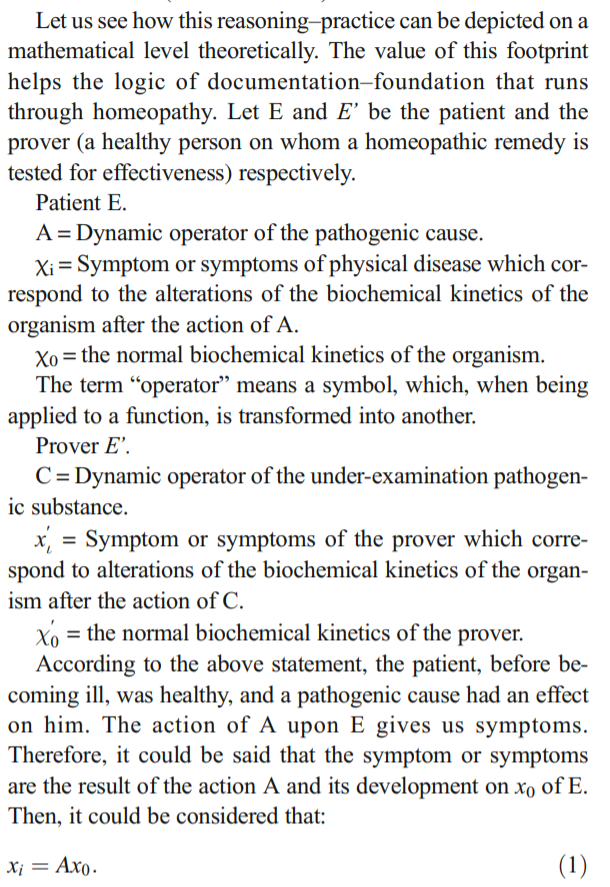

But that doesn't stop homeopaths from writing papers in the middle of the global COVID pandemic, not only spreading misinformation but claiming to have a MATHEMATICAL PROOF of efficacy.

Oops, wait, don't read that. Those Chis appear to be typos: there are no Chis later in the paper. Nor are there subscript iotas. That probably should be subscript 1? And the Chis should be x's? What kind of lazy, incompetent people are typesetting this paper, the reviewers?

Let's pick an easier notation. Here is what equation (1) means. There's some function A which changes a person's internal biochemistry to have a disease and its symptoms. This is what happens to the patient:

DiseaseSymptoms = A(NormalBiochemistry)

Ok, equation (2) says that you can get the same symptoms without the disease by giving some substance C to a healthy person called a "prover":

Symptoms = C(ProversNormalBiochemistry)

But the prover and a healthy person are kinda similar, since I guess they're both people. And having the disease and having the symptoms are similar, so we assume there's some function B so that,

Symptoms = B(DiseaseSymptoms)

ProversNormalBiochemistry = B(NormalBiochemistry)

(At this point you might be thinking: well what is B exactly? It's a function from internal biochemistry to internal biochemistry, or a function from disease to symptoms or something else? Who knows.)

A "Therefore" comes next, followed by a repeat of (2), but that doesn't seem to be what the "therefore" refers to; it's probably the next line,

Symptoms = B(A(NormalBiochemistry))

and with a little rearranging and using the tweet above,

Symptoms = B(A(B^{-1}(ProversNormalBiochemistry)))

from which, comparing with the equation for C up there (which also relates Symptoms and ProversNormalBiochemistry), we conclude that C = B A B^{-1}! So... the authors declare that

It seems to me like they forgot these were functions and are suddenly thinking they are... matrices? Were they matrices all along? Do we have to worry whether they're invertible? Whether "biochemical kinetics" is linear? Could someone who uses the term "affine" ever actually be wrong?
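
If we do read A, B, and C as matrices, those questions get concrete answers. Here is a minimal sketch using exact fractions and arbitrarily chosen 2×2 matrices (hypothetical values, not from the paper). B A B^{-1} exists only when det(B) ≠ 0, and when it does, C = B A B^{-1} satisfies C B = B A, the matrix version of C(B(x)) = B(A(x)). The whole reading presumes the maps are linear in the first place.

```python
from fractions import Fraction
from typing import Tuple

# A 2x2 matrix stored row-major as ((a, b), (c, d)).
Matrix = Tuple[Tuple[Fraction, Fraction], Tuple[Fraction, Fraction]]


def mat(a, b, c, d) -> Matrix:
    """Build a 2x2 matrix with exact rational entries."""
    return ((Fraction(a), Fraction(b)), (Fraction(c), Fraction(d)))


def mul(m: Matrix, n: Matrix) -> Matrix:
    """Ordinary matrix product m times n."""
    return tuple(
        tuple(sum(m[i][k] * n[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )


def det(m: Matrix) -> Fraction:
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


def inverse(m: Matrix) -> Matrix:
    """Inverse of a 2x2 matrix; fails when the determinant is zero."""
    d = det(m)
    if d == 0:
        raise ValueError("matrix is singular, so its inverse does not exist")
    (a, b), (c, e) = m
    return ((e / d, -b / d), (-c / d, a / d))


def conjugate(b: Matrix, a: Matrix) -> Matrix:
    """C = B A B^{-1}."""
    return mul(mul(b, a), inverse(b))


if __name__ == "__main__":
    A = mat(1, 2, 0, 3)  # hypothetical "disease" map
    B = mat(2, 1, 1, 1)  # hypothetical patient-to-prover map, det(B) = 1
    C = conjugate(B, A)
    print(mul(C, B) == mul(B, A))  # True: C B = B A
    try:
        conjugate(mat(1, 2, 2, 4), A)  # det = 0, so no B^{-1}
    except ValueError as err:
        print("singular B:", err)
```

Note that C always has the same determinant and trace as A, so conjugation just re-expresses A in B's coordinates; it adds no information about any substance.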

So what have we proven? Sometimes a function can be written using another function and its inverse? An inverse is the same as an "opposite"? And an "opposite" substance is the same as its absence? COVID treatments need homeopathy?
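
To see how little the derivation constrains C, here is a minimal sketch with toy numeric functions. `B`, `B_inv`, and the sample choices of A are hypothetical stand-ins (plain arithmetic on a single number, nothing biochemical), assuming only that B is invertible. Defining C as B∘A∘B^{-1} makes Symptoms = C(ProversNormalBiochemistry) hold for whatever A you pick, so the "proof" shows only that such a C can be written down, not which substance it is or whether it does anything.

```python
from typing import Callable

Fn = Callable[[float], float]


def compose(*fs: Fn) -> Fn:
    """compose(f, g, h) returns the function x -> f(g(h(x)))."""
    def composed(x: float) -> float:
        for f in reversed(fs):
            x = f(x)
        return x
    return composed


# Hypothetical toy stand-ins on a single number; nothing biochemical.
def B(x: float) -> float:
    return 2.0 * x  # "patient -> prover" map (invertible)


def B_inv(x: float) -> float:
    return x / 2.0  # its inverse


def check_derivation(A: Fn, normal: float) -> bool:
    """Build C = B o A o B^{-1} and test Symptoms == C(ProversNormalBiochemistry)."""
    C = compose(B, A, B_inv)
    symptoms = B(A(normal))     # Symptoms = B(A(NormalBiochemistry))
    provers_normal = B(normal)  # ProversNormalBiochemistry = B(NormalBiochemistry)
    return C(provers_normal) == symptoms


if __name__ == "__main__":
    # Any "disease" A at all passes the check.
    for A in (lambda x: x + 3.0, lambda x: x ** 2, lambda x: 1.0 - x):
        print(check_derivation(A, 5.0))  # True each time
```

Doubling and halving are exact in binary floating point, so the equality check here is exact rather than approximate.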

This garbage reminds me a lot of those proofs that people send around trying to claim the prize for famous mathematical problems. They have the same flavor: huge claims, numbered equations, and foundational incoherence.
