journals.sagepub.com/doi/abs/10.117…

1/

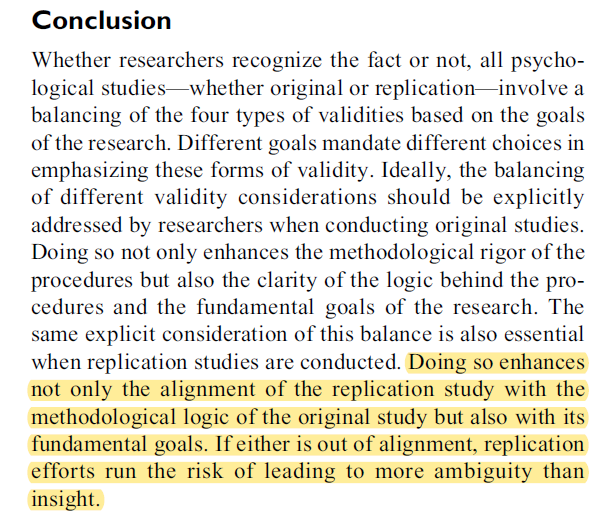

validity is only 1 of the 4 categories of explanations for non-replication." Thus, let's move on.

5/

9/

...aren't we doing that? Isn't that what @OSFramework and @improvingpsych are doing? It's not all just replications...

23/

I appreciate and respect the authors' strong stance here, and criticism of current directions in our field is great.

I agree with their primary thesis that more attention can be paid to other forms of validity.

26/

They make implicit & explicit statements that original work is more valid than replications. I disagree. That view overlooks that older work is lacking in psychometrics, power, transparency, etc.

27/

Thanks for reading. This was not as brief as I originally planned. Interested to hear others' thoughts on this paper. Cheers.

/END