When continuous-valued probability distributions are taught, typically the density function (pdf) is introduced first, with some handwaving, and then the cdf is defined as an integral of the pdf.

This is backwards! A pdf ONLY EXISTS as the DERIVATIVE of the cdf.

I'm not saying that the Radon-Nikodym theorem or Lebesgue measure should be explicitly introduced before we can talk about Gaussians, but I think people comfortable with calculus would rather see d/dx P(X<x) than the usual handwaving about densities.
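To make the d/dx P(X<x) view concrete, here is a minimal sketch for the standard Gaussian. It assumes the textbook cdf written with the error function, and the helper names (`normal_cdf`, `normal_pdf`, `derivative`) are made up for illustration. It differentiates the cdf numerically and compares the result with the usual closed-form density.

```python
import math

def normal_cdf(x):
    """P(X < x) for a standard Gaussian, written with the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def normal_pdf(x):
    """The usual closed-form density of a standard Gaussian."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)

def derivative(f, x, h=1e-5):
    """Central-difference approximation to f'(x); h is an illustrative step size."""
    return (f(x + h) - f(x - h)) / (2.0 * h)

# The numerical derivative of the cdf should track the density closely.
for x in (-1.0, 0.0, 0.5, 2.0):
    print(f"x={x:+.1f}  d/dx cdf={derivative(normal_cdf, x):.6f}  pdf={normal_pdf(x):.6f}")
```

The two columns should agree to within the error of the finite difference, which is the whole point: the density is just the slope of the cdf.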

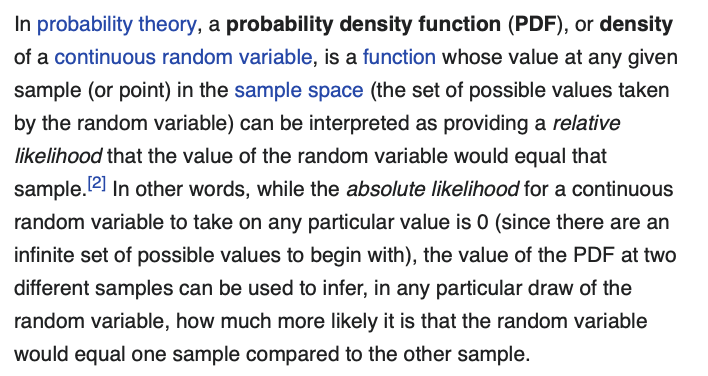

A prime example of the kind of handwaving I'm whinging about, from Wikipedia. What is this nonsense,

Very tempted to add a "[by whom?]" tag after "can be interpreted".
