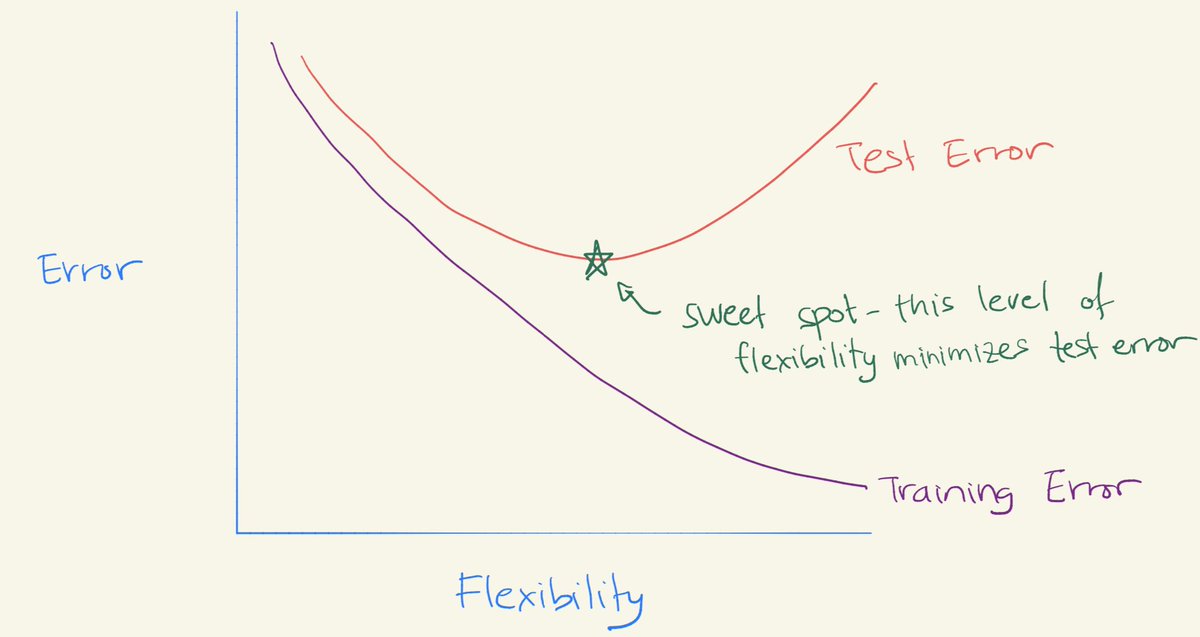

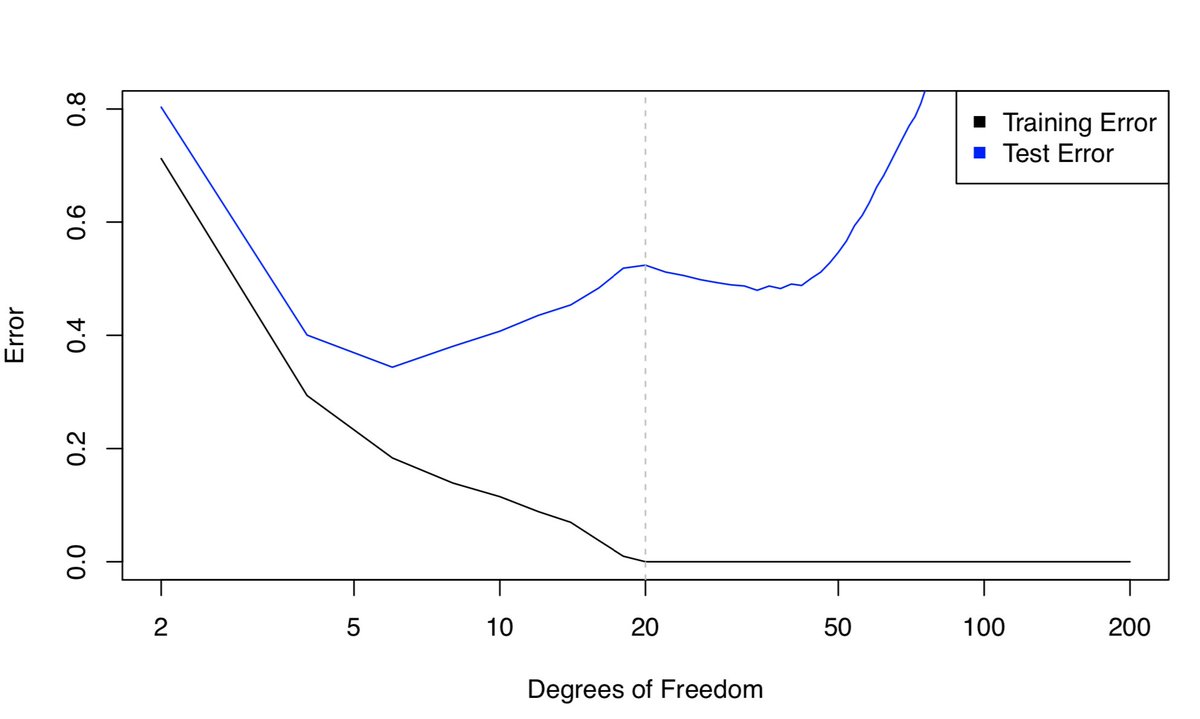

Expected prediction error = irreducible error + bias² + variance

As flexibility increases, (squared) bias decreases and variance increases. The "sweet spot" requires trading off bias and variance, i.e. a model with an intermediate level of flexibility.
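To make the decomposition concrete, here's a minimal Monte Carlo sketch. It assumes a hypothetical true function f(x) = sin(2πx), Gaussian noise with σ = 0.3, and polynomial least squares fits of degree 1 (inflexible) and degree 5 (more flexible). It estimates squared bias and variance of the fit at a single test point, x0 = 0.25.

```python
import numpy as np

def f_true(x):
    # Hypothetical "true" regression function, chosen only for illustration.
    return np.sin(2 * np.pi * x)

def bias_variance_at(x0, degree, n=30, sigma=0.3, n_reps=2000, seed=0):
    """Monte Carlo estimate of squared bias and variance, at the point x0,
    of a polynomial least squares fit of the given degree."""
    rng = np.random.default_rng(seed)
    x = np.linspace(0, 1, n)                              # fixed design points
    preds = np.empty(n_reps)
    for r in range(n_reps):
        y = f_true(x) + sigma * rng.standard_normal(n)    # fresh training set
        coefs = np.polyfit(x, y, degree)                  # least squares fit
        preds[r] = np.polyval(coefs, x0)
    bias2 = (preds.mean() - f_true(x0)) ** 2
    var = preds.var()                                     # ddof=0, so mse_f == bias2 + var exactly
    mse_f = np.mean((preds - f_true(x0)) ** 2)            # E[(fhat(x0) - f(x0))^2]
    return bias2, var, mse_f, sigma ** 2                  # last term: irreducible error

for degree in (1, 5):
    bias2, var, mse_f, irreducible = bias_variance_at(0.25, degree)
    print(f"degree={degree}: bias^2={bias2:.4f}  var={var:.4f}  "
          f"expected prediction error ~ {irreducible + bias2 + var:.4f}")
```

The more flexible degree-5 fit trades a big drop in bias for a modest rise in variance; the irreducible error σ² is the same for both.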

2/

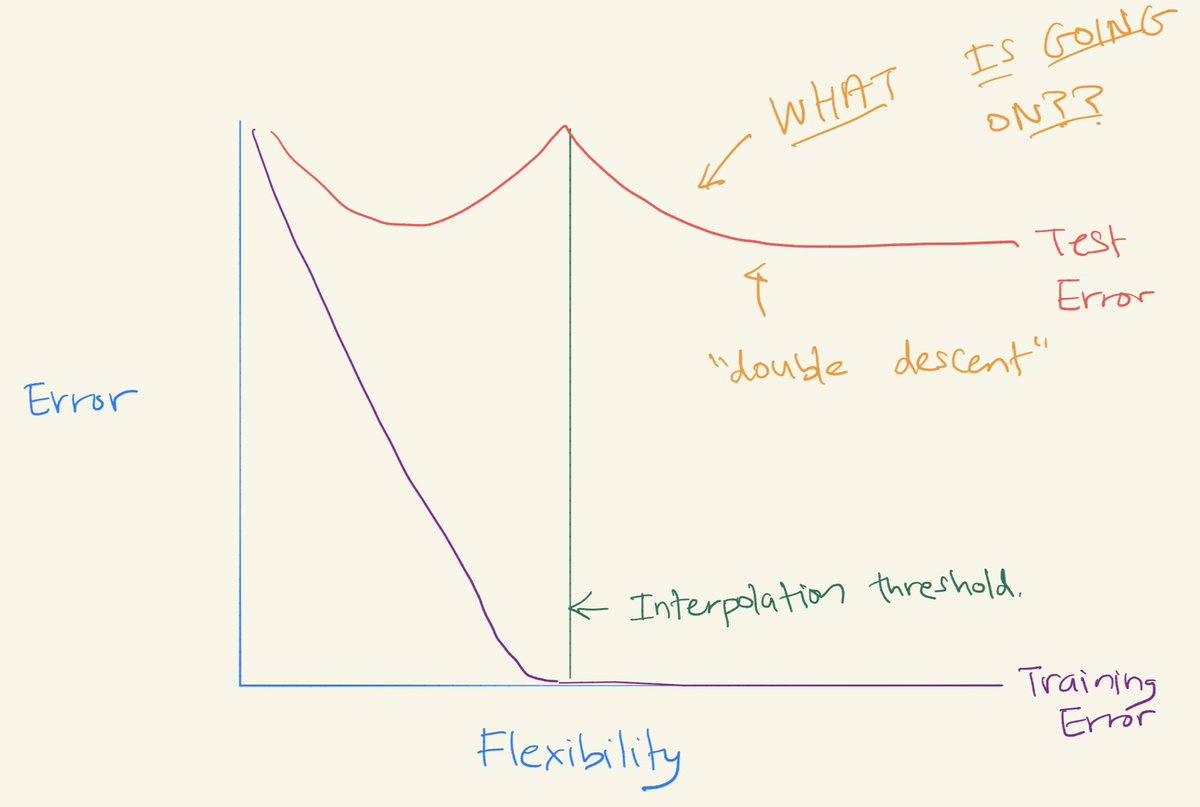

What the heck is going on? Does the bias-variance trade-off NOT HOLD? Are the textbooks all wrong?!?!?!

Or is deep learning *magic*?

4/

I promise, the bias-variance trade-off is OK!

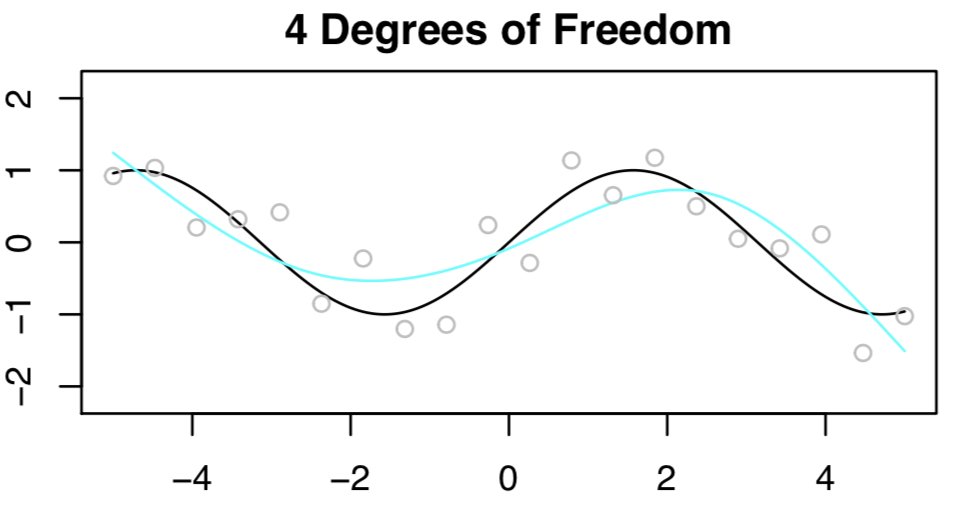

To understand double descent, let's check out a simple example that has nothing to do with deep learning: natural cubic splines.

5/

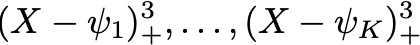

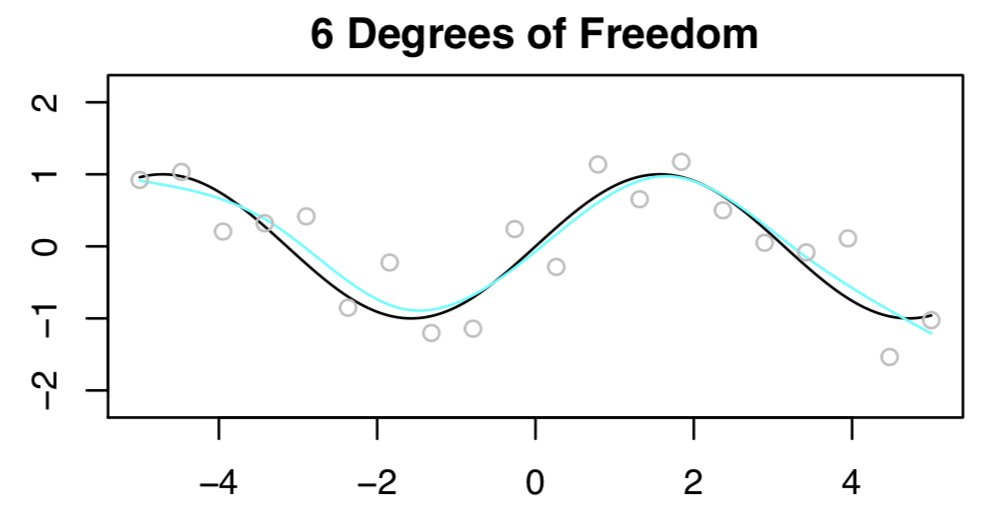

To fit a spline, we construct a set of basis functions and then regress the response Y onto those basis functions via least squares.
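Here's a minimal sketch of that recipe for a natural cubic spline. It uses one standard truncated-power construction (as in *The Elements of Statistical Learning*, Ch. 5); the simulated data, n = 20, and the hypothetical choice of 8 knots at quantiles of x are assumptions for illustration, not the thread's exact setup.

```python
import numpy as np

def natural_cubic_spline_basis(x, knots):
    """Truncated-power basis for a natural cubic spline with K knots.
    Returns an (n, K) matrix: columns 1, x, and K-2 cubic terms that are
    constrained to be linear beyond the boundary knots."""
    x = np.asarray(x, dtype=float)
    knots = np.sort(np.asarray(knots, dtype=float))
    K = len(knots)

    def d(k):
        # d_k(x) = [(x - xi_k)_+^3 - (x - xi_K)_+^3] / (xi_K - xi_k)
        num = np.maximum(x - knots[k], 0) ** 3 - np.maximum(x - knots[-1], 0) ** 3
        return num / (knots[-1] - knots[k])

    cols = [np.ones_like(x), x]
    for k in range(K - 2):
        cols.append(d(k) - d(K - 2))   # N_{k+2}(x) = d_k(x) - d_{K-1}(x)
    return np.column_stack(cols)

rng = np.random.default_rng(1)
n = 20
x = np.sort(rng.uniform(0, 1, n))
y = np.sin(2 * np.pi * x) + 0.3 * rng.standard_normal(n)   # simulated data (assumption)

knots = np.quantile(x, np.linspace(0, 1, 8))     # hypothetical: 8 knots -> 8 DF
B = natural_cubic_spline_basis(x, knots)         # n x 8 basis matrix
beta, *_ = np.linalg.lstsq(B, y, rcond=None)     # least squares fit of Y on the basis
fitted = B @ beta
```

With K knots this basis has K columns, so "degrees of freedom" and "number of least squares coefficients p" are the same thing here.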

6/

11/

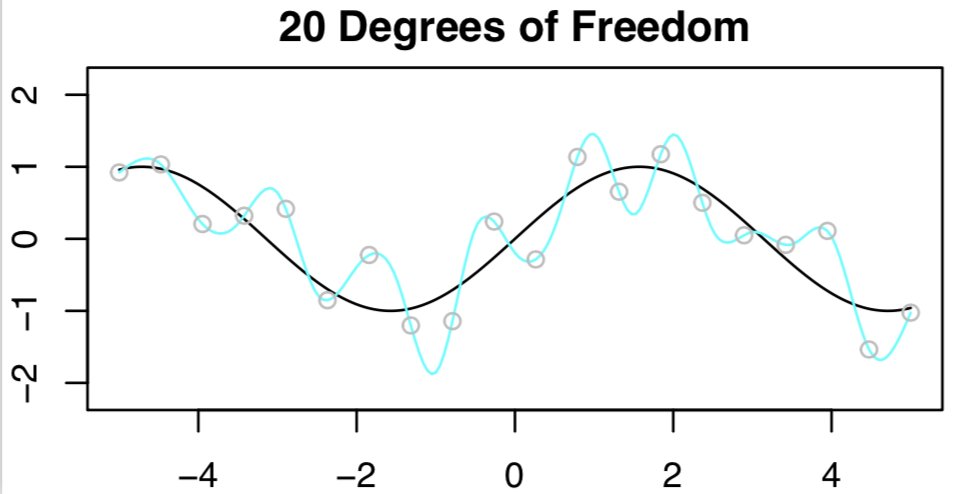

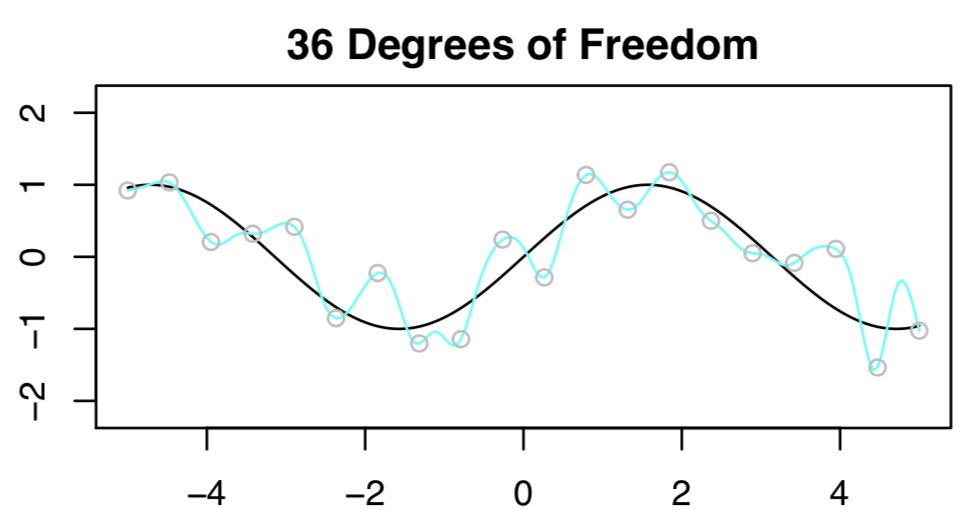

You heard me right: least squares with n=20 and p=36. WHAT THE WHAT?!!
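Why is p > n so strange for least squares? A minimal NumPy sketch: it uses a random Gaussian design matrix as a hypothetical stand-in for the 36-column spline basis (an assumption; the thread's basis isn't reproduced here). With 20 equations and 36 unknowns, the null space of X is 16-dimensional, and adding any null-space vector to an exact solution gives another exact solution.

```python
import numpy as np

rng = np.random.default_rng(0)
n, p = 20, 36
X = rng.standard_normal((n, p))   # random stand-in for a 36-column basis (assumption)
y = rng.standard_normal(n)

# One exact solution of X b = y (lstsq happens to return the minimum norm one).
b0, *_ = np.linalg.lstsq(X, y, rcond=None)

# Rows of Vt beyond the rank span the null space of X, i.e. X @ v = 0.
_, s, Vt = np.linalg.svd(X)       # full_matrices=True: Vt is p x p
rank = int(np.sum(s > 1e-10 * s[0]))
null_basis = Vt[rank:].T          # p x (p - rank)

# Adding ANY combination of null-space vectors leaves the training fit unchanged.
z = rng.standard_normal(null_basis.shape[1])
b_other = b0 + 5.0 * null_basis @ z
```

Both `b0` and `b_other` have zero training error, and so does every other point on that 16-dimensional affine subspace.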

12/

With p > n, there are infinitely many least squares solutions. To select among them, I choose the "minimum norm" fit: the one with the smallest sum of squared coefficients. [Easy to compute using everybody's favorite matrix decomposition, the SVD.]
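A minimal sketch of the SVD route, assuming a random 20 x 36 stand-in design matrix (hypothetical, not the thread's spline basis). Writing X = U diag(s) Vᵀ, the minimum norm least squares solution is V diag(1/s) Uᵀ y, keeping only the nonzero singular values.

```python
import numpy as np

def min_norm_least_squares(X, y, tol=1e-10):
    """Minimum norm least squares solution via the thin SVD:
    beta = V diag(1/s) U^T y, dropping singular values below tol * s_max."""
    U, s, Vt = np.linalg.svd(X, full_matrices=False)
    keep = s > tol * s[0]
    return Vt[keep].T @ ((U[:, keep].T @ y) / s[keep])

rng = np.random.default_rng(0)
X = rng.standard_normal((20, 36))   # n = 20, p = 36 (random stand-in, assumption)
y = rng.standard_normal(20)
beta_mn = min_norm_least_squares(X, y)
```

This is the same answer `np.linalg.pinv(X) @ y` gives, since the pseudoinverse is itself computed from the SVD.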

13/

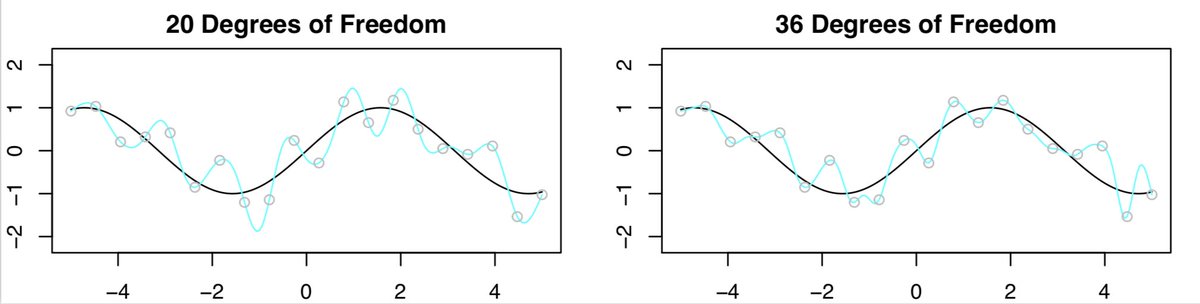

The key point: with 20 DF, n = p, so there's exactly ONE least squares fit with zero training error. And that fit happens to have oodles of wiggles...
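When n = p and the basis matrix has full rank, the null space is just {0}, so there is nothing to choose between. A minimal sketch with a hypothetical random square stand-in for the 20-DF basis (an assumption):

```python
import numpy as np

rng = np.random.default_rng(0)
n = p = 20
X = rng.standard_normal((n, p))   # square stand-in for a 20-DF basis (assumption)
y = rng.standard_normal(n)

rank = np.linalg.matrix_rank(X)
null_dim = p - rank               # 0 => no freedom left: the interpolant is unique
beta_unique = np.linalg.solve(X, y)
```

Here the "minimum norm" fit and the only fit coincide; whatever wiggles it has, we're stuck with them.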

17/

When p > n, there are zillions of least squares fits with zero training error. The MINIMUM NORM fit is the "least wiggly" of them, and it's even less wiggly than the unique fit when p = n!!!
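Here's a minimal sketch of why "even less wiggly" is no accident, using the coefficient norm as a stand-in for wiggliness and a hypothetical *nested* random-feature setup (an assumption: the first 20 of the 36 columns form the p = n problem; real spline bases with different knots aren't nested like this). Padding the p = n solution with zeros gives one particular interpolant of the 36-column problem, so the minimum norm interpolant can only be smaller.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 20
X36 = rng.standard_normal((n, 36))   # random stand-in basis (assumption)
y = rng.standard_normal(n)
X20 = X36[:, :20]                    # nested p = n problem (assumption)

beta_20 = np.linalg.solve(X20, y)    # the unique interpolant when p = n
beta_36 = np.linalg.pinv(X36) @ y    # the minimum norm interpolant when p > n

# beta_20 padded with zeros is ALSO an interpolant for the 36-column problem...
beta_padded = np.concatenate([beta_20, np.zeros(16)])
# ...so the minimum norm interpolant's norm can't exceed it.
print(np.linalg.norm(beta_20), np.linalg.norm(beta_36))
```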

18/

Crazy, huh?

19/

Well then we wouldn't have interpolated the training set, we wouldn't have seen double descent, AND we would have gotten better test error (for the right value of the tuning parameter!)
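A minimal sketch of that regularized alternative, assuming a ridge penalty (an assumption; the exact method isn't shown in this part of the thread) and a random 20 x 36 stand-in design matrix. For any λ > 0 the fit no longer interpolates, and as λ → 0 the ridge solution converges to the minimum norm least squares fit.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Ridge regression via the n x n form beta = X^T (X X^T + lam I)^{-1} y,
    which is convenient when p > n."""
    n = X.shape[0]
    return X.T @ np.linalg.solve(X @ X.T + lam * np.eye(n), y)

rng = np.random.default_rng(0)
X = rng.standard_normal((20, 36))   # random stand-in basis (assumption)
y = rng.standard_normal(20)

for lam in (1.0, 1e-3, 1e-8):
    beta = ridge_fit(X, y, lam)
    rss = np.sum((y - X @ beta) ** 2)
    print(f"lambda={lam:g}: training RSS={rss:.2e}, ||beta||={np.linalg.norm(beta):.3f}")
```

Choosing λ (e.g. by cross-validation) is what "the right value of the tuning parameter" refers to; λ → 0 recovers the interpolating minimum norm fit.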

20/

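When we use (stochastic) gradient descent to fit a neural net, we are implicitly picking out (something like) the minimum norm solution!! For least squares with p > n this is exact: gradient descent started at zero, with a small enough step size, converges to the minimum norm fit.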
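A minimal sketch of the exact linear case, assuming a random 20 x 36 design matrix (hypothetical). Every gradient Xᵀ(y − Xb) lies in the row space of X, so starting from b = 0 the iterates never pick up a null-space component and converge to the minimum norm interpolant. (SGD on the same problem also stays in the row space, since each stochastic gradient is a multiple of a row of X.) This illustrates only the linear least squares setting, not neural nets.

```python
import numpy as np

def gradient_descent_ls(X, y, n_iter=20000):
    """Full-batch gradient descent on 0.5 * ||y - X b||^2, started at b = 0."""
    step = 1.0 / np.linalg.norm(X, 2) ** 2   # 1 / (largest singular value)^2
    b = np.zeros(X.shape[1])                 # zero init: starts in the row space
    for _ in range(n_iter):
        b += step * X.T @ (y - X @ b)        # step along the negative gradient
    return b

rng = np.random.default_rng(0)
X = rng.standard_normal((20, 36))   # random stand-in design (assumption)
y = rng.standard_normal(20)
b_gd = gradient_descent_ls(X, y)
```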

So the spline example is a pretty good analogy for what is happening when we see double descent for neural nets.

21/

✅ double descent is a real thing that happens

✅ it is not magic 🚫

✅ it is understandable through the lens of stat ML and the bias-variance trade-off.

Actually, the bias-variance trade-off helps us understand *why* double descent happens!

No magic ... just statistics

22/

Thanks to my co-authors @robtibshirani, @HastieTrevor, and Gareth James for discussions leading to some of the ideas in this thread.

24/24