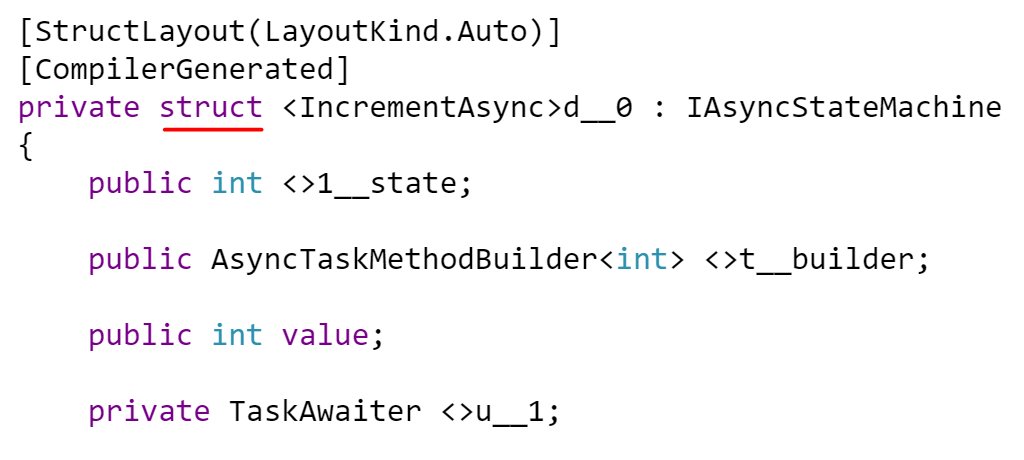

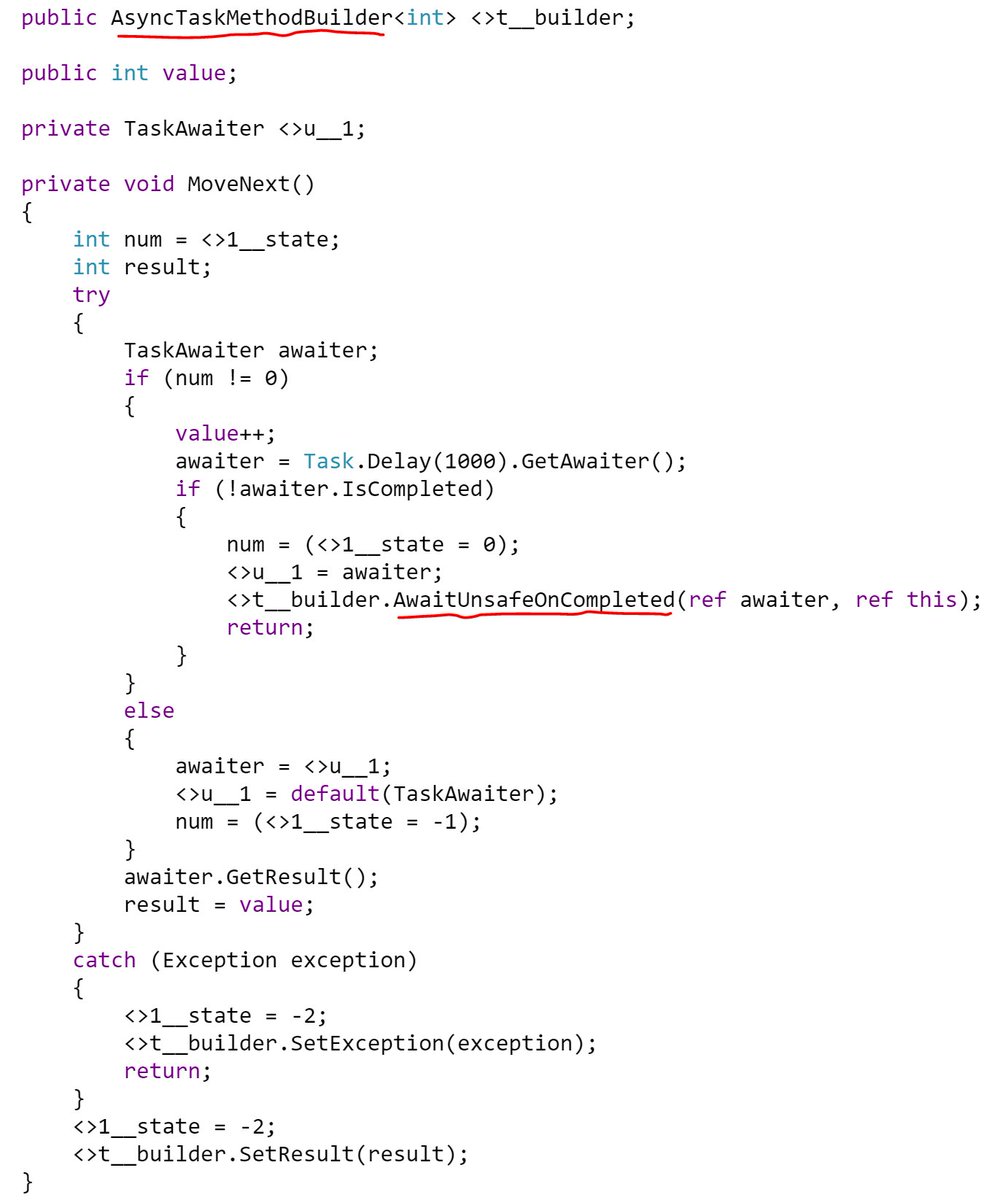

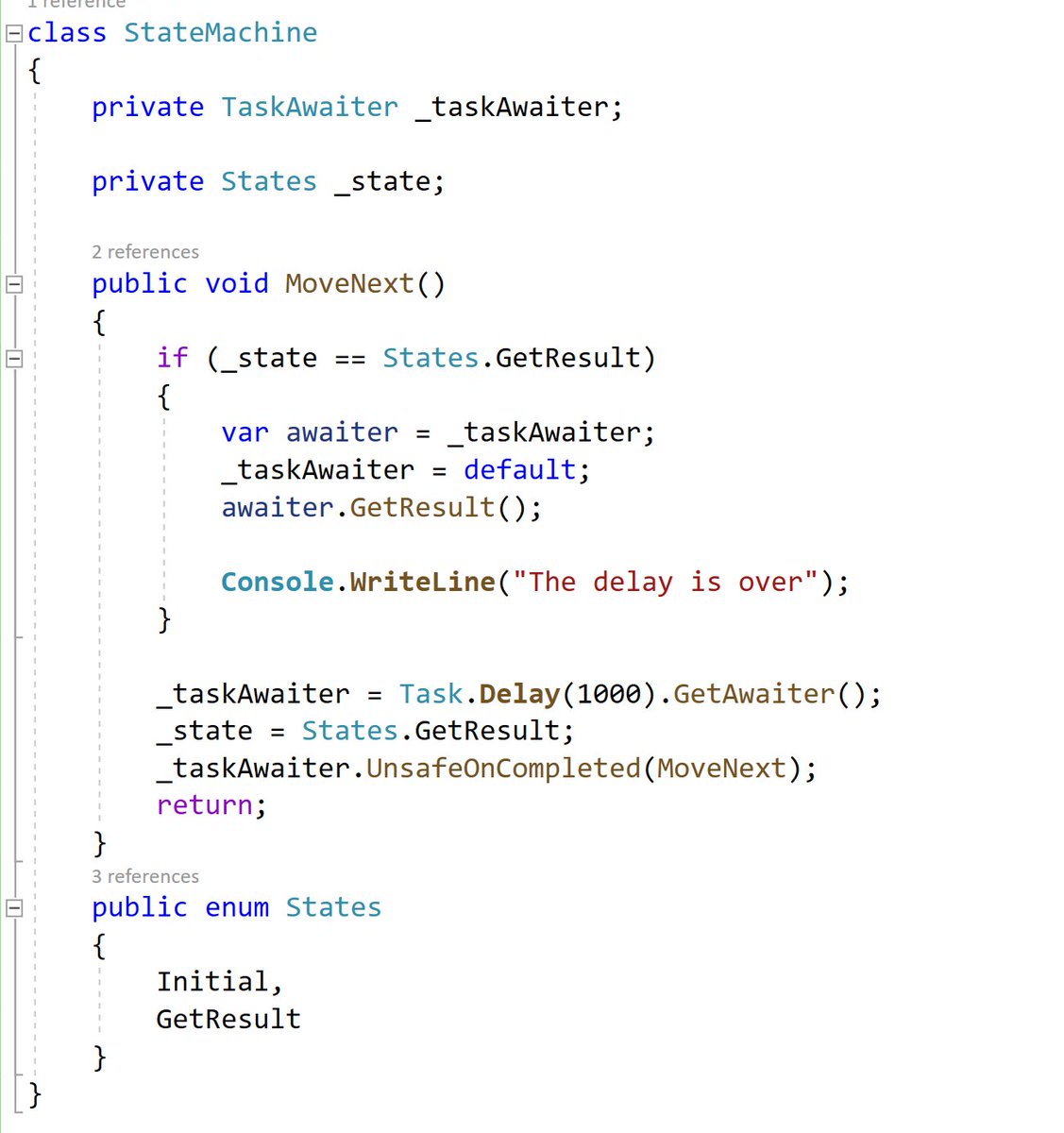

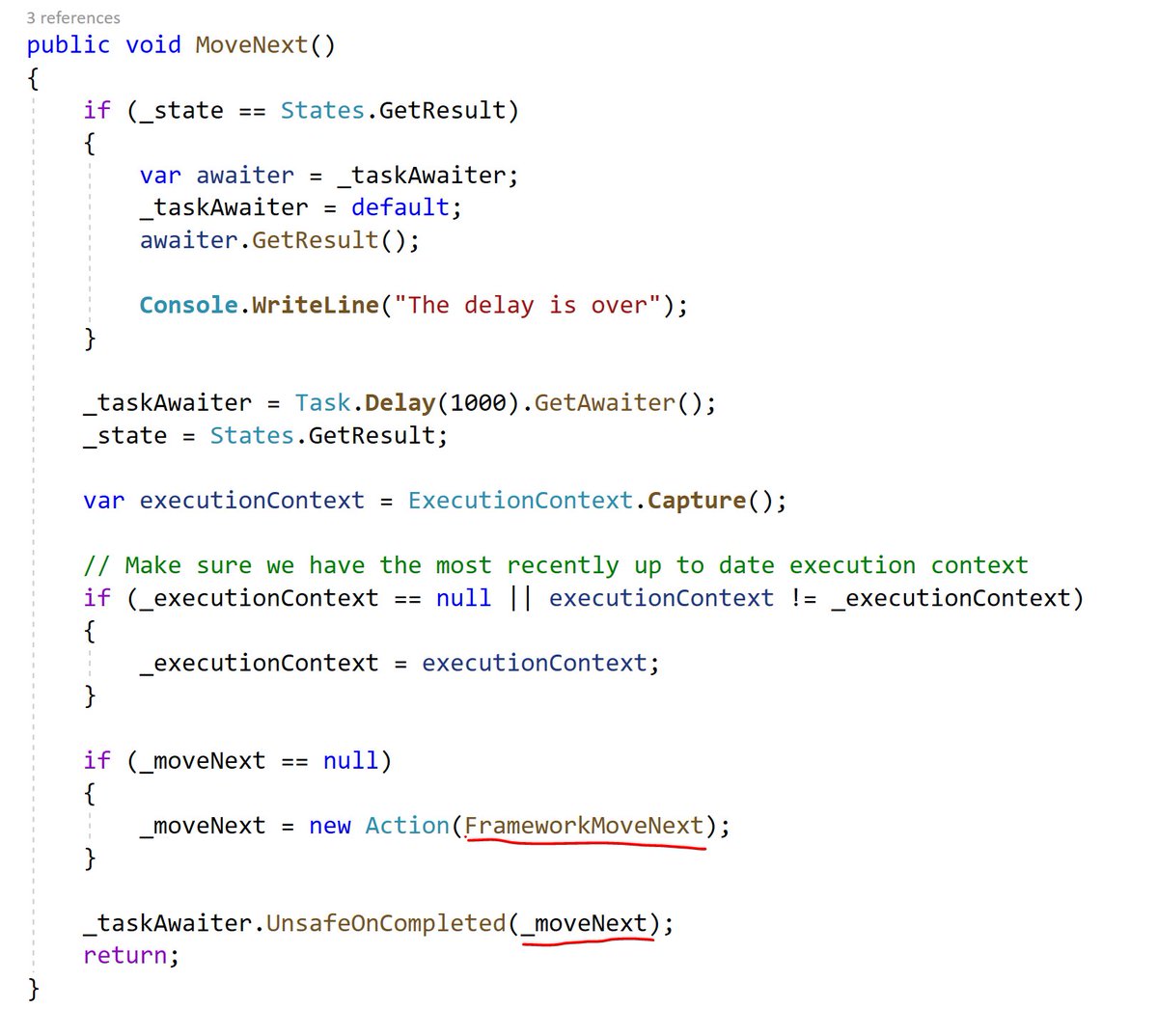

- Moving the state machine from the stack to the heap (boxing) the first time the method suspends at an incomplete await
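
The boxing step is easier to see in code. The sketch below is a simplified, hand-written approximation of what the C# compiler generates for a small async method. It is not the compiler's exact output, and the names `AddAfterDelayStateMachine` and `AddAfterDelayAsync` are hypothetical. The state machine is a struct that starts on the caller's stack. Only when `MoveNext` reaches an await that has not completed does `AsyncTaskMethodBuilder<int>.AwaitUnsafeOnCompleted` copy it to the heap.

```csharp
using System;
using System.Runtime.CompilerServices;
using System.Threading.Tasks;

// Simplified, hand-written equivalent of what the compiler emits for:
//   static async Task<int> AddAfterDelayAsync(int x) { await Task.Delay(10); return x + 1; }
// (Names are hypothetical; real generated code uses unspeakable identifiers.)
public struct AddAfterDelayStateMachine : IAsyncStateMachine
{
    public int State;                          // -1: running, 0: suspended at the await, -2: done
    public AsyncTaskMethodBuilder<int> Builder;
    public int X;                              // the method's parameter, hoisted into a field
    private TaskAwaiter _awaiter;              // kept across the suspension point

    public void MoveNext()
    {
        try
        {
            TaskAwaiter awaiter;
            if (State != 0)
            {
                awaiter = Task.Delay(10).GetAwaiter();
                if (!awaiter.IsCompleted)
                {
                    State = 0;
                    _awaiter = awaiter;
                    // First real suspension: the builder copies (boxes) this struct to
                    // the heap so it outlives the caller's stack frame, and registers
                    // the boxed MoveNext as the continuation.
                    Builder.AwaitUnsafeOnCompleted(ref awaiter, ref this);
                    return;
                }
            }
            else
            {
                // Resumed on the heap copy after the delay completed.
                awaiter = _awaiter;
                _awaiter = default;
                State = -1;
            }

            awaiter.GetResult();
            State = -2;
            Builder.SetResult(X + 1);
        }
        catch (Exception ex)
        {
            State = -2;
            Builder.SetException(ex);
        }
    }

    public void SetStateMachine(IAsyncStateMachine stateMachine) =>
        Builder.SetStateMachine(stateMachine);
}

public static class Program
{
    public static Task<int> AddAfterDelayAsync(int x)
    {
        // The state machine starts life as a local struct on this stack frame.
        var sm = new AddAfterDelayStateMachine
        {
            Builder = AsyncTaskMethodBuilder<int>.Create(),
            X = x,
            State = -1,
        };
        sm.Builder.Start(ref sm);   // runs MoveNext synchronously until the first incomplete await
        return sm.Builder.Task;
    }

    public static async Task Main()
    {
        Console.WriteLine(await AddAfterDelayAsync(41)); // 42
    }
}
```

If the awaited task were already complete, `IsCompleted` would be true, `MoveNext` would run to the end on the caller's stack, and no state machine box would be allocated.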

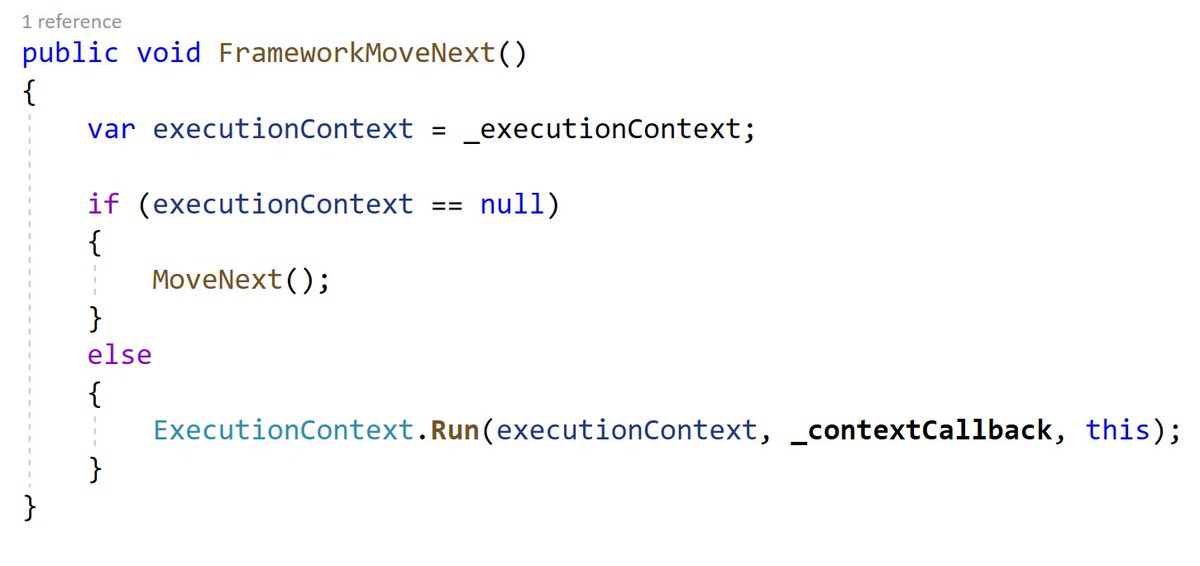

- It needs to capture the execution context (remember this?) and restore it when the continuation runs
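
A minimal sketch of why the capture matters, assuming a recent .NET runtime: `AsyncLocal<T>` values live on the `ExecutionContext`, so a value set before an `await` is only visible afterwards because the context was captured and restored. Suppressing flow shows the opposite case. `ExecutionContextDemo`, `RequestId`, and `RunAsync` are illustrative names, not part of any API.

```csharp
#nullable enable
using System;
using System.Threading;
using System.Threading.Tasks;

public static class ExecutionContextDemo
{
    // AsyncLocal<T> values are stored on the ExecutionContext, so they are only
    // visible after an await because the runtime captured and restored that context.
    private static readonly AsyncLocal<string?> RequestId = new AsyncLocal<string?>();

    private static async Task<string?> ReadAfterYieldAsync()
    {
        // Task.Yield forces a genuine suspension; the rest of the method runs
        // later as a continuation, typically on a thread-pool thread.
        await Task.Yield();
        return RequestId.Value;
    }

    private static Task<string?> ReadWithoutFlow()
    {
        // With flow suppressed, Task.Run captures no ExecutionContext, so the
        // delegate runs without the caller's AsyncLocal values. The using block
        // ends on the same thread that started it (no await inside).
        using (ExecutionContext.SuppressFlow())
        {
            return Task.Run(() => RequestId.Value);
        }
    }

    public static async Task<(string? WithFlow, string? WithoutFlow)> RunAsync()
    {
        RequestId.Value = "req-42";
        string? withFlow = await ReadAfterYieldAsync();
        string? withoutFlow = await ReadWithoutFlow();
        return (withFlow, withoutFlow);
    }

    public static async Task Main()
    {
        var (withFlow, withoutFlow) = await RunAsync();
        Console.WriteLine(withFlow);                 // req-42
        Console.WriteLine(withoutFlow ?? "(null)");  // (null)
    }
}
```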

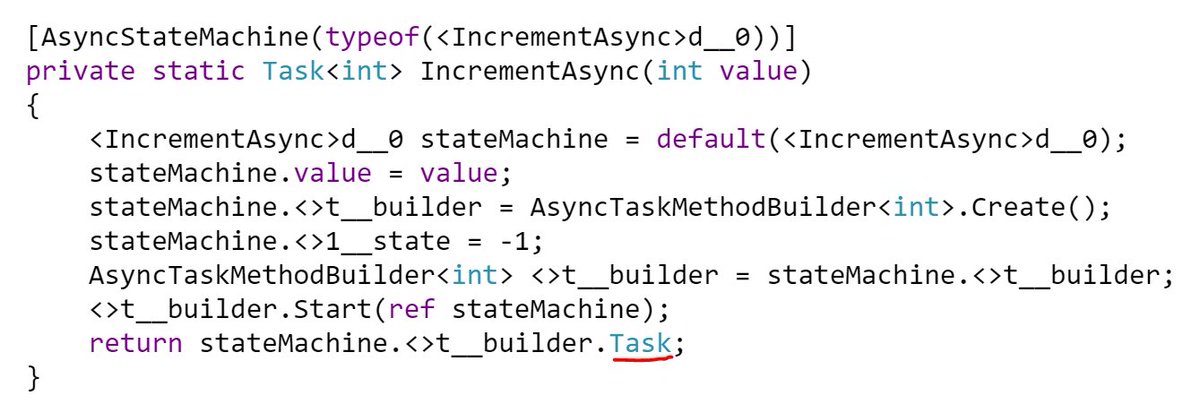

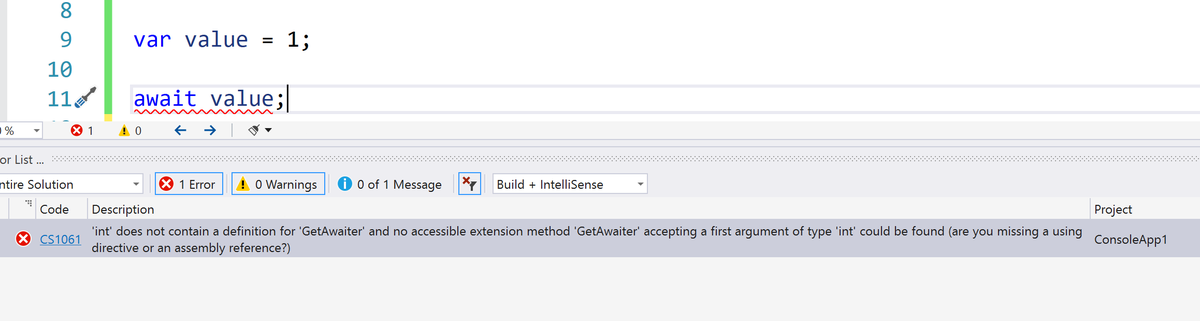

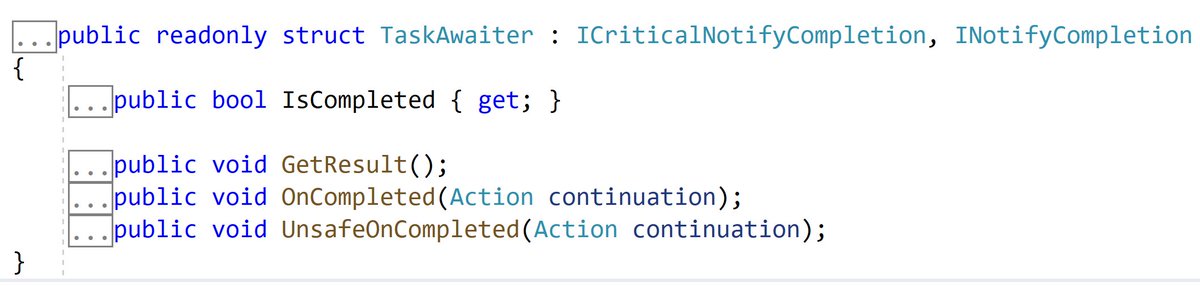

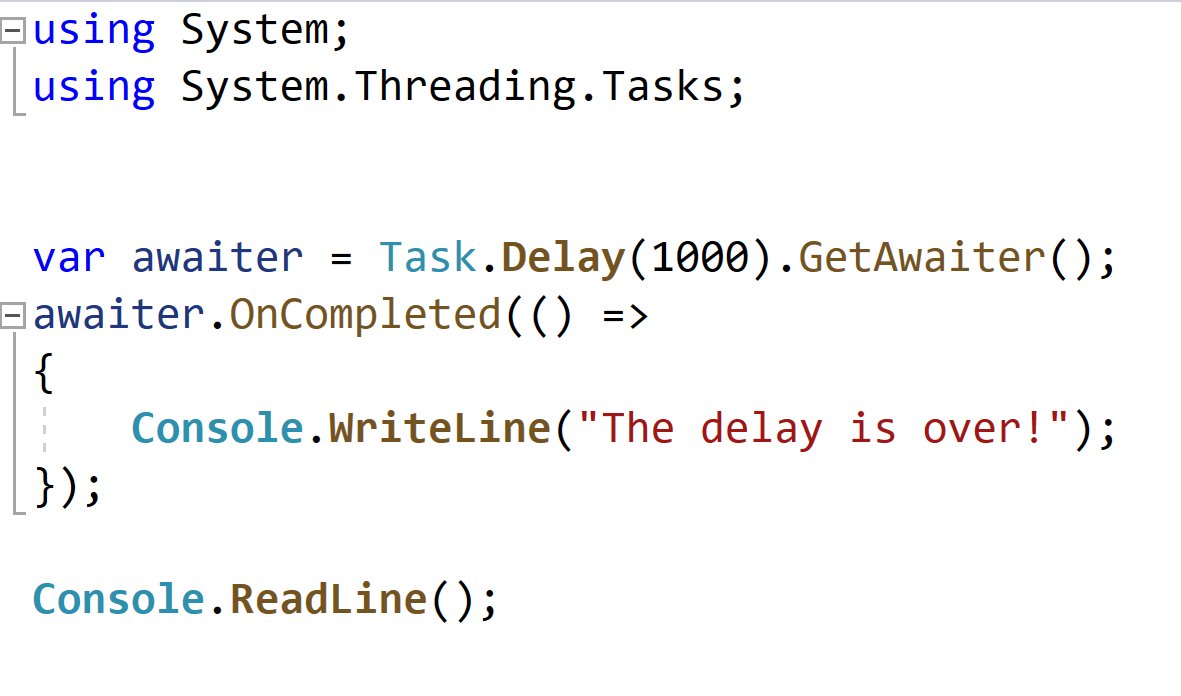

Side note: C# allows you to write your own Task-like types (invent your own promise type), and you can make any type awaitable by giving it a `GetAwaiter()` method, either an instance method or an extension method.
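
Making a type awaitable only requires the compiler's await pattern: a `GetAwaiter()` method returning an object with `IsCompleted`, `OnCompleted` (from `INotifyCompletion`), and `GetResult()`. The sketch below shows both routes, an extension method on an existing type and a hand-written awaiter. `Signal` and `WaitForAsync` are hypothetical names, and `Signal` is deliberately single-threaded with no locking, so it is not safe to set from another thread.

```csharp
#nullable enable
using System;
using System.Runtime.CompilerServices;
using System.Threading.Tasks;

// 1) Make an existing type awaitable with an extension GetAwaiter method.
public static class TimeSpanAwaitExtensions
{
    public static TaskAwaiter GetAwaiter(this TimeSpan delay) =>
        Task.Delay(delay).GetAwaiter();
}

// 2) A hand-written awaitable: a one-shot signal (single-threaded sketch, no locking).
public sealed class Signal
{
    private Action? _continuation;
    private bool _isSet;

    public void Set()
    {
        _isSet = true;
        Action? continuation = _continuation;
        _continuation = null;
        continuation?.Invoke();            // resume the awaiting method inline
    }

    public Awaiter GetAwaiter() => new Awaiter(this);

    // The await pattern: IsCompleted, OnCompleted (via INotifyCompletion), GetResult.
    public readonly struct Awaiter : INotifyCompletion
    {
        private readonly Signal _signal;
        public Awaiter(Signal signal) => _signal = signal;

        public bool IsCompleted => _signal._isSet;
        public void OnCompleted(Action continuation) => _signal._continuation = continuation;
        public void GetResult() { }
    }
}

public static class Program
{
    public static async Task WaitForAsync(Signal signal) => await signal;

    public static async Task Main()
    {
        await TimeSpan.FromMilliseconds(10);    // compiles thanks to the extension method

        var signal = new Signal();
        Task waiting = WaitForAsync(signal);
        Console.WriteLine(waiting.IsCompleted); // False
        signal.Set();
        Console.WriteLine(waiting.IsCompleted); // True
    }
}
```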

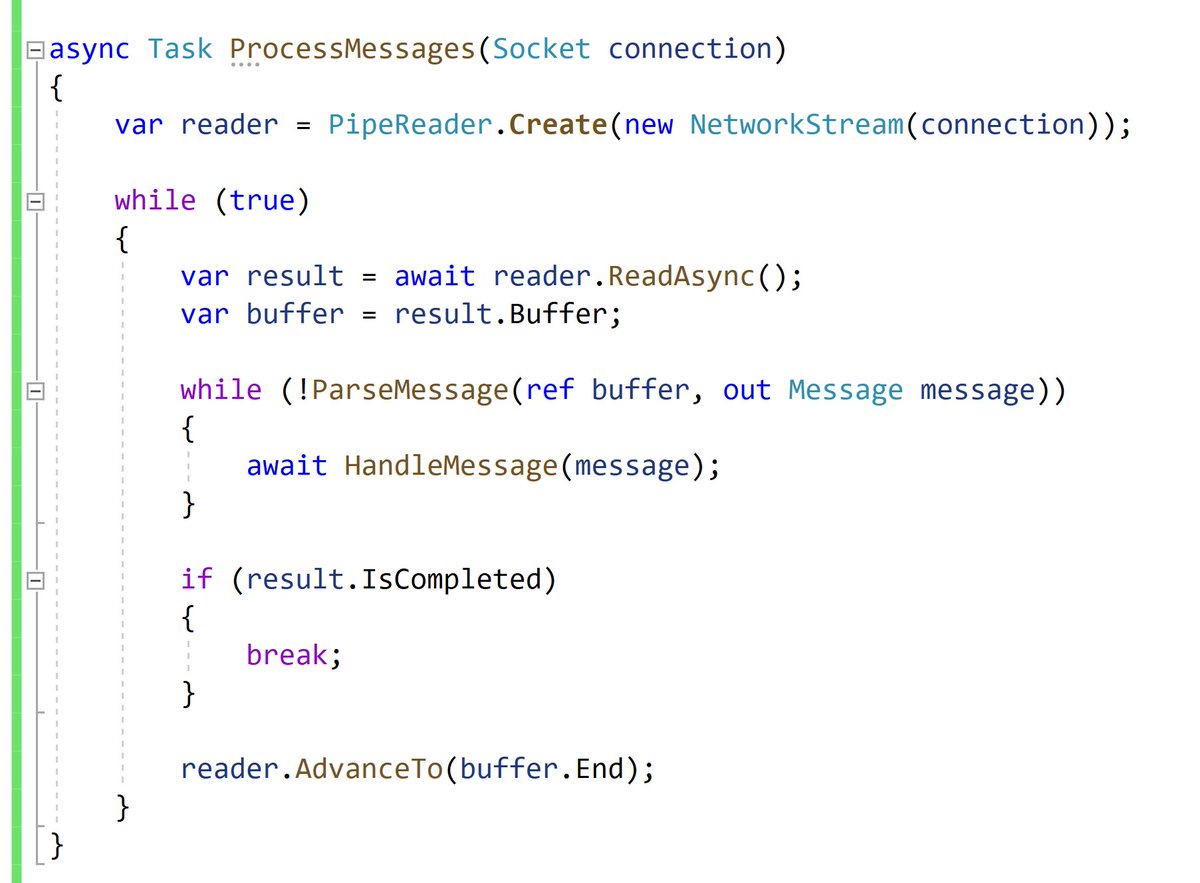

- Reusable allocations for things like socket reads and writes

- Reusable state machine for scheduling

- Fewer allocations per state machine
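
The reusable-allocation idea can be sketched with `IValueTaskSource<T>` and the BCL helper `ManualResetValueTaskSourceCore<T>` (available since .NET Core 3.0). One source object backs a series of `ValueTask<int>` operations, so each operation does not need a fresh `Task`. `ReusableIntSource` is a hypothetical name, and `Task.Run` only stands in for an I/O completion such as a read finishing.

```csharp
#nullable enable
using System;
using System.Threading.Tasks;
using System.Threading.Tasks.Sources;

// A reusable IValueTaskSource: one object backs many ValueTask<int> awaits.
// (ReusableIntSource is hypothetical; ManualResetValueTaskSourceCore<T> is the
// BCL helper that implements the completion/continuation bookkeeping.)
public sealed class ReusableIntSource : IValueTaskSource<int>
{
    // Mutable struct: must not be marked readonly, or calls would mutate a copy.
    private ManualResetValueTaskSourceCore<int> _core;

    // Queue continuations instead of running them inside Complete, so the next
    // Reset never happens while SetResult is still on the stack.
    public ReusableIntSource() => _core.RunContinuationsAsynchronously = true;

    // Begin a new operation; the version token guards against stale awaits.
    public ValueTask<int> StartOperation()
    {
        _core.Reset();
        return new ValueTask<int>(this, _core.Version);
    }

    public void Complete(int value) => _core.SetResult(value);

    public int GetResult(short token) => _core.GetResult(token);
    public ValueTaskSourceStatus GetStatus(short token) => _core.GetStatus(token);
    public void OnCompleted(Action<object?> continuation, object? state, short token,
                            ValueTaskSourceOnCompletedFlags flags) =>
        _core.OnCompleted(continuation, state, token, flags);
}

public static class Program
{
    public static async Task<int> SumThreeOperationsAsync()
    {
        var source = new ReusableIntSource();   // allocated once, reused below
        int sum = 0;
        for (int i = 1; i <= 3; i++)
        {
            ValueTask<int> pending = source.StartOperation();
            int value = i * 10;
            _ = Task.Run(() => source.Complete(value)); // stand-in for an I/O completion
            sum += await pending;                       // each ValueTask awaited exactly once
        }
        return sum;
    }

    public static async Task Main() =>
        Console.WriteLine(await SumThreeOperationsAsync()); // 60
}
```

Each `ValueTask<int>` returned by `StartOperation` must be awaited only once and before the next `StartOperation` call; if a stale token is used after `Reset`, the core throws instead of returning the wrong result.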