New pre-print with Katrin Erk:

"How to marry a star? Probabilistic constraints for meaning in context."

A theoretical paper on evocation: how people 'imagine' an entire situation from a few words, and how meaning is contextualised in the process.

arxiv.org/abs/2009.07936 /1

#lightreading summary ⬇️

When you hear 'the batter ran to the ball', what do you imagine? A batter? A ball? But perhaps also a pitch, an audience, the sun and a cap on the head of the batter.

What do you imagine when you hear 'the duchess drove to the ball'? /2

We propose a computational model which takes a (simple) sentence and builds a conceptual description for it. In the process of doing so, it captures appropriate word senses or other lexical meaning variations. E.g. that the batter's ball is not a dancing event. /3

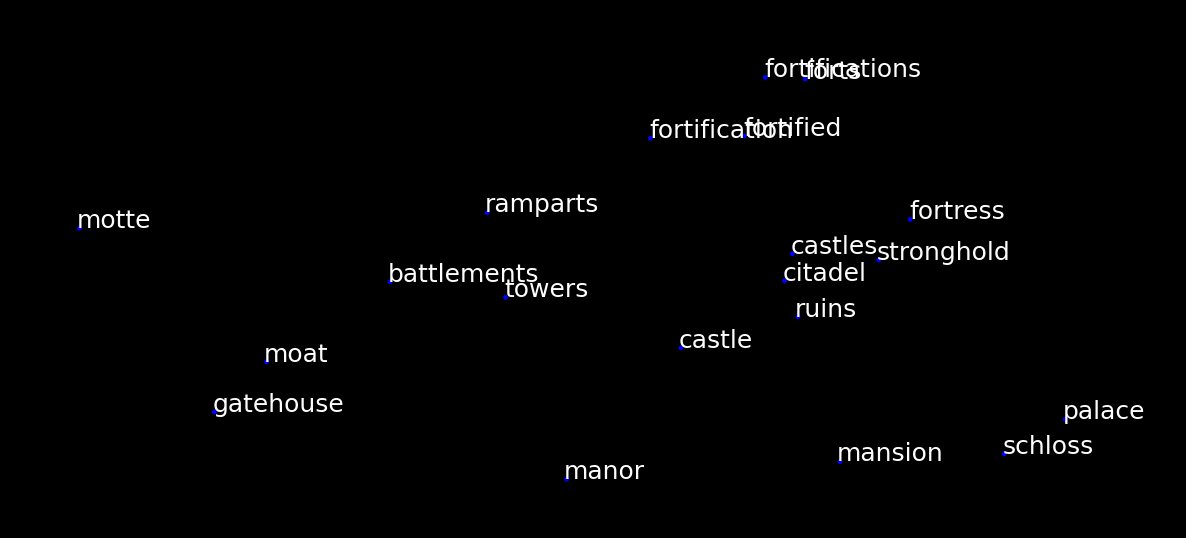

Simple example: hearing 'bat' in the context of 'vampire' should make you much more likely to understand that the speaker is talking about animals: /4
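
One way to picture the 'bat'/'vampire' effect is a plain Bayesian update over word senses. This is a toy sketch, not the paper's model: the sense labels, the priors and the likelihoods are invented for illustration.

```python
# Toy sense disambiguation for 'bat' given the context word 'vampire'.
# All numbers are assumptions made up for this sketch.
prior = {"bat_animal": 0.5, "bat_baseball": 0.5}

# Assumed P(context word 'vampire' | sense of 'bat').
likelihood_vampire = {"bat_animal": 0.09, "bat_baseball": 0.01}


def posterior(prior, likelihood):
    """Return P(sense | context) by Bayes' rule."""
    unnormalised = {s: prior[s] * likelihood[s] for s in prior}
    z = sum(unnormalised.values())
    return {s: p / z for s, p in unnormalised.items()}


post = posterior(prior, likelihood_vampire)
for sense, p in post.items():
    print(f"{sense}: {p:.2f}")
```

With these assumed numbers the animal sense ends up far more probable than the baseball sense, which is the qualitative behaviour described above.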

Hearing 'the vampire is eating' should activate the concept of an object (*what* is the vampire eating?) and that object might be more likely to be a blood orange than another vampire or a castle: /5

Sometimes, different interpretations compete with each other. Consider the sentence:

"The astronomer married the star."

What comes to your mind? The Hollywood star or the celestial object? Both? /6

To take care of this, the model generates not a single situation description but many, each one with its own probability. For some speaker, the description with the Hollywood star might be more likely than the one where the astronomer married Betelgeuse. Or vice-versa. /7

A situation description consists of:

* scenarios (at-the-restaurant, gothic-novel)

* concepts (champagne, vampire)

* individuals (this bottle of champagne, Dracula)

* features of individuals (having a cork, being pale)

* roles (being the agent of a drinking event) /8
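
The five ingredients listed above could be held in a small data structure. This is a minimal sketch under my own assumptions: the class name, the field layout, the identifiers (`dracula`, `bottle1`, `e1`), the `patient` role and the probability value are all hypothetical and not taken from the paper or its notebook.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class SituationDescription:
    """Hypothetical container for one situation description."""
    scenarios: List[str]                # e.g. gothic-novel
    concepts: Dict[str, str]            # individual -> concept it instantiates
    features: Dict[str, List[str]]      # individual -> its features
    roles: List[Tuple[str, str, str]]   # (role, event individual, filler)
    probability: float                  # probability of this description

    def individuals(self) -> List[str]:
        """All individuals mentioned in the description, sorted by name."""
        return sorted(self.concepts)


sd = SituationDescription(
    scenarios=["gothic-novel", "at-the-restaurant"],
    concepts={"dracula": "vampire", "bottle1": "champagne", "e1": "drinking"},
    features={"dracula": ["being pale"], "bottle1": ["having a cork"]},
    roles=[("agent", "e1", "dracula"), ("patient", "e1", "bottle1")],
    probability=0.2,  # invented number
)
print(sd.individuals())
```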

Technically speaking, the account is implemented as a probabilistic generative model. It takes the logical form of a sentence:

∃x,y [astronomer(x)∧star(y)∧marry(x,y)]

and generates the conceptual descriptions most likely to account for that logical form. /9
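
To give a rough feel for 'logical form in, ranked descriptions out', here is a brute-force toy. It is not the generative model from the paper: it assumes one scenario per description, enumerates every sense choice, and uses invented scenario names, concept labels and probabilities.

```python
from itertools import product

# The logical form above, written as (predicate, arguments) atoms.
logical_form = [("astronomer", ("x",)), ("star", ("y",)), ("marry", ("x", "y"))]

# Hypothetical candidate concepts for each predicate.
senses = {
    "astronomer": ["astronomer"],
    "star": ["celebrity", "celestial_body"],
    "marry": ["wedding"],
}

# Hypothetical scenarios: (P(scenario), P(concept | scenario)). Invented numbers.
scenarios = {
    "observatory": (0.5, {"astronomer": 0.5, "celestial_body": 0.4,
                          "celebrity": 0.05, "wedding": 0.05}),
    "hollywood": (0.5, {"astronomer": 0.05, "celestial_body": 0.05,
                        "celebrity": 0.5, "wedding": 0.4}),
}


def rank_descriptions(logical_form, senses, scenarios):
    """Score every (scenario, sense assignment) pair, normalise, and sort."""
    words = [pred for pred, _ in logical_form]
    scored = []
    for scen, (p_scen, p_concept) in scenarios.items():
        for choice in product(*(senses[w] for w in words)):
            score = p_scen
            for concept in choice:
                score *= p_concept[concept]
            scored.append(((scen, dict(zip(words, choice))), score))
    total = sum(s for _, s in scored)
    ranked = [(desc, s / total) for desc, s in scored]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


ranked = rank_descriptions(logical_form, senses, scenarios)
for (scen, assignment), p in ranked:
    print(f"{p:.3f}  {scen}: star -> {assignment['star']}")
```

Changing the assumed scenario priors shifts which reading of 'star' wins, which mirrors the point that different speakers may rank the descriptions differently.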

For those interested, please check out the pre-print 🙂 arxiv.org/abs/2009.07936

A Jupyter notebook with working examples: github.com/minimalparts/M…

/10
