Everyone hates college rankings, but they're not going away. So why not make them do some actual good instead? roanoke.com/opinion/column…

This Atlantic piece provides links to 2 decades of people criticizing college rankings, from Malcolm @Gladwell to @opinion_joe. theatlantic.com/education/arch…

The complaints about US News and World Report's rankings go back further than that, however. Here's a story from 1989 about college presidents meeting with the magazine in 1987 to complain.

33 years after that visit, the magazine is gone but the rankings persist.

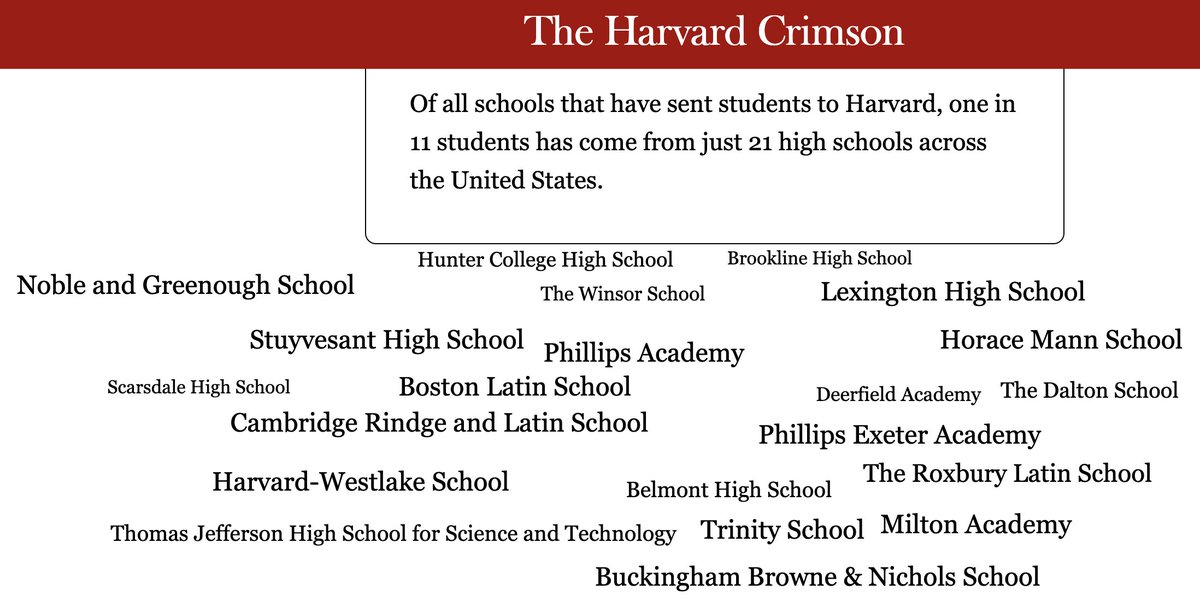

I won't rehearse all the complaints about the rankings here. They're well-known, largely correct, and utterly ineffectual. The basic complaint is that they motivate bad behaviors and decisions among students, high schools, and institutions.

What if the rankings could motivate good behaviors, however? That's what Sen. @ChrisCoons pushed US News to do in 2016, when he called on them to make accessibility and affordability part of the rankings. coons.senate.gov/news/press-rel…

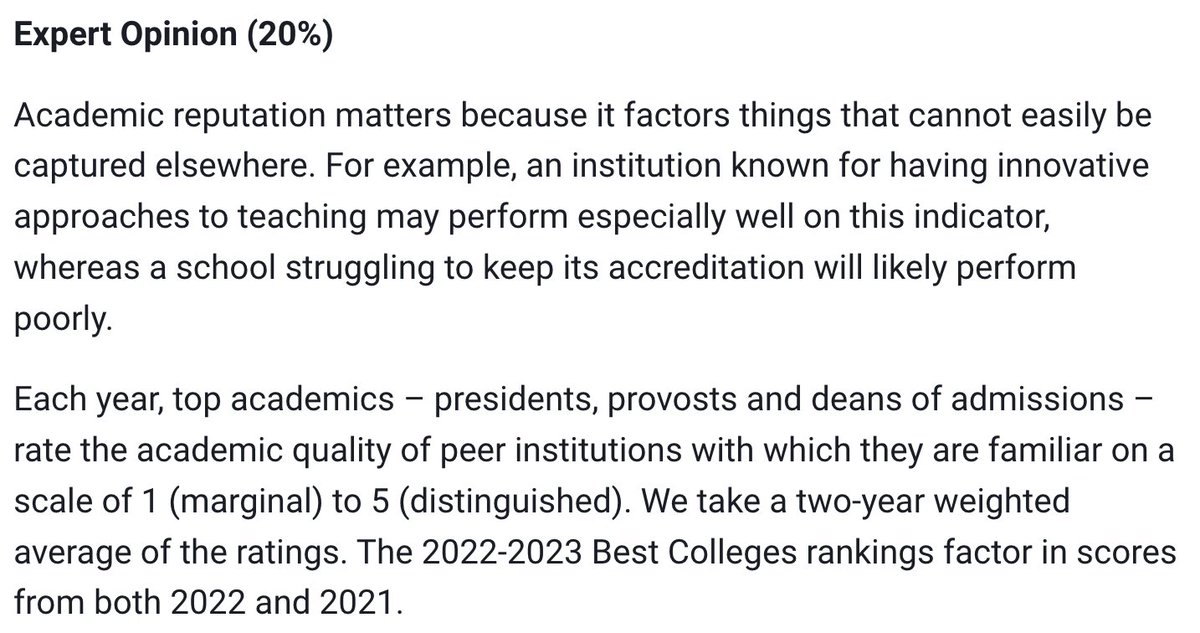

US News did it. They added a social mobility component that looks at the graduation rates of students on Pell Grants and factored in how many students at a school got a Pell Grant. usnews.com/best-colleges/…

It's hard to entirely disentangle all the inputs and assign a cause without full access to the data, but almost all of the 25 schools that are the worst at enrolling low-income students dropped in the rankings. Miami (OH) and Dayton both dropped more than 20 spots.

Currently, the social mobility score counts for just 5% of a school's overall score. **Imagine if it counted for 25%!** I think we'd see a radical shift in the rankings, which would force a lot of schools to correct their priorities.
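To make the 5% vs. 25% point concrete, here's a rough sketch of the arithmetic. It assumes a simplified two-part composite (social mobility plus "everything else"), which is not US News's actual formula, and the two schools and their scores are made up purely for illustration.

```python
def overall_score(social_mobility, other_factors, mobility_weight):
    """Simplified composite: mobility_weight goes to social mobility,
    the remainder to all other factors combined (an assumption, not
    the real US News methodology)."""
    return mobility_weight * social_mobility + (1 - mobility_weight) * other_factors


# Hypothetical schools: (social mobility score, score on all other factors), both 0-100.
schools = {
    "School A (high access)": (90, 70),
    "School B (low access)": (30, 80),
}


def rank(schools, mobility_weight):
    """Return school names ordered from highest to lowest composite score."""
    scored = {
        name: overall_score(mobility, other, mobility_weight)
        for name, (mobility, other) in schools.items()
    }
    return sorted(scored, key=scored.get, reverse=True)


if __name__ == "__main__":
    for weight in (0.05, 0.25):
        print(f"weight={weight:.0%}: {rank(schools, weight)}")
```

With these made-up numbers, the low-access school comes out ahead at a 5% weight and the high-access school comes out ahead at 25%. That's the kind of shift I mean.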

I'm aware that some other magazines place more emphasis on social mobility rankings, but there are 2 issues here:

1. Their rankings have methodological problems that perversely reward wealthy schools with low Pell shares.

2. USN has cornered the market.

https://twitter.com/James_S_Murphy/status/1304428309636435968?s=20

I think that USNWR's Best Colleges lists actually do a better job at ranking social mobility by focusing on what the available data let us measure: access and completion.

Given the dire state of the economy and of university budgets, it's vital to protect the interests of low-income students. It's in everyone's interest to do so.

And let's celebrate places like @UCRiverside @UCIrvine @Rutgers_Newark @GeorgiaStateU and @HowardU that topped the list, not the same old same olds that don't need the attention.
