I have to say I’m somewhat surprised by these pronouncements, and the modus thereof (RR without AR, etc.), particularly given the expertise.

The replies here on methods are important, especially this:

https://twitter.com/boulware_dr/status/1311332673969848325?s=21

⚠️ of “more research” feel cursory.

#classiccovid

https://twitter.com/_miguelhernan/status/1311304453484679174

Also, it was not pre-registered, and the pre-print version is certainly not PRISMA-compliant.

Hmmm....

Also, did the authors check if their review was needed?

There’s a living systematic review published back in August with sound methods:

acpjournals.org/doi/10.7326/M2…

Conveniently, its last search date just preceded the 3 studies included in this pre-print.

There are currently 12 pages of systematic reviews on hydroxychloroquine returned in @epistemonikos:

epistemonikos.org/en/search?q=Hy…

Many of these look at prophylaxis.

#racetobethewinner

#classiccovid

Another issue is that no consideration has been given to the certainty of the evidence, e.g. risk of systematic bias (2 of the studies are pre-prints) and random error (amount of information, precision). Given that one of the authors has written the book on causal inference, this is ironic.

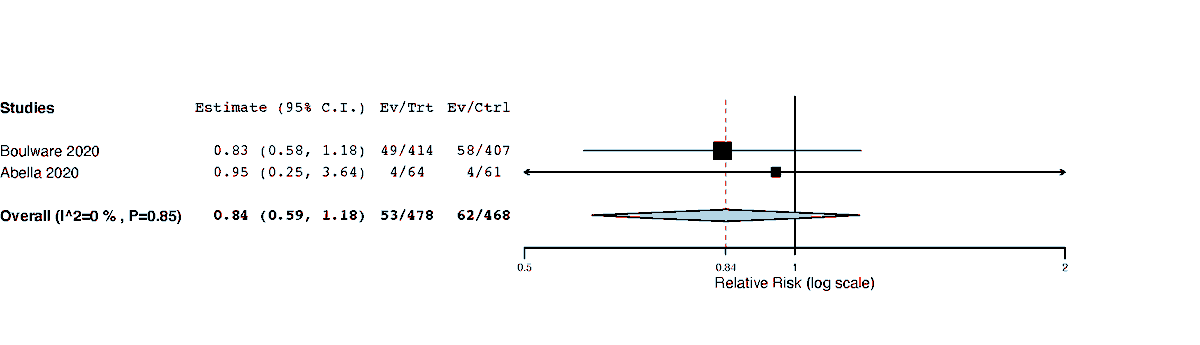

So @_MiguelHernan has updated the MA to include this latest study: jamanetwork.com/journals/jamai…

If we include only the two studies that have been peer-reviewed, then the MA looks like this.

That's before we again look at the overall certainty of evidence.

Also, I did that meta-analysis in 1 min. This shows the absolute pointlessness of MAs outside the context of a properly conducted and reported systematic review that includes an assessment of the certainty of evidence (i.e. GRADE or similar).
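To show how little effort the pooling step itself takes, here is a minimal sketch of a fixed-effect, inverse-variance meta-analysis of risk ratios. It assumes each trial is supplied as hypothetical 2x2 counts (events and participants per arm) with no zero cells; it is not the method used in the pre-print or by @_MiguelHernan, and it says nothing about bias or certainty.

```python
import math


def log_rr_and_se(events_t, n_t, events_c, n_c):
    """Log risk ratio and its standard error from one trial's 2x2 counts.

    Assumes no zero cells (no continuity correction is applied).
    """
    rr = (events_t / n_t) / (events_c / n_c)
    se = math.sqrt(1 / events_t - 1 / n_t + 1 / events_c - 1 / n_c)
    return math.log(rr), se


def pool_fixed_effect(studies):
    """Inverse-variance fixed-effect pooled RR with a 95% CI.

    `studies` is a list of (events_t, n_t, events_c, n_c) tuples.
    Returns (pooled RR, lower 95% limit, upper 95% limit).
    """
    total_weight, weighted_sum = 0.0, 0.0
    for counts in studies:
        log_rr, se = log_rr_and_se(*counts)
        w = 1 / se ** 2  # inverse-variance weight
        total_weight += w
        weighted_sum += w * log_rr
    pooled = weighted_sum / total_weight
    se_pooled = math.sqrt(1 / total_weight)
    z = 1.96  # normal quantile for a two-sided 95% CI
    return (
        math.exp(pooled),
        math.exp(pooled - z * se_pooled),
        math.exp(pooled + z * se_pooled),
    )
```

The function names and the fixed-effect model are illustrative choices; a random-effects model would give a wider CI when trials disagree.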

So poor.

If the risk of bias of the trials is high, then we downgrade the evidence from high to moderate.

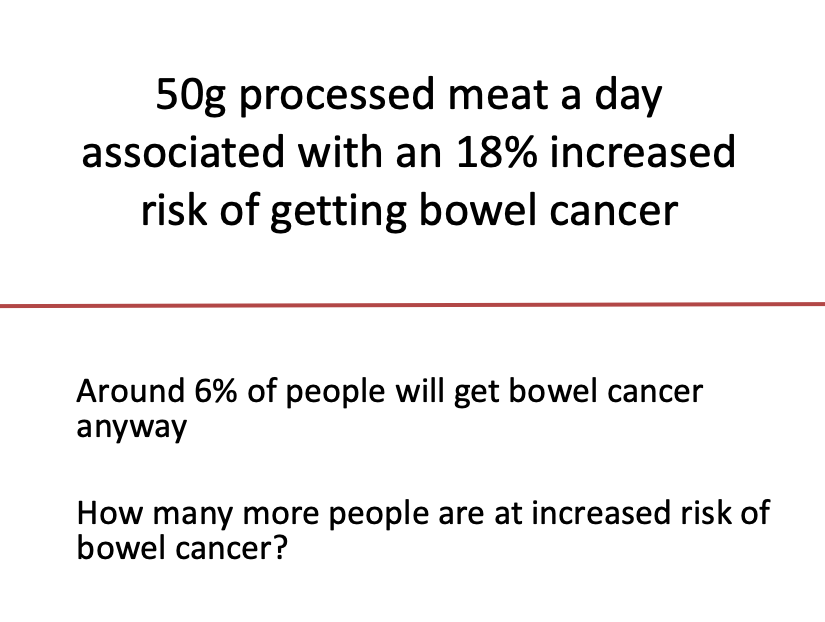
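GRADE certainty can be thought of as an ordered scale that starts at high for randomised trials and moves down a level for each serious concern. A minimal sketch, assuming a simple additive count of downgrades per domain (real GRADE ratings are judgements, not arithmetic, and the domain names here are hypothetical labels):

```python
from enum import IntEnum


class Certainty(IntEnum):
    """GRADE certainty levels, ordered from lowest to highest."""
    VERY_LOW = 1
    LOW = 2
    MODERATE = 3
    HIGH = 4


def downgrade(start, levels):
    """Move down `levels` GRADE levels, never going below VERY_LOW."""
    return Certainty(max(Certainty.VERY_LOW, start - levels))


def rate(domains, start=Certainty.HIGH):
    """Apply per-domain downgrades, e.g. {"risk_of_bias": 1, "imprecision": 2}.

    `start` defaults to HIGH, the usual starting point for randomised trials.
    """
    return downgrade(start, sum(domains.values()))
```

Here `rate({"risk_of_bias": 1})` gives `MODERATE`, and adding `"imprecision": 1` gives `LOW`, mirroring the steps in this thread.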

We have to decide what a clinically meaningful effect is here. A 25% relative risk reduction (i.e. RR 0.75) is considered by some as 'meaningful' for estimates of non-life-threatening outcomes, e.g. risk of testing COVID-positive.

In the pre-print MA, the CI crosses the meaningful threshold (as low as 0.61) but also includes trivial/no benefit. I would downgrade by one level again here, meaning we have low quality/certainty of evidence.

For my MA, I would consider downgrading by two levels, as its CI includes potentially meaningful harm (so very low quality).
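The imprecision judgement above can be sketched as a check of where the CI falls relative to the chosen thresholds. This assumes a meaningful-benefit threshold of RR 0.75 (the 25% relative risk reduction mentioned above) and, as a hypothetical choice, a mirror-image harm threshold of 1/0.75 ≈ 1.33. The function name and the one/two-level rule are illustrative simplifications, not official GRADE cut-offs.

```python
def imprecision_downgrade(ci_low, ci_high, benefit=0.75, harm=1 / 0.75):
    """Suggested imprecision downgrade (0, 1 or 2 levels) for a risk-ratio CI.

    benefit: RR below which benefit is treated as meaningful (25% RRR).
    harm: RR above which harm is treated as meaningful (assumed mirror image).
    """
    # CI runs from meaningful benefit right through to meaningful harm.
    if ci_low < benefit and ci_high > harm:
        return 2
    # CI straddles one threshold: meaningful effect plus trivial/no effect.
    crosses_benefit = ci_low < benefit <= ci_high
    crosses_harm = ci_low <= harm < ci_high
    if crosses_benefit or crosses_harm:
        return 1
    # CI sits entirely on one side of both thresholds.
    return 0
```

A CI with a lower limit of 0.61 and an upper limit around 1 returns 1 (the pre-print case); a CI spanning both thresholds returns 2.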

I haven't the time to look at whether there is indirectness (the studies don't include relevant/similar populations, intervention, comparator, outcomes) for the research question.

It probably also fails on optimal information size (enough information), as here we have <300 events.
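A rough sketch of the optimal information size check, assuming the standard two-proportion sample-size formula with two-sided α = 0.05 and 80% power, alongside the <300 events rule of thumb. The control-arm risk is an input you would have to supply; nothing here comes from the studies in question, and the function names are hypothetical.

```python
import math
from statistics import NormalDist


def optimal_information_size(control_risk, rrr, alpha=0.05, power=0.80):
    """Total participants (both arms) needed to detect a relative risk reduction.

    Uses the unpooled two-proportion formula:
    n_per_arm = (z_{1-alpha/2} + z_{power})^2 * [p_c(1-p_c) + p_t(1-p_t)] / (p_c - p_t)^2
    """
    p_c = control_risk
    p_t = control_risk * (1 - rrr)  # treated-arm risk implied by the RRR
    z_a = NormalDist().inv_cdf(1 - alpha / 2)
    z_b = NormalDist().inv_cdf(power)
    variance = p_c * (1 - p_c) + p_t * (1 - p_t)
    per_arm = (z_a + z_b) ** 2 * variance / (p_c - p_t) ** 2
    return 2 * math.ceil(per_arm)


def fails_event_rule_of_thumb(total_events, threshold=300):
    """GRADE-style rule of thumb: fewer than ~300 events suggests imprecision."""
    return total_events < threshold
```

With a hypothetical 10% control-arm risk and a 25% relative risk reduction, `optimal_information_size(0.10, 0.25)` comes to roughly 4,000 participants in total.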

The only thing it (the totality of evidence) doesn't get downgraded on is 'consistency' (results go in the same direction, overlapping CIs, etc.).
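Consistency is usually judged by eye (direction of effects, overlapping CIs) alongside statistics such as Cochran's Q and I². A minimal sketch, assuming each study is given as a log risk ratio and its standard error (hypothetical inputs); with only two or three studies these statistics are themselves very imprecise.

```python
def cochran_q_and_i2(log_rrs, ses):
    """Cochran's Q and I^2 for a set of study log risk ratios.

    I^2 = max(0, (Q - df) / Q): the share of variation beyond chance.
    """
    weights = [1 / se ** 2 for se in ses]  # inverse-variance weights
    pooled = sum(w * y for w, y in zip(weights, log_rrs)) / sum(weights)
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, log_rrs))
    df = len(log_rrs) - 1
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0
    return q, i2
```

Identical study estimates give Q ≈ 0 and I² = 0; estimates pointing in opposite directions with small standard errors push I² towards 1.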

Publication bias also needs looking at. No idea what the authors of the pre-print did on this.

How many trials on hydroxychloroquine have been registered/non-registered?

How many of these are unpublished? That said, the fact that the existing trials are all null trials does indicate that studies with p>0.05 are being published.

A good SR+MA would do all these checks.

It's a pre-print, so we can give it the benefit of the doubt.

But a press-release tweet of a single point estimate that considers few of these CRITICAL issues, from an MA that wasn't pre-registered, + poor reporting = almost ignorable.

A tweet to nicely end this thread on:

https://twitter.com/mendel_random/status/1311372732660174848
