8x10Gb backplane for the basement has arrived - will install this after work so I'm not interrupting school internet and such.

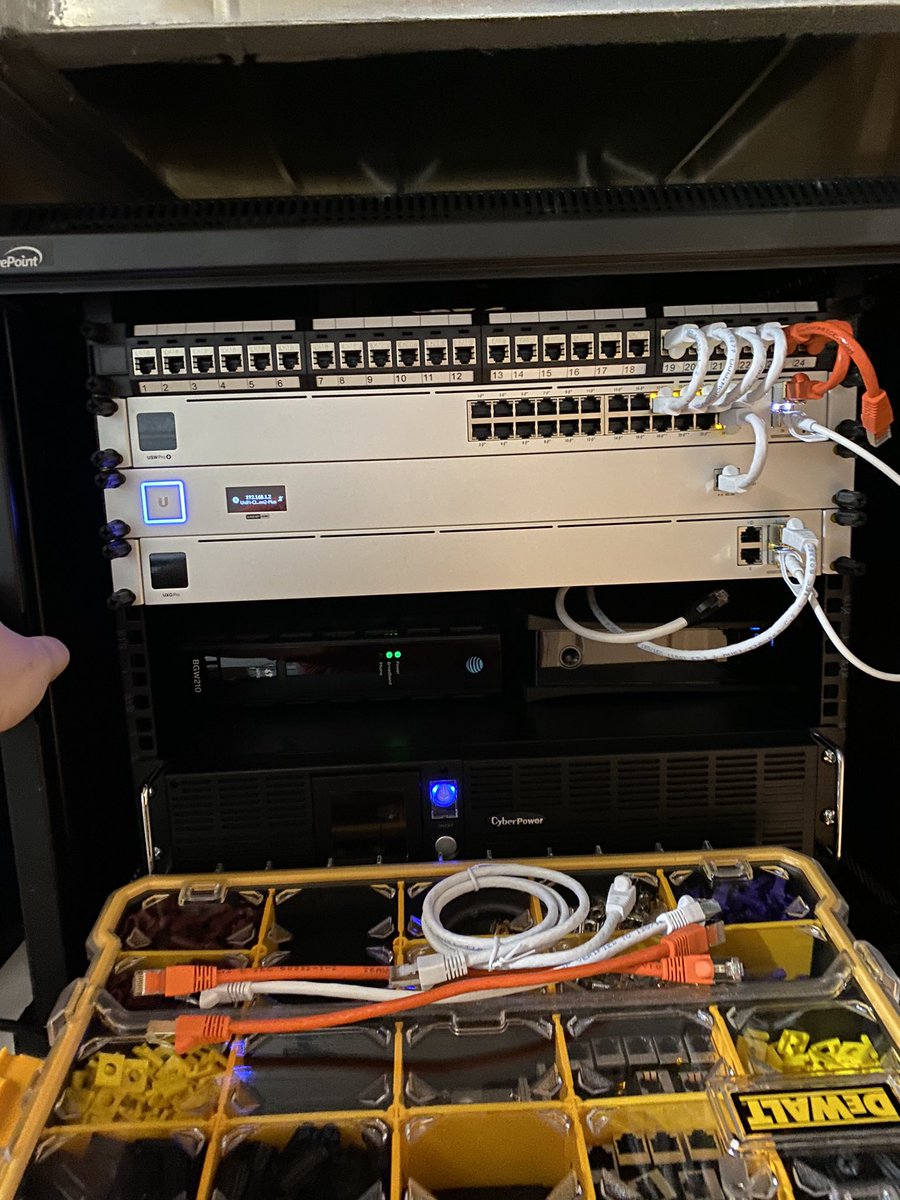

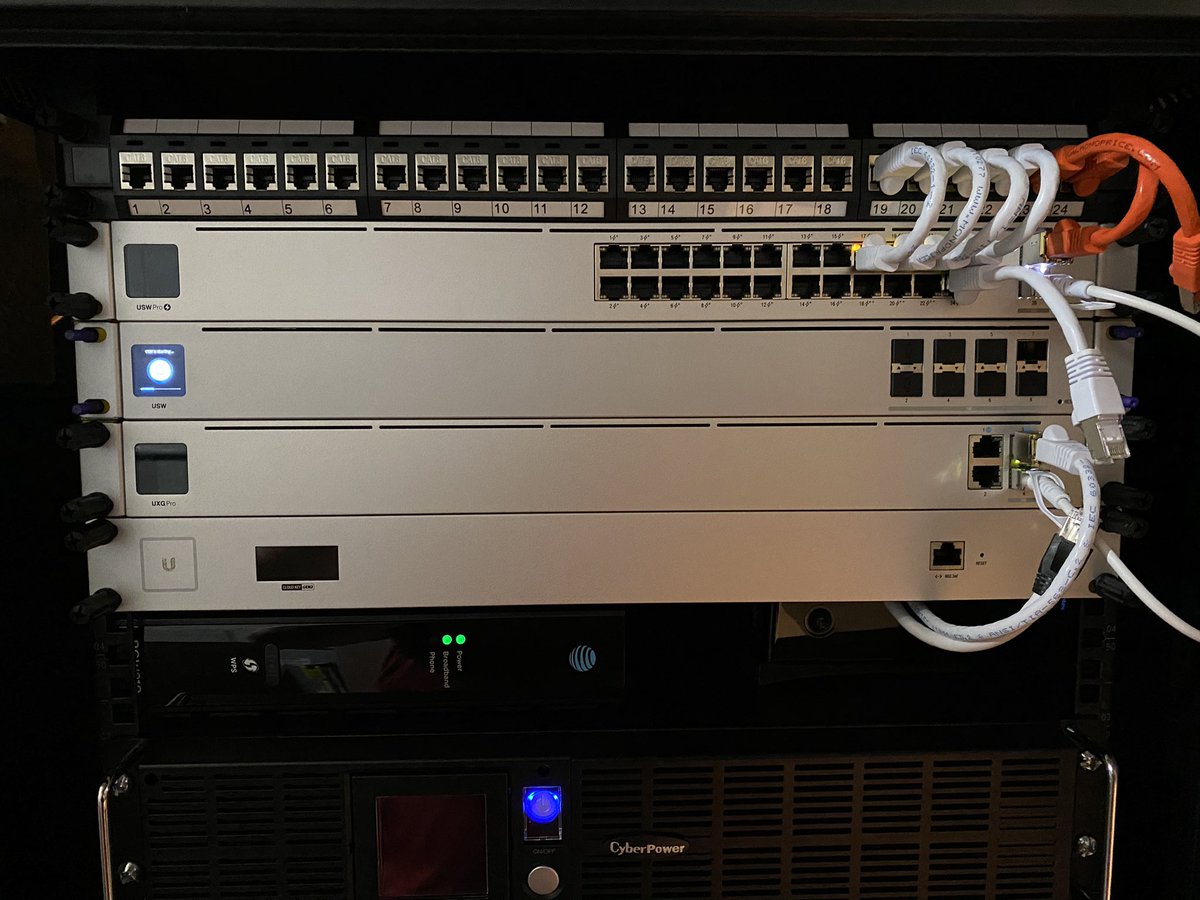

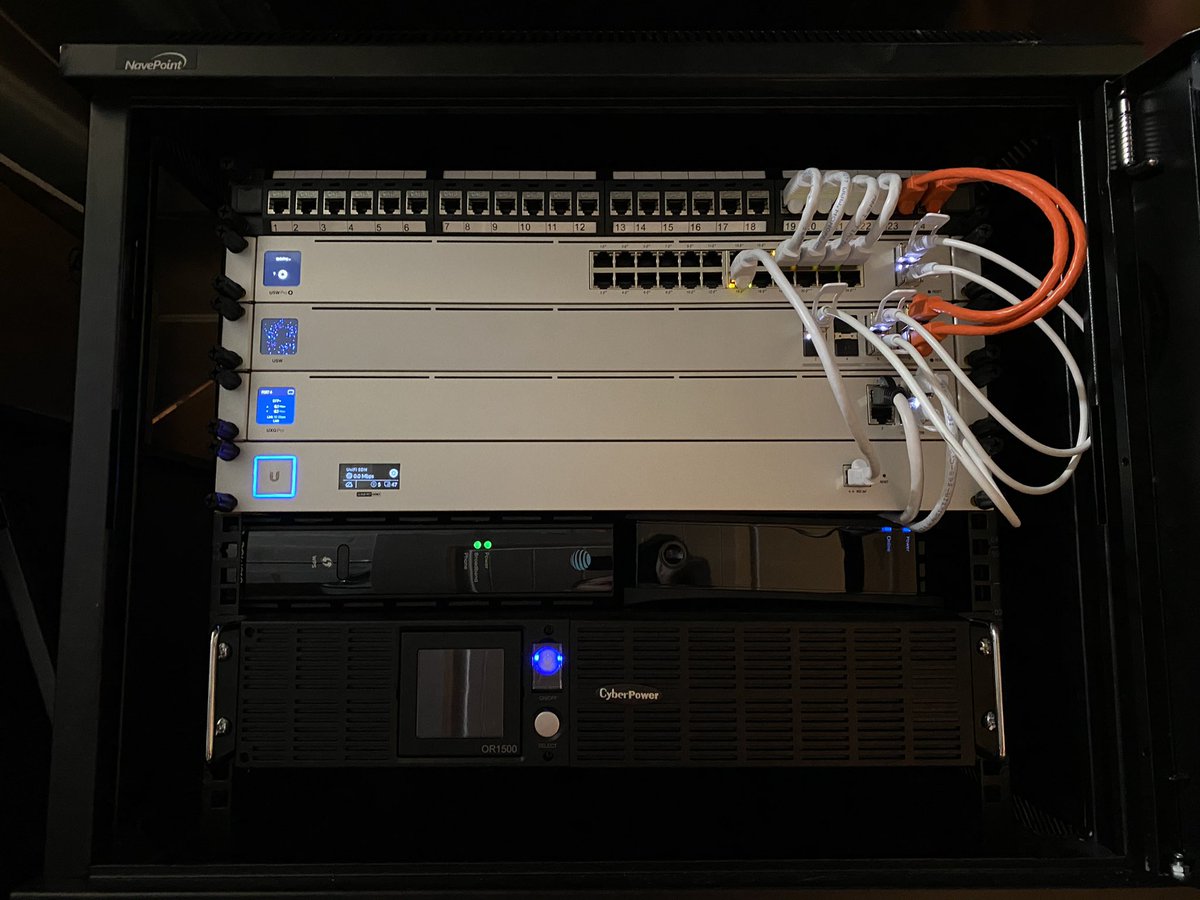

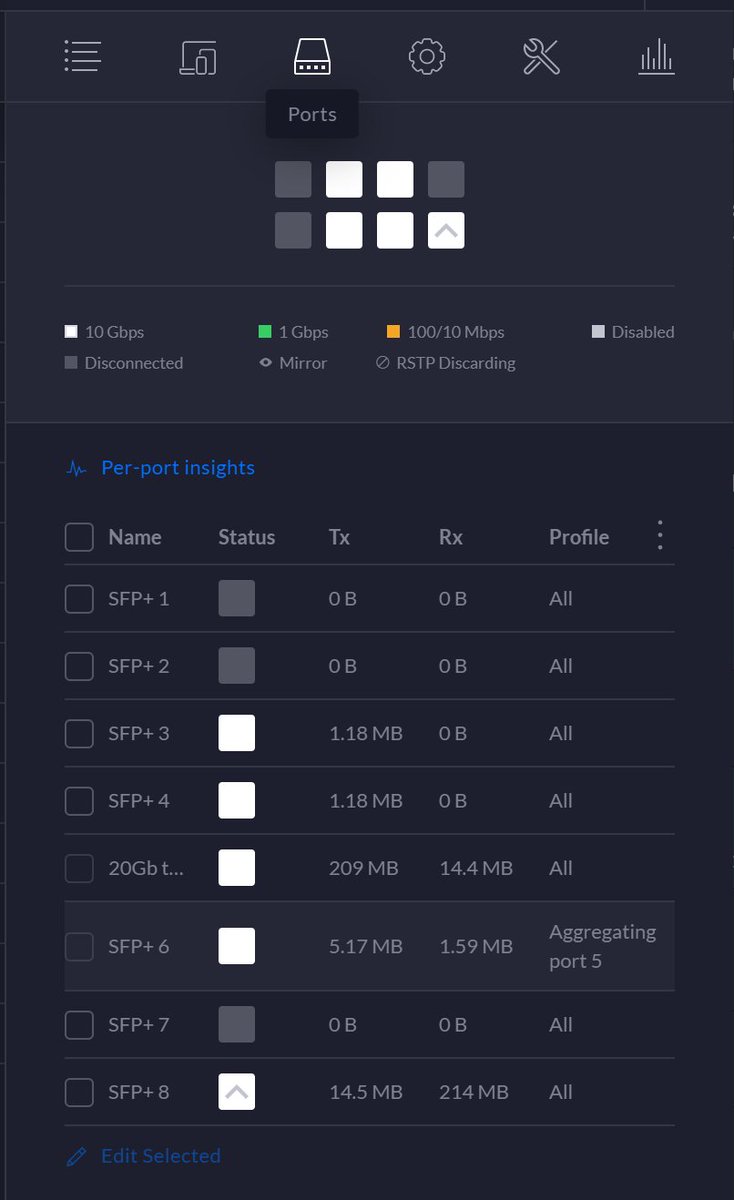

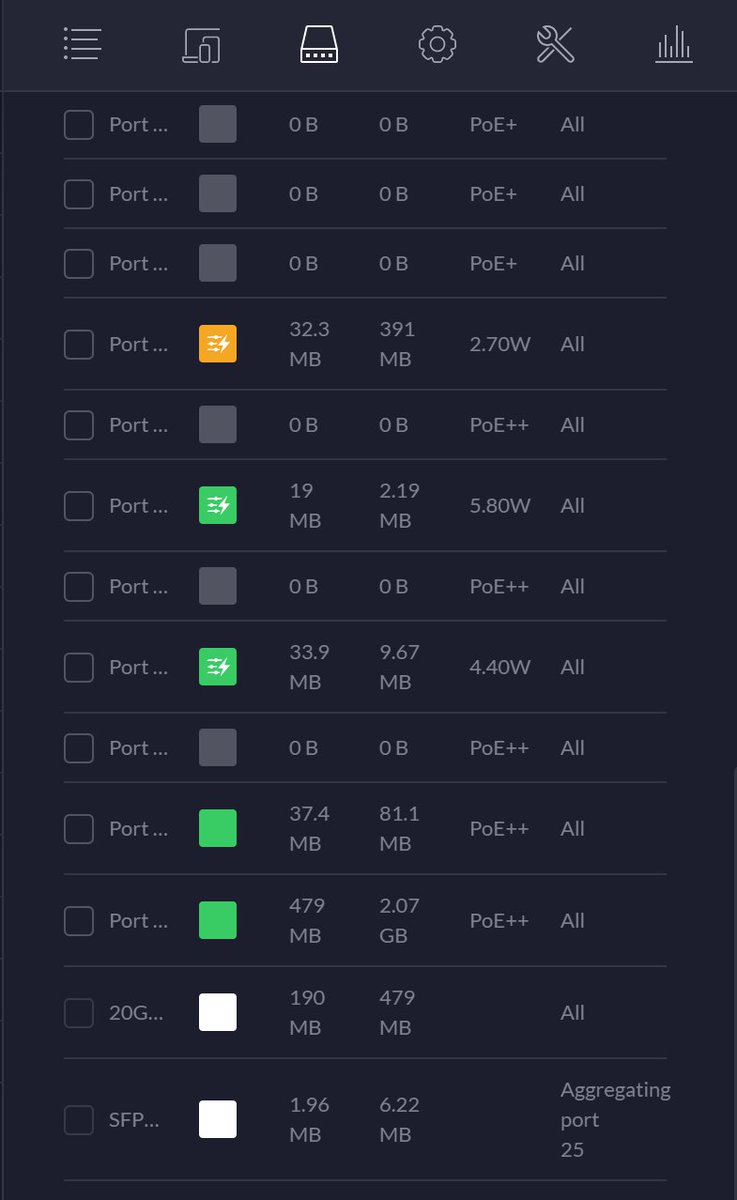

Alrighty, all configured (I kept doing it backwards after moving the controller...that's on me). 20Gb to the 24x PoE, currently 2Gb to upstairs (ready to go 20Gb), and UXG-Pro routing 10Gb through to this spine. Overall: looking good.

Also wasn’t happy with front panel on the CloudKey - so routed back through the rear port and patch panel:

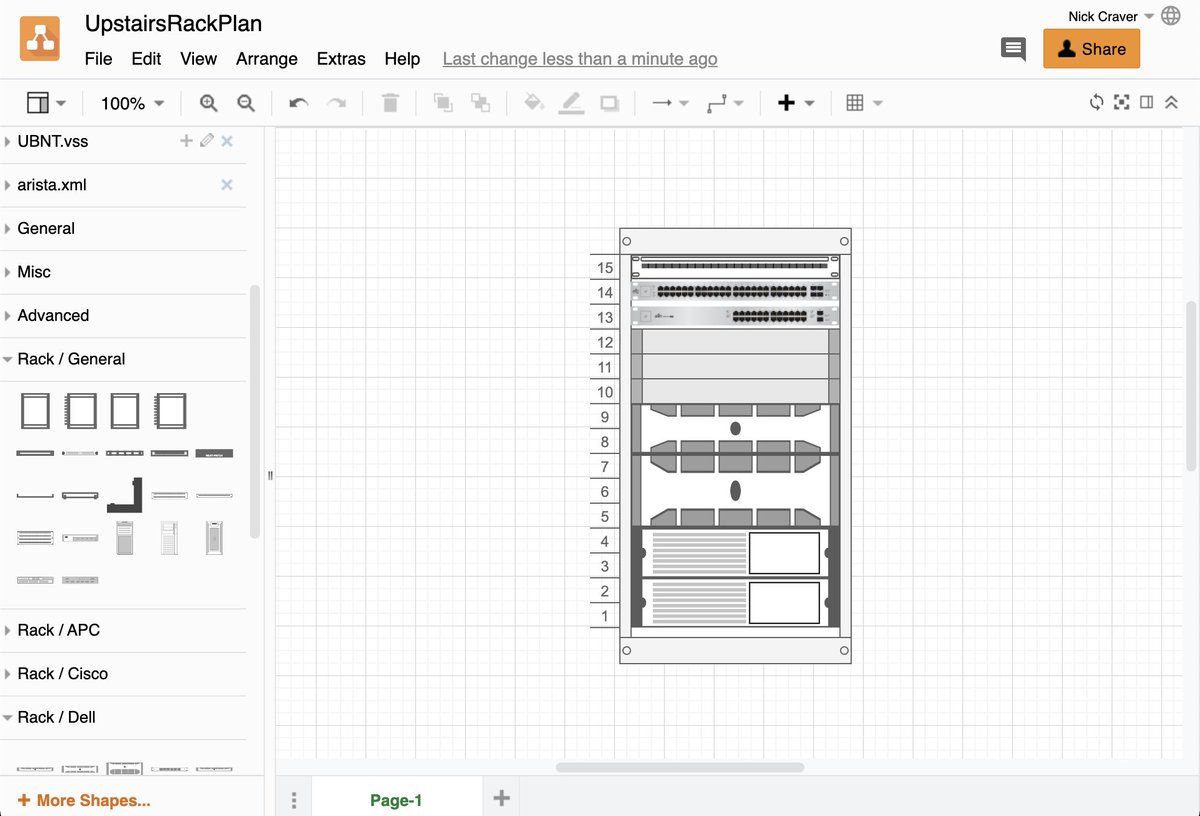

Alrighty, let's start upstairs. First up is rack, patch panel, keystone bits, and a USW-Pro-24-POE. I'm not sure if I'm going with an 8x 10Gb spine switch upstairs or the slightly larger 28x 10Gb + 4x 25Gb option (depends on availability). Still, step 1 is 2x 10Gb to basement.

Overall: I'm converting my existing layout of some network gear, UPSes, and drives in 2 IKEA units with desktop systems on top to...a rack! That'll give me more office space overall. Here's a recent-ish pic of the current layout. Picture the right as a 15U rack instead:

I'll have overall less gear in the end - that second black tower will be a 2U-3U server chassis. Main desktop (new build, more to come) will be a 3U chassis most likely. 2U for switches, 1 for patch, and 2-4U for UPS down bottom (likely 1500VA + expansion battery...we'll see!)
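For anyone following along with the math, here's a quick sketch of that rack budget. The U counts are the ranges from the plan above (I'm treating the "most likely 3U" desktop as exactly 3U, which is an assumption), and `rack_budget` is just a made-up helper name:

```python
RACK_UNITS = 15  # the 15U office rack

# (min_u, max_u) per item, taken from the plan above
plan = {
    "server chassis": (2, 3),
    "main desktop chassis": (3, 3),   # assumed fixed at 3U
    "switches": (2, 2),
    "patch panel": (1, 1),
    "UPS + expansion battery": (2, 4),
}

def rack_budget(plan, total_units):
    """Return (min used, max used, min free, max free) in rack units."""
    low = sum(lo for lo, _ in plan.values())
    high = sum(hi for _, hi in plan.values())
    return low, high, total_units - high, total_units - low

low, high, free_min, free_max = rack_budget(plan, RACK_UNITS)
print(f"Planned: {low}-{high}U, free: {free_min}-{free_max}U of {RACK_UNITS}U")
```

So even the largest version of the plan should leave a couple of U spare for airflow or future gear.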

The drives and Synology in the upper left, time capsule in the upper right, etc. will all be collapsed into that second tower replacement. Maybe a custom build with TrueNAS, maybe Synology, not sure yet. Likely a ~6-8x 12TB option for photo backup, Plex, etc. Suggestions welcome!
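Rough capacity sketch for that drive range. The parity count is purely an assumption on my part (nothing is decided yet), `usable_tb` is a hypothetical helper, and this ignores filesystem overhead and the TB vs TiB difference:

```python
def usable_tb(drives, size_tb=12, parity_drives=2):
    """Approximate usable space when `parity_drives` worth of capacity goes to redundancy."""
    if drives <= parity_drives:
        raise ValueError("need more drives than parity drives")
    return (drives - parity_drives) * size_tb

for n in (6, 8):
    # raw capacity vs. usable with the assumed two-drive redundancy
    print(f"{n} drives: {n * 12} TB raw, {usable_tb(n)} TB usable")
```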

People keep telling me a rack in the office is too noisy, but I don't buy that!

First: racks are silent, they make no noise. Things *in* them make noise, and we're going to be paying attention to that. Lower wattage everywhere possible means less to cool and longer UPS runtime.

The rack will have soundproofing and cooling - and this will likely be my first water cooling build, in the 3U server chassis for my main machine. For the file/Plex/etc. server I'd like a GPU for transcode offload and a lower TDP processor, basically: quiet unless playing.

Alrighty, we’re back from a short rest - let’s pick this back up. Time to build the 15U rack for the office:

Vertical rails in and sides on. Note for anyone doing this: I recommend a different step order: swap steps 2 and 4, since the rails easily block top screw access.

Annnnnd we’ve hit a big snag. Evidently they didn’t drill holes for the hinges on one side. Or more precisely, the side positions matter and I have to disassemble it and invert the left side, blahhhhhh fuck, what a waste of time. Instructions are just the 1 sheet from earlier.

Luckily with the vertical rails supporting, we could do some surgery without a lot of hassle…just the 2 panels. Now we’re back on track:

Cable panel installed - cords exit through here (sound baffling has a slot in it) for minimum noise leak:

Alrighty - hinges, doors, and locks installed. Fully assembled! Really not a bad experience other than the panel direction oops. I’m exhausted though, because it’s quite heavy overall…kids and school tomorrow, after that we’ll move stuff into it :)

Been lazy about this - finally settled on (ordered) a UPS setup. I'm going with a PR3000RTXL2U unit (3000VA, sinewave) with a BP48VP2U02 expansion unit (+3360VA). That should be a decent amount of backup runtime for the desktop and network gear in the bottom 4U of rack space.

Well, oops. SOMEBODY (...it was me...) read the input specs for the wrong UPS model the other day. A 30 amp circuit install for the office has now been added to the project - the UPS has a NEMA L5-30P plug, so it needs a matching L5-30R outlet.
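Back-of-envelope version of why a 20 amp circuit won't cut it. This assumes 120V nominal and simply divides the 3000VA rating by voltage; it ignores charging current, efficiency, and any code derating rules, so the spec sheet and an electrician have the final say. The function names are made up for illustration:

```python
def full_load_amps(va, volts=120.0):
    """Current drawn at a given apparent power (VA) and line voltage."""
    return va / volts

def smallest_circuit(amps, sizes=(15, 20, 30)):
    """Smallest breaker size from `sizes` that covers `amps`, or None."""
    for size in sorted(sizes):
        if amps <= size:
            return size
    return None

amps = full_load_amps(3000)  # 3000VA unit from the thread
print(f"{amps:.1f} A -> {smallest_circuit(amps)} A circuit")
```

At roughly 25 amps, a fully loaded unit is past what a 20 amp circuit can supply, hence the new 30 amp run.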

As I'm listing parts out to run a new 30 amp circuit to my office for the rack...okay, yes, the UPS I'm going with miiiiiiight be overkill. Only a little though. If we sell this house, explaining the NEMA L5-30R outlet in a bedroom will be fun at least.

If we ever sell this house, I've decided I'm going to advertise this as the ultimate guest bedroom. You could even hook up an RV.

"Why is there a dryer outlet in here?"

"That's not a dryer outlet, it's for an RV"

"..."

"It's a guest room"

First up is the battery backup expansion unit. I’m installing it on the bottom so that the plugs on the main unit are more easily accessible (being a bit higher). Rails in first:

If anyone's curious - the backup unit charges itself (it'll be on a separate 20 amp circuit already in the room). This pic isn't quite right because the main unit in my case uses a NEMA L5-30P, but same connection principle:

Still in a hardware shortage so I’ve been really lazy, but did rack the main UPS unit this afternoon. Here it is connected up. And yes, I’ll have to address that 30 amp outlet next:

Patch panel initial assembly. It’s not too beefy because local systems will be connecting via 10-25Gb SFP+/SFP28 cabling - this is purely for uplinks to other devices.

Time for a 20 amp & 30 amp circuit pair for the new UPS and expansion units…and since we’re crawling around in the attic, another Cat 6a run to the basement. Let’s do this.

Step 1: lay out all the tools & supplies you know you’ll use so there’s no pausing to go hunting.

In retrospect, it’s obvious I need to tear the office apart to get at where I want these outlets easily…so I guess we’re switching to the rack today too. Starting to tear out the tech that’s not required for the day:

But, the lines are run - breaking for dinner and probably the day. I was lying in insulation for a while fighting that one, ugh. My brother-in-law was with me for the latter part of this…can’t imagine doing that one alone.
