New review in @TrendsCognSci “The Psychology of Fake News” w @DG_Rand

We synthesize research on belief in, sharing of, & interventions against misinformation, with a focus on false/misleading news cell.com/trends/cogniti…

And now a thread that synthesizes our synthesis!

1/

We make three major points in the paper, which I will summarize here. However, there are several other elements of the paper that may be of interest. For example: a short review of the prevalence of fake news, a discussion of the heuristics that people use (such as familiarity), and more!

The first major point is that, contrary to narratives that focus on political partisanship/motivated reasoning, we find that a lot of belief in fake news can be explained by mere lazy thinking (overreliance on intuition).

For example, individual differences in reflective thinking (measured via the Cognitive Reflection Test, among others) are consistently associated with a better ability to distinguish between true and false news, *regardless* of whether the news is consistent *or* inconsistent with one's political ideology.

In a combined analysis of 14 studies (N > 15k), the effect of cognitive reflection on discernment is 2x larger than the effect of political consistency. (And in any case, the political-consistency effect shows that people are *better* at distinguishing between true and false news when it is ideologically *consistent*.)

There's also experimental evidence that supports the conclusion that analytic thinking is associated with increased truth discernment and not increased polarization (as assumed by motivated reasoning accounts). See:

https://twitter.com/bencebago/status/1220099034465144838

Of course, if one looks at *overall* belief, people find politically consistent news more plausible regardless of whether it's true or false. So, people believe things that are consistent with their ideology, and (separately) being reflective is associated with more *accurate* beliefs.

Importantly, the general bias toward believing things that are consistent with one's ideology is not evidence of a causal role of political identities per se. I can't go into detail with so few characters, but the short version is that there are many confounds.

https://twitter.com/Ben_Tappin/status/1332392560745189379

The 2nd major point is that social media sharing does not necessarily imply belief. People are often quite good at distinguishing between true and false news when asked to do so directly. However, when deciding what to share, they barely make this distinction.

I.e., RT!=endorsement is actually true

This indicates that, again, the spread of fake news may be driven (to some extent) by mere inattention to accuracy. People may be getting distracted from thinking about accuracy when deciding what to share. (Note: people *say* that accuracy is important to them.)

This brings me to the third major point: points 1 & 2 suggest that people may make better choices if they slow down and consider accuracy before sharing. And, in fact, there is good evidence that this is the case. See:

https://twitter.com/DG_Rand/status/1372217700626411527?s=20

The broader conclusion is that interventions against misinformation should be informed by an understanding of the underlying psychological mechanisms. Things that intuitively seem like they should work may not be effective (and vice versa). nytimes.com/2020/03/24/opi…

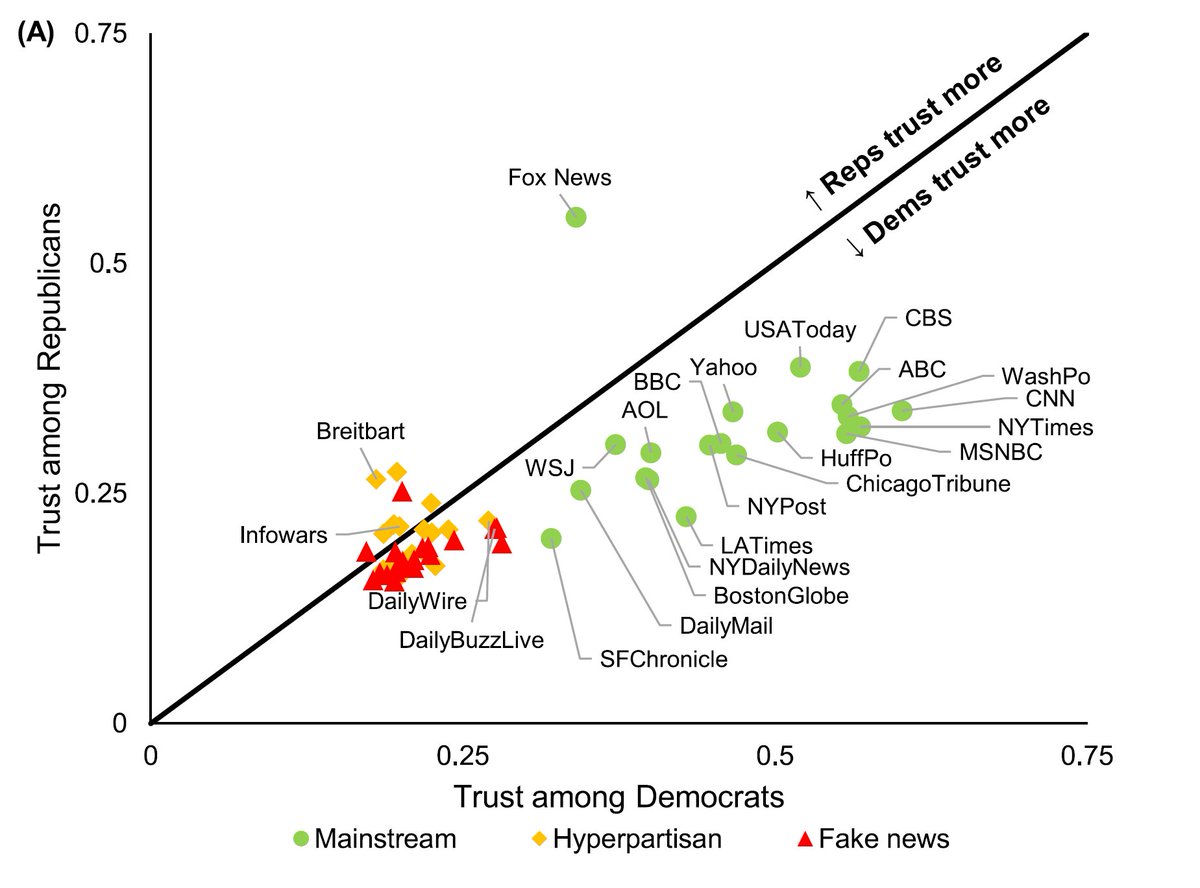

For example, people are surprisingly good at distinguishing between high- and low-quality news sources (when asked to do so; that doesn't mean they do it in practice, see misinforeview.hks.harvard.edu/wp-content/upl…), and crowdsourced judgments of news headlines could be used to inform algorithms.

https://twitter.com/DG_Rand/status/1314212731826794499?s=20

Another example is that fact-checks that directly follow a news headline actually work better than ones that come directly before it (many people would assume that mentally preparing people for a falsehood works better, but it doesn't).

https://twitter.com/nadiabrashier/status/1354127505150631941?s=20

There is still so much to learn about this topic, though. For the psychologists in the crowd, misinformation reveals a lot about how our minds work. And, at the same time, it’s a nascent area where we need research to inform policy. Hopefully, this review is outdated in 5 years!

This paper is a capstone of the amazingly fun collaboration between me and @DG_Rand (+ many others) that started back in 2016. We're still going and hopefully will be for a long time! A frequently updated list of our misinfo (and related) projects is here: docs.google.com/document/d/1k2…
