This is a major breakthrough 👇

We're now using only seq^2 (4Mi) elements for each attention tensor instead of batch*heads*seq^2 (128Gi) for a PanGu-Alpha-200B-sized model, without reducing performance or the ability to scale.
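
A quick sanity check of those numbers. It assumes seq = 2048 (since 2048² = 4Mi). The thread doesn't give batch and heads separately, so the sketch only uses their product, which the two quoted figures imply is 32,768.

```python
# Worked check of the element counts quoted above.
# Assumption: seq = 2048, because 2048**2 == 4 Mi.
MI = 2**20
GI = 2**30
SEQ = 2048

def shared_spatial_elements(seq: int) -> int:
    """One seq x seq matrix, shared across every example and head."""
    return seq * seq

def per_head_attention_elements(batch_times_heads: int, seq: int) -> int:
    """A separate seq x seq attention map for every (example, head) pair."""
    return batch_times_heads * seq * seq

shared = shared_spatial_elements(SEQ)
# batch * heads is not quoted directly; this is the product the figures imply.
implied_batch_times_heads = (128 * GI) // shared
full = per_head_attention_elements(implied_batch_times_heads, SEQ)

print(shared // MI)                 # 4    (Mi)
print(implied_batch_times_heads)    # 32768
print(full // GI)                   # 128  (Gi)
```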

https://twitter.com/Hanxiao_6/status/1394742841033641985

I'll implement it immediately in our GPT codebase and share its performance on 2B-equivalent models.

@Hanxiao_6, is the split across channels necessary? You briefly describe it as "effective". Is that on TPU?
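
For context, the gMLP paper's spatial gating unit splits each token's channels into two halves, Z1 and Z2, and computes s(Z) = Z1 ⊙ f(Z2), with f(Z2) = W·Z2 + b, where W is a single seq×seq matrix shared across channels. Below is a minimal pure-Python sketch of that split. The names are illustrative and don't come from any codebase, and there's no causal mask here.

```python
from typing import List, Tuple

Matrix = List[List[float]]  # x[token][channel]

def split_channels(z: Matrix) -> Tuple[Matrix, Matrix]:
    """Split every token's channel vector into two equal halves (z1, z2)."""
    half = len(z[0]) // 2
    return [row[:half] for row in z], [row[half:] for row in z]

def spatial_projection(w: Matrix, b: List[float], z2: Matrix) -> Matrix:
    """f(z2) = W z2 + b: mixes along the sequence axis.

    w is seq x seq and shared by all channels; b has one entry per position.
    """
    seq, ch = len(z2), len(z2[0])
    return [[sum(w[i][j] * z2[j][c] for j in range(seq)) + b[i]
             for c in range(ch)]
            for i in range(seq)]

def spatial_gating_unit(z: Matrix, w: Matrix, b: List[float]) -> Matrix:
    """s(z) = z1 * f(z2), element-wise; the output has half the input channels."""
    z1, z2 = split_channels(z)
    gate = spatial_projection(w, b, z2)
    return [[a * g for a, g in zip(r1, rg)] for r1, rg in zip(z1, gate)]

z = [[1.0, 2.0, 3.0, 4.0],
     [5.0, 6.0, 7.0, 8.0]]
w = [[1.0, 0.0],
     [1.0, 1.0]]
print(spatial_gating_unit(z, w, [0.0, 0.0]))  # [[3.0, 8.0], [50.0, 72.0]]
```

Without the split, the gate would be computed from the same channels it multiplies. The split gives the gate (Z2) and the multiplicative bypass (Z1) separate inputs.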

I can't figure out what "small initialization" means.

I finally arrived at 0.02 / context_size, which gives the blue curve (500M body + 400M embedding).

It looks very promising, but it still NaNs after just 3000 steps with lr=1e-5.
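
Here is a sketch of the initialization this refers to. The gMLP paper initializes W near zero and b to ones, so f(Z2) ≈ 1 and the unit starts out passing Z1 through almost unchanged. Treating 0.02 / context_size as the standard deviation of a normal distribution is an assumption. The thread doesn't say whether it's a std or a uniform range.

```python
import random
from typing import List, Tuple

def init_spatial_params(context_size: int, scale: float = 0.02, seed: int = 0
                        ) -> Tuple[List[List[float]], List[float]]:
    """Near-zero seq x seq weight W and an all-ones bias b.

    Assumption: scale / context_size is used as the std of a normal draw.
    """
    rng = random.Random(seed)
    std = scale / context_size
    w = [[rng.gauss(0.0, std) for _ in range(context_size)]
         for _ in range(context_size)]
    b = [1.0] * context_size  # ones => f(z2) = W z2 + b starts close to 1
    return w, b

w, b = init_spatial_params(context_size=128)
print(len(w), len(w[0]), b[0])  # 128 128 1.0
```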

The orange one is the baseline with 1.7B + 400M.

The red one is the same as the orange run but uses ReZero as well.

arxiv.org/abs/2003.04887
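
ReZero (the paper linked above) replaces normalized residual blocks with x + α·F(x), where α is a learnable scalar initialized to 0. Every block therefore starts as the identity. A minimal sketch, using a hypothetical lambda as a stand-in sublayer:

```python
from typing import Callable, List

Vector = List[float]

class ReZeroResidual:
    """x_next = x + alpha * F(x), with a scalar alpha initialised to 0."""

    def __init__(self, sublayer: Callable[[Vector], Vector]):
        self.sublayer = sublayer
        self.alpha = 0.0  # trainable in a real model; starts at zero

    def __call__(self, x: Vector) -> Vector:
        fx = self.sublayer(x)
        return [xi + self.alpha * fi for xi, fi in zip(x, fx)]

block = ReZeroResidual(lambda v: [2.0 * t for t in v])  # hypothetical sublayer
print(block([1.0, -1.0]))  # [1.0, -1.0]: identity at initialisation
block.alpha = 0.5
print(block([1.0, -1.0]))  # [2.0, -2.0]
```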

Same problem (it took 4 hours).

Normalization, activation, initialization, and everything else are as described in the paper. The only three differences are:

1) This uses ReZero

2) The single-headed attention is not added

3) It's masked (GPT)
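
Difference 3) requires causal token mixing. The thread doesn't say how the masking was done. One straightforward option, sketched here as an assumption, is to zero the upper triangle of the seq×seq spatial weight so that position i only sees positions j ≤ i:

```python
from typing import List

def causal_mask_spatial(w: List[List[float]]) -> List[List[float]]:
    """Zero every W[i][j] with j > i so position i only mixes positions <= i.

    Applied to the seq x seq spatial (token-mixing) matrix before use,
    this keeps the model autoregressive, GPT-style.
    """
    n = len(w)
    return [[w[i][j] if j <= i else 0.0 for j in range(n)] for i in range(n)]

full = [[1.0, 2.0, 3.0],
        [4.0, 5.0, 6.0],
        [7.0, 8.0, 9.0]]
print(causal_mask_spatial(full))
# [[1.0, 0.0, 0.0], [4.0, 5.0, 0.0], [7.0, 8.0, 9.0]]
```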

I'll start another run with MLP-Mixer.

All runs clip the gradient norm at 0.3, compared to 1 above.

Pink is the same config as the light blue one above.

Red is the same as Pink, but with 8 MLP "heads".

Blue is the same as Pink, but with beta2=0.95.

All runs have roughly 1B params
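
The clipping below assumes that "gradient norm" here means the global L2 norm over all parameters, which is the usual clip-by-global-norm scheme. Under that assumption, clipping at 0.3 looks roughly like this:

```python
import math
from typing import List

def clip_by_global_norm(grads: List[List[float]], max_norm: float = 0.3
                        ) -> List[List[float]]:
    """Scale all gradient tensors by one common factor so that their joint
    L2 norm is at most max_norm; gradients already under the limit are kept."""
    total = math.sqrt(sum(g * g for tensor in grads for g in tensor))
    if total <= max_norm:
        return [list(tensor) for tensor in grads]
    scale = max_norm / total
    return [[g * scale for g in tensor] for tensor in grads]

grads = [[3.0, 0.0], [0.0, 4.0]]      # global norm = 5.0
clipped = clip_by_global_norm(grads)   # every entry scaled by 0.3 / 5.0
```

Because one factor scales every tensor, the update direction is preserved. Only its length is capped.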

For comparison, here is MLP-Mixer against the red run above.

Green and light blue use 8 "heads".

Light blue and red have ReZero.

So far, Mixer looks much more stable and could benefit from less gradient clipping and a higher learning rate.
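
The thread doesn't define MLP "heads". The sketch below uses one plausible reading, purely as an assumption: split the channels into h equal groups and give each group its own seq×seq token-mixing matrix. The real MLP-Mixer token-mixing MLP has two linear layers with a GELU in between. This sketch simplifies it to a single linear map per head.

```python
from typing import List

Matrix = List[List[float]]  # x[token][channel]

def token_mix(w: Matrix, x: Matrix, channels: range) -> Matrix:
    """Apply one seq x seq mixing matrix to the selected channels of x."""
    seq = len(x)
    return [[sum(w[i][j] * x[j][c] for j in range(seq)) for c in channels]
            for i in range(seq)]

def multi_head_token_mixing(head_weights: List[Matrix], x: Matrix) -> Matrix:
    """Hypothetical multi-"head" token mixing: channels are split into
    len(head_weights) equal groups, each mixed by its own seq x seq matrix,
    and the results are concatenated back along the channel axis."""
    heads, ch = len(head_weights), len(x[0])
    if ch % heads:
        raise ValueError("channel count must be divisible by the number of heads")
    size = ch // heads
    per_head = [token_mix(w, x, range(h * size, (h + 1) * size))
                for h, w in enumerate(head_weights)]
    return [[v for out in per_head for v in out[i]] for i in range(len(x))]

x = [[1.0, 10.0],
     [2.0, 20.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
swap = [[0.0, 1.0], [1.0, 0.0]]
print(multi_head_token_mixing([identity, swap], x))  # [[1.0, 20.0], [2.0, 10.0]]
```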

While gMLP turned out to be disappointing, I'm incredibly excited to share my first results of MLP-Mixer.

Orange - Baseline (2B)

DarkBlue - gMLP (1B)

LightBlue - MLP-Mixer (1B)

Green - MLP-Mixer (1B, 10x learning rate)

(The baseline diverged in <2k steps with a higher LR)

The runs above all have the same batch size, resulting in 1.3B tokens seen every 10k steps.

The models themselves are wide and shallow (4 blocks) to improve training efficiency during the initial test.

I'll launch two more extensive runs in a few minutes.

Even with a massive warmup, gMLP (orange) and the tanh-constrained gMLP (light blue) still hit NaN after a few thousand steps. At that point, the learning rate had barely crossed 5e-5.
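
A plain linear warmup shows why a long warmup keeps the learning rate tiny for thousands of steps. The peak LR (1e-3) and warmup length (50k steps) are hypothetical values chosen for illustration, not the settings used in these runs:

```python
def warmup_lr(step: int, peak_lr: float, warmup_steps: int) -> float:
    """Linear warmup from 0 to peak_lr over warmup_steps, then constant."""
    if warmup_steps <= 0:
        return peak_lr
    return peak_lr * min(1.0, step / warmup_steps)

# Hypothetical settings, for illustration only.
PEAK_LR, WARMUP_STEPS = 1e-3, 50_000
for step in (1_000, 2_500, 50_000, 60_000):
    print(step, warmup_lr(step, PEAK_LR, WARMUP_STEPS))
```

With those hypothetical numbers, step 2,500 gives 5e-5, and the peak is only reached at step 50,000.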

Unfortunately, the attention baseline (dark blue) is starting to look much better than MLP-Mixer (red).

All models have the same number of parameters (2.7B) and a depth of 16 attention-ff or ff-mixing blocks.

At this point, both models have seen barely 1.5B tokens, so the comparison is still open.

The training runs OOMed after a few thousand steps, but here is the progress they made.

Light/Dark Blue - 2.7B, attention

Red/Orange - 6.5B/2.6B, MLP-Mixer

Light blue and red also have a 4x larger context (2048 instead of 512) than the other two runs.

I only just noticed that I cut off the x-axis in the step-wise plot.

In all four training runs, 1000 steps roughly equate to 260 million tokens seen. At this point, the models have just crossed 1B and 2B tokens, respectively.
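
At roughly 260M tokens per 1,000 steps, reaching the 10B mark takes about 38.5k steps. The helper below does that arithmetic using only the approximate rate quoted above:

```python
import math

TOKENS_PER_1000_STEPS = 260e6  # approximate rate quoted in the thread

def steps_to_reach(target_tokens: float,
                   tokens_per_1000_steps: float = TOKENS_PER_1000_STEPS) -> int:
    """Optimizer steps needed to see target_tokens, rounded up."""
    tokens_per_step = tokens_per_1000_steps / 1000
    return math.ceil(target_tokens / tokens_per_step)

print(steps_to_reach(10e9))  # 38462
```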

I'll report back when they're above 10B.

I'm excited to share a new set of runs. This time, without control flow issues.

* gMLP: blue run with initial plateau (811M)

* Transformer: pink (1.5B)

* Synthesizer: blue curve that follows pink (1.4B)

* CONTAINER: red (1.65B)

* MLP-Mixer: orange (677M)

All models use a constant learning rate of 1e-3, a batch size of 4096, adaptive gradient clipping of 0.003, and a context size of 128 characters.

With that, the models have all seen 2.5B to 3.2B characters (or 19B to 25B when normalized), which should make them very comparable.
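
Adaptive gradient clipping (AGC, from the NFNets paper) clips each unit's gradient relative to the norm of its weights instead of using a fixed global threshold. The sketch below makes three assumptions: the 0.003 above is AGC's clipping factor λ, "units" are matrix rows, and the paper's ε = 1e-3 serves as a floor on the weight norm.

```python
import math
from typing import List

def _norm(v: List[float]) -> float:
    return math.sqrt(sum(x * x for x in v))

def adaptive_grad_clip(weights: List[List[float]], grads: List[List[float]],
                       clipping: float = 0.003, eps: float = 1e-3
                       ) -> List[List[float]]:
    """Unit-wise AGC: each gradient row is rescaled so that
    ||g_row|| / max(||w_row||, eps) <= clipping."""
    clipped = []
    for w_row, g_row in zip(weights, grads):
        max_norm = clipping * max(_norm(w_row), eps)
        g_norm = _norm(g_row)
        if g_norm > max_norm:
            scale = max_norm / g_norm
            clipped.append([g * scale for g in g_row])
        else:
            clipped.append(list(g_row))
    return clipped

w = [[3.0, 4.0]]                                  # ||w_row|| = 5.0
big = adaptive_grad_clip(w, [[0.0, 1.0]])         # limit = 0.003 * 5.0 = 0.015
small = adaptive_grad_clip(w, [[0.006, 0.008]])   # norm 0.01, under the limit
```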

Considering that MLP-Mixer has by far the best convergence (likely due to its significantly improved gradient flow) while running twice as fast and using the least memory, I'd recommend seriously looking into these models for any kind of task.

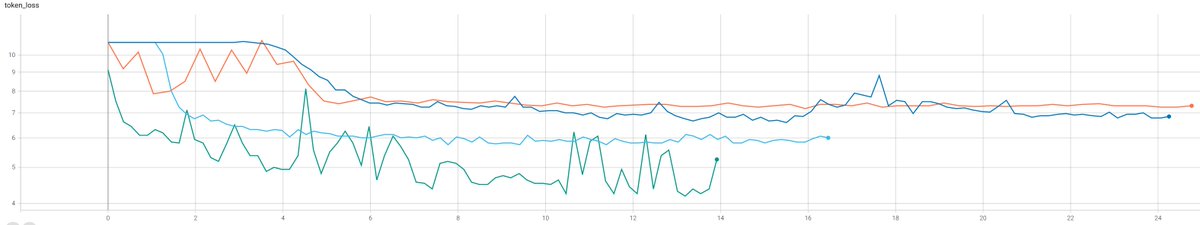

I ran some more tests with Mixer.

This time, SM3 vs Adam.

Neither optimizer got custom hyperparameter tuning (the Adam hyperparameters come straight from PanGu-Alpha, SM3's from the paper).

All runs have a batch size of 256 and a context of 2048, so 1000 steps = 524M chars.
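
The 524M figure is just batch × context × steps:

```python
def chars_per_step(batch_size: int, context: int) -> int:
    """Characters consumed by one optimizer step."""
    return batch_size * context

per_step = chars_per_step(256, 2048)
print(per_step)          # 524288
print(per_step * 1000)   # 524288000, i.e. ~524M chars per 1000 steps
```

The earlier configuration in this thread (batch 4096, context 128) gives exactly the same 524,288 characters per step.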

Just looking at the curves, it becomes apparent how much more stable @_arohan_'s SM3 with Nesterov momentum is.

SM3 with momentum uses only half the memory of Adam and less compute, yet it is more stable, making it perfect for training and finetuning.
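
The memory saving comes from SM3's accumulators. SM3 (the SM3-II variant in the paper) keeps one accumulator per row and one per column of a weight matrix, instead of Adam's two full m×n moment buffers. A momentum buffer adds back one m×n tensor, which lands the total at roughly half of Adam's. Below is a minimal sketch of one SM3-II step on a single matrix. The Nesterov momentum used in the runs is left out for brevity, and the memory helper simply counts floats of optimizer state per matrix.

```python
import math
from typing import Dict, List

Matrix = List[List[float]]

def sm3_step(w: Matrix, g: Matrix, row_acc: List[float], col_acc: List[float],
             lr: float = 0.1) -> None:
    """One in-place SM3-II update for an m x n matrix (no momentum).

    Each entry's accumulator is min(row acc, col acc) + g^2; afterwards the
    row/col accumulators store the max over the entries they cover.
    """
    m, n = len(w), len(w[0])
    nu = [[min(row_acc[i], col_acc[j]) + g[i][j] ** 2 for j in range(n)]
          for i in range(m)]
    for i in range(m):
        for j in range(n):
            if nu[i][j] > 0:  # skip entries that have never seen a gradient
                w[i][j] -= lr * g[i][j] / math.sqrt(nu[i][j])
    for i in range(m):
        row_acc[i] = max(nu[i])
    for j in range(n):
        col_acc[j] = max(nu[i][j] for i in range(m))

def optimizer_state_floats(m: int, n: int) -> Dict[str, int]:
    """Adam keeps two m x n moments; SM3 with momentum keeps one m x n
    momentum buffer plus m + n accumulators."""
    return {"adam": 2 * m * n, "sm3_momentum": m * n + m + n}

state = optimizer_state_floats(4096, 4096)
print(state["sm3_momentum"] / state["adam"])  # just over 0.5
```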

For the reasons mentioned above, I'd love to see SM3 used more widely.

If you want to try it out, check the code, or even reproduce the results, you can find everything here: github.com/tensorfork/OBS….
