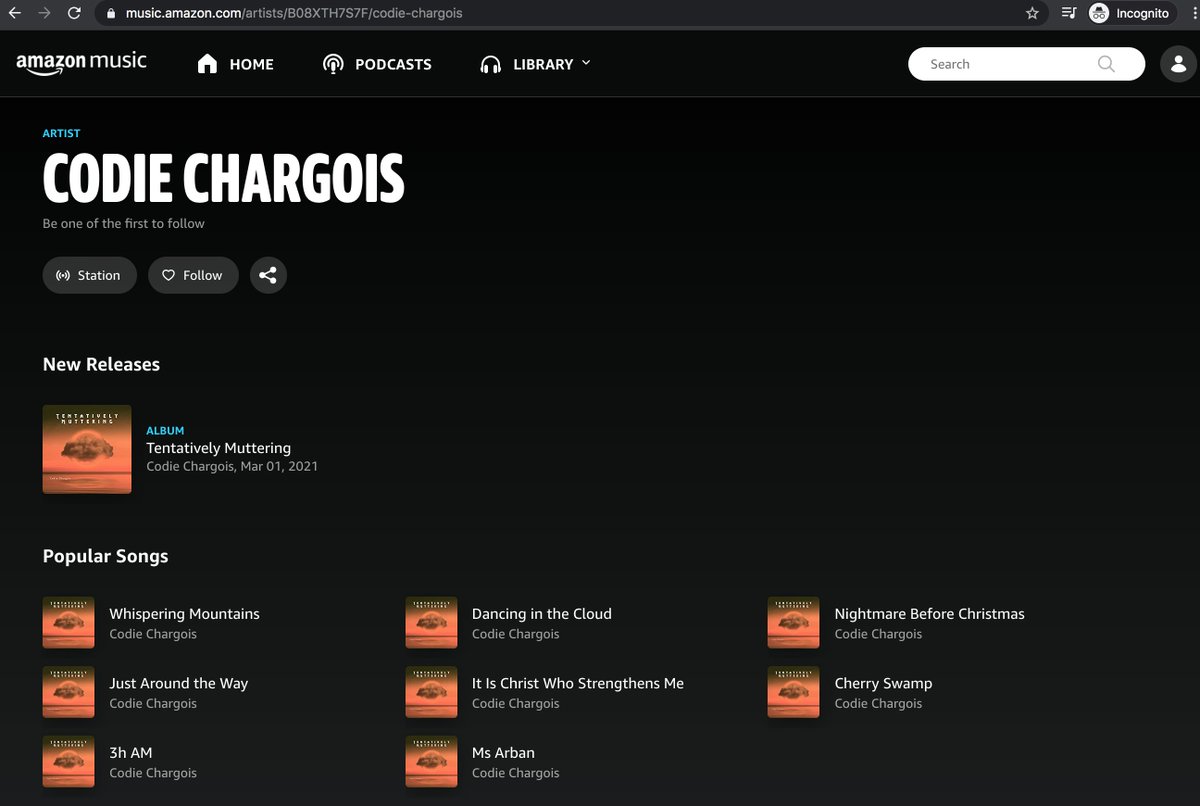

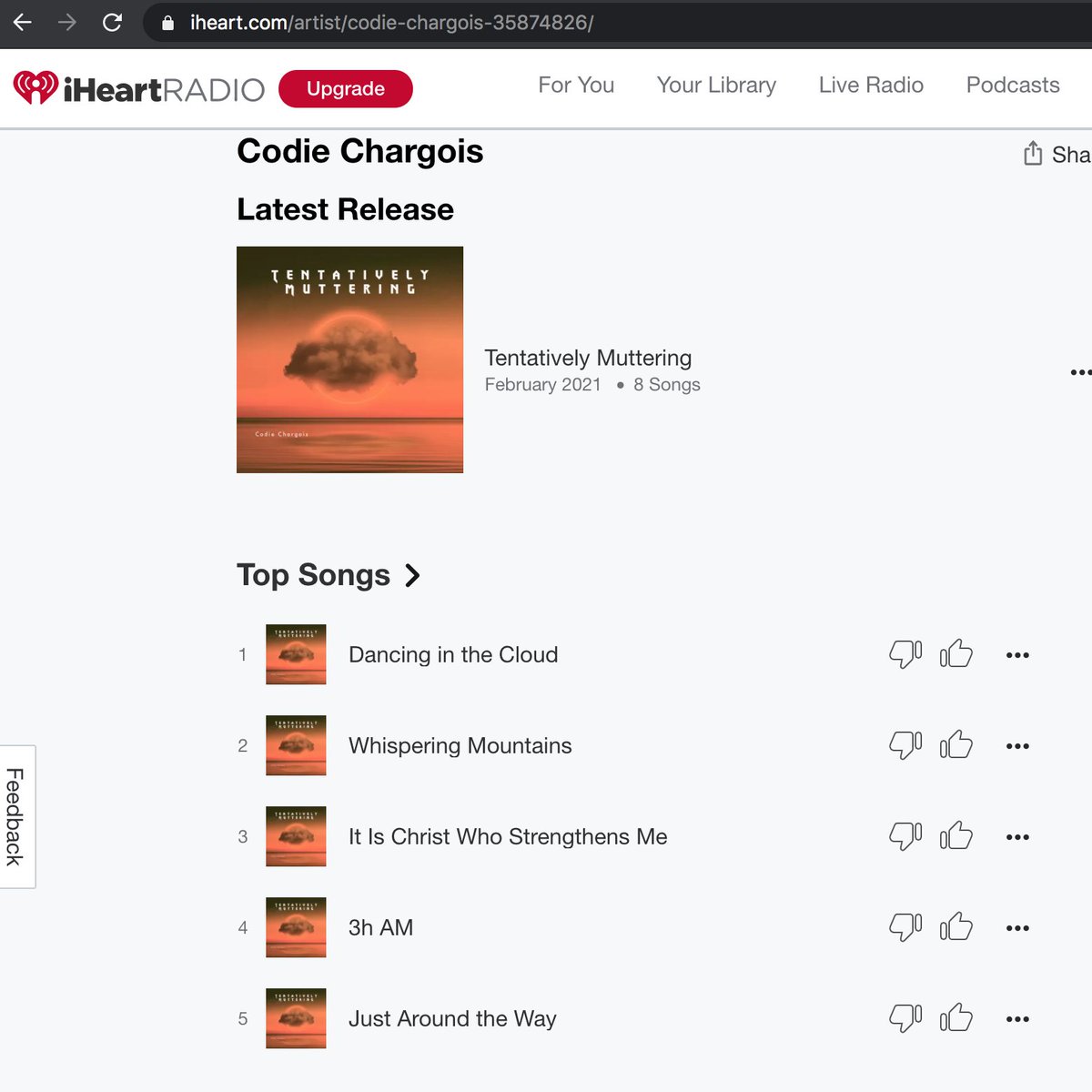

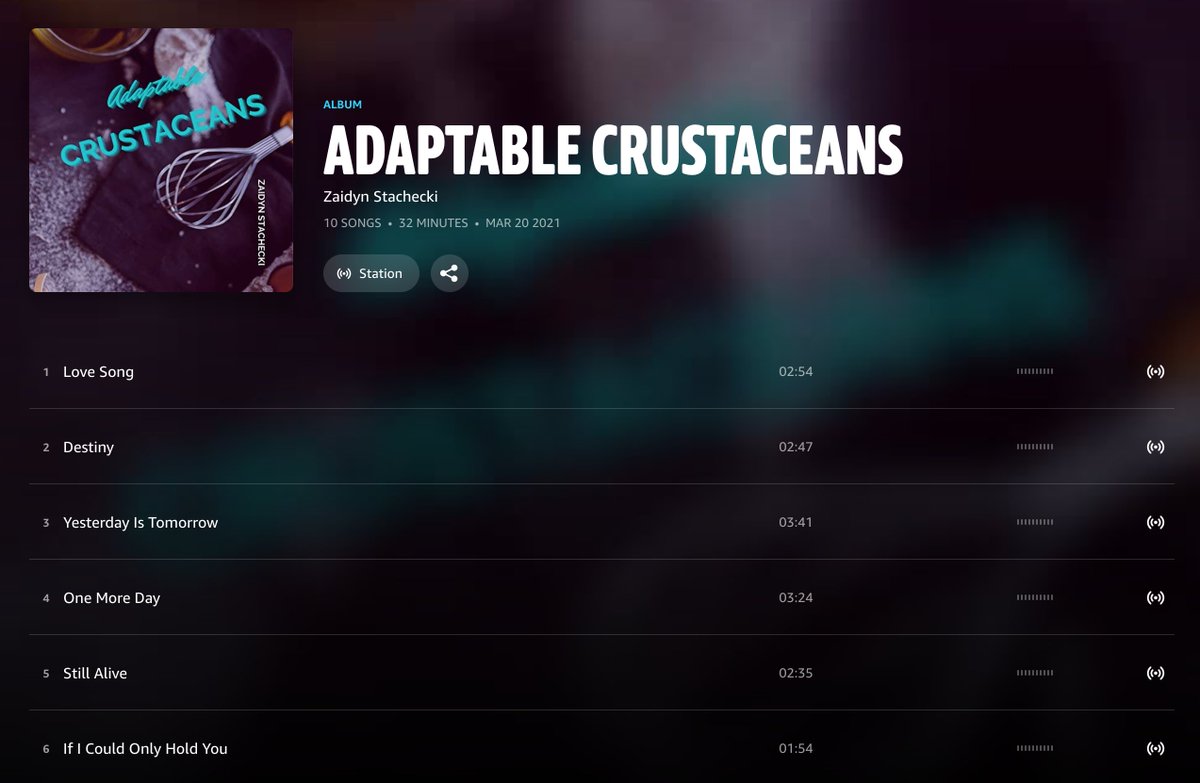

Meet @ChargoisCodie, whose profile pic is the cover of Codie Chargois's 2021 album "Tentatively Muttering", available on Amazon, iHeartRadio, and YouTube. One might infer that this is Codie Chargois's official Twitter account, but things are not as they seem.

cc: @ZellaQuixote

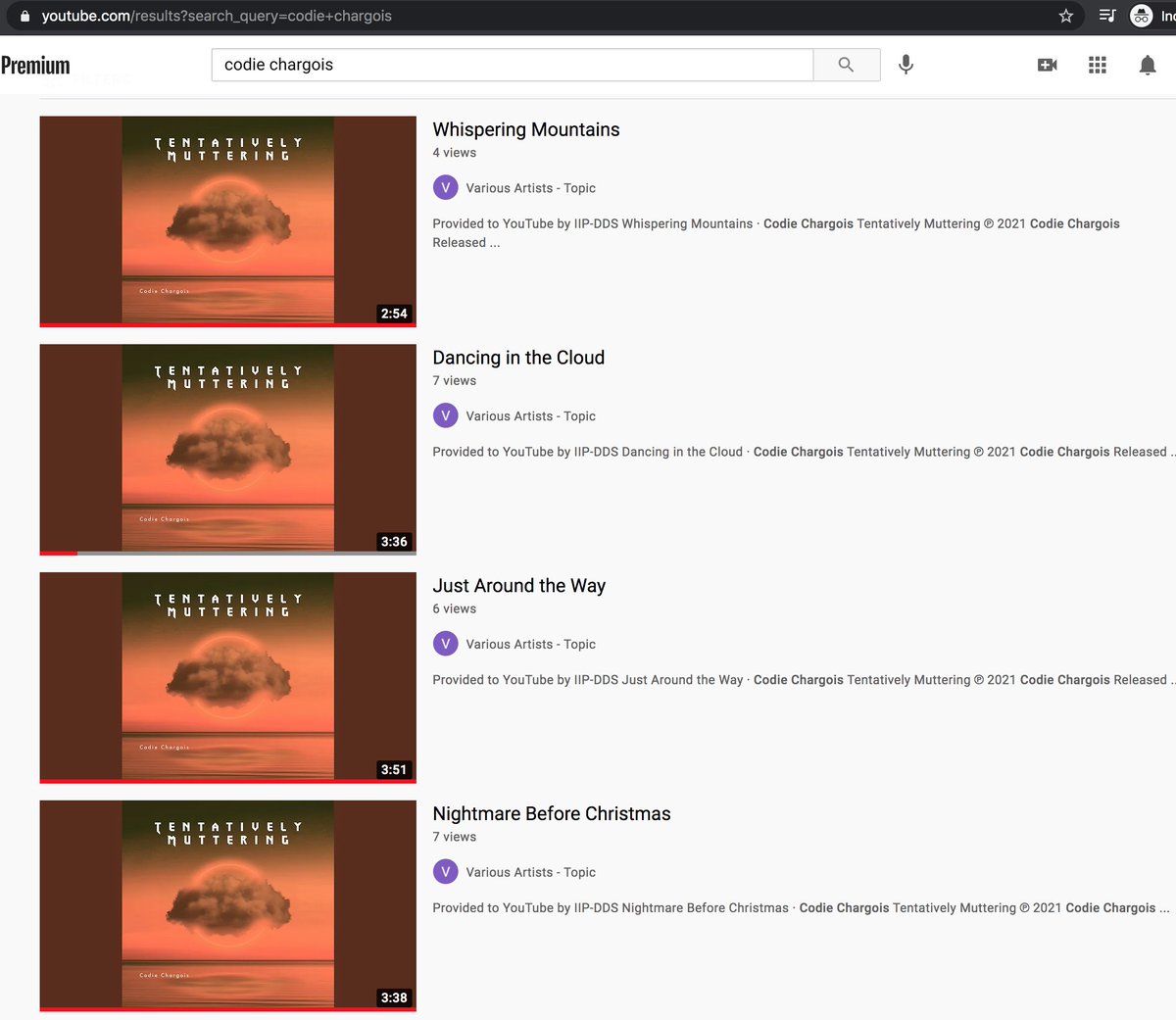

The Codie Chargois tracks on YouTube are all covers that have been given different names than the original songs. Two are identical: "Just Around the Way" and "3h AM" are the same version of "Ring of Fire". One more thing...

All of the songs allegedly recorded by "Codie Chargois" appear to have actually been recorded by 39 WEST, an Ohio country band (reverbnation.com/39west). Perhaps "Tentatively Plagiarizing" would've been a better album title than "Tentatively Muttering".

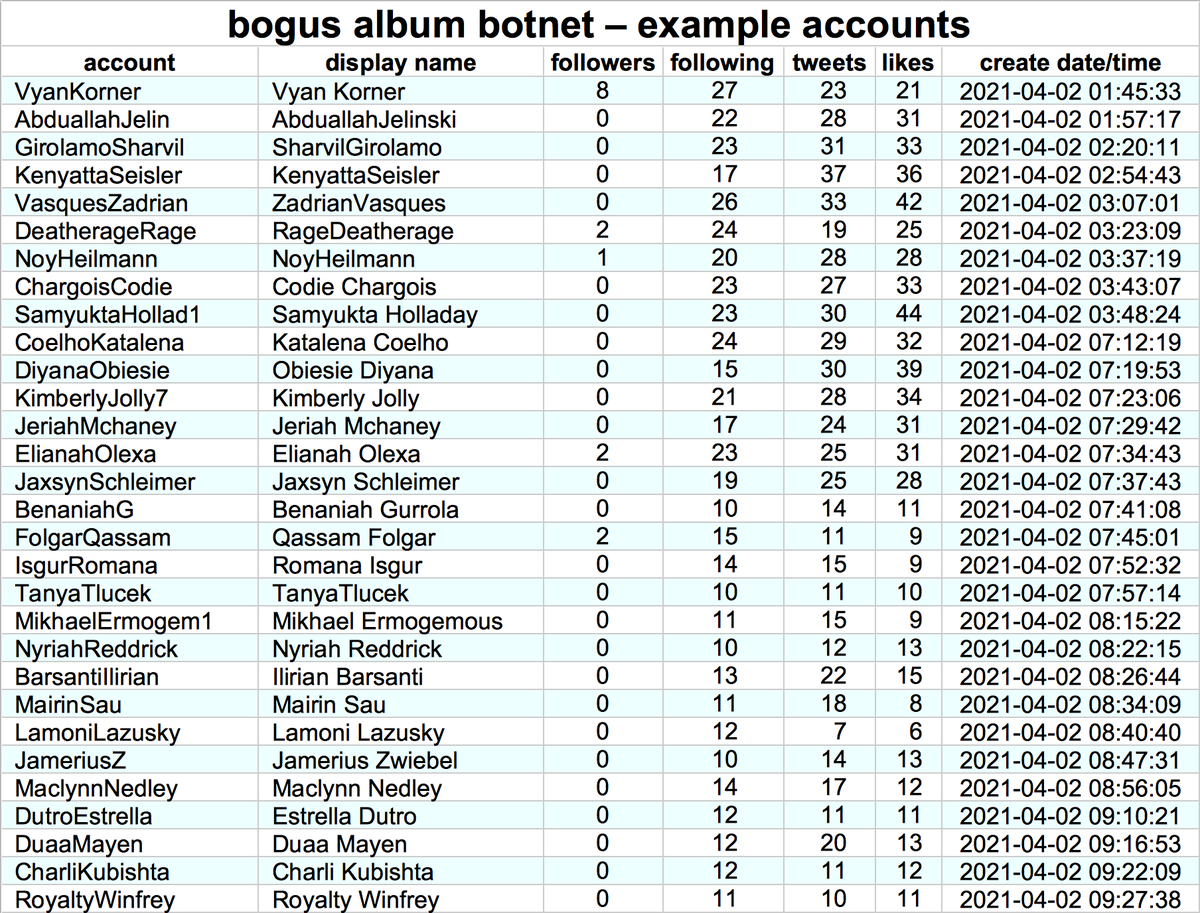

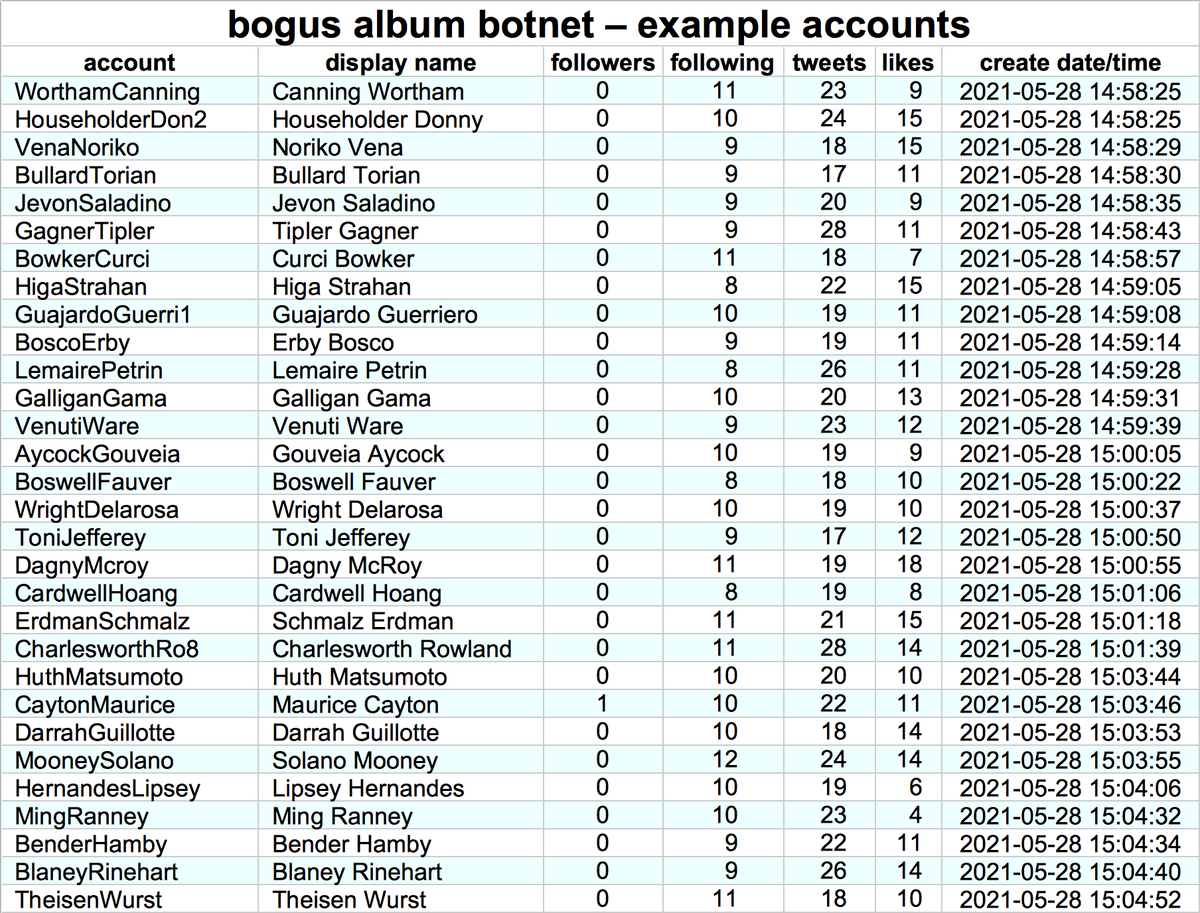

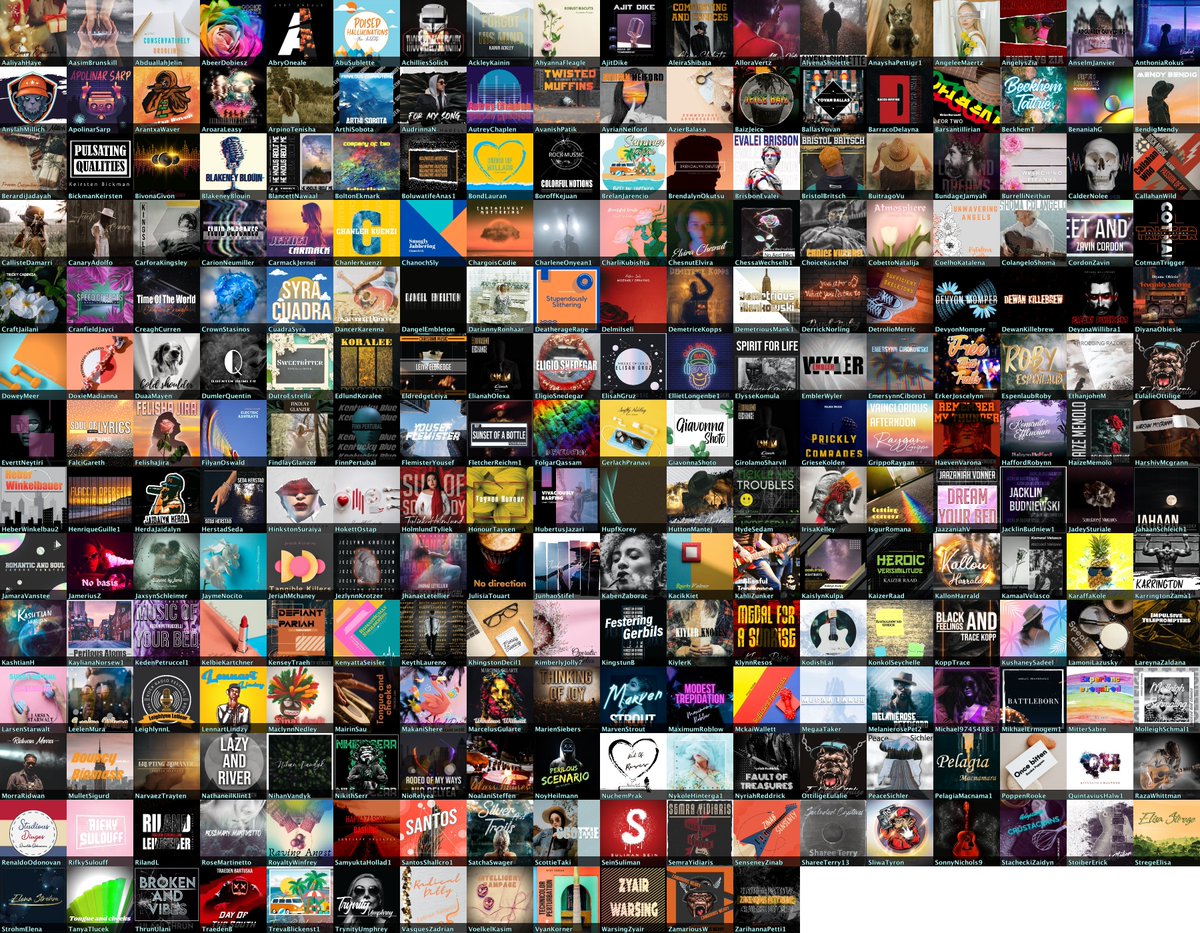

As it turns out, @ChargoisCodie is not alone. One of the accounts it follows (@SantosShallcro1) has quite a few followers with "album covers" as profile pics. The remainder have either default profile pics or GAN-generated face pics similar to those from thispersondoesnotexist.com.

These accounts are part of a botnet consisting of 344 accounts created within the last four months. Five of the accounts (@ThrunUlani, @AckleyKainin, @alciGareth, @SantosShallcro1, and @ErkerJoscelynn) are followed by the majority of the remainder.
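One way to spot hub accounts like these is to count, for each account, how many of the other accounts in the network follow it. The following is a minimal sketch, not the method actually used for this thread: the `find_hubs` function, the `toy_network` data, and the account names in it are all hypothetical, and it assumes the follow lists have already been collected into a dictionary.

```python
from collections import Counter


def find_hubs(following, threshold=0.5):
    """Return accounts followed by more than `threshold` of the other accounts.

    `following` maps each account name to the set of accounts it follows.
    Only follows between accounts inside the network are counted.
    """
    accounts = set(following)
    if len(accounts) < 2:
        return []
    counts = Counter()
    for follower, followed in following.items():
        for target in set(followed):
            if target in accounts and target != follower:
                counts[target] += 1
    others = len(accounts) - 1  # an account cannot follow itself
    return sorted(a for a, c in counts.items() if c / others > threshold)


# Hypothetical toy network (not real data from the botnet).
toy_network = {
    "hub_a": {"hub_b"},
    "hub_b": {"hub_a"},
    "bot_1": {"hub_a", "hub_b"},
    "bot_2": {"hub_a", "hub_b", "bot_1"},
    "bot_3": {"hub_a"},
}
hubs = find_hubs(toy_network)
print(hubs)
```

In this toy example, `hub_a` and `hub_b` are each followed by more than half of the other accounts, while `bot_1` is not.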

As mentioned earlier, this botnet uses three types of profile pics:

• album covers (likely bogus) - 246 accounts

• default pics - 53 accounts

• GAN-generated faces - 45 accounts

(GAN = "generative adversarial network", the AI technique used by thispersondoesnotexist.com)

Some but not all of the album covers actually have corresponding albums (at least some of the content of which is plagiarized and renamed) available on music platforms, all published within the last few months. The album names are amazing, though.

As is the case with unmodified GAN-generated face pics, the major facial features (particularly the eyes) are in the exact same spot on each of the 45 GAN-generated profile pics. This trait becomes obvious when the images are blended.
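Blending here can be as simple as averaging the images pixel by pixel: features that sit at the same coordinates in every image stay sharp, while everything else washes out. Below is a rough sketch of that idea, assuming each image has already been decoded into a grid of grayscale values of identical size (in practice an imaging library would handle the loading). The `blend_images` and `synthetic_face` helpers and the `EYES` coordinates are made up for illustration.

```python
def blend_images(images):
    """Average same-sized grayscale images (lists of rows of 0-255 values)."""
    if not images:
        raise ValueError("need at least one image")
    height, width = len(images[0]), len(images[0][0])
    for img in images:
        if len(img) != height or any(len(row) != width for row in img):
            raise ValueError("all images must share the same dimensions")
    return [
        [sum(img[y][x] for img in images) / len(images) for x in range(width)]
        for y in range(height)
    ]


# Hypothetical (row, column) eye positions shared by every synthetic face.
EYES = [(1, 1), (1, 3)]


def synthetic_face(background, size=5):
    """Build a stand-in 'face': uniform background with dark eye pixels."""
    img = [[background] * size for _ in range(size)]
    for y, x in EYES:
        img[y][x] = 0
    return img


# Three stand-in faces that differ everywhere except the eye positions.
faces = [synthetic_face(b) for b in (90, 150, 210)]
blended = blend_images(faces)

for row in blended:
    print(" ".join(f"{value:5.1f}" for value in row))
```

In the printed grid, the eye positions remain fully dark because every input agrees there, while the differing backgrounds average out to a uniform gray.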

What does this network actually tweet? The majority of its content is a mix of repetitive replies to (5205/8287 tweets, 62.8%) and retweets of (2449/8287 tweets, 29.6%) other bots in the network and K-pop accounts.
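The shares above are simple ratios of tweet counts. As a quick sketch of the arithmetic, using the counts quoted in this thread (the `tweet_shares` helper and the "other" bucket are additions for illustration, not categories from the original analysis):

```python
def tweet_shares(counts, total):
    """Return each category's share of `total` as a percentage, one decimal."""
    shares = {name: round(100 * n / total, 1) for name, n in counts.items()}
    # Whatever is not covered by the named categories goes into "other".
    remainder = total - sum(counts.values())
    shares["other"] = round(100 * remainder / total, 1)
    return shares


# Counts taken from the thread: 8287 tweets in total.
shares = tweet_shares({"replies": 5205, "retweets": 2449}, total=8287)
for name, pct in shares.items():
    print(f"{name}: {pct}%")
```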

H/T @EJGibney/@FakeDrSebaceous for the lead.

*typo in this tweet - alciGareth should be @FalciGareth.

https://twitter.com/conspirator0/status/1400644806846693378
