Machine Learning Monthly for May 2021 is live (video & audio)!

The latest and greatest (but not always the latest) from the machine learning world in the past month + plenty of dancing.

This month we've got...

Huuuuuuge updates to @TensorFlow:

• TensorFlow Lite models now work with TensorFlow.js (train once, deploy twice)

• Google's on-device machine learning page tailors ML guides for your smaller device needs

• TF Lite model maker library helps you train on-device models faster

• TensorFlow Hub gets a facelift, plus, now you can try pretrained models before you buy them (jk the models are free)

• TensorFlow Cloud library helps you scale up your smaller experiments to cloud-scale in a few lines of code (e.g. Google Colab -> 8 GPUs)

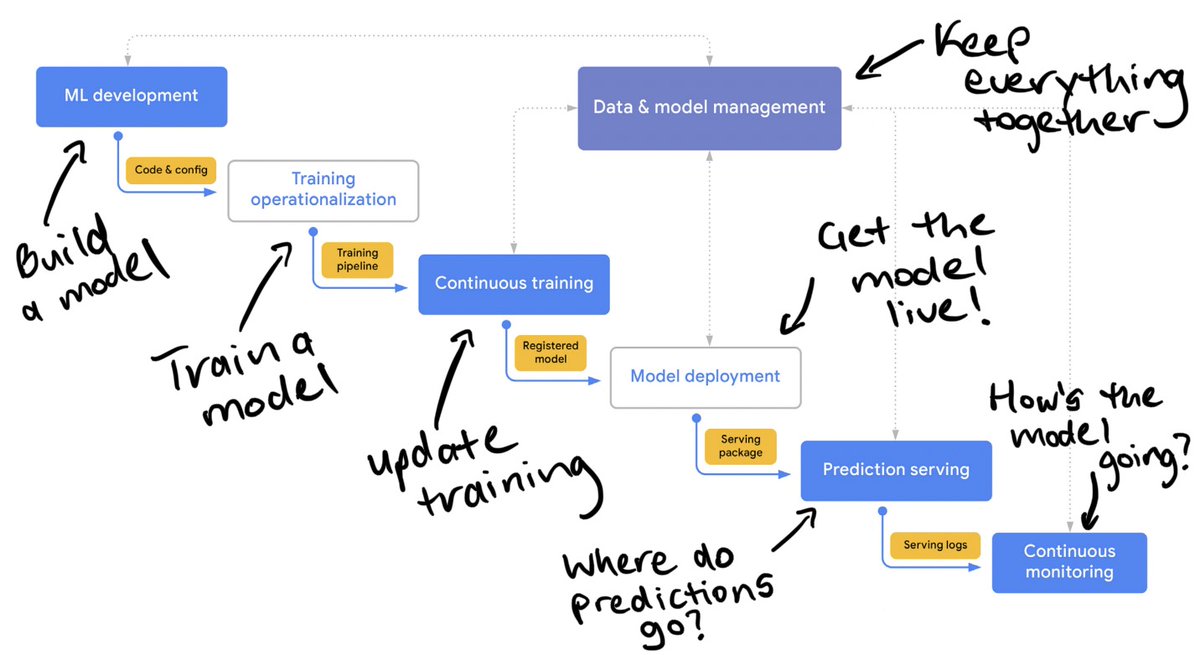

• Google Cloud's AI Platform gets renamed to Vertex AI and is now Google Cloud's one-stop shop for your ML needs (think data storage, feature storage, model training, model deployment, etc.)

• Accompanying Vertex AI is a new MLOps white paper that pieces together everything ML

• The new TensorFlow forum! Now there's a town square to meet and talk with TensorFlow developers from around the world

• The People and AI Guidebook 2.0 helps you design ML-powered applications by thinking about things like: "explain the benefit, not the technology"

And from the rest of the internet:

• Next-generation pose detection with MoveNet and TensorFlow.js (17 body keypoints @ up to 51 FPS in the browser of an iPhone 12!!!)

• Datasets & code are now on arXiv thanks to @paperswithcode (find the data and code associated with ML papers)

• @facebookai's wav2vec-U (unsupervised) speech recognition model performs on par with the state of the art from 2 years ago without *any* labelled data (that earlier model used ~1,000 hours of labelled data)

• What is active learning? by @roboflow - doing practical ML? You'll want active learning

• Reproducible Deep Learning by @s_scardapane - Ever tried to build a reproducible deep learning model? It's harder than you think. Not to worry, Simone's course goes through steps to help you do so.

See more: sscardapane.it/teaching/repro…

• The Rise of @huggingface by @marksaroufim - an outstanding take on how ML companies like HuggingFace and @weights_biases have built incredible value by creating community around their product offerings.

Far out...

As usual, a massive month on tour for the world of ML!

See the full write up: zerotomastery.io/blog/machine-l…

See the video version: