Most digital assistants that follow NL instructions focus on uncovering concrete pred-arg structures, such as "book a flight" or "send a mail". However, when humans convey complex instructions they often resort to abstractions, such as emerging shapes, iterations, conditions 1/n

Can #nlproc models learn to interpret such abstract structures, and ground them in a concrete world?

We set out to elicit non-trivial natural language instructions that contain multiple levels of abstraction, and evaluate models' ability to execute them.

arxiv.org/abs/2106.14321

2/n

We do this by means of a collaborative online game, where an instructor receives an image drawn on a 2D hexagons board and instructs a remote friend how to draw it on their own empty board.

The executor needs to follow the instructions to recreate the image.

nlp.biu.ac.il/~royi/hexagon-…

3/n

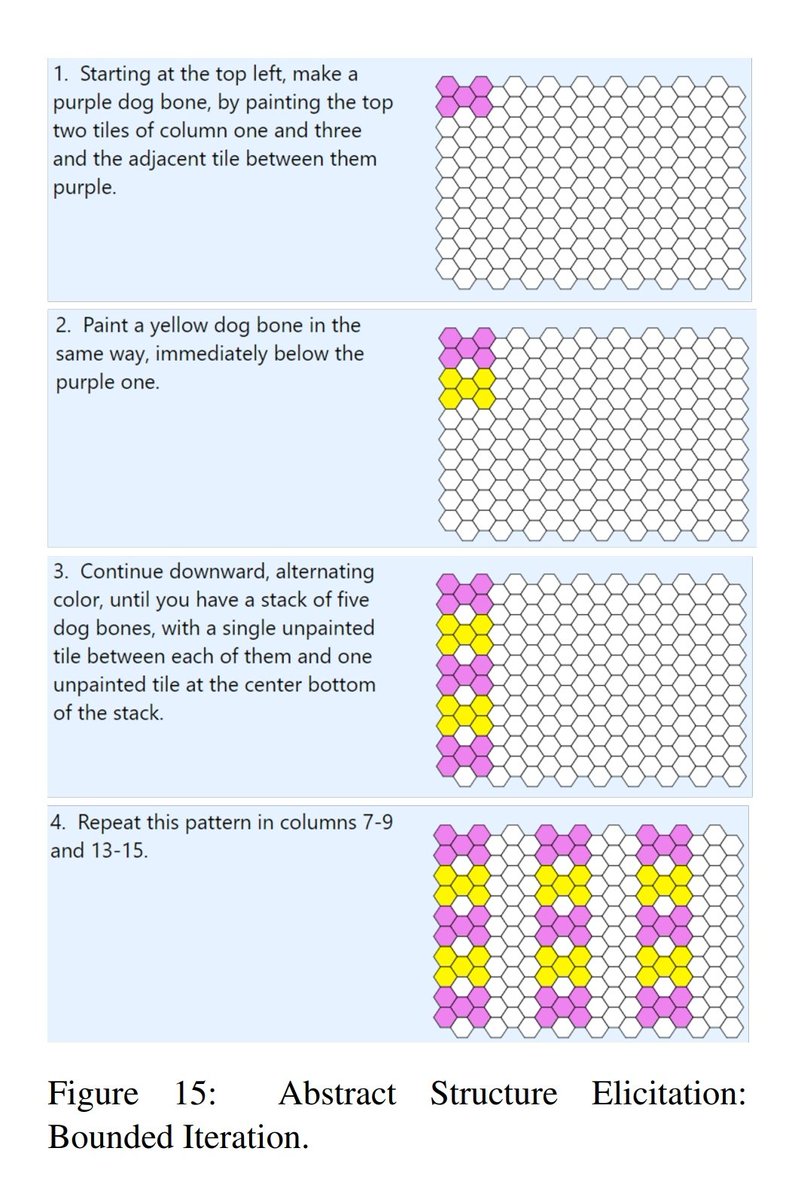

The key to eliciting abstraction is to design proper stimuli. The images should be abstract rather than figurative; their descriptions should evoke diverse abstract structures; they should compose different kinds of abstract structures; and they should reflect increasing levels of complexity. 4/n

We designed a gallery of 101 images and elicited over 3K non-trivial NL instructions, reflecting diverse, formal abstract structures expressed in free informal language. 5/n

How do #nlproc models do on these? Let's look at a typical example. Here a human provided an instruction to recreate the attached image:

“On fifth row from right going leftward, vertically, color the second tile from the bottom Blue, all tiles touching it should be Green”

This is the model's result. As you can see, it got the direct, predicate-argument-like instruction right, correctly positioning the first blue tile.

However, it went downhill from there..

As expected, the model failed to identify the more abstract loop to construct "all tiles touching it".

And this is a case of a very simple image -- you can imagine what happens on the more complex ones. 8/n
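To make the "loop" concrete, here is a minimal sketch of the program the instruction implies: one direct paint action, then an iteration over the neighbouring tiles. Everything in it is an assumption for illustration only: the axial coordinate scheme, the 5x5 board, the target position, and the names `neighbours` and `execute` are hypothetical and are not the representation used in the paper or the game.

```python
# Hypothetical sketch: axial hex coordinates (q, r) on a small toy board.
# In axial coordinates every tile has up to six neighbours at these offsets.
AXIAL_DIRECTIONS = [(1, 0), (1, -1), (0, -1), (-1, 0), (-1, 1), (0, 1)]


def neighbours(tile, board):
    """Return the tiles touching `tile` that actually exist on the board."""
    q, r = tile
    candidates = [(q + dq, r + dr) for dq, dr in AXIAL_DIRECTIONS]
    return [t for t in candidates if t in board]


def execute(board, target):
    """Paint `target` blue, then paint every tile touching it green."""
    board[target] = "blue"                # the direct, predicate-argument step
    for tile in neighbours(target, board):
        board[tile] = "green"             # the abstract "all tiles touching it" loop
    return board


# Toy 5x5 board (an assumption, not the real board size); all tiles start white.
board = {(q, r): "white" for q in range(5) for r in range(5)}
result = execute(board, (2, 2))
```

The first line of `execute` is the part the model got right; the `for` loop is the part it missed.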

We find that our resulting corpus does much more than demonstrate the obvious (that models fail on complex tasks): it provides a window into a very rich area of grounded language that has not been tackled or documented before. 9/n

This opens up many research opportunities, on many questions, e.g. how do ppl informally express abstraction? What are the diverse ways to *efficiently* convey a complex pattern? Can models "parse" the deeper thought process that reflects the flow underlying the procedure described?
