NEW PROJECT — I made a "personal search engine" that lets me search all my blogs, tweets, journals, notes, contacts, & more at once 🚀

It's called Monocle, and features a full text search system written in Ink 👇

GitHub ⌨️ github.com/thesephist/mon…

Demo 🔍 monocle.surge.sh

It's called Monocle, and features a full text search system written in Ink 👇

GitHub ⌨️ github.com/thesephist/mon…

Demo 🔍 monocle.surge.sh

One of my goals for this project was to learn about full text search systems, and how a basic FTS engine worked. So I wrote a FTS engine in Ink.

The project's readme goes into a little detail about how each step works, and how it all fits together.

📖 github.com/thesephist/mon…

The project's readme goes into a little detail about how each step works, and how it all fits together.

📖 github.com/thesephist/mon…

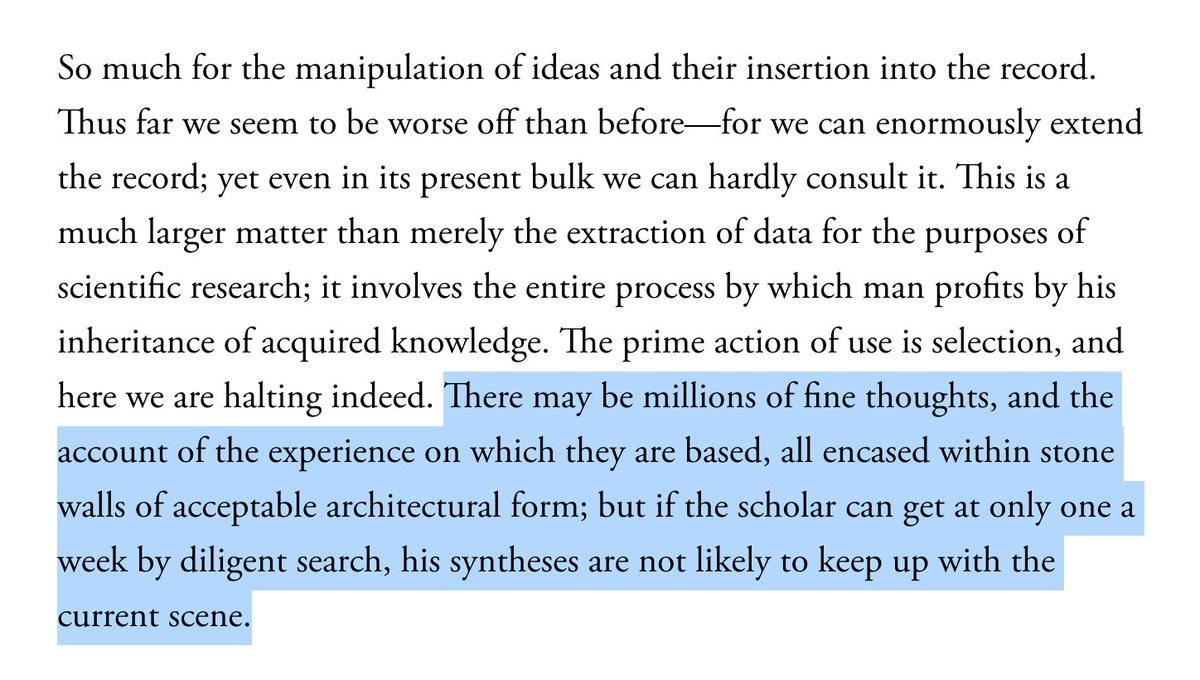

The more I've been using it (since Saturday, when I had an MVP), the more I realize that this kind of a tool is probably my best shot at building a Memex, a system that knows about and lets me search through my entire landscape of knowledge — theatlantic.com/magazine/archi…

I've probably performed ~100 searches for various names, ideas, memories, blogs, and other random things in the last week, and the most interesting thing is how searching for one thing helps me stumble into some unexpected insight or memory from my past. Creative randomness.

Lastly, great search and recall is a centerpiece of the "incremental note-taking" concept I discussed last week — monocle.surge.sh/?q=incremental…

Monocle is a system that doesn't need me to take notes; it gathers knowledge by looking through my existing digital footprint.

Monocle is a system that doesn't need me to take notes; it gathers knowledge by looking through my existing digital footprint.

I've spent a bunch of time on this this weekend so probably going to take a small break, but hopefully in the coming weeks and months I'll add a few more data sources to my search index:

- Browser history, YouTube watch history

- Reading list from Pocket

- Email (maybe?)

- Browser history, YouTube watch history

- Reading list from Pocket

- Email (maybe?)

Lastly, a question I'm definitely expecting is "can I run this on my own data?"

Uhh... .probably not right now? The system is pretty custom-built for my setup. But if I like it, I might make a version that's open for other people to try ✌️

Uhh... .probably not right now? The system is pretty custom-built for my setup. But if I like it, I might make a version that's open for other people to try ✌️

• • •

Missing some Tweet in this thread? You can try to

force a refresh