I read the information Apple put out yesterday and I'm concerned. I think this is the wrong approach and a setback for people's privacy all over the world.

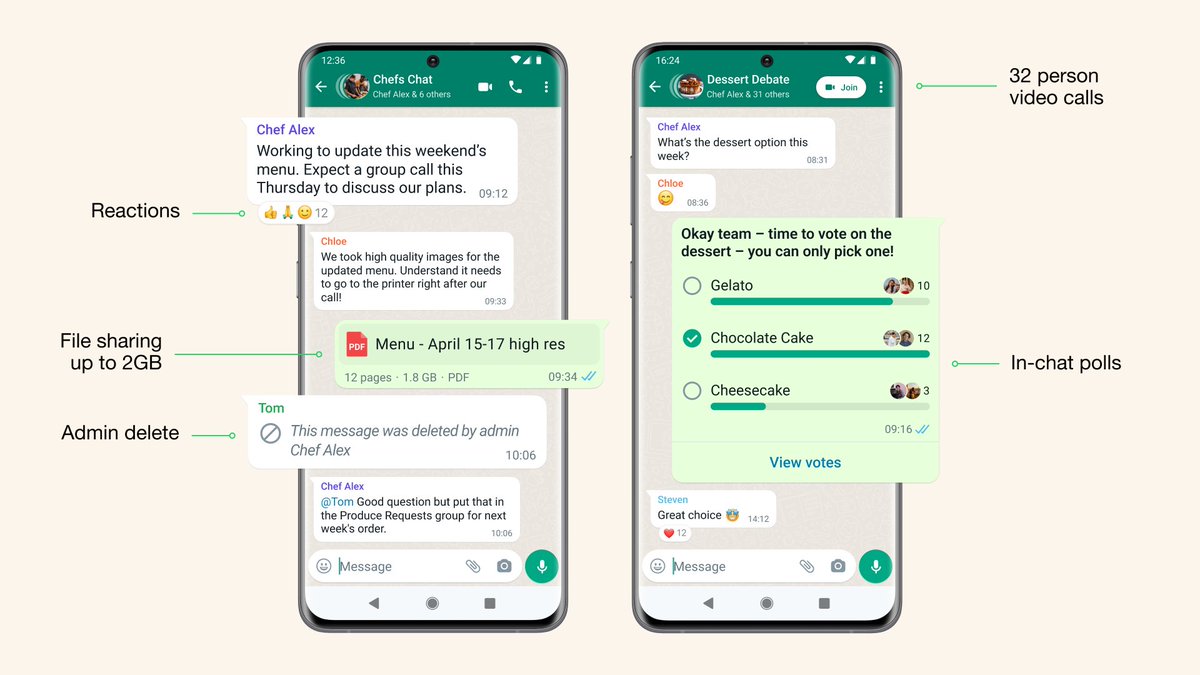

People have asked if we'll adopt this system for WhatsApp. The answer is no.

Child sexual abuse material and the abusers who traffic in it are repugnant, and everyone wants to see those abusers caught.

We've worked hard to ban and report people who traffic in it based on appropriate measures, like making it easy for people to report when it's shared. We reported more than 400,000 cases to NCMEC last year from @WhatsApp, all without breaking encryption. faq.whatsapp.com/general/how-wh…

Apple has long needed to do more to fight CSAM, but the approach they are taking introduces something very concerning into the world.

Instead of focusing on making it easy for people to report content that's shared with them, Apple has built software that can scan all the private photos on your phone -- even photos you haven't shared with anyone. That's not privacy.

We’ve had personal computers for decades and there has never been a mandate to scan the private content of all desktops, laptops or phones globally for unlawful content. It’s not how technology built in free countries works.

This is an Apple-built and Apple-operated surveillance system that could very easily be used to scan private content for anything they or a government decides it wants to control. Countries where iPhones are sold will have different definitions of what is acceptable.

Will this system be used in China? What content will they consider illegal there and how will we ever know? How will they manage requests from governments all around the world to add other types of content to the list for scanning?

Can this scanning software running on your phone be error-proof? Researchers have not been allowed to find out. Why not? How will we know how often mistakes are violating people’s privacy?

What will happen when spyware companies find a way to exploit this software? Recent reporting showed the cost of vulnerabilities in iOS software as is. What happens if someone figures out how to exploit this new system?

There are so many problems with this approach, and it’s troubling to see them act without engaging experts who have long documented their technical and broader concerns with this.

Apple once said “We believe it would be in the best interest of everyone to step back and consider the implications …” apple.com/customer-lette…

“… it would be wrong for the government to force us to build a backdoor into our products. And ultimately, we fear that this demand would undermine the very freedoms and liberty our government is meant to protect.” Those words were wise then, and worth heeding here now.
