Here's a thread about bots: what bots are and (some of) what bots do, and also things that aren't bots but frequently get mistaken for them.

cc: @ZellaQuixote

First, what is a bot? The Oxford English Dictionary defines "bot" as "an autonomous program on the internet... that can interact with systems or users". A Twitter bot is simply an automated Twitter account (operated by a piece of computer software rather than a human).

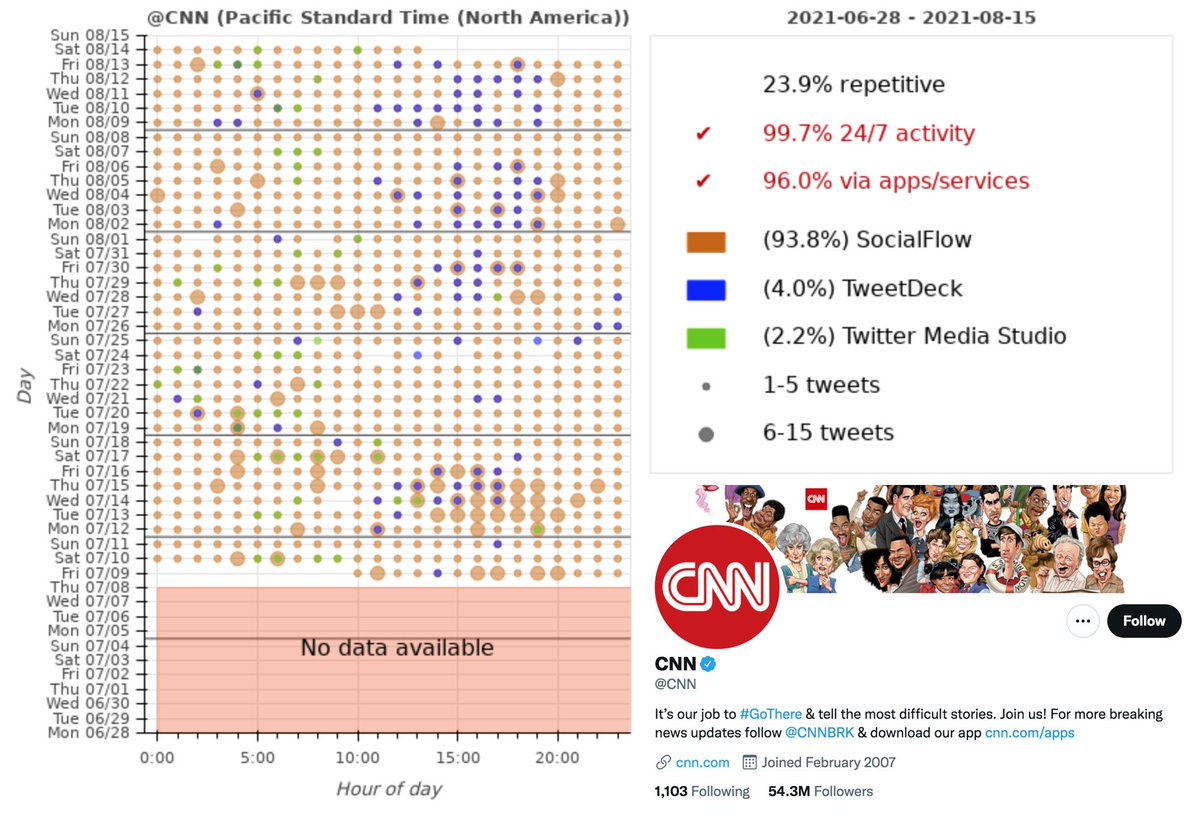

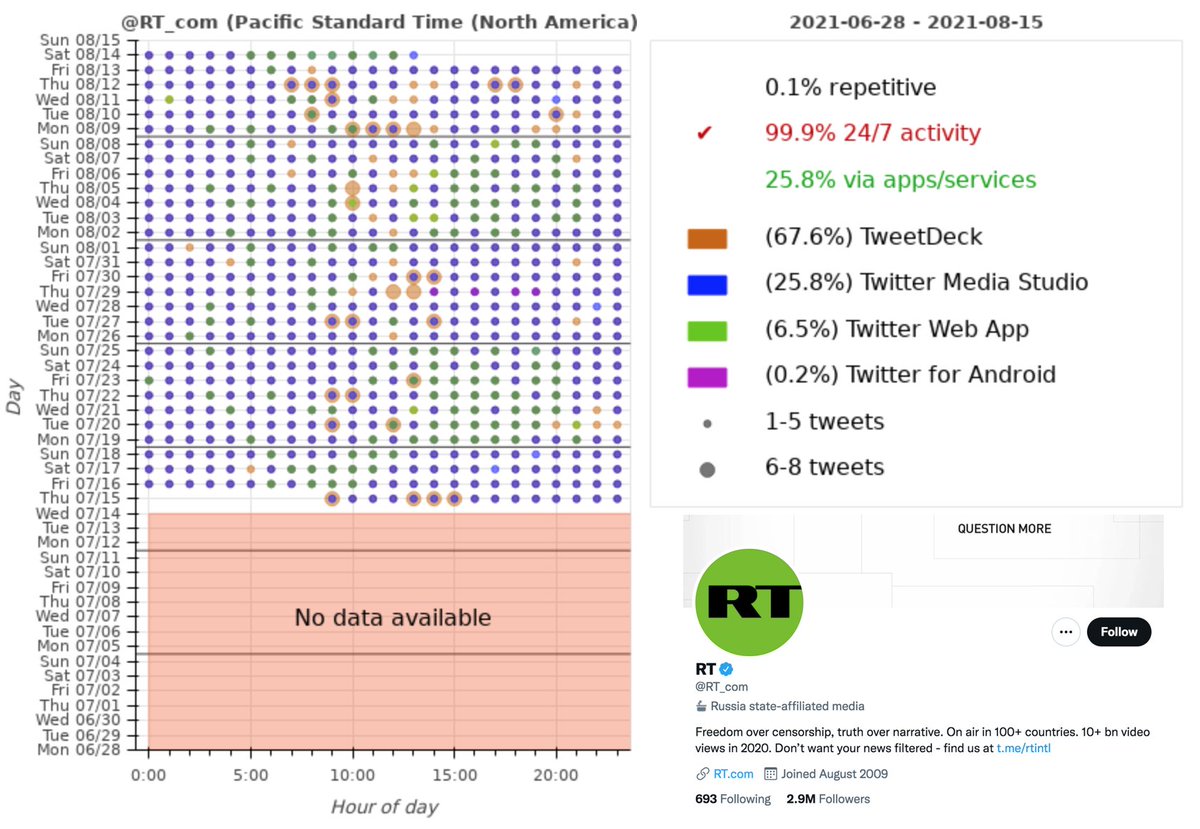

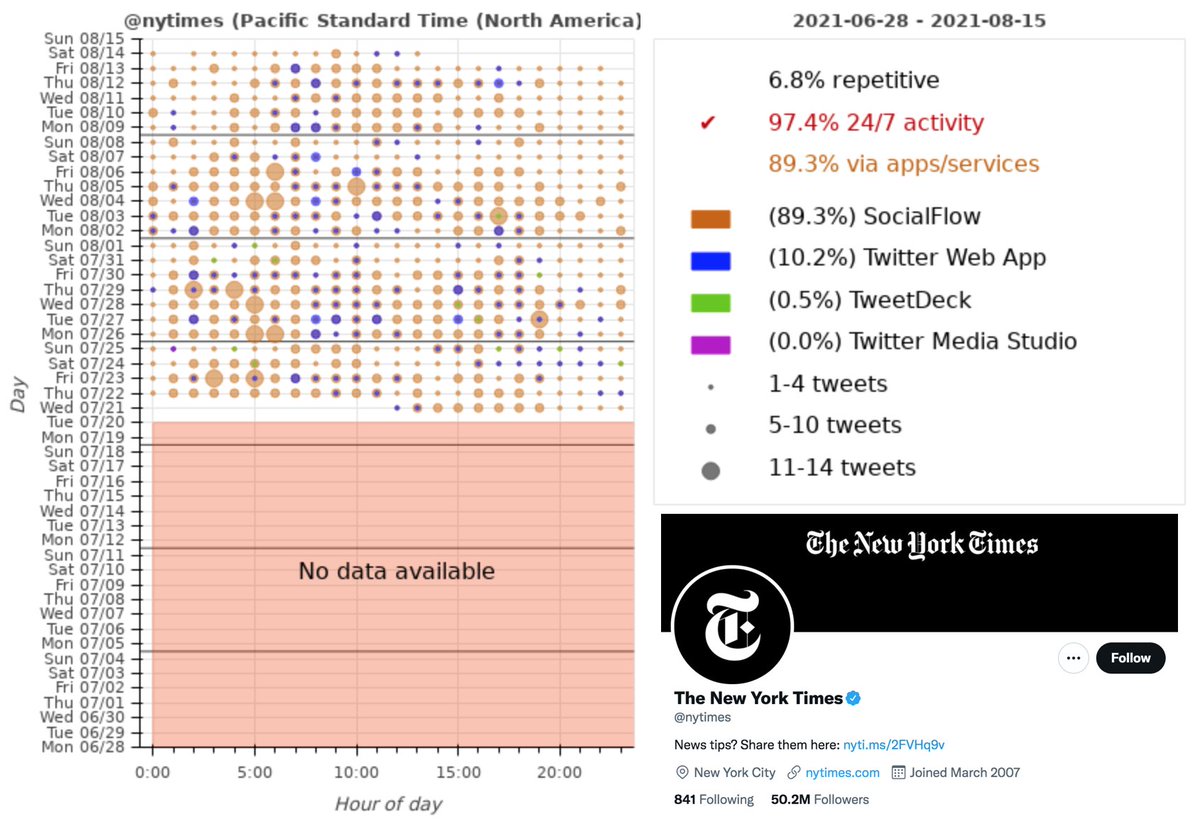

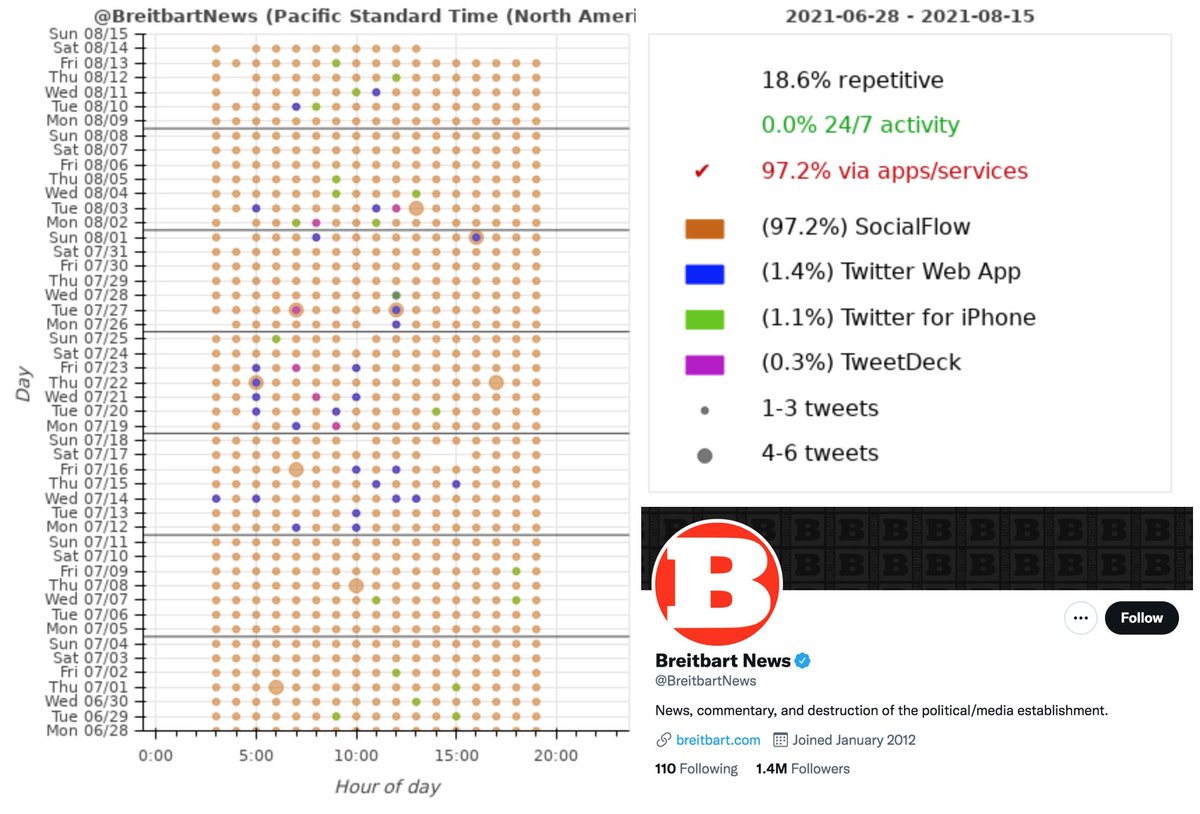

Although much public discussion of "bots" centers on malicious or spammy accounts, there are plenty of legitimate uses of automation. Many news outlets use automation tools to share their articles and videos on Twitter automatically, for example.

There are a variety of fun and useful Twitter bots that freely disclose that they're automated. Some examples:

@everycolorbot - tweets hex values of random colors

@archillect - tweets images

@earthquakeBot - tweets earthquake info

@BidenInsultBot - insults you if you tag it

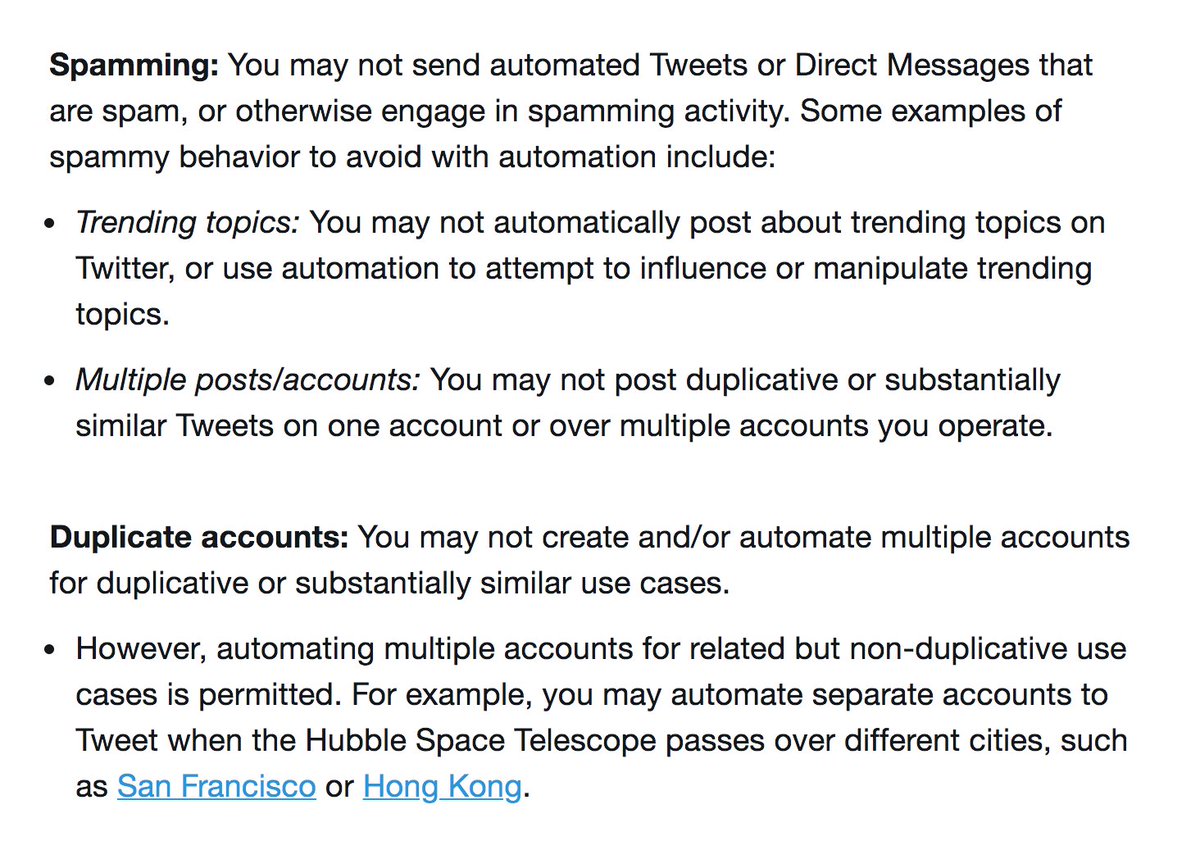

Although forbidden by Twitter's automation rules (help.twitter.com/en/rules-and-p…), spam networks (large groups of accounts operated by a single entity tweeting the same stuff) are a frequent use of automation.

What do spambots spam? Cryptocurrency has been a hot topic for automated spam networks in recent months, with networks ranging in size from a few dozen accounts to tens of thousands. Some examples:

https://twitter.com/conspirator0/status/1425909449391317002

https://twitter.com/conspirator0/status/1406645999381196805

https://twitter.com/conspirator0/status/1317565827311456259

Porn is (unsurprisingly, since it's the Internet) another frequent use for spambot networks:

https://twitter.com/conspirator0/status/1386438872750510080

https://twitter.com/conspirator0/status/1343254073630310400

https://twitter.com/conspirator0/status/1281396137270939650

Spammy retweet botnets are also sometimes used to astroturf political topics. A few examples:

Xinjiang human rights abuse denialism:

https://twitter.com/conspirator0/status/1372007492276916224

2021 Ecuador election:

https://twitter.com/conspirator0/status/1377447092847792128

Random US Congressional tweets:

https://twitter.com/conspirator0/status/1324565273324802049

Sometimes automated spam exists alongside organic activity on the same group of accounts. An example of this is the now-defunct Power10 automation tool, which caused its users to automatically retweet large numbers of pro-Trump tweets.

businessinsider.com/power10-activi…

https://twitter.com/conspirator0/status/1172974935146467329

Services that sell retweets, likes, and follows (all of which are TOS violations) frequently use botnets to provide the aforementioned retweets, likes, and follows. A couple of examples:

https://twitter.com/conspirator0/status/1424477966302683145

https://twitter.com/conspirator0/status/1294824510085050374

The above is by no means a comprehensive survey of every bot or every type of bot on Twitter, but is a decent rough overview of common uses of automation, both legitimate and illicit.

Onward to the next topic: types of accounts that people think are bots, but aren't.

Folks who participate in retweet rooms (where everyone retweets every tweet shared in the room) often get mistaken for bots due to their high tweet volume, as users who are in multiple rooms often retweet hundreds of tweets a day.

politico.eu/article/twitte…

https://twitter.com/conspirator0/status/1273077389493485570

Copypastas (cases where real humans copy and paste the same text with few or no alterations) frequently get mistaken for bot activity, because identical tweets appear on multiple accounts.

https://twitter.com/conspirator0/status/1328479128908132358

https://twitter.com/PeterD_Adams/status/1420863663762776075

In a similar vein, accounts that share a lot of news articles or YouTube videos by using the "Share" buttons on the respective sites get mistaken for bots because the article/video title is generally used as the tweet text, resulting in identical (but not automated) tweets.
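Both cases trip up naive duplicate-text checks. As a rough illustration, here is a minimal Python sketch of such a check, assuming tweets arrive as dicts with hypothetical `user` and `text` keys; `min_accounts=3` is an arbitrary illustrative threshold, not an established cutoff.

```python
from collections import defaultdict


def normalize(text):
    # Lowercase and collapse runs of whitespace so trivial edits don't hide duplicates.
    return " ".join(text.lower().split())


def shared_texts(tweets, min_accounts=3):
    # Group accounts by normalized tweet text. Each tweet is assumed to be a
    # dict with hypothetical "user" and "text" keys.
    accounts_by_text = defaultdict(set)
    for tweet in tweets:
        accounts_by_text[normalize(tweet["text"])].add(tweet["user"])
    # Keep only texts posted by at least min_accounts distinct accounts.
    return {
        text: users
        for text, users in accounts_by_text.items()
        if len(users) >= min_accounts
    }


if __name__ == "__main__":
    # Three humans pasting the same message look identical to a botnet here.
    sample = [
        {"user": "alice", "text": "Call your senator   TODAY!"},
        {"user": "bob", "text": "call your senator today!"},
        {"user": "carol", "text": "Call your senator today!"},
        {"user": "dave", "text": "Nice weather this morning"},
    ]
    for text, users in shared_texts(sample).items():
        print(text, sorted(users))  # call your senator today! ['alice', 'bob', 'carol']
```

Nothing in this check distinguishes a botnet from a copypasta or a wave of share-button tweets: it detects identical text, not automation.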

Impostor accounts and fake personas also frequently get erroneously referred to as "bots", although many of these are human-operated rather than automated.

buzzfeednews.com/article/craigs…

https://twitter.com/conspirator0/status/1000835015033479168

https://twitter.com/camillefrancois/status/1238223779815456768

How does one know if a given account is a bot? Unfortunately, there's no quick way to tell, and in many cases, without finding a large number of accounts that belong to the same network, it may be impossible to be certain. Here are a couple of things that sometimes work:

Every tweet is labeled with the software it was tweeted with, which can be used to identify automated tweets. Most human tweets are sent with Twitter Web App, Twitter for iPhone/iPad/Android, or TweetDeck, and most tweets sent with other apps are automated.
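The source-label check can be sketched in a few lines of Python. This is a minimal sketch, assuming each tweet is a plain dict with a hypothetical `source` key holding the client name as displayed; the allowlist simply mirrors the clients named above, and the sample data is made up for illustration.

```python
from collections import Counter

# Clients named above as typical for human-sent tweets.
COMMON_HUMAN_CLIENTS = {
    "Twitter Web App",
    "Twitter for iPhone",
    "Twitter for iPad",
    "Twitter for Android",
    "TweetDeck",
}


def source_breakdown(tweets):
    # Count tweets per client label (tweets are dicts with a hypothetical "source" key).
    return Counter(t["source"] for t in tweets)


def unusual_source_share(tweets):
    # Fraction of tweets sent from clients outside COMMON_HUMAN_CLIENTS.
    # A high value is a hint worth investigating, not proof of automation.
    if not tweets:
        return 0.0
    unusual = sum(1 for t in tweets if t["source"] not in COMMON_HUMAN_CLIENTS)
    return unusual / len(tweets)


if __name__ == "__main__":
    sample = [
        {"source": "Twitter for iPhone"},
        {"source": "Twitter for iPhone"},
        {"source": "MyScheduler"},  # hypothetical third-party client
        {"source": "MyScheduler"},
    ]
    print(source_breakdown(sample))
    print(unusual_source_share(sample))  # 0.5
```

Treat a high value as a lead rather than a verdict: legitimate automation, such as the news-sharing tools mentioned earlier, also posts from other clients.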

Anomalies in timing can sometimes indicate automation as well: constant activity without breaks for sleep, for example. The accounts described in the linked thread are examples (and have other timing anomalies as well).

https://twitter.com/conspirator0/status/1386439092355756033
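A "no sleep" timing check can be sketched the same way. This is a minimal sketch, assuming you already have the account's post timestamps as `datetime` objects; the four-hour `min_gap` is a hypothetical threshold chosen for illustration, and a single quiet stretch anywhere in the window is enough to clear the account.

```python
from datetime import datetime, timedelta


def active_hours(timestamps):
    # Distinct hours of the day (0-23) in which the account posted.
    return {ts.hour for ts in timestamps}


def longest_gap(timestamps):
    # Longest interval between consecutive posts.
    ordered = sorted(timestamps)
    if len(ordered) < 2:
        return timedelta(0)
    return max(b - a for a, b in zip(ordered, ordered[1:]))


def lacks_sleep_break(timestamps, min_gap=timedelta(hours=4)):
    # True if the account never goes quiet for at least min_gap
    # (min_gap is a hypothetical threshold, not an established rule).
    return len(timestamps) >= 2 and longest_gap(timestamps) < min_gap


if __name__ == "__main__":
    start = datetime(2021, 8, 1)
    # One post every 30 minutes for three straight days.
    round_the_clock = [start + timedelta(minutes=30 * i) for i in range(144)]
    print(len(active_hours(round_the_clock)))  # 24
    print(lacks_sleep_break(round_the_clock))  # True
```

Accounts run by several people can also post around the clock, so a `True` result is a reason to look closer, not proof of automation.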

Every rule has exceptions, of course:

• some 24/7 accounts are run by multiple people rather than being automated

• web browsers and phones can be automated, so some accounts that post via web/smartphone are actually bots

• etc.

(Just to clarify: the articles linked in the tweet below aren't erroneously claiming that the fake accounts they describe are bots; they're accurate articles about fake/impostor accounts that have sometimes been mistaken for bots.)

https://twitter.com/conspirator0/status/1426723497460518949

Also, be wary of overly simplistic checklists that purport to be advice on "bot detection". Most of the stuff on this list has nothing to do with automation, and will be of little to no use in determining whether or not a given account is a bot.

A recent Twitter blog post that covers various misconceptions about bots:

blog.twitter.com/common-thread/…