+"Tom of Finland" (the ultimate Finnish LGBTQ collaboration?) I really love the trees in the background.

That one had a fun instability, shifting from low-saturation line art to a fabulous jungle. Most likely a slow drift along the image manifold, away from the constrained initial state and into the high-dimensional subspace of colourful images.

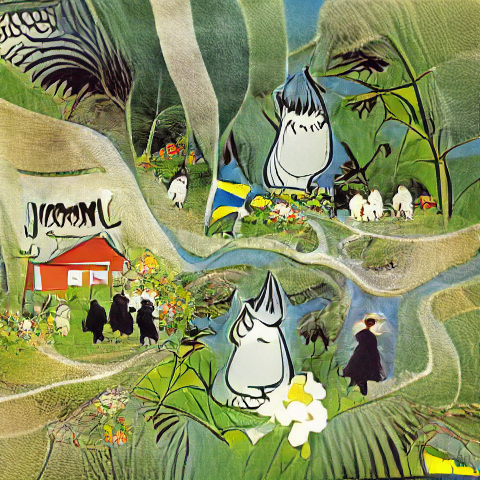

+"Simon Stålenhag", +"M.C. Escher" (Again, the ultra-Scandinavianness of @simonstalenhag meshes well with Tove Jansson; note the background detail.)

+Anders Zorn, +Carl Larsson (Yes, the background lakes and birch trees continue. And Mrs Fillyjonk is perfectly at home in Carl Larsson's house.)

+John Bauer. Here I was disappointed: it just went for watercolours rather than moody deep forests. Still, a definite troll feeling.
