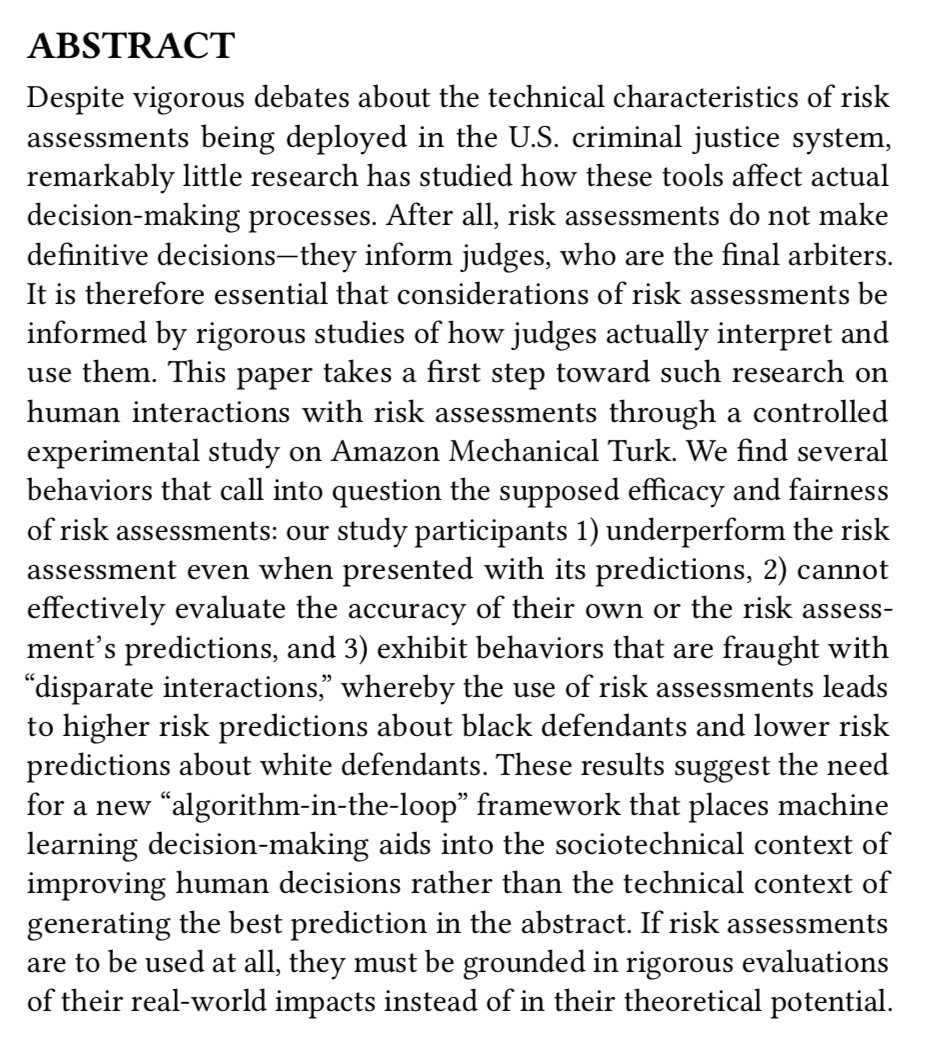

How do people collaborate with algorithms? In a new paper for #CSCW2021, Yiling Chen and I show that even when risk assessments improve people's predictions, they don't actually improve people's *decisions*. Additional details in thread 👇

Paper: benzevgreen.com/21-cscw/

This finding challenges a key argument for adopting algorithms in government. Algorithms are adopted for their predictive accuracy, yet decisions require more than just predictions. If improving human predictions doesn't improve human decisions, then algorithms aren't beneficial.

Instead of improving human decisions, algorithms could generate unintended and unjust shifts in public policy without being subject to democratic deliberation or oversight.

Take the example of pretrial risk assessments. Even though our algorithm improved predictions of risk, it also made people more sensitive to risk when making decisions about which defendants to release. Showing the risk assessment therefore increased racial disparities.

This has implications for how we evaluate algorithms. Rather than testing only an algorithm's performance on its own, we also need to test how people interact with the algorithm. We shouldn't incorporate an algorithm into decision-making without first studying how people use it.

This is a research question I've been wanting to answer since I started working on human-AI interactions several years ago. It cuts right to the heart of arguments for why governments should adopt algorithms for high-stakes decisions.

But it's actually a difficult question to test because you have to account for how algorithms alter people's predictions of risk. So there are some interesting experimental design details in the paper, if you're into that sort of thing.
