We're grateful that deeply understanding and improving datasets is acknowledged as a non-negotiable part of training pipelines 🙏

However, exploring datasets can be cumbersome, and documenting and sharing findings is often messy.

W&B Tables fixes this 👇

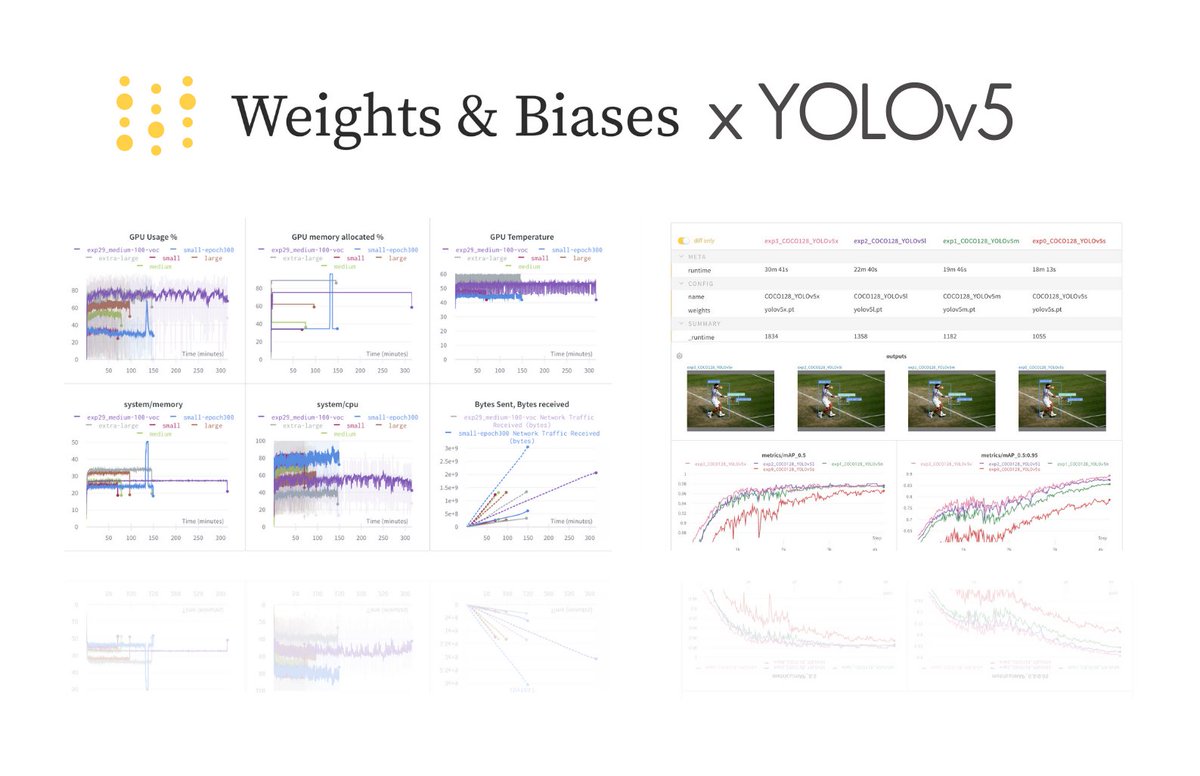

https://twitter.com/weights_biases/status/1417942580579479555

W&B Tables enables quick and powerful exploration of image, video, audio, tabular, molecule and NLP datasets.

@metaphdor used Tables to explore 100k rows from @Reddit's Go Emotions dataset:

🛑 First, filtering for multiple column values
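
For readers who want the logic behind that filter spelled out, here is a minimal plain-Python sketch. It is not the Tables filter syntax; the sample rows and the column names (`subreddit`, `text`, `gratitude`) are made up for illustration and may not match the real dataset schema.

```python
# Hypothetical sample rows; column names are assumptions, not the real schema.
rows = [
    {"subreddit": "legaladvice", "text": "Thanks, that helps a lot", "gratitude": 1},
    {"subreddit": "farcry", "text": "This mission is great", "gratitude": 0},
    {"subreddit": "legaladvice", "text": "Talk to a lawyer first", "gratitude": 0},
    {"subreddit": "cats", "text": "Thank you for sharing", "gratitude": 1},
]


def filter_rows(rows, subreddits, emotion):
    """Keep rows whose subreddit is in `subreddits` and whose `emotion` flag is 1."""
    return [r for r in rows if r["subreddit"] in subreddits and r.get(emotion) == 1]


# Two conditions at once: several allowed subreddit values plus one emotion flag.
matches = filter_rows(rows, {"legaladvice", "cats"}, "gratitude")
for row in matches:
    print(row["subreddit"], "|", row["text"])
```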

🔎 Exploring the distribution of Reddit comments by subreddit name:

🪄 Creating additional calculated columns; here we get the count of comments per subreddit. Looks like the "farcry" sub has the fewest comments:
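
The per-sub count is just a group-and-count. A rough sketch outside of Tables, using a tiny made-up list of subreddit names rather than the real data:

```python
from collections import Counter

# Hypothetical subreddit column, one entry per comment (not the real data).
subreddits = ["legaladvice", "cats", "farcry", "cats", "legaladvice", "cats"]

# Count comments per subreddit, then pick the sub with the smallest count.
counts = Counter(subreddits)
fewest = min(counts, key=counts.get)

print(dict(counts))
print("fewest comments:", fewest)
```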

🤗 We can find which sub had the highest fraction of "caring" comments:
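
One way to read "fraction of caring comments" is caring comments divided by all comments in that sub. A small sketch under that assumption, with invented `(subreddit, caring_flag)` pairs standing in for the dataset:

```python
from collections import defaultdict

# Hypothetical (subreddit, caring_flag) pairs; 1 means the comment was labeled "caring".
labeled = [
    ("cats", 1), ("cats", 0), ("cats", 1),
    ("legaladvice", 0), ("legaladvice", 0),
    ("farcry", 0),
]

totals = defaultdict(int)
caring = defaultdict(int)
for sub, flag in labeled:
    totals[sub] += 1
    caring[sub] += flag

# Fraction of caring comments per subreddit, and the sub where it is highest.
fraction = {sub: caring[sub] / totals[sub] for sub in totals}
most_caring = max(fraction, key=fraction.get)
print(most_caring, round(fraction[most_caring], 2))
```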

And which sub had the highest ratio of gratitude 🙏 to excitement 🥳 (i.e. thankful but maybe kinda boring) - sorry r/legaladvice 😐 :
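
The same ratio can be sketched in a few lines of Python. The counts below are invented purely to show the arithmetic (they are not results from the dataset), and skipping subs with zero "excitement" comments is our own choice here to avoid dividing by zero.

```python
# Hypothetical per-subreddit label counts, made up for illustration only.
label_counts = {
    "legaladvice": {"gratitude": 12, "excitement": 2},
    "cats": {"gratitude": 6, "excitement": 6},
    "farcry": {"gratitude": 3, "excitement": 0},
}


def gratitude_to_excitement(label_counts):
    """Return gratitude/excitement per sub, skipping subs with no excitement labels."""
    ratios = {}
    for sub, c in label_counts.items():
        if c["excitement"] > 0:
            ratios[sub] = c["gratitude"] / c["excitement"]
    return ratios


ratios = gratitude_to_excitement(label_counts)
top = max(ratios, key=ratios.get)
print(top, ratios[top])
```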

✍️ Documenting and sharing these findings with collaborators is a breeze: just share them in W&B Reports.

Your collaborators can also start their own exploration in Tables that you've added to a Report **in the Report UI itself** and persist these changes between visits.

💻 Logging to W&B Tables is super easy. Here we downloaded the Go Emotions dataset from the @huggingface Datasets library and logged it as a pandas DataFrame.
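
A minimal sketch of what that logging step can look like, assuming `wandb` is installed and you are logged in. The function name, the project name `go-emotions-demo`, and the table key `go_emotions` are placeholders we picked for illustration; they are not taken from the Colab.

```python
def log_dataframe_as_table(df, project="go-emotions-demo", key="go_emotions"):
    """Log a pandas DataFrame to W&B as a Table (sketch; names are placeholders)."""
    # Imported lazily so this module can be loaded without wandb installed.
    import wandb

    run = wandb.init(project=project)
    # wandb.Table can be built directly from a pandas DataFrame.
    table = wandb.Table(dataframe=df)
    run.log({key: table})
    run.finish()
```

To try it, load the dataset into a DataFrame however you prefer (for example via the Hugging Face Datasets library and its `to_pandas()` method), then call `log_dataframe_as_table(df)`.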

To log to W&B Tables and start your own exploration, you can run this Colab:

🏃♀️ wandb.me/go-emotions-co…

🖼️ This is only one example, for NLP. Tables supports exploration of a wide variety of data types: here @sbxrobotics used Tables to demonstrate how to evaluate image segmentation models:

wandb.ai/artem_sbx/sbx-…

Finally, you can get started with our W&B Tables docs here:

📙 docs.wandb.ai/guides/data-vis

We're incredibly excited about Tables and will be continuously improving functionality and performance over the coming months. We'd love to know what you think: support@wandb.com
