How can human-AI teams outperform both AI alone and humans alone, i.e., achieve complementary performance? In our new paper, to be presented at #CSCW2021, we propose two directions: out-of-distribution examples and interactive explanations. Here’s a thread about these new perspectives:

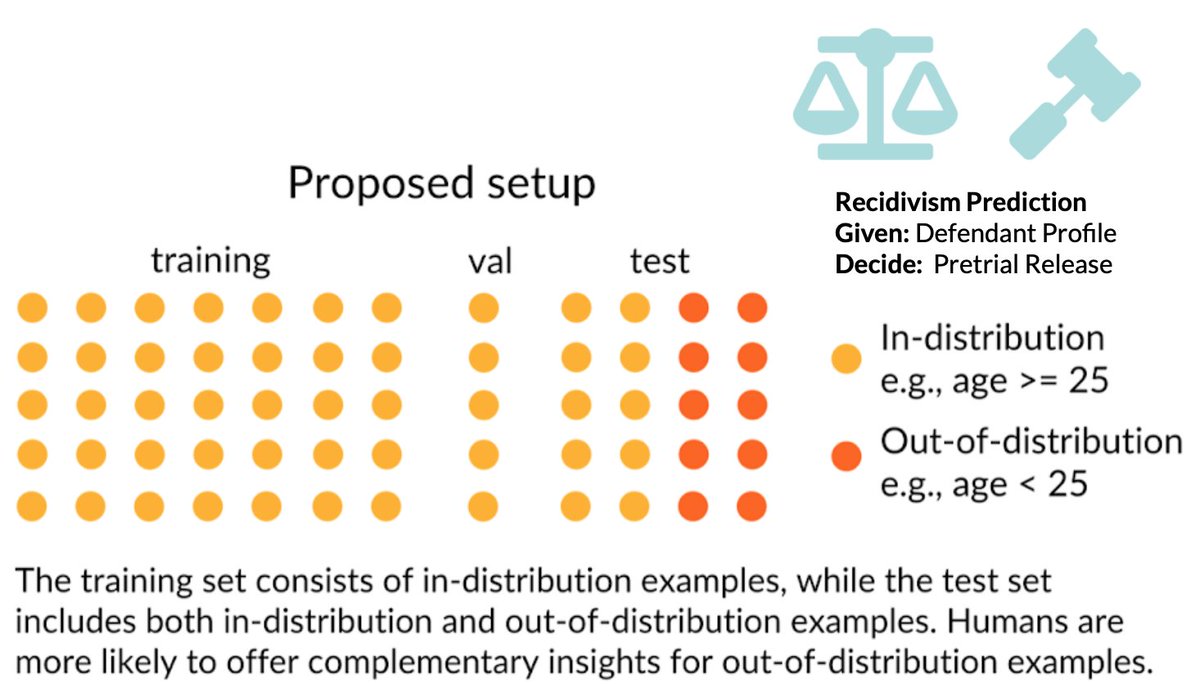

1/n First, prior work adopts an over-optimistic scenario for AI: the test set follows the same distribution as the training set (in-distribution). In practice, examples seen at test time may differ substantially from the training data (out-of-distribution), and AI performance can drop significantly.

2/n Thus, we propose experimental designs with both out-of-distribution examples and in-distribution examples in the test set. When AI fails in out-of-distribution examples, humans may be better at detecting problematic patterns in AI predictions and offer complementary insights.

3/n For instance, in recidivism prediction (predict whether a defendant will violate terms of pretrial release given their profile) the AI model only sees defendants older than 25 in training. Then during test time, defendants younger than 25 are the out-of-distribution examples.
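A minimal sketch of this age-based split, for illustration only. The `Defendant` record, its `prior_offenses` field, and the `split_by_age` helper are hypothetical names, not code from the paper. The thread does not say where a defendant aged exactly 25 falls, so the sketch assumes they count as in-distribution.

```python
from dataclasses import dataclass


@dataclass
class Defendant:
    age: int
    prior_offenses: int  # hypothetical feature, only here to make the record non-trivial


def split_by_age(defendants, threshold=25):
    """Split profiles into in-distribution and out-of-distribution groups.

    Assumption: age >= threshold is in-distribution (the range the model
    was trained on) and age < threshold is out-of-distribution.
    """
    in_dist = [d for d in defendants if d.age >= threshold]
    out_dist = [d for d in defendants if d.age < threshold]
    return in_dist, out_dist


people = [Defendant(19, 0), Defendant(31, 2), Defendant(24, 1), Defendant(45, 3)]
in_dist, out_dist = split_by_age(people)
print(len(in_dist), len(out_dist))
```

The test set in such a design would then mix examples from both groups, so the two can be compared directly.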

4/n Second, we develop interactive interfaces to enable a two-way conversation between decision makers and AI. For instance, we allow humans to change the input and observe how AI predictions would have changed in these counterfactual scenarios.
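The counterfactual interaction can be sketched as follows. This is an assumption-laden toy: `toy_model` is a made-up integer scoring rule standing in for the real classifier, and `counterfactual` is a hypothetical helper, not the interface's actual implementation.

```python
def toy_model(profile):
    """Hypothetical stand-in for the AI model: a simple integer score."""
    score = profile["prior_offenses"] + (3 if profile["age"] < 30 else 0)
    return "will violate" if score >= 5 else "will not violate"


def counterfactual(model, profile, **changes):
    """Return the prediction for the original profile and for an edited copy.

    The original profile is left untouched; `changes` overrides features.
    """
    edited = {**profile, **changes}
    return model(profile), model(edited)


profile = {"age": 40, "prior_offenses": 3}
before, after = counterfactual(toy_model, profile, prior_offenses=6)
print(before, "->", after)
```

A participant changing one feature at a time sees, in effect, pairs like `(before, after)` and can judge which inputs the model is sensitive to.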

5/n We conduct both virtual pilot studies and large-scale human experiments. After instructions, participants go through a training phase, a prediction phase, and complete an exit survey. We adopt a two-factor design with two distribution types and six explanation types.

6/n Looking at team accuracy gain, we find the performance gap between human-AI teams and AI is smaller for out-of-distribution examples than for in-distribution examples. This confirms humans are more likely to achieve complementary performance for out-of-distribution examples.

7/n Looking at human agreement with AI, we find agreement with wrong AI predictions is lower for out-of-distribution examples across all explanation types. This suggests humans may be better at providing complementary insights into AI mistakes for out-of-distribution examples.
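The two measures in 6/n and 7/n can be sketched as below. The definitions are assumptions made for illustration: accuracy gain is taken as team accuracy minus AI accuracy, and agreement with wrong AI predictions as the share of AI mistakes where the human's final decision matches the AI. The paper's exact definitions may differ, and the function names are hypothetical.

```python
def accuracy(preds, labels):
    """Fraction of predictions that match the ground-truth labels."""
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


def accuracy_gain(team_preds, ai_preds, labels):
    """Assumed definition: human-AI team accuracy minus AI-alone accuracy."""
    return accuracy(team_preds, labels) - accuracy(ai_preds, labels)


def agreement_with_wrong_ai(team_preds, ai_preds, labels):
    """Among examples the AI got wrong, how often did the human follow it?

    Returns None when the AI made no mistakes.
    """
    wrong = [(t, a) for t, a, y in zip(team_preds, ai_preds, labels) if a != y]
    if not wrong:
        return None
    return sum(t == a for t, a in wrong) / len(wrong)


labels = [1, 0, 1, 1]
ai_preds = [1, 1, 0, 1]
team_preds = [1, 0, 0, 1]
print(accuracy_gain(team_preds, ai_preds, labels))
print(agreement_with_wrong_ai(team_preds, ai_preds, labels))
```

Computing both separately for in-distribution and out-of-distribution examples gives the comparison described above.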

8/n Finally, we find that interactive explanations lead to neither improved performance of human-AI teams nor lower agreement with wrong AI predictions. However, they significantly increase the perceived usefulness of AI assistance, as participants report in the exit survey.

9/n Interactive explanations might reinforce existing human biases and lead to suboptimal decisions. In recidivism prediction, participants with interactive explanations are more likely to focus on demographic features and less likely to identify computationally important ones.

10/10 In summary, we propose a novel setup highlighting the importance of exploring out-of-distribution examples and how they differ from in-distribution examples. We also investigate the potential of interactive explanations for better communication between humans and AI.

This is joint work with @vivwylai and @ChenhaoTan. We also thank members of the @ChicagoHAI Lab for their helpful suggestions and anonymous reviewers for their insightful reviews!!

Paper: arxiv.org/abs/2101.05303

Video:

Demo: machineintheloop.com

