A coordinated network of fake accounts posed as Sikh personas to promote the Indian Govt, nationalism & label Sikh activists as terrorists.

They claimed they were #RealSikhs, but were far from it. News on our investigation: bbc.co.uk/news/world-asi… Here is an explainer thread👇

What we know is that their aims were to label Sikh political interests as extremist, stoke cultural tensions within India & overseas & promote the Indian Govt.

So WHO are the #RealSikhs?

WHAT was their aim?

And HOW did they attempt to distort perceptions?

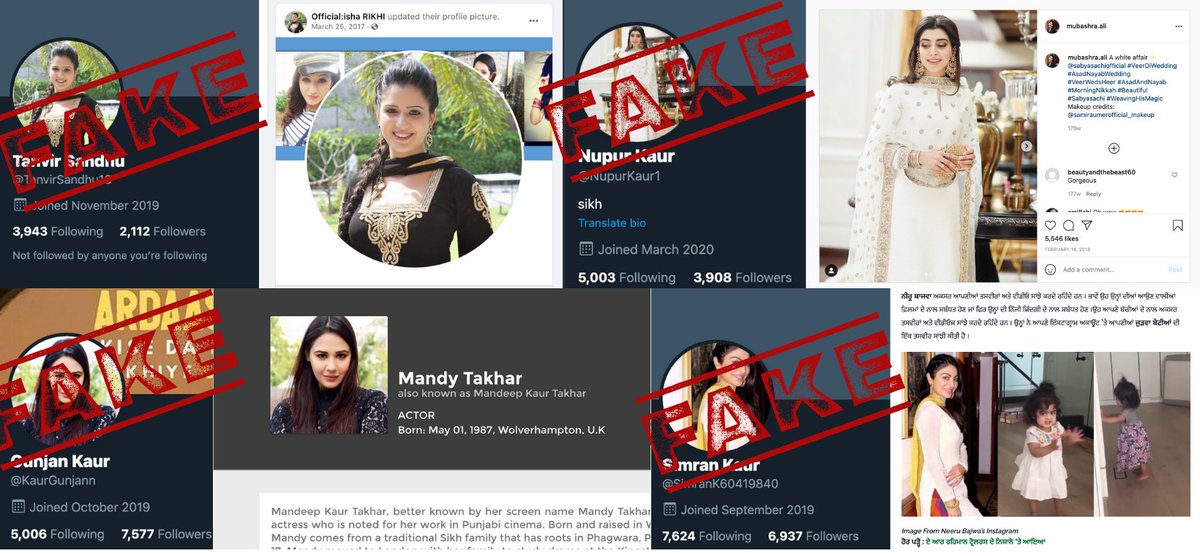

First, WHO were these accounts trying to be and what did they look like?

The accounts were well-curated. They had relatively high follower numbers & were very active.

The personas were replicated across Twitter, Facebook and Instagram.

Let's take a look at some of the accounts. The first pattern identified in this network is the profile images.

Most of the accounts in this network used the images of Bollywood actresses & celebrities.

Here is @jimmykaur3. It's actually an image of an actress.

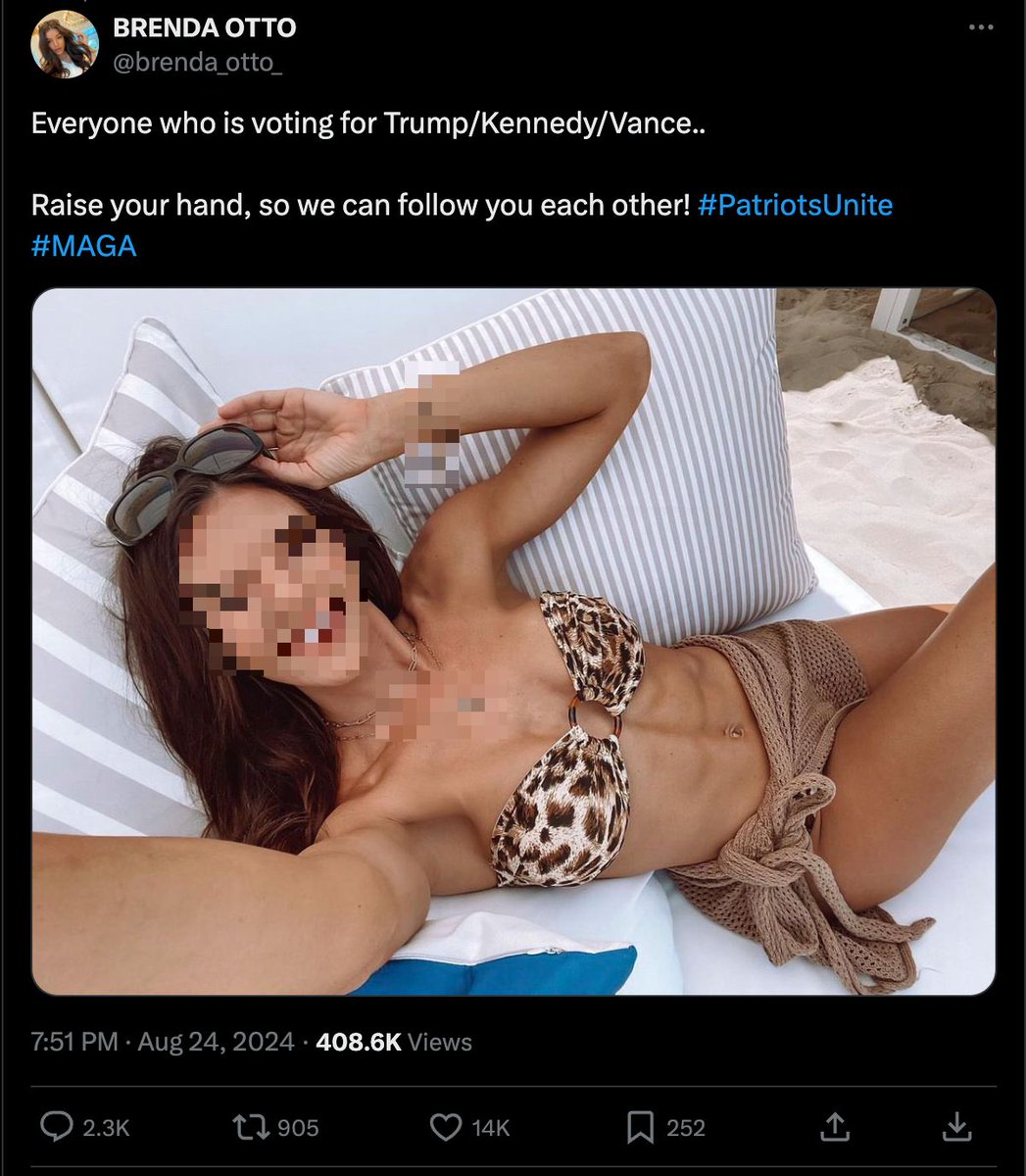

Here is @Amarjot42854873. Again, fake. The person pictured does exist, but it's not Amarjot Kaur.

Next up, @TanvirSandhu16. Again, fake. This is actually an image of Isha Rikhi.

Let's take a look at @nupurkaur1. This is actually a photo of the sister of Mubashra Aslam.

Are we seeing a pattern here?

Here is @kaurgunjann with more than 7000 followers.

This is actually actress Mandy Takhar.

I call these accounts sock puppets. They are fictitious online identities attempting to be a specific persona.

And these ones have a story that doesn't just exist on Twitter - let's take a look.

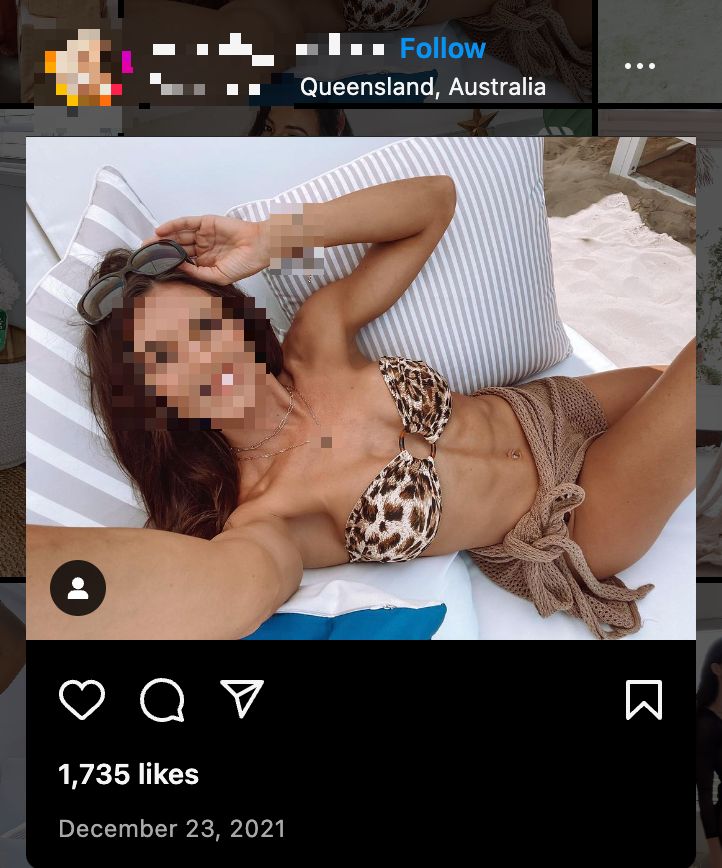

These fake accounts carry their personas over to different platforms.

We found 22 accounts that were the same personas on Twitter & Facebook. They used the same image, name, and cover photo, and posted the same content.

Here is an example of 'Sanpreet' on both platforms.

Many of the personas were present on Instagram as well.

Note: the accounts had significantly less success with metrics on Instagram and Facebook in comparison to the traction they gained on Twitter.

So WHAT was their aim?

We can glean some obvious details from the content the accounts posted. Here is some of the Facebook activity we looked at. It shows a strong focus on countering Sikh independence. Note the prominence of tags such as #PakistanBehindKhalistan.

That same tag, as seen on Twitter, shows extremely similar content. Again targeting Sikh independence.

You can see much of the content had few interactions.

However, some of the content gained significant traction. For example, this tweet about independence groups overseas received more than 3,000 retweets and 16,000 likes.

Not bad for a sock puppet with a set list of talking points to promote certain narratives.

The coordinated fake network also amplified concerning narratives that attempted to define what a 'real Sikh' and a 'fake Sikh' are.

The fake network of accounts also attempted to push specific narratives about the farmers' protests using messaging claiming that ‘Khalistani terrorists’ hijacked the protests.

Another common theme throughout the network of fake personas was tweeting or retweeting content about the Indian Armed Forces and the Indian Army.

This content stood out because, unlike the rest, it was not related to Sikh independence.

While most of the content appears to push specific talking points, or narratives, some tweets (example below) went further in a call for 'nationalists' to 'counter & expose' groups this network labelled as extremists.

While the fake personas appeared to gain significant traction on Twitter, their tweets and images were also linked, embedded, or reposted on news sites and blogs indicating platform breakout.

Below are two examples of this.

Often with influence operations, looking at a network through visualised data helps with a lot of things: identifying how it operates, gauging its size and spread, and finding more accounts.

So let's take a look at that.

I captured data using the Twitter API on three tags used by the network: #RealSikhsAgainstKhalistan, #SikhsRejectKhalistan & #RealSikhs.

I've set out that data using @Gephi to show the core network of primarily fake accounts and the wider network of amplifiers in the outer ring.

Doing this helps to visualise the interactions (likes, retweets) between accounts.
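
As a rough illustration of how captured tweets can become a graph for Gephi, the sketch below turns retweet records into a weighted edge list and writes it as CSV with Source, Target and Weight columns, which Gephi's spreadsheet importer can read as an edges table. The record fields (`author`, `retweeted_user`) and the sample handles are hypothetical placeholders, not the schema or data used in this research, and only retweets are modelled here.

```python
import csv
import io
from collections import Counter

def build_edges(tweets):
    """Count directed interactions: retweeting account -> retweeted account."""
    edges = Counter()
    for tweet in tweets:
        source = tweet.get("author")
        target = tweet.get("retweeted_user")
        # Skip original tweets and self-retweets; they add no edge.
        if source and target and source != target:
            edges[(source, target)] += 1
    return edges

def edges_to_csv(edges):
    """Serialise the edge list with Source/Target/Weight column names."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["Source", "Target", "Weight"])
    for (source, target), weight in sorted(edges.items()):
        writer.writerow([source, target, weight])
    return buffer.getvalue()

# Hypothetical sample records, for illustration only.
sample_tweets = [
    {"author": "amplifier_a", "retweeted_user": "persona_1"},
    {"author": "amplifier_a", "retweeted_user": "persona_1"},
    {"author": "amplifier_b", "retweeted_user": "persona_1"},
    {"author": "persona_1", "retweeted_user": None},
]

edges = build_edges(sample_tweets)
csv_text = edges_to_csv(edges)
```

Saving `csv_text` to a file and importing it as an edges table gives a directed graph in which heavier edges mark repeated amplification.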

For example, here I have highlighted the interactions of @SimranK60419840 to show its personal spread within the network.

Though the captured activity was only a sample of these accounts, it did show that there was consistent amplification from smaller fake accounts (on right in inner circle).

Using those accounts on the right, we could find more accounts that were being retweeted within the fake network.
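
One way to sketch that expansion step: starting from accounts already judged fake, rank other accounts by how many distinct known accounts retweeted them. The function name, the `min_supporters` threshold and the handles below are hypothetical, and a high score is only a lead for manual review, not proof that an account is fake.

```python
from collections import defaultdict

def rank_candidates(edges, known_fakes, min_supporters=2):
    """Rank unknown accounts by how many distinct known accounts retweeted them.

    edges: iterable of (retweeter, retweeted) pairs.
    """
    supporters = defaultdict(set)
    for retweeter, retweeted in edges:
        if retweeter in known_fakes and retweeted not in known_fakes:
            supporters[retweeted].add(retweeter)
    ranked = [
        (account, len(who))
        for account, who in supporters.items()
        if len(who) >= min_supporters
    ]
    # Most widely amplified first; ties broken alphabetically for stable output.
    return sorted(ranked, key=lambda item: (-item[1], item[0]))

# Hypothetical data, for illustration only.
known = {"small_fake_1", "small_fake_2", "small_fake_3"}
retweets = [
    ("small_fake_1", "candidate_x"),
    ("small_fake_2", "candidate_x"),
    ("small_fake_3", "candidate_x"),
    ("small_fake_1", "candidate_y"),
    ("small_fake_2", "candidate_y"),
    ("small_fake_1", "bystander"),
    ("outsider", "candidate_x"),
]

candidates = rank_candidates(retweets, known)
```

Accounts retweeted by only one known account (like `bystander` here) fall below the threshold, which keeps one-off retweets of genuine users out of the review list.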

These findings are documented in a full, transparent influence operations research report from @Cen4infoRes here: info-res.org/post/revealed-…

This report was shared with teams at Twitter and Meta. All of the accounts identified in this research have subsequently been suspended 👊

Well done @FloraCarmichael @shrutimenon10 for turning this in-depth influence operations research into a newsworthy report. bbc.co.uk/news/world-asi…. Also a big thanks to @elisethoma5 and staff at @Cen4infoRes for reviewing this research.

For those wanting to view this piece in other languages, here are the translated versions from the BBC.

Punjabi bbc.com/punjabi/india-…

Urdu bbc.com/urdu/regional-…

Gujarati https://www.bbc.com/gujarati/india-59388510

Hindi bbc.com/hindi/india-59…

Telugu bbc.com/telugu/india-5…
