This thread describes a research study that utilized deception and may have resulted in harm. I believe university IRBs are failing researchers who have been taught (incorrectly) to rely on them in good faith to flag & help navigate all possible ethical concerns in a study. 🧵

https://twitter.com/jkosseff/status/1471816212732596227

The methods described here are similar to those of résumé studies and other audit studies, and research ethics for this kind of deception research have been debated for years, despite the unquestionable value of findings that have provided evidence of discrimination.

To my knowledge, these kinds of studies have fallen under the purview of ethics review boards. For example, this paper on the ethical issues calls on ethics review boards to be more critical in requiring justification for deception. journals.sagepub.com/doi/full/10.11…

A similar issue came up not long ago when researchers submitted faulty patches to the Linux kernel to see what would happen. Their IRB determined (post hoc) that this did not constitute human subjects research. theverge.com/2021/4/30/2241…

In this more recent study, the researchers sent emails to the contact email addresses listed on websites and then used the responses as data. I am absolutely baffled that any IRB would not consider this human subjects research under the federal definition.

Apparently an IRB said this study wasn't human subjects research because the researchers didn't collect personally identifiable information, but that's only half of the definition. There's an OR, and the other half covers obtaining information through interaction, including communication, between the researcher and the subject.

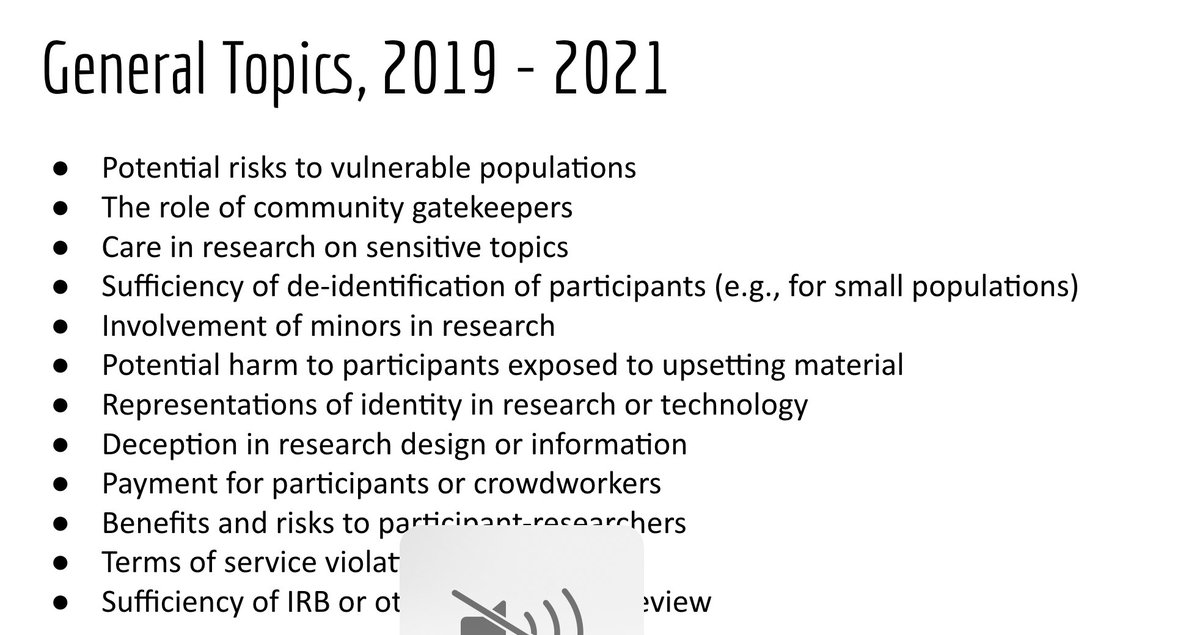

You've all heard me say that IRBs are about compliance, not ethics. But at least for deception research, an IRB requires justification and consideration of potential harms. It's actually a challenging kind of research to get approved, and this study could have benefited from that scrutiny.

For example, here are one IRB's guidelines for using deception in research. They raise a number of issues that, if considered, might have influenced the study design. And help from someone knowledgeable about research ethics could have helped identify harms. campusirb.duke.edu/irb-policies/u…

Unfortunately, it appears that this research resulted in *actual* harm, both financial and emotional. I have seen multiple reports that people who received these emails either (a) paid a lawyer for help or (b) experienced anxiety that they might be in trouble.

I suspect that an underlying issue in thinking about the potential ethical issues (possibly from both the researchers and the IRB) is thinking about emails as going to a *website* rather than a *person*. Unfortunately websites cannot answer emails.

I have my own thoughts about changes that could have been made to this study design such that much of this harm could have been mitigated. I am also sympathetic that well-intentioned researchers might rely on an IRB evaluation in good faith.

So I think this is something we can all learn from, to inform education and our own research practices. IRB review is necessary but insufficient. Another example, of course, is the use of public data, which *actually* isn't human subjects research under the definition.

Researchers make mistakes. A good outcome when that happens is to learn from them, but also to help others learn from them. I would of course like to see fewer ethical controversies, but they are helpful for (hopefully) avoiding similar mistakes in the future.

https://twitter.com/jonathanmayer/status/1472427321047101442?s=20
