🎙️ Microsoft is open-sourcing new models at lightning-speech right now 🚀

We are excited to support @MicrosoftResearch's newest speech models as of 🤗 Transformers 4.15:

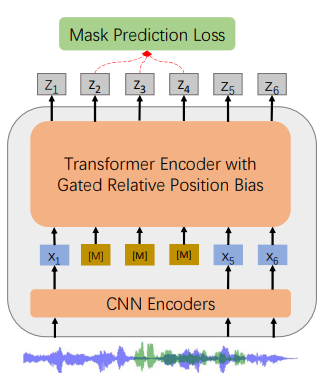

🎤WavLM

🗨️UniSpeech-SAT

💬UniSpeech

Try to get past WavLM at Speaker Verification😉

huggingface.co/spaces/microso…

WavLM currently holds the 🥇 place on the SUPERB benchmark.

👉 huggingface.co/models?other=w…

UniSpeech-SAT yields impressive results on all speaker-related tasks.

👉 huggingface.co/models?other=u…

UniSpeech can be used for phoneme classification.

👉 huggingface.co/models?other=u…

Thanks @MSFTResearch for all the help!
