Apropos Moxie’s thread, Telegram’s E2E is so obviously homebrewed, it’s like sending a chat to the late 1990s.

https://twitter.com/moxie/status/1474067555278999553

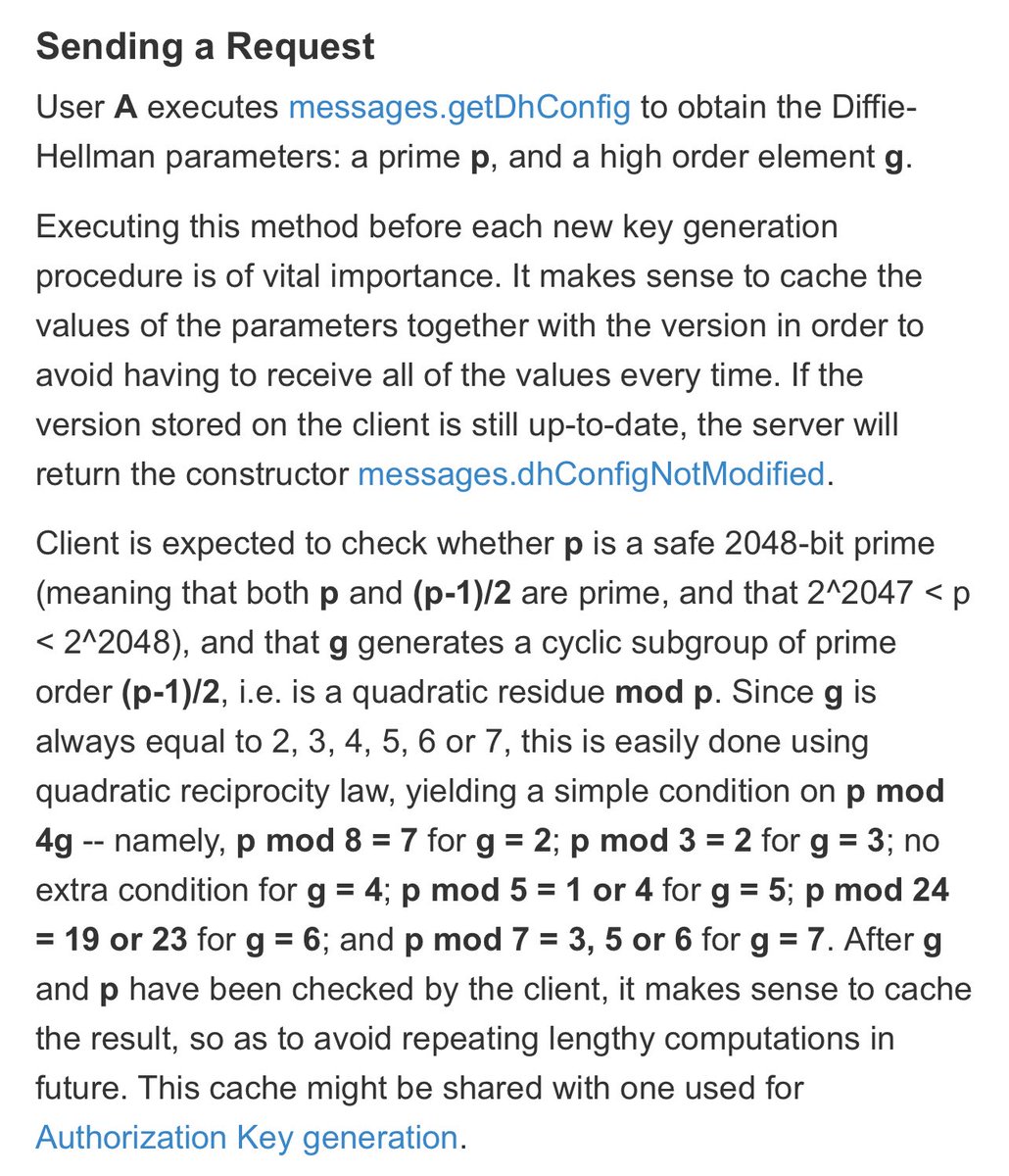

So the remote client picks the DH parameters (why!) and sends them to you, and then you have to carefully check that they’re constructed correctly (pretty sure these checks were added later).
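To give a sense of what "carefully check" means here, this is a rough Python sketch of the validation a receiver would need. It assumes the intended group is a 2048-bit safe prime `p` with `g` generating the subgroup of prime order `(p - 1) / 2`. The function names `is_probable_prime` and `check_dh_params` are made up for illustration; this is not Telegram's code.

```python
import secrets

def is_probable_prime(n: int, rounds: int = 40) -> bool:
    """Miller-Rabin probabilistic primality test."""
    if n < 2:
        return False
    # Cheap trial division first; also handles the small primes themselves.
    for sp in (2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31, 37):
        if n % sp == 0:
            return n == sp
    # Write n - 1 as d * 2^r with d odd.
    d, r = n - 1, 0
    while d % 2 == 0:
        d //= 2
        r += 1
    for _ in range(rounds):
        a = secrets.randbelow(n - 3) + 2  # random base in [2, n - 2]
        x = pow(a, d, n)
        if x in (1, n - 1):
            continue
        for _ in range(r - 1):
            x = pow(x, 2, n)
            if x == n - 1:
                break
        else:
            return False  # a is a witness that n is composite
    return True

def check_dh_params(p: int, g: int, bits: int = 2048) -> bool:
    """Accept (p, g) only if p is a safe prime of the expected size
    and g generates the subgroup of prime order q = (p - 1) / 2."""
    if p.bit_length() != bits:
        return False
    if not is_probable_prime(p):
        return False
    q = (p - 1) // 2
    if not is_probable_prime(q):
        return False
    if not 2 <= g <= p - 2:
        return False
    # q is prime and g != 1, so g^q == 1 (mod p) means g has order exactly q.
    return pow(g, q, p) == 1
```

Every one of those branches is a place where a client can get it wrong, which is the whole problem with letting the other side choose the group instead of hardcoding a known-good one.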

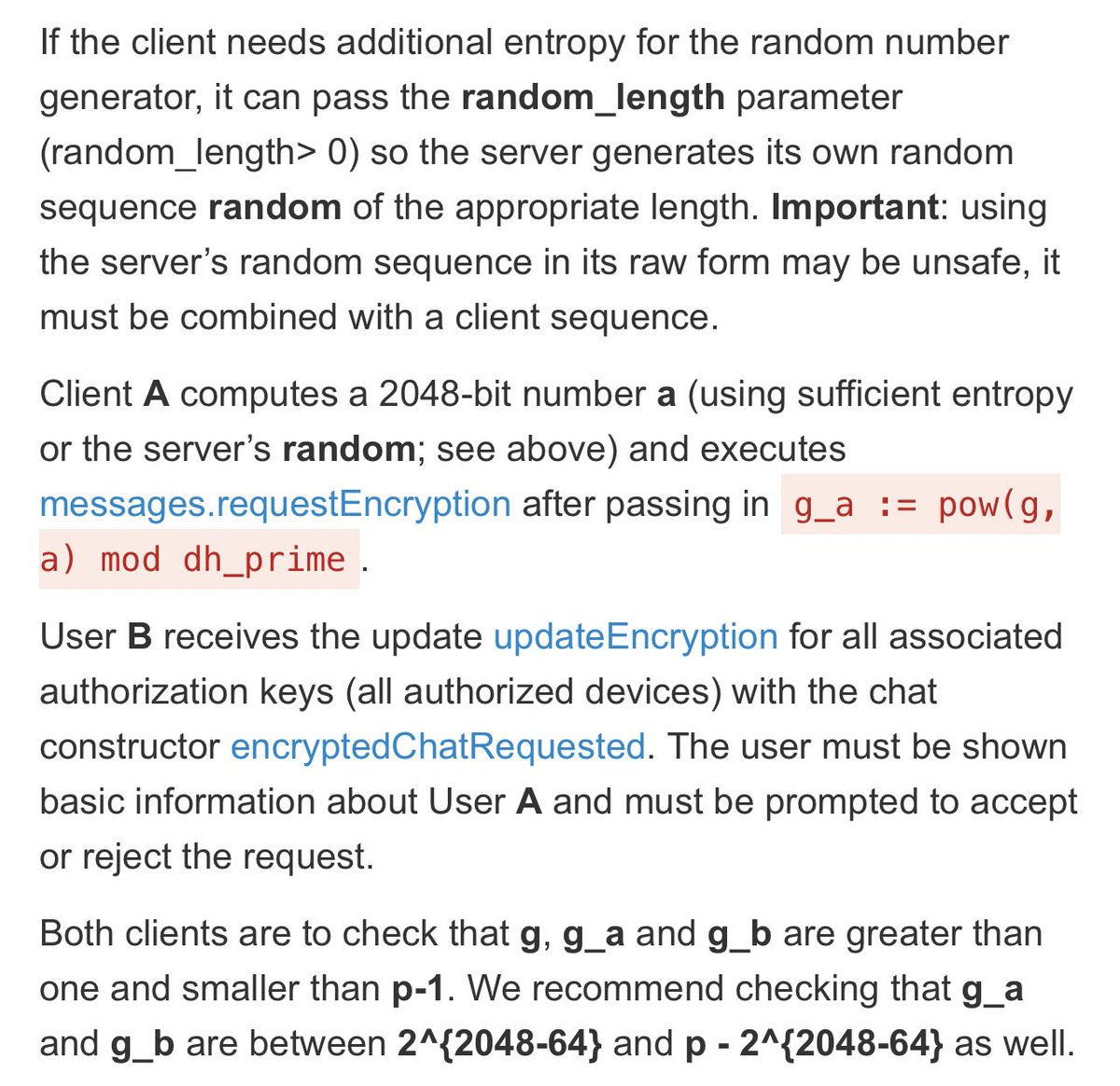

Then there’s this weird thing about the server picking randomness, which used to be a total security vulnerability since it allowed the server to pick the DH secret (now fixed, I think). And on top of that there are still more complicated group membership checks.
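A hedged Python sketch of the two ideas in play: treat server-supplied bytes only as extra input alongside local randomness, so the server can never determine the secret on its own, and range-check the peer's public value before using it. The names `derive_private_exponent` and `check_public_value`, the use of SHAKE-256, and the 64-bit margin are all my assumptions for illustration, not Telegram's actual construction.

```python
import hashlib
import secrets

def derive_private_exponent(server_random: bytes, nbytes: int = 256) -> int:
    """Derive a DH secret from local randomness plus server-supplied bytes.

    The local bytes come from the OS CSPRNG and never leave the client,
    so the server cannot predict or choose the result, whatever it sends.
    """
    local = secrets.token_bytes(nbytes)
    digest = hashlib.shake_256(local + server_random).digest(nbytes)
    return int.from_bytes(digest, "big")

def check_public_value(y: int, p: int, margin_bits: int = 64) -> bool:
    """Reject degenerate or suspiciously small/large public values.

    margin_bits is a hypothetical safety margin: y must lie in
    [2^(bits - margin), p - 2^(bits - margin)].
    """
    if not 1 < y < p - 1:
        return False  # excludes 0, 1 and p - 1, and anything out of range
    if p.bit_length() <= margin_bits:
        return False  # modulus too small for the margin to make sense
    bound = 1 << (p.bit_length() - margin_bits)
    return bound <= y <= p - bound
```

Note this range check does not prove the value is in the right subgroup; for a safe prime that needs one more modular exponentiation, `pow(y, (p - 1) // 2, p) == 1`.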

Oh god I didn’t even read that correctly but yes.

https://twitter.com/xnyhps/status/1475495456226619396

Then what should happen is some kind of key verification step, but it’s not clear that any of this happens. There are just so many opportunities for bugs in this thing.
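For reference, a key verification step usually boils down to both sides deriving a short fingerprint from the shared key and comparing it over some other channel. A generic Python sketch, assuming SHA-256 and a hex display format I picked for illustration (this is not Telegram's key visualization, and `key_fingerprint` is a hypothetical name):

```python
import hashlib
import hmac

def key_fingerprint(shared_key: bytes, groups: int = 8) -> str:
    """Return a short human-comparable fingerprint of the shared key:
    the first `groups` 4-hex-digit blocks of its SHA-256 digest."""
    digest = hashlib.sha256(shared_key).hexdigest()
    return " ".join(digest[i:i + 4] for i in range(0, groups * 4, 4))

def fingerprints_match(mine: str, theirs: str) -> bool:
    """Compare two fingerprints in constant time."""
    return hmac.compare_digest(mine.encode(), theirs.encode())
```

If the two users see different fingerprints, someone in the middle negotiated a different key with each of them.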

It’s not so unusual for new apps to do some weird stuff in v1 of their crypto. What’s unique about Telegram is how stubborn they are about keeping this stuff in, many versions later.
