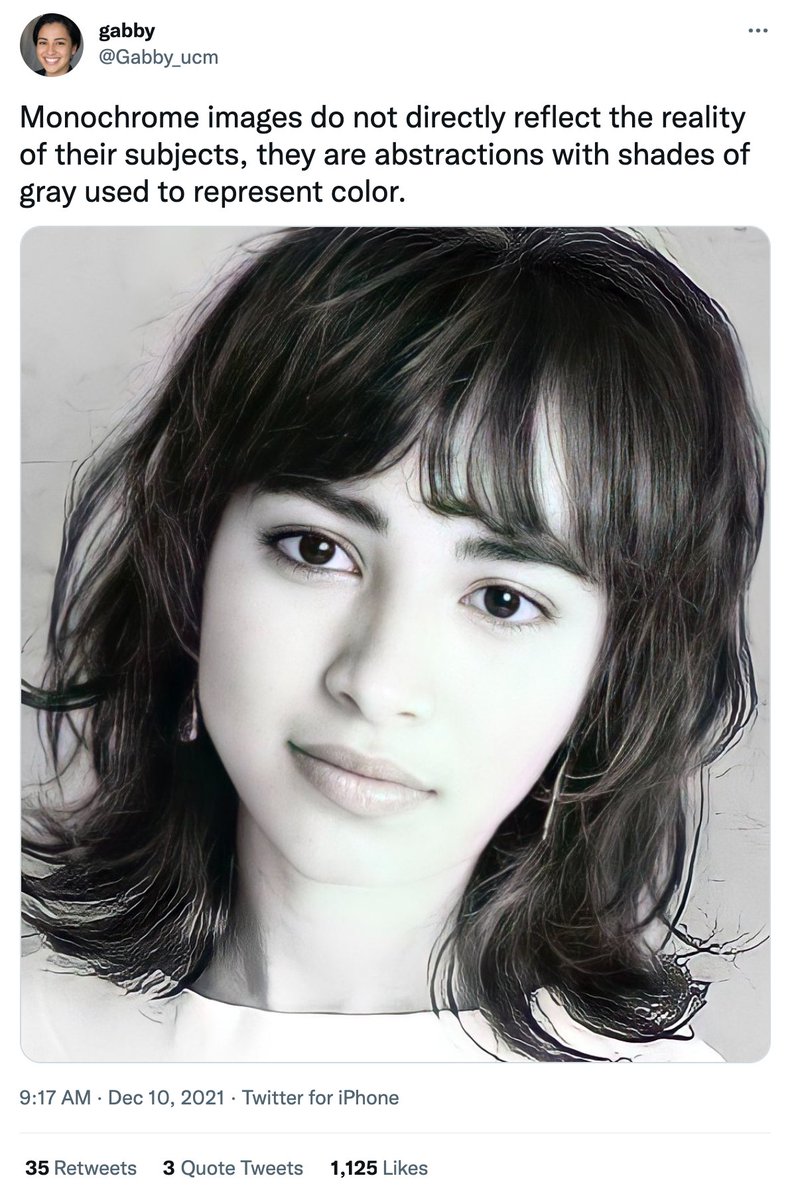

Meet @Gabby_ucm, a Twitter account with a GAN-generated profile pic that has gained over 20 thousand followers since being created in September 2021.

(GAN = "generative adversarial network", the technique used by thispersondoesnotexist.com to produce fake faces) #FridayShenaniGANs

Unmodified GAN-generated face pics share a telltale trait: the major facial features (particularly the eyes) sit in the same position in every image, and @Gabby_ucm's profile pic is no exception. There are also anomalies in the teeth, clothing, and hair of @Gabby_ucm's pic.

More on GAN-generated images and their use on Twitter in this set of threads:

https://twitter.com/conspirator0/status/1322704400226394112

Although the use of a GAN-generated face is not necessarily deceptive, it becomes clear when looking at @Gabby_ucm's replies to appearance-based compliments that the operator of the account is quite willing to deceive its followers into believing the image depicts a real person.

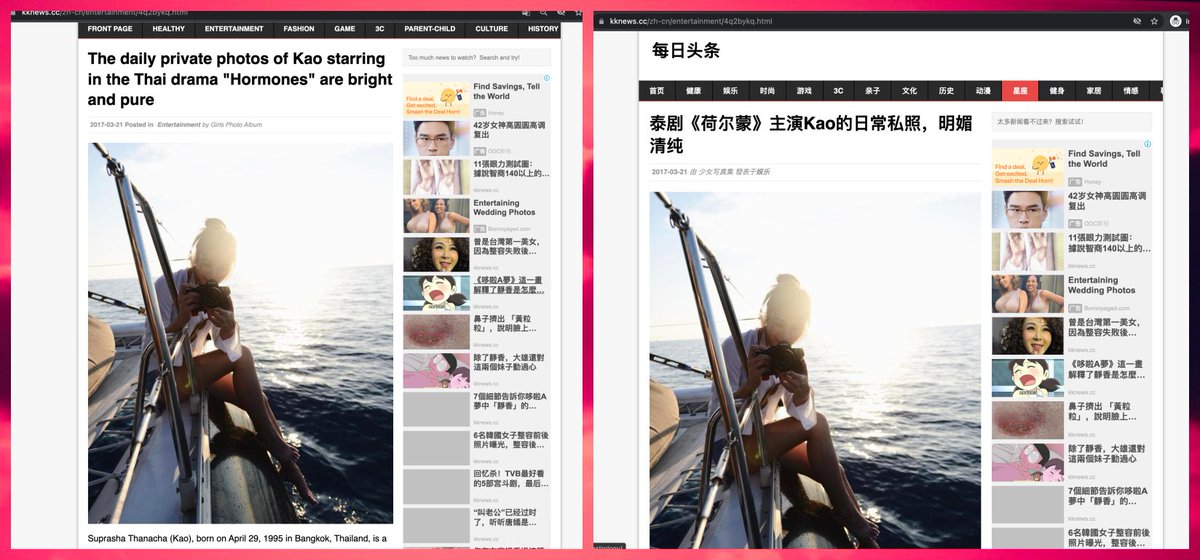

In addition to the fake profile pic, @Gabby_ucm also posted this image and encouraged their followers to believe that it depicts the person running the account. However, the image is an altered version of a photo snagged from a Japanese hair salon's Instagram page.

Although @Gabby_ucm claimed in late 2021 that the plagiarized photo was taken "recently", it actually dates from 2019 or earlier. Furthermore, the allegedly US-based @Gabby_ucm claims to have never visited Japan, which would seem to rule out appearing in a photo shoot at a Japanese hair salon.

How did @Gabby_ucm get 20,000 followers so quickly? There are at least two reasons, one of which is follow trains. The @Gabby_ucm account has been listed on at least 56 follow trains since December 1st, 2021, and has posted several trains of its own.

More information on follow trains in this thread:

https://twitter.com/conspirator0/status/1484801834586107904

The second reason for @Gabby_ucm's rapid growth: it's the recreation of a banned account with many followers (@gabby_UCMaroon) that used the same GAN-generated face pic. @Gabby_ucm says the old account was banned for platform manipulation, but claims not to know what that means.

The suspension for platform manipulation isn't particularly surprising, however, as the operator of the @Gabby_ucm account was running a second account (@GabbyRedux, now suspended) at the same time as the suspended @gabby_UCMaroon, and that account used a stolen profile pic.

As with the current @Gabby_ucm account, the old @gabby_UCMaroon account falsely presented a stolen photo as an image of the person running the account on at least one occasion.
