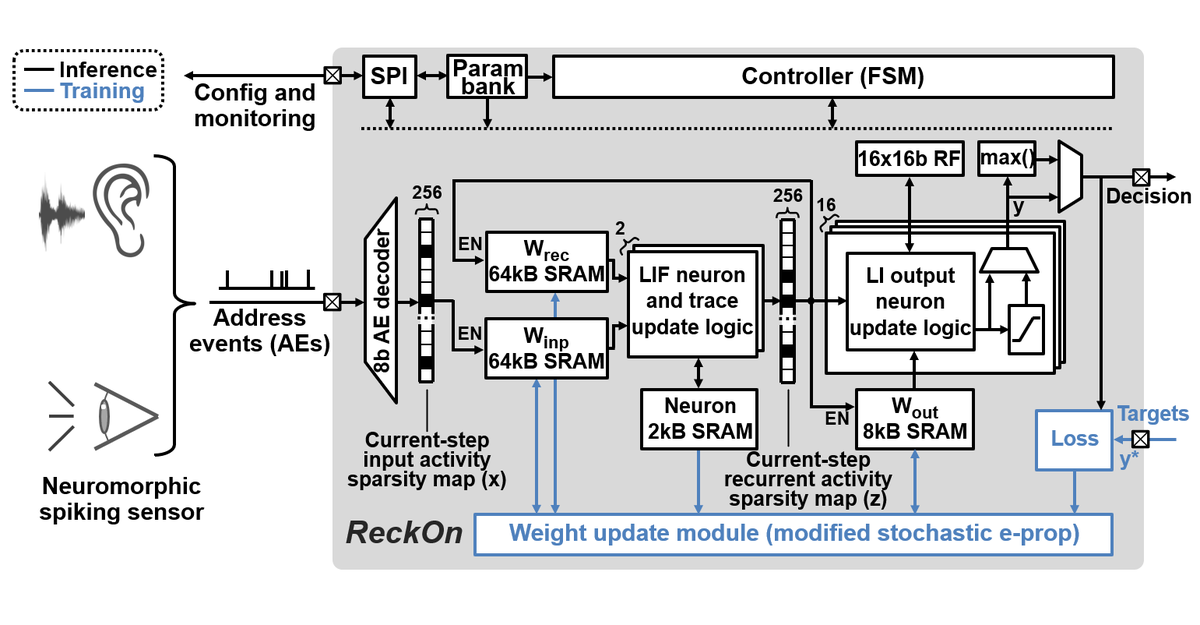

[1/14] Excited to share that our neuromorphic chip ReckOn made it to #ISSCC! With this spiking RNN processor, we demonstrate for the first time end-to-end on-chip learning over second-long timescales.

Tune in to Session 29 on Feb 24 9am PST for the live Q&A... or see 👇 for a 🧵

[2/14] First, why on-chip learning? For continuous adaptation to new/uncontrolled user and environment features, evolving task requirements, and privacy. However, no end-to-end solution has been proposed to date for on-chip learning with dynamic stimuli such as speech/video.

[3/14] Indeed, backpropagation through time (BPTT) requires unrolling the network in time during training. Learning second-long stimuli while keeping a millisecond resolution would thus lead to intractable memory and latency requirements for tiny silicon devices at the edge.
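To make the memory argument concrete, here is a rough back-of-the-envelope sketch. The network size (256 neurons) and state width (16 bits) are hypothetical numbers picked for illustration, not ReckOn's actual parameters; the point is only that unrolling multiplies state storage by the number of time steps.

```python
def bptt_state_memory_bits(duration_s, dt_s, n_neurons, bits_per_state):
    """State storage if every time step must be kept for the backward pass."""
    n_steps = round(duration_s / dt_s)
    return n_steps * n_neurons * bits_per_state


def online_state_memory_bits(n_neurons, bits_per_state):
    """State storage if only the current time step is kept (no unrolling)."""
    return n_neurons * bits_per_state


# Hypothetical example: 1 s stimulus, 1 ms resolution, 256 neurons, 16-bit states.
unrolled = bptt_state_memory_bits(1.0, 1e-3, 256, 16)
online = online_state_memory_bits(256, 16)
print(unrolled, online, unrolled // online)
```

Under these assumptions the unrolled version needs 1000x the state storage of a forward-only scheme, before even counting the latency of the backward pass.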

[4/14] The e-prop algorithm [@BellecGuill, 2020] approximates BPTT gradients as the product of two terms: bio-plausible eligibility traces (ETs) computed during the forward pass, and error-dependent learning signals available locally in time. No more network unrolling in time!
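As a minimal sketch of that product structure for plain LIF neurons (simplified from the e-prop paper, not the exact equations implemented on ReckOn): the eligibility trace of a synapse is taken as the postsynaptic pseudo-derivative times a low-pass-filtered presynaptic spike train, and the gradient accumulates learning signal times eligibility trace at every step. The function name, argument layout and time-indexing convention are assumptions made for illustration, and the output-side filtering used in the paper is omitted.

```python
def eprop_weight_gradient(pre_spikes, pseudo_derivs, learning_signals, alpha):
    """Accumulate an e-prop-style gradient online, with no unrolling in time.

    pre_spikes[t][i]       : spike (0/1) of presynaptic neuron i at step t
    pseudo_derivs[t][j]    : surrogate derivative of postsynaptic neuron j
    learning_signals[t][j] : error-dependent learning signal for neuron j
    alpha                  : leak factor used to filter presynaptic spikes
    """
    n_pre = len(pre_spikes[0])
    n_post = len(pseudo_derivs[0])
    filtered_pre = [0.0] * n_pre                      # one trace per neuron
    grad = [[0.0] * n_pre for _ in range(n_post)]     # grad[j][i] for weight i -> j

    for z_t, psi_t, l_t in zip(pre_spikes, pseudo_derivs, learning_signals):
        # Forward-pass quantity: low-pass filter of the presynaptic spikes.
        filtered_pre = [alpha * zb + z for zb, z in zip(filtered_pre, z_t)]
        for j in range(n_post):
            for i in range(n_pre):
                eligibility = psi_t[j] * filtered_pre[i]   # local to the synapse
                grad[j][i] += l_t[j] * eligibility         # local in time
    return grad


# Two presynaptic neurons, one postsynaptic neuron, two time steps.
g = eprop_weight_gradient(
    pre_spikes=[[1, 0], [0, 0]],
    pseudo_derivs=[[0.5], [1.0]],
    learning_signals=[[2.0], [1.0]],
    alpha=0.9,
)
print(g)
```

Everything inside the loop depends only on the current time step, which is what removes the need to store the network history.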

[5/14] However, we have a dilemma. Networks of leaky integrate-and-fire (LIF) neurons with bio-plausible leak time constants have simple ETs but cannot learn long temporal dependencies. Adaptive LIF (ALIF) neurons are more powerful but ET computation would be intractable on-chip.

[6/14] To solve this challenge, we propose to use a LIF neuron model with a leakage time constant configurable up to seconds, at the expense of bio-plausibility. We show that this enables LIF networks to learn long temporal dependencies while retaining a simple ET formulation.
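To illustrate why the leakage time constant matters, here is a small floating-point sketch of a discrete-time LIF neuron. The reset-by-subtraction rule, the threshold value and the 20 ms "bio-plausible" time constant are assumptions for illustration; the chip's actual fixed-point implementation is not described in this thread.

```python
import math


def leak_factor(dt_s, tau_s):
    """Per-step decay factor alpha = exp(-dt / tau) of a discrete-time LIF neuron."""
    return math.exp(-dt_s / tau_s)


def lif_step(v, input_current, alpha, threshold=1.0):
    """One LIF update: leak, integrate, then spike and reset by subtraction."""
    v = alpha * v + input_current
    spike = v >= threshold
    if spike:
        v -= threshold
    return v, spike


def retained_fraction(duration_s, dt_s, tau_s):
    """Fraction of a membrane-potential contribution left after `duration_s`."""
    n_steps = round(duration_s / dt_s)
    return leak_factor(dt_s, tau_s) ** n_steps


# How much of an input is still "remembered" 1 s later, at 1 ms resolution?
short_tau = retained_fraction(1.0, 1e-3, 20e-3)  # assumed bio-plausible 20 ms leak
long_tau = retained_fraction(1.0, 1e-3, 1.0)     # leak configured to 1 s
print(short_tau, long_tau)
```

With the short time constant essentially nothing survives for a second, while a second-long time constant keeps roughly a third of the contribution, which is the kind of memory a long temporal dependency needs.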

[7/14] We then modify the e-prop equations so that ET storage requirements scale with the number of neurons, instead of the number of synapses. This enables our approach to learn second-long dependencies with a memory overhead of only 0.8% compared to the inference-only network!
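The thread does not spell out the modified equations, so the following only illustrates the scaling argument, not the actual ReckOn formulation: keeping one trace per synapse grows with the product of layer sizes, while keeping one trace per neuron grows with their sum. The layer sizes below are hypothetical.

```python
def per_synapse_trace_count(n_inputs, n_neurons):
    """One stored trace per input and per recurrent synapse."""
    return n_inputs * n_neurons + n_neurons * n_neurons


def per_neuron_trace_count(n_inputs, n_neurons):
    """One stored trace per input neuron and per recurrent neuron."""
    return n_inputs + n_neurons


# Hypothetical layer sizes: 100 inputs feeding 256 recurrent neurons.
print(per_synapse_trace_count(100, 256))  # 91136 traces
print(per_neuron_trace_count(100, 256))   # 356 traces
```

Since the weights themselves already scale with the number of synapses, per-neuron traces add only a small fraction on top of the inference memory, which is the regime the 0.8% figure refers to.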

[8/14] We also leverage a nice property of spike-based computation, beyond the usual exploitation of sparse event-driven processing. Just as in the brain, spikes are sensor-agnostic. As our e-prop-based training scheme is also code-agnostic, we have a task-agnostic learning chip!

[9/14] To demonstrate this, we chose three benchmarks associated with vision, audition and navigation, whose durations range from half a second to several seconds. ReckOn learned to perform all of these tasks on-chip and from scratch (no access to external memory, no pre-training).

[10/14] ReckOn was fabricated in 28nm CMOS with a core area of 0.45mm². By scaling the supply voltage down to 0.5V, we show for these tasks a worst-case power budget of:

- 20µW for real-time inference,

- 46µW for real-time learning.

This is suitable for an always-on deployment at the edge!

[11/14] Compared to prior work embedding end-to-end on-chip learning (both spiking & non-spiking), ReckOn has the following unique features:

- multi-layer learning over second-long timescales,

- yet the lowest area and highest synaptic density (with and w/o tech. normalization).

[12/14] With our vision, audition and navigation benchmarks, we demonstrate task-agnostic learning at 1- to 5-ms temporal resolutions, with a competitive energy/step at 0.5V. Previous demonstrations of on-chip learning focused on image classification or instantaneous decisions.

[13/14] To sum up, we have 3 claims:

- end-to-end from-scratch learning over long timescales at a millisecond temporal resolution,

- low-cost solution (0.45mm² area, <50µW for real-time learning) thanks to a 0.8% memory overhead vs inference,

- spikes for task-agnostic learning.

[14/14] The Verilog code and doc of ReckOn are open-source, a first release has been made here:

github.com/ChFrenkel/Reck…

A testbench is provided and FPGA prototyping is straightforward.

Will post the paper link as soon as it is on IEEE Xplore (feel free to DM me for earlier access :) ).
