How far can we get by training Text-to-SQL models without any annotated SQL? Pretty far, it seems!

Our new work in Findings of #NAACL2022 with @JonathanBerant and Daniel Deutch

Paper: arxiv.org/abs/2112.06311

Code: github.com/tomerwolgithub…

🧵 1/5

Text-to-SQL models should help non-experts easily query databases. But annotating examples to train them requires expertise (labeling NL utterances with SQL queries).

Can we train good enough models without any expert annotations?

2/5

Instead of gold SQL, we train text-to-SQL models on weak supervision: (1) answers & (2) question decompositions (annotated / predicted by a model) ⛏️

3/5

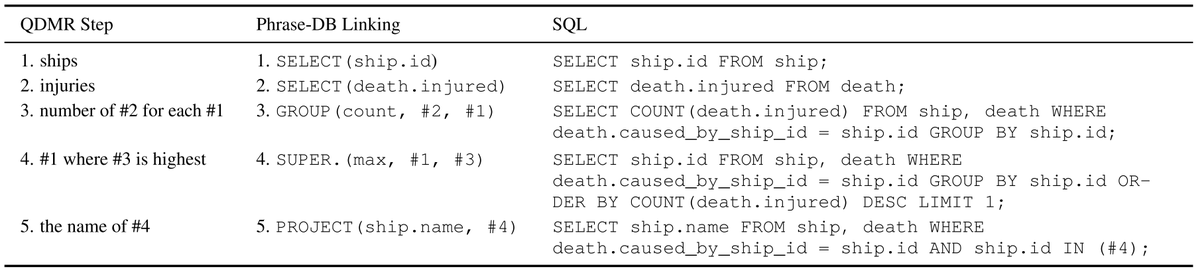
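To make the weak supervision concrete, here is a minimal Python sketch under stated assumptions. A training example carries the question, its decomposition and the answer, but no SQL, and a candidate query is treated as correct when executing it on the database reproduces the answer. The toy `city` table, the `example` dict and the helper `execution_matches` are hypothetical. Rows are compared as unordered multisets, which is a simplifying assumption here rather than the paper's exact check.

```python
import sqlite3
from collections import Counter


def execution_matches(conn, sql, gold_answer):
    """Return True if running `sql` yields the same multiset of rows as `gold_answer`."""
    try:
        rows = conn.execute(sql).fetchall()
    except sqlite3.Error:
        return False  # candidate does not even execute against this schema
    # Order-insensitive comparison (simplifying assumption for this sketch).
    return Counter(rows) == Counter(gold_answer)


# Hypothetical toy database, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE city (name TEXT, country TEXT, population INTEGER)")
conn.executemany(
    "INSERT INTO city VALUES (?, ?, ?)",
    [("Paris", "France", 2100000), ("Lyon", "France", 520000), ("Berlin", "Germany", 3600000)],
)

# Hypothetical weakly supervised example: question + decomposition + answer, no gold SQL.
example = {
    "question": "Which cities in France have more than one million people?",
    "decomposition": [
        "return cities",
        "return #1 in France",
        "return #2 with population more than one million",
    ],
    "answer": [("Paris",)],
}

# Candidate queries are kept only if their execution result matches the answer.
candidates = [
    "SELECT name FROM city WHERE country = 'France'",
    "SELECT name FROM city WHERE country = 'France' AND population > 1000000",
    "SELECT nme FROM city",  # broken candidate: unknown column
]
kept = [sql for sql in candidates if execution_matches(conn, sql, example["answer"])]
print(kept)
```

Only the second candidate survives: the first also returns Lyon, and the third fails to execute.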

Given the database + question decomposition + answer, we automatically synthesize a corresponding SQL query. The decomposition maps to SQL quite naturally through a set of mapping rules.

4/5
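A toy sketch of what rule-based mapping from a decomposition to SQL can look like, with one rule per decomposition operator. It assumes each step has already been grounded to schema columns and written as a hypothetical `(operator, *args)` tuple, where `#k` refers to the result of step k. The operator names, the `city` table and `decomposition_to_sql` are illustrative only, not the rules from the paper or its repository, and the answer-based check of candidate queries is left out.

```python
from dataclasses import dataclass, field


@dataclass
class Query:
    """Partial SQL query accumulated while walking the decomposition steps."""
    select: str = ""
    table: str = ""
    where: list = field(default_factory=list)

    def to_sql(self):
        sql = f"SELECT {self.select} FROM {self.table}"
        if self.where:
            sql += " WHERE " + " AND ".join(self.where)
        return sql


def decomposition_to_sql(steps):
    """Compile grounded (hypothetical) decomposition steps into SQL, one rule per operator."""
    results = []  # results[k - 1] holds the Query produced by step k
    for op, *args in steps:
        if op == "select":  # "return <column>" -> SELECT <column> FROM <table>
            table = args[0].split(".")[0]
            q = Query(select=args[0], table=table)
        elif op == "filter":  # "return #k <condition>" -> add a WHERE condition
            ref, condition = args
            prev = results[int(ref[1:]) - 1]
            q = Query(prev.select, prev.table, prev.where + [condition])
        elif op == "aggregate":  # "return the <agg> of #k" -> wrap the selected column
            agg, ref = args
            prev = results[int(ref[1:]) - 1]
            q = Query(f"{agg.upper()}({prev.select})", prev.table, list(prev.where))
        else:
            raise ValueError(f"no mapping rule for operator {op!r}")
        results.append(q)
    return results[-1].to_sql()  # the last step answers the question


# Hypothetical grounded decomposition of
# "Which cities in France have more than one million people?"
steps = [
    ("select", "city.name"),
    ("filter", "#1", "city.country = 'France'"),
    ("filter", "#2", "city.population > 1000000"),
]
print(decomposition_to_sql(steps))
# SELECT city.name FROM city WHERE city.country = 'France' AND city.population > 1000000
```

Keeping one rule per operator makes the mapping easy to extend in this sketch: supporting another operator (e.g. superlatives or sorting) would mean adding a branch rather than changing the existing ones.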

We test on five text-to-SQL benchmarks:

(1) Weakly supervised models reach ~94% of the performance of models trained on gold SQL

(2) Even models trained on few / zero in-domain decompositions still reach ~90% of the performance of the gold SQL ones

More results in our paper! 📃⚒️

👉🏽arxiv.org/pdf/2112.06311…

5/5