🌸 Training of the @BigScienceLLM BLOOM 176B-parameter model has just passed 230B tokens: that’s more than a million books in two months!

🤔 But how did we decide what model to train with our one million GPU hours?

⬇️ Thread time! #acl2022nlp

🏅 We had five main considerations: it needed to be proven, scalable, efficient, multilingual, and to exhibit emergent capabilities (e.g. zero-shot generalization)

⏰ At the >100B scale, every inefficiency matters! We can’t afford an unoptimized setup…

🤗 Thanks to a generous grant from @Genci_fr on #JeanZay, we had plenty of compute to benchmark our dream architecture.

📈 We ran our experiments with 1.3B models, pretraining on 100-300B tokens, to increase the likelihood our findings would transfer to the final >100B model.

📊 We focused on measuring zero-shot generalization with the EleutherAI LM harness, capturing performance across 27 diverse tasks. We also kept an eye on training speed.

🙅 No finetuning, because it would be difficult to maintain many sets of finetuned weights for the 176B model!

🧱 We based our initial setup on the popular GPT-3 architecture (arxiv.org/abs/2005.14165), knowing it scales well and that it achieves excellent zero-shot performance.

🤔 Why not a BERT or a T5? That’s an excellent question we also answered, in a different paper!

👀 As a teaser for now: arxiv.org/abs/2204.05832

🧪 We found causal decoder-only models to be best at zero-shot immediately after pretraining, and that it’s possible to adapt them efficiently into non-causal models to also get good performance on multitask finetuning (e.g. T0).

💾 Onto our benchmark: first, the influence of pretraining data. We trained three models for 112B tokens on OSCAR, C4, and The Pile.

➡️ Proper filtering and the addition of cross-domain high-quality data improve zero-shot generalization! Scale can't compensate for bad data...

🧮 Then, we moved on to the recent hot topic of positional embeddings: we compared no embeddings, learned, rotary, and ALiBi.

➡️ We find that ALiBi positional embeddings significantly outperform the other embeddings (and, surprisingly, not using any embedding isn’t that bad!)
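
🧩 For readers unfamiliar with ALiBi: as described in the ALiBi paper, it adds no position vectors to the token embeddings. Instead, each attention head adds a linear penalty to its attention scores that grows with the query-key distance. Below is a minimal pure-Python sketch, assuming a power-of-two number of heads. The function names are hypothetical, and this is an illustration, not the code used to train the 176B model.

```python
def alibi_slopes(num_heads):
    """Per-head slopes: a geometric sequence starting at 2^(-8/n).

    Assumes num_heads is a power of two (the simple case in the ALiBi paper).
    """
    start = 2 ** (-8 / num_heads)
    return [start ** (h + 1) for h in range(num_heads)]


def alibi_bias(num_heads, seq_len):
    """Bias added to attention scores, indexed as [head][query][key].

    Past keys get -slope * distance. Future keys get -inf, which folds
    the usual causal mask into the same tensor for convenience.
    """
    bias = []
    for slope in alibi_slopes(num_heads):
        head = []
        for i in range(seq_len):  # query position
            row = []
            for j in range(seq_len):  # key position
                row.append(-slope * (i - j) if j <= i else float("-inf"))
            head.append(row)
        bias.append(head)
    return bias


if __name__ == "__main__":
    # Head 0 penalizes distance most strongly; the last head barely does.
    for row in alibi_bias(num_heads=8, seq_len=4)[0]:
        print(row)
```

The bias is added to the query-key scores before the softmax, so nearby tokens are favored without any learned positional parameters.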

⛩️ We also looked at activation functions, and found that Gated Linear Units provide a small improvement.

⚠️ However, SwiGLU was also 30% slower! It's likely because of a problem in our setup, but that made us steer away from it for the 176B model.
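
🧩 For context, here is a minimal sketch of a SwiGLU feed-forward block, following the commonly cited formulation (Swish(xW) ⊗ xV) W2 from the GLU-variants literature. The thread does not spell out the exact variant or implementation that was benchmarked, so the function and parameter names here are assumptions for illustration only.

```python
import math


def swish(z):
    """Swish / SiLU activation: z * sigmoid(z)."""
    return z / (1.0 + math.exp(-z))


def matvec(x, weights):
    """Multiply a vector x (length d_in) by a d_in x d_out matrix."""
    d_out = len(weights[0])
    return [sum(x[i] * weights[i][j] for i in range(len(x))) for j in range(d_out)]


def swiglu_ffn(x, w_gate, w_up, w_down):
    """Gated feed-forward block: (swish(x @ w_gate) * (x @ w_up)) @ w_down."""
    gate = [swish(g) for g in matvec(x, w_gate)]
    up = matvec(x, w_up)
    hidden = [g * u for g, u in zip(gate, up)]  # element-wise gating
    return matvec(hidden, w_down)


if __name__ == "__main__":
    identity = [[1.0, 0.0], [0.0, 1.0]]
    print(swiglu_ffn([1.0, -1.0], identity, identity, identity))
```

Note that a gated block uses three weight matrices where a standard feed-forward block uses two.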

🔬 One last architectural choice: we investigated the addition of a layer normalization after the embedding.

🤔 This enhances training stability, but comes at a notable cost for zero-shot generalization.
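
🧩 To make the idea concrete, here is a minimal sketch of "layer normalization after the embedding": each token's embedding vector is normalized to zero mean and unit variance before entering the first transformer layer. The helper names and the tiny embedding table are hypothetical, and the learned gain and bias are left out for brevity.

```python
import math


def layer_norm(vec, eps=1e-5):
    """Normalize one vector to zero mean and (roughly) unit variance."""
    n = len(vec)
    mean = sum(vec) / n
    var = sum((v - mean) ** 2 for v in vec) / n
    return [(v - mean) / math.sqrt(var + eps) for v in vec]


def embed_with_layer_norm(token_ids, table):
    """Look up each token's embedding, then apply layer norm to it."""
    return [layer_norm(table[t]) for t in token_ids]


if __name__ == "__main__":
    # Two rows with very different scales end up almost identical.
    table = [[1.0, 2.0, 3.0], [10.0, 20.0, 30.0]]
    for vec in embed_with_layer_norm([0, 1], table):
        print(vec)
```

Because the normalization removes differences in scale between embedding rows, the first layer always sees inputs of a similar magnitude.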

🗺️ We also considered multilinguality, training a 1.3B model on 11 languages.

😥 Multilinguality comes at the expense of English-only performance, reducing it significantly. Our results are in line with the findings of XGLM, and are also verified on the final model (stay tuned!)

🚀 We wrapped up all of these findings into the final @BigScience 176B model. If you want to learn more about how we decided on its size & shape, check out our previous thread:

https://twitter.com/slippylolo/status/1502274161326141443

⤵️ If you are interested in all the nitty gritty details: openreview.net/forum?id=rI7BL…

👨‍🏫 If you are at #acl2022nlp, our poster: underline.io/events/284/ses…

🌸 And check out the BigScience workshop on Friday for more open LLM goodness: bigscience.huggingface.co/acl-2022

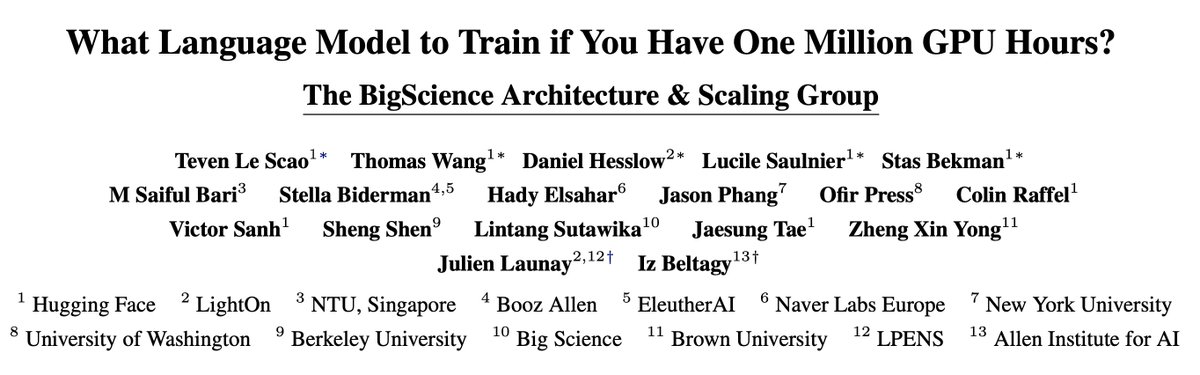

🙏 And thanks to everyone involved: @Fluke_Ellington @thomas_wang21 @DanielHesslow @LucileSaulnier @StasBekman @sbmaruf @BlancheMinerva @hadyelsahar @zhansheng @OfirPress @colinraffel @SanhEstPasMoi @shengs1123 @lintangsutawika @jaesungtae @yong_zhengxin @i_beltagy
