There’s a very real possibility local dev may be dead in 10 years.

- @isamlambert “Planetscale doesnt believe in localhost”

- @ericsimons40 Stackblitz runs Node fast in the browser

- @github runs entirely on Codespaces

This would be the biggest shift in dev workflow since git.

- @isamlambert “Planetscale doesnt believe in localhost”

- @ericsimons40 Stackblitz runs Node fast in the browser

- @github runs entirely on Codespaces

This would be the biggest shift in dev workflow since git.

writing about “The Death of Localhost” in my next @DXTipsHQ piece, pls offer any relevant data/notable technologies. will acknowledge in writeup!

dx.tips

dx.tips

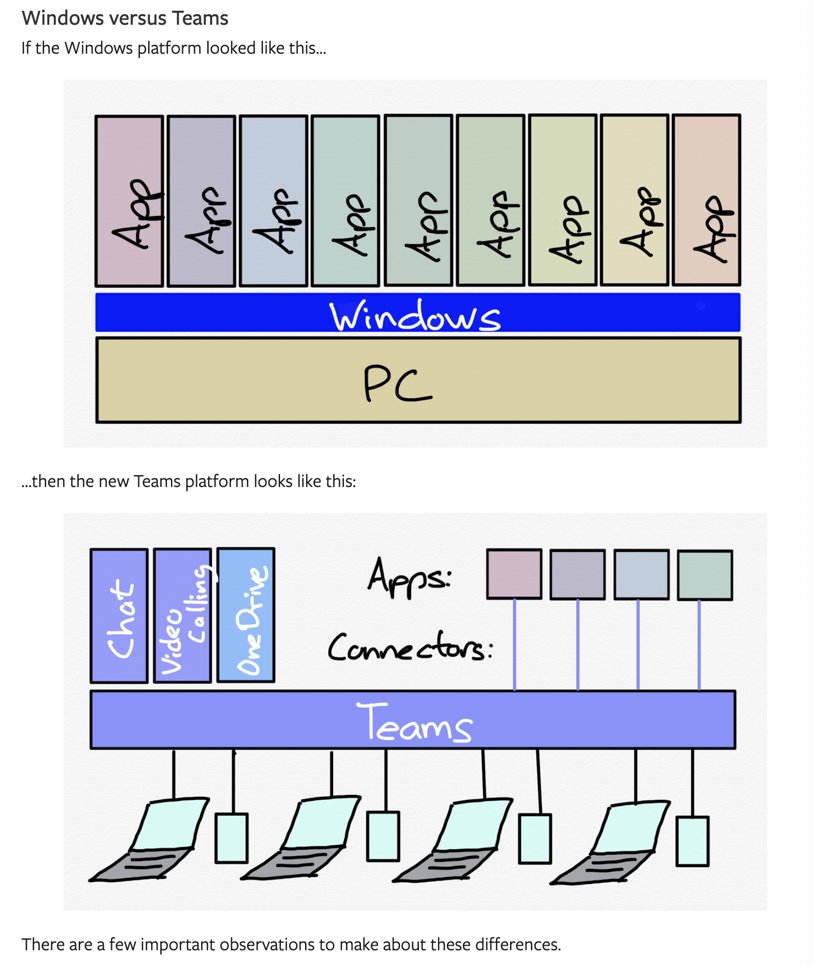

only 2 ways to make money in dev infra

bundling and unbundling the mainframe

bundling and unbundling the mainframe

https://twitter.com/nitzdev/status/1533914008415358978

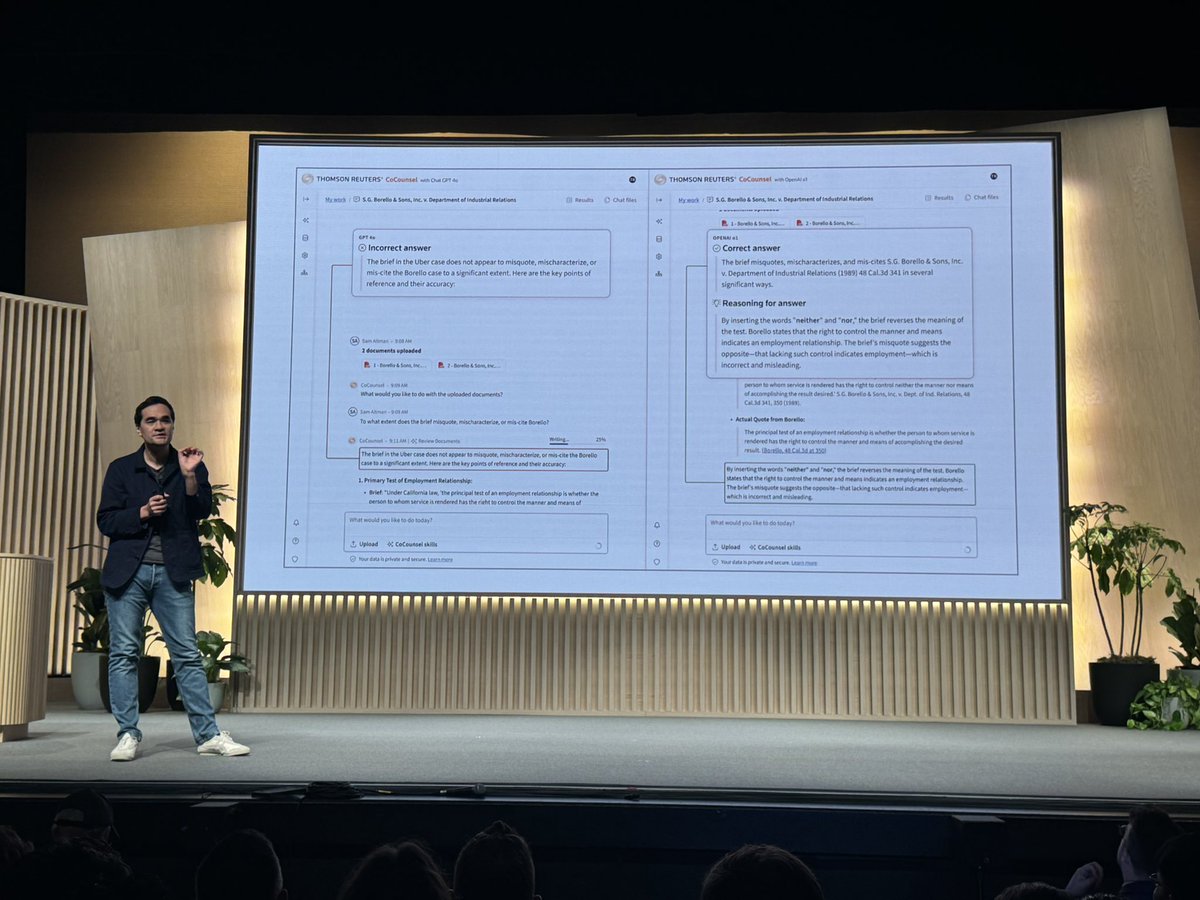

Here is @benthompson writing about @satyanadella's Build keynote:

"the thin client model has won"

stratechery.com/2022/thin-plat…

Perfect for the big move from a Windows-centric to Azure-centric Microsoft.

I wonder if this was planned since the Dave Cutler era

"the thin client model has won"

stratechery.com/2022/thin-plat…

Perfect for the big move from a Windows-centric to Azure-centric Microsoft.

I wonder if this was planned since the Dave Cutler era

https://twitter.com/swyx/status/1241537049955622913

@isamlambert @ericsimons40 @github This is live!

big thanks to everyone that contributed opinions and datapoints (shoutouts in the article)! I ended up compiling a nice big list of all the bigco cloud dev environments, and collecting all the arguments for/against

https://twitter.com/DXTipsHQ/status/1534572199557836800?s=20&t=WzHnQm7YIUCd0C9vYv2zVw

big thanks to everyone that contributed opinions and datapoints (shoutouts in the article)! I ended up compiling a nice big list of all the bigco cloud dev environments, and collecting all the arguments for/against

I've updated this post with great takes from this fantastic thread from Kelsey!

In particular like the "working around red tape" theory. So much of cloud service growth is secretly shadow IT.

https://twitter.com/kelseyhightower/status/1534723549830602753?s=20&t=Letc3ZvaBUjOCFmmuKllBQ

In particular like the "working around red tape" theory. So much of cloud service growth is secretly shadow IT.

Some survey data and interesting responses (see the Etsy one) in this poll!

https://twitter.com/simonw/status/1535298667854278657?s=20&t=Y4n45SWd6Bd06Ah0-Jx1Cg

"in the long run, fully utilizing the cloud will make engineers more productive. Imagine taking a large test suite and running 1000 tests in parallel on a FaaS platform"

https://twitter.com/bernhardsson/status/1551241460749668355?s=20&t=_uTbhbnr1v8Xwo7x2yk0Yw

Google launched Cloud Workstations at this week's #GoogleCloudNext

siliconangle.com/2022/10/11/goo…

this is basically the entire End of Localhost thesis, fully supported as step 1 of GCP’s solution to the software supply chain problem

(great recap on the GCP pod with @forrestbrazeal)

siliconangle.com/2022/10/11/goo…

this is basically the entire End of Localhost thesis, fully supported as step 1 of GCP’s solution to the software supply chain problem

(great recap on the GCP pod with @forrestbrazeal)

$25m series a?! in this economy?!

wonderful meetup event tonight. can feel the energy building and love @jolandgraf and @svenefftinge’s incredible passion for solving this problem. super encouraged that they really get how important it is to make dev environments *ephemeral*

wonderful meetup event tonight. can feel the energy building and love @jolandgraf and @svenefftinge’s incredible passion for solving this problem. super encouraged that they really get how important it is to make dev environments *ephemeral*

https://twitter.com/gitpod/status/1588176331065335808

• • •

Missing some Tweet in this thread? You can try to

force a refresh