Getting a lot of interest from people wanting to hear "@props, how did you do that?" re: @Waxbones' @Prometheus_Lab real-time random trait rolling and on-the-fly generation. So here we go 👇

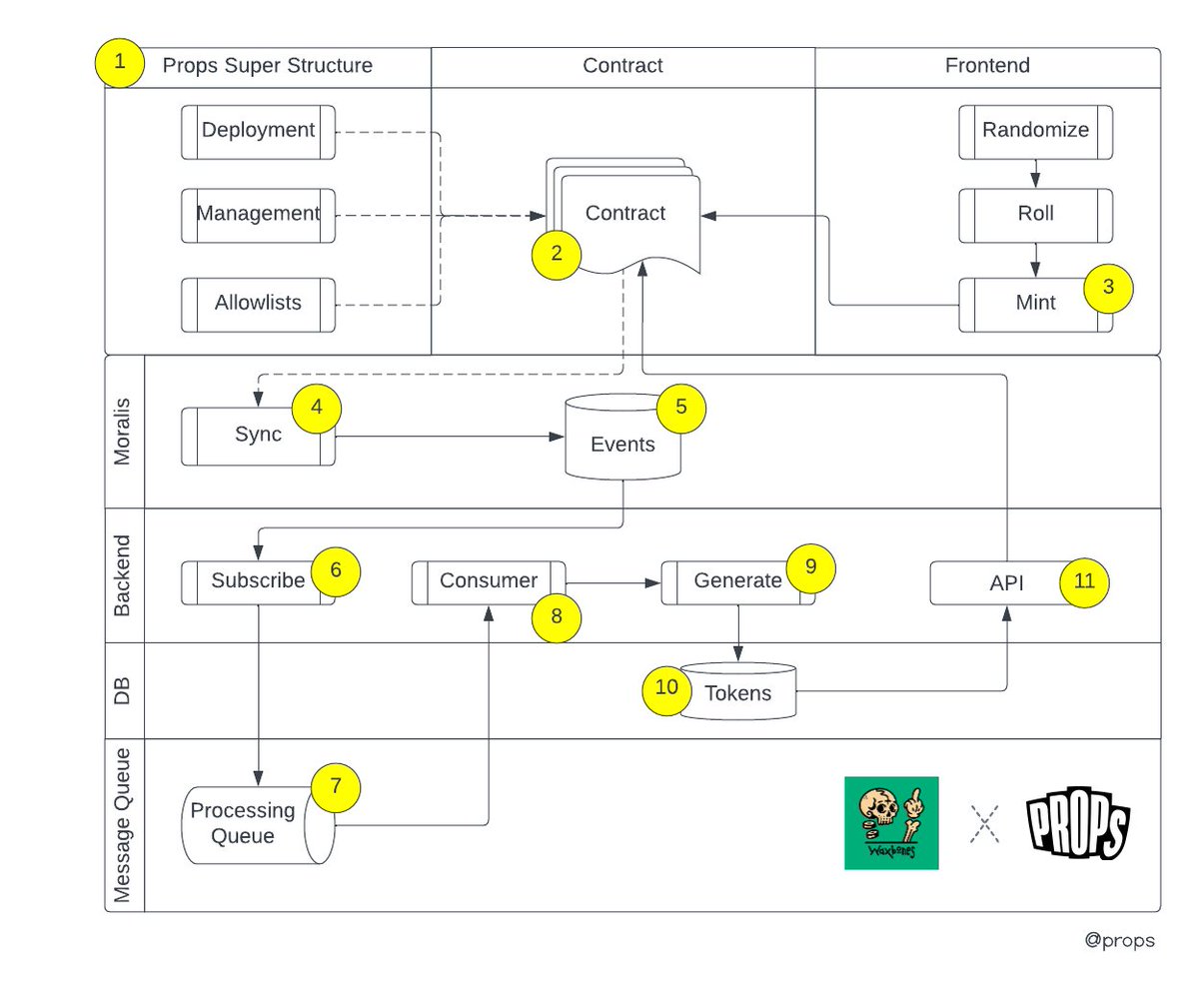

I'll be referencing this high-level diagram to unpack each step. Some of the jargon may get technical, but I'll try to keep it simple (comment with any questions!):

1/11: @props Super Structure:

We recently deployed our upgradable on-chain structure that provides us the ability to deploy contracts (via proxy) and then manage them through a powerful admin tool (not public, yet?).

The Props Platform has allowed us to start modularizing many of our capabilities w/ one-click deployment (721/A, 1155, Comics, Albums, 10Ks, etc.) and 50-100x gas savings on contract creation.

It provides an on-chain access control layer so *many* people on a team with different roles can maintain a project (which can have many contracts) rather than just one dev with a single wallet using a point-solution to manage a single contract. 💪
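To make the "many people, different roles" idea concrete, here's a minimal sketch of per-project role checks. It's modeled in TypeScript rather than on-chain, and the role names and the `ProjectAccess` class are hypothetical: the actual Props access registry isn't public.

```typescript
// Hypothetical roles; the real Props access registry is not public.
type Role = "ADMIN" | "MINTER" | "METADATA";

class ProjectAccess {
  // wallet address (lowercased) -> roles granted on this project
  private grants = new Map<string, Set<Role>>();

  constructor(owner: string) {
    this.grants.set(owner.toLowerCase(), new Set<Role>(["ADMIN"]));
  }

  hasRole(wallet: string, role: Role): boolean {
    return this.grants.get(wallet.toLowerCase())?.has(role) ?? false;
  }

  // Only an ADMIN can hand out roles to other team members.
  grant(caller: string, wallet: string, role: Role): void {
    if (!this.hasRole(caller, "ADMIN")) {
      throw new Error(`${caller} is not an admin on this project`);
    }
    const key = wallet.toLowerCase();
    const roles = this.grants.get(key) ?? new Set<Role>();
    roles.add(role);
    this.grants.set(key, roles);
  }
}
```

The point of the design is that permissions live with the project, not with one dev's wallet, so adding a teammate is a `grant` call instead of sharing a private key.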

***We're currently running Props in "Pixar Mode"***

I.e., like Pixar, we've built amazing, sick tech, but for now we're using it to incubate projects internally. We're kicking around some ideas for the future, however.

The Props Platform also has (imho) the most flexible and powerful allowlist logic on the planet, providing us an ability to have multiple allowlists mint simultaneously and for individuals to mint their allocations (w/ variable pricing) across those lists in one single cart.
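Here's a rough sketch of what "one cart across multiple allowlists" could mean: each line in the cart names a list, gets checked against the wallet's allocation on that list, and is priced at that list's rate. The data shapes, the gwei pricing and the function name are all assumptions for illustration, not the Props contract logic.

```typescript
// Hypothetical shapes; the real Props allowlist format is not public.
// Prices are in gwei so they stay well inside Number's safe-integer range.
interface Allowlist {
  id: string;
  priceGwei: number;                // price per token on this list
  allocations: Map<string, number>; // wallet -> max tokens on this list
}

interface CartLine {
  listId: string;
  quantity: number;
}

// Check every cart line against the wallet's allocation on that list,
// then return the total price for the whole cart.
function cartTotalGwei(
  wallet: string,
  lists: Allowlist[],
  cart: CartLine[],
  alreadyMinted: Map<string, number> = new Map(), // listId -> tokens minted earlier
): number {
  const byId = new Map<string, Allowlist>();
  for (const list of lists) byId.set(list.id, list);
  const used = new Map<string, number>(alreadyMinted);
  let total = 0;
  for (const line of cart) {
    const list = byId.get(line.listId);
    if (!list) throw new Error(`unknown allowlist: ${line.listId}`);
    const allowed = list.allocations.get(wallet) ?? 0;
    const soFar = used.get(line.listId) ?? 0;
    if (soFar + line.quantity > allowed) {
      throw new Error(`cart exceeds allocation on ${line.listId}`);
    }
    used.set(line.listId, soFar + line.quantity);
    total += list.priceGwei * line.quantity;
  }
  return total;
}
```

Tracking `used` per list (rather than checking each line on its own) stops someone from splitting one over-allocation into two smaller cart lines.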

2/11: The Prometheus Lab contract (721A, gas efficient). Our contracts register themselves with the Props Registries (contract and access), which makes them operable from the platform UI.

3/11: The sick af frontend, developed by the 👑 @calvinhoenes (built in @sveltejs). This project actually uses 2 generation algos: one on the front end (Calvin's) and one on the backend (mine). I got the easy side; he also had to design a UX that lets users re-roll traits and makes it FUN.
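For anyone wondering what "rolling" a trait means in code, a common approach is a weighted random pick per layer, plus a re-roll that only touches one layer. This is a generic sketch, not Calvin's actual algo; the trait table and weights are made up, and `rng` is injectable so rolls can be reproduced.

```typescript
// Hypothetical trait table: real Prometheus Lab layers and weights aren't public.
interface TraitOption {
  name: string;
  weight: number; // relative odds; higher means more common
}

type TraitTable = Record<string, TraitOption[]>; // layer -> options

// Weighted pick: each option wins with probability weight / totalWeight.
// `rng` must return a float in [0, 1).
function pick(options: TraitOption[], rng: () => number): string {
  const totalWeight = options.reduce((sum, o) => sum + o.weight, 0);
  let ticket = rng() * totalWeight;
  for (const option of options) {
    if (ticket < option.weight) return option.name;
    ticket -= option.weight;
  }
  return options[options.length - 1].name; // guards against float rounding
}

// Roll every layer once.
function rollTraits(table: TraitTable, rng: () => number = Math.random): Record<string, string> {
  const combo: Record<string, string> = {};
  for (const layer of Object.keys(table)) combo[layer] = pick(table[layer], rng);
  return combo;
}

// Re-roll one layer and keep the rest of the user's combo as-is.
function rerollLayer(
  combo: Record<string, string>,
  table: TraitTable,
  layer: string,
  rng: () => number = Math.random,
): Record<string, string> {
  return { ...combo, [layer]: pick(table[layer], rng) };
}
```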

When you're happy with your trait rolls, those trait combos are sent to the mint() function in the contract as calldata, for the sole purpose of emitting them.
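The thread doesn't say how the combos are packed into calldata, so here's one compact option as an illustration: one byte per layer, in a fixed layer order, passed as a hex `bytes` argument. The layer count and the 256-options-per-layer limit are assumptions.

```typescript
// Hypothetical encoding; the actual calldata format isn't described in the thread.
// Assumes a fixed layer order and at most 256 options per layer, so each
// trait choice fits in one byte of a `bytes` argument to mint().
const LAYER_COUNT = 4; // e.g. background, body, eyes, mouth

function encodeTraits(indices: number[]): string {
  if (indices.length !== LAYER_COUNT) throw new Error("expected one index per layer");
  const bytes = indices.map((i) => {
    if (Math.floor(i) !== i || i < 0 || i > 255) throw new Error(`index out of range: ${i}`);
    return ("0" + i.toString(16)).slice(-2); // two hex chars per byte
  });
  return "0x" + bytes.join("");
}

// Inverse of encodeTraits: what an indexer would do with the emitted bytes.
function decodeTraits(hex: string): number[] {
  const body = hex.slice(0, 2) === "0x" ? hex.slice(2) : hex;
  if (body.length !== LAYER_COUNT * 2) throw new Error("unexpected payload length");
  const indices: number[] = [];
  for (let k = 0; k < body.length; k += 2) {
    indices.push(parseInt(body.slice(k, k + 2), 16));
  }
  return indices;
}
```

Keeping the payload tiny matters because every calldata byte costs gas, and the contract only needs to emit it, not interpret it.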

4-5/11: We then sync those emitted events to a @MoralisWeb3 DB.

6-7/11: A background process on our infrastructure subscribes to new records being written to the DB, and when it sees one, it sends a new task to a Message Queue. A message queue stores work to be done (like generation) and provides a ton of resiliency...

we care not if a generation task fails because a server runs out of memory, a burst of minting activity hits, or whatevs. The tasks are durable and only removed from the queue when a worker completes them. Workers are free to fail, because another one steps up to take the task.
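The "only removed when completed" behavior is usually called lease/ack (at-least-once delivery). Here's a tiny in-memory model of it, assuming a broker with those semantics; the thread doesn't name the actual message queue, and `DurableQueue` is a hypothetical stand-in.

```typescript
// In-memory model of at-least-once delivery. Tasks are only deleted on ack();
// the real broker used by Props isn't named in the thread.
interface GenTask {
  id: number;
  tokenId: number;
}

class DurableQueue {
  private ready: GenTask[] = [];
  private leased = new Map<number, GenTask>(); // handed to a worker, not yet acked
  private nextId = 1;

  publish(tokenId: number): void {
    this.ready.push({ id: this.nextId++, tokenId });
  }

  // Hand the oldest ready task to a worker without deleting it.
  lease(): GenTask | undefined {
    const task = this.ready.shift();
    if (task) this.leased.set(task.id, task);
    return task;
  }

  // Worker finished: only now is the task removed.
  ack(id: number): void {
    this.leased.delete(id);
  }

  // Worker died or timed out: put the task back at the front for someone else.
  requeue(id: number): void {
    const task = this.leased.get(id);
    if (!task) return;
    this.leased.delete(id);
    this.ready.unshift(task);
  }

  depth(): number {
    return this.ready.length + this.leased.size;
  }
}
```

Usage: worker A leases token 42 and crashes, the broker calls `requeue`, worker B leases the same task and acks it, and the queue ends up empty with nothing lost.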

8/11: We multi-thread the workers using Throng for stupid horsepower across the infra and using all cores available. A consumer process listens for work in real-time from the message queue and executes generation when it sees a new task, removing it from the queue on success.
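Throng forks one process per CPU core; each process then runs a consumer loop. As a self-contained stand-in, this sketch runs N async consumer loops against one shared task list (the "competing consumers" pattern). It's in-process concurrency rather than Throng's process forking, and unlike a real consumer it returns once the list is drained instead of waiting for new messages.

```typescript
// Competing consumers over a shared list. Props uses Throng to fork one process
// per core; here `concurrency` async loops share one array instead.
type Job = { tokenId: number; attempts: number };

async function runConsumers(
  jobs: Job[],                                  // stand-in for the message queue
  generate: (tokenId: number) => Promise<void>, // the generation step
  concurrency: number,                          // e.g. one per available core
  maxAttempts = 3,
): Promise<{ done: number[]; failed: number[] }> {
  const done: number[] = [];
  const failed: number[] = [];

  async function consumer(): Promise<void> {
    for (;;) {
      const job = jobs.shift();
      if (job === undefined) return; // nothing left: this consumer stops
      job.attempts++;
      try {
        await generate(job.tokenId);
        done.push(job.tokenId); // success: the job is removed for good
      } catch {
        // Failure: put it back so any consumer (including this one) retries it.
        if (job.attempts < maxAttempts) jobs.push(job);
        else failed.push(job.tokenId);
      }
    }
  }

  await Promise.all(Array.from({ length: concurrency }, () => consumer()));
  return { done, failed };
}
```

Because a failed job goes back on the shared list before the failing consumer loops again, no job is dropped even if every other consumer has already finished.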

9/11: The generation process is actually pretty simple logic. Since the trait combos were emitted and logged, we just break them apart and then fetch the trait asset corresponding to each, layering them on top of each other using canvas.
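The "break them apart, fetch each asset, layer them" step mostly comes down to turning per-layer trait indices into asset paths in draw order. The layer names, trait names and `layers/<layer>/<trait>.png` path scheme below are hypothetical.

```typescript
// Hypothetical: layers in draw order (bottom first) and the traits on each.
// Real Prometheus Lab layers and file layout are not public.
const LAYERS: { name: string; traits: string[] }[] = [
  { name: "background", traits: ["ash", "ember"] },
  { name: "body", traits: ["stone", "bronze"] },
  { name: "eyes", traits: ["calm", "feral"] },
];

// Turn decoded per-layer indices into asset paths, bottom layer first.
function layerPaths(indices: number[]): string[] {
  if (indices.length !== LAYERS.length) throw new Error("expected one index per layer");
  return LAYERS.map((layer, i) => {
    const trait = layer.traits[indices[i]];
    if (trait === undefined) throw new Error(`no trait ${indices[i]} on layer ${layer.name}`);
    return `layers/${layer.name}/${trait}.png`;
  });
}
```

With node-canvas, each path would then be loaded with `loadImage` and drawn in this order with `ctx.drawImage(img, 0, 0)`, so later layers paint over earlier ones.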

We then generate the metadata reflecting those selections and upload the generated image to IPFS, inserting the CID into the metadata's image attribute. This metadata gets stored in the DB by tokenID. From confirmed mint txn to completed token, this process takes 3-5 seconds.
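The metadata itself can be a small JSON object. This sketch follows the common ERC-721 metadata fields (`name`, `description`, `image`) plus the widely used `attributes` array; the name format and the `ipfs://<CID>` image style are assumptions, since the thread doesn't show the real JSON.

```typescript
// Common ERC-721 metadata shape plus the conventional `attributes` array.
// The name format and ipfs:// URI style are assumptions for illustration.
interface TokenMetadata {
  name: string;
  description: string;
  image: string;
  attributes: { trait_type: string; value: string }[];
}

function buildMetadata(
  tokenId: number,
  imageCid: string,               // CID returned by the IPFS upload
  traits: Record<string, string>, // layer -> chosen trait
  description: string,
): TokenMetadata {
  return {
    name: `Prometheus Lab #${tokenId}`,
    description,
    image: `ipfs://${imageCid}`,
    attributes: Object.keys(traits).map((layer) => ({
      trait_type: layer,
      value: traits[layer],
    })),
  };
}
```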

10/11: Our database of choice is Postgres. We wrap our PG databases with Hasura, which gives us an instant GraphQL API server and record streaming, removing the need for ORMs and for maintaining schemas outside of the DB itself (pretty f'n cool).
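As an example of what "instant GraphQL API" means: Hasura auto-generates a `<table>_by_pk` query for each tracked table and serves GraphQL at `/v1/graphql`. The `tokens` table, its `token_id` key and `metadata` column below are hypothetical names.

```typescript
// Hasura generates `<table>_by_pk` for tracked tables. The table and column
// names here are hypothetical.
const TOKEN_BY_ID = `
  query TokenById($tokenId: Int!) {
    tokens_by_pk(token_id: $tokenId) {
      token_id
      metadata
    }
  }
`;

// JSON body a client would POST to https://<hasura-host>/v1/graphql
// with Content-Type: application/json.
function tokenQueryBody(tokenId: number): string {
  return JSON.stringify({ query: TOKEN_BY_ID, variables: { tokenId } });
}
```

No resolver or ORM code is written for this; the query exists as soon as the table is tracked.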

11/11: The front end has a public-facing API that consumes data from the DB, caching and serving results by tokenID with Redis for snappy response times on tokens that have been fetched before (Redis isn't on the diagram, my b).
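The caching described here is the classic cache-aside pattern: check the cache by tokenID, fall back to the DB on a miss, then store the result. In this sketch a `Map` stands in for Redis so it runs on its own; the TTL and the "don't cache misses" rule are assumptions, not details from the thread.

```typescript
// Cache-aside by token ID. A Map stands in for Redis; the TTL and the
// "don't cache misses" rule are assumptions for illustration.
type MetadataLoader = (tokenId: number) => Promise<object | null>;

class TokenCache {
  private store = new Map<number, { value: object; expiresAt: number }>();
  private load: MetadataLoader;
  private ttlMs: number;

  constructor(load: MetadataLoader, ttlMs = 60000) {
    this.load = load;
    this.ttlMs = ttlMs;
  }

  async get(tokenId: number): Promise<object | null> {
    const hit = this.store.get(tokenId);
    if (hit && hit.expiresAt > Date.now()) return hit.value; // fast path: cached
    const value = await this.load(tokenId); // miss: go to the DB / GraphQL API
    // Don't cache "not found": the token may finish generating a few seconds later.
    if (value !== null) this.store.set(tokenId, { value, expiresAt: Date.now() + this.ttlMs });
    return value;
  }
}
```

Skipping the cache on misses matters for instant reveal: a token requested mid-generation should show up on the next fetch, not after the TTL expires.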

12/11: The API acts as the BASEURI in the contract and gives us the speed to instant-reveal user generated NFTs... until we mint out all 3,333 units at 1PM EST today on prometheuslab.xyz, at which time all of the token metadata will be sent to IPFS and the BASEURI frozen
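The baseURI trick works because `tokenURI(id)` is typically just `baseURI + id`: point it at the API during mint for instant reveals, then switch it to the IPFS folder once and lock it. Here's an off-chain model of that flow; the URLs and the freeze mechanics are illustrative assumptions, not the contract's code.

```typescript
// Off-chain model of the baseURI pattern: tokenURI(id) = baseURI + id.
// URLs and freeze behavior are illustrative assumptions.
class BaseUriState {
  private baseURI: string;
  private frozen = false;

  constructor(initial: string) {
    this.baseURI = initial;
  }

  tokenURI(tokenId: number): string {
    return `${this.baseURI}${tokenId}`;
  }

  setBaseURI(next: string): void {
    if (this.frozen) throw new Error("baseURI is frozen");
    this.baseURI = next;
  }

  // After mint-out: point at the IPFS metadata folder, then lock it for good.
  freeze(finalURI: string): void {
    this.setBaseURI(finalURI);
    this.frozen = true;
  }
}
```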

Soooo yeah, that's pretty much it. If you made it this far, #props to you.

Bull or bear we persevere and continue building. We're honored for this opportunity.

How to support Props? Support the projects we help. These amazing souls are the ones creating value in Web3 👊