"Can quantum androids dream of quantum electrodynamic sheep?"🤔🤖⚛️💭🐑⚡️

We explore this in a new paper on quantum-probabilistic generative models 🎲⚛️🤖💭 and information geometry 🌐📏 from our former QML group @Theteamatx 🥳

Time for an epic thread 👇

scirate.com/arxiv/2206.046…

For some context: quantum-probabilistic generative models (QPGMs) are a class of hybrid ML models which combine classical probabilistic ML models (e.g. EBMs) and quantum neural networks (QNNs). As we show in this new paper, it turns out these types of models are optimal in many ways

It's really important to be able to model *mixed states* (probabilistic mixtures of quantum states) as most states in nature are not pure states/zero temperature! 🥵🔥🏞️ Nature is a mix of probabilistic 🎲 and quantum ⚛️, hence so should your models of it! 🤖⚛️🎲

So given quantum data ⚛️🔍 or target physics 🎯⏳🔥 you want this model to mimic, how do you train these?

Q: What loss do you use to compare the model and target? ⚖️

A: Quantum relative entropy is king 👑

Q: What kind of QPGM works best?

A: E?.... EBMs --> QHBMs
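For the curious, here's what the "king" loss computes: the quantum relative entropy D(ρ‖σ) = Tr[ρ(log ρ − log σ)]. This is a brute-force NumPy sketch that assumes you can store full density matrices (so only a few qubits); the function names are ours, and it is not how the paper or QHBM lib estimate the loss at scale.

```python
import numpy as np

def herm_log(rho, eps=1e-12):
    """Matrix log of a Hermitian PSD matrix via eigendecomposition.

    Eigenvalues are clipped at eps, so a divergence that should be infinite
    (sigma has zero weight where rho does not) shows up as a large finite number.
    """
    w, v = np.linalg.eigh(rho)
    return (v * np.log(np.clip(w, eps, None))) @ v.conj().T

def quantum_relative_entropy(rho, sigma):
    """D(rho || sigma) = Tr[rho (log rho - log sigma)] in nats; asymmetric and >= 0."""
    return float(np.real(np.trace(rho @ (herm_log(rho) - herm_log(sigma)))))

# Usage: a biased mixed qubit state vs. the maximally mixed state.
rho = np.diag([0.75, 0.25]).astype(complex)
sigma = np.eye(2, dtype=complex) / 2
print(quantum_relative_entropy(rho, sigma))  # equals ln 2 - S(rho) here, about 0.1308
```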

Quantum Hamiltonian-Based Models (QHBMs) 🎲⚛️🤖🔥 are great because they separate the model into a classical EBM ⚡️ that runs MCMC to sample from a *classical* Boltzmann distribution 🔥 + a purely unitary QNN 😇, thus using QCs to circumvent the sign problem when sampling quantum states ➖🔚👋
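A toy, brute-force version of this split, under our own assumptions: a made-up linear energy function for the EBM, exact enumeration of bitstrings in place of MCMC (only viable for a handful of qubits), and a matrix exponential standing in for the QNN. It shows the structure ρ = U diag(p_θ) U†: the spectrum of the quantum state is exactly the classical Boltzmann distribution, and sampling means "draw x classically, then apply U".

```python
import itertools
import numpy as np

def energies(theta):
    """Hypothetical EBM energy E_theta(x) = sum_i theta_i * z_i, with spins z_i = 1 - 2 x_i."""
    n = len(theta)
    bits = np.array(list(itertools.product([0, 1], repeat=n)))  # all 2^n bitstrings, basis order
    return (1 - 2 * bits) @ theta

def boltzmann(theta):
    """Exact classical Boltzmann distribution p_theta(x) = exp(-E_theta(x)) / Z, by enumeration."""
    e = energies(theta)
    w = np.exp(-(e - e.min()))  # shift energies for numerical stability
    return w / w.sum()

def unitary(generator):
    """Stand-in for a QNN: U = exp(-i G) for a Hermitian generator G."""
    w, v = np.linalg.eigh(generator)
    return (v * np.exp(-1j * w)) @ v.conj().T

def qhbm_state(theta, generator):
    """rho = U diag(p_theta) U^dagger: a classical Boltzmann mixture rotated by a unitary."""
    u = unitary(generator)
    return u @ np.diag(boltzmann(theta)) @ u.conj().T

def sample_qhbm(theta, generator, n_samples, rng):
    """Sample bitstrings x from the EBM, then return the rotated basis states U|x> as rows."""
    p = boltzmann(theta)
    xs = rng.choice(len(p), size=n_samples, p=p)
    return unitary(generator)[:, xs].T

# Usage: a 2-qubit model with a random Hermitian generator.
rng = np.random.default_rng(0)
a = rng.normal(size=(4, 4)) + 1j * rng.normal(size=(4, 4))
generator = (a + a.conj().T) / 2
theta = np.array([0.3, -0.5])
rho = qhbm_state(theta, generator)
```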

What's cool is that due to the *diagonal* ↘️ exponential (Gibbs 🔥) parameterization, these models have very clean unbiased estimators 🤏📏 for the gradients ⤵️ of both the forwards ➡️ quantum relative entropy and the backwards one ⬅️, allowing for both generating and learning
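Why the diagonal Gibbs form gives clean estimators, sketched for the forwards direction and the classical parameters θ only (the notation E_θ, K_θ, q_φ is ours; the φ-gradient and the backwards direction are not derived here). Writing the model as ρ_θφ = U_φ e^{−K_θ} U_φ† / Z_θ, the log-partition term differentiates into an average under the EBM, so the gradient is a difference of two averages of ∇_θ E_θ: one over bitstrings measured after applying U_φ† to the data state σ, one over EBM samples.

```latex
% Model: \rho_{\theta\phi} = U_\phi e^{-K_\theta} U_\phi^\dagger / Z_\theta,
%        K_\theta = \sum_x E_\theta(x) |x\rangle\langle x|,  Z_\theta = \sum_x e^{-E_\theta(x)},
%        p_\theta(x) = e^{-E_\theta(x)} / Z_\theta.
\begin{aligned}
D(\sigma \,\|\, \rho_{\theta\phi})
  &= -S(\sigma) + \mathrm{Tr}\!\left[\sigma\, U_\phi K_\theta U_\phi^\dagger\right] + \log Z_\theta \\
  &= -S(\sigma) + \mathbb{E}_{x \sim q_\phi}\!\left[E_\theta(x)\right] + \log Z_\theta,
  \qquad q_\phi(x) = \langle x|\, U_\phi^\dagger \sigma U_\phi \,|x\rangle, \\
\nabla_\theta D(\sigma \,\|\, \rho_{\theta\phi})
  &= \mathbb{E}_{x \sim q_\phi}\!\left[\nabla_\theta E_\theta(x)\right]
   - \mathbb{E}_{x \sim p_\theta}\!\left[\nabla_\theta E_\theta(x)\right].
\end{aligned}
```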

How do you train these many-headed thermal beasts?🐉🔥⚛️

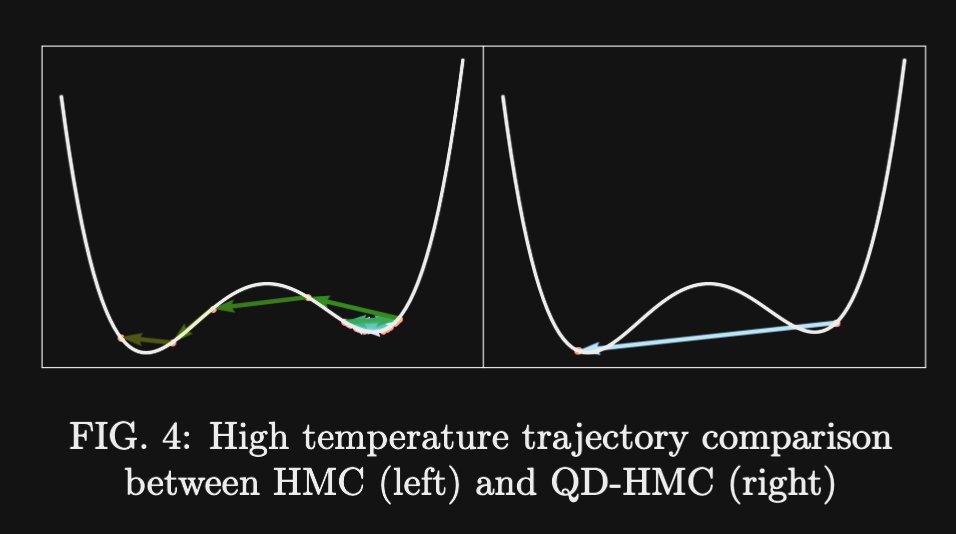

Regular gradient descent can struggle; small moves 🎛️🤏 in parameter space can lead to big/rapid moves 🏎️💨 in *quantum state space* 😱😩

Your optimizer can then get lost in the sauce 😵💫🥴🥣 and struggle to go downhill 📉

So what's the trick?

First, use small changes in quantum relative entropy as your notion of length: take a circle ⭕️🌐 in state space and pull it back to parameter space, and it becomes squished (an ellipsoid 🏉). Unsquishing your coordinates (📐 Euclideanizing) then means small 🤏➡️📏 maps to smol 👍: a small step in the new coordinates is a small change in the state
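To see the squished circle concretely: near a point θ₀ the relative entropy between nearby model states is quadratic, D(ρ_θ₀ ‖ ρ_θ₀+δ) ≈ ½ δᵀGδ, and that Hessian G is the Kubo-Mori metric; the set where ½ δᵀGδ equals a small constant is the ellipse. The sketch below estimates G by finite differences for a hypothetical one-qubit model (Boltzmann weights set by θ, rotated by exp(−iφY/2)); the model and the step size are our assumptions.

```python
import numpy as np

def herm_log(a, eps=1e-15):
    """Matrix log of a Hermitian PSD matrix via eigendecomposition."""
    w, v = np.linalg.eigh(a)
    return (v * np.log(np.clip(w, eps, None))) @ v.conj().T

def rel_entropy(rho, sigma):
    """Quantum relative entropy D(rho || sigma) = Tr[rho (log rho - log sigma)]."""
    return float(np.real(np.trace(rho @ (herm_log(rho) - herm_log(sigma)))))

def state(params):
    """Hypothetical one-qubit model: diag(p_theta) rotated by exp(-i phi Y / 2)."""
    theta, phi = params
    p = np.exp([-theta, theta])
    p = p / p.sum()
    c, s = np.cos(phi / 2), np.sin(phi / 2)
    u = np.array([[c, -s], [s, c]])  # exp(-i phi Y / 2) is a real rotation matrix
    return u @ np.diag(p) @ u.T

def kubo_mori_metric(params, h=1e-3):
    """Finite-difference Hessian of delta -> D(rho(params) || rho(params + delta)) at delta = 0."""
    params = np.asarray(params, dtype=float)
    rho0 = state(params)
    f = lambda d: rel_entropy(rho0, state(params + d))
    steps = h * np.eye(len(params))
    g = np.empty((len(params), len(params)))
    for i, ei in enumerate(steps):
        for j, ej in enumerate(steps):
            g[i, j] = (f(ei + ej) - f(ei - ej) - f(ej - ei) + f(-ei - ej)) / (4 * h * h)
    return g

# Usage: compare the exact divergence of a small step with the quadratic "ellipse" form.
theta0 = np.array([0.4, 0.3])
G = kubo_mori_metric(theta0)
delta = np.array([1e-3, -2e-3])
exact = rel_entropy(state(theta0), state(theta0 + delta))
quadratic = 0.5 * delta @ G @ delta
print(G, exact, quadratic)
```

In the θ direction the states commute, so G[0, 0] reduces to the classical Fisher information of the Boltzmann weights, sech²(θ₀).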

That's great! What's the catch? Well, in n dimensions an ellipsoid 🏉 is described by an n×n matrix (think of n semi-axis vectors of n dimensions each), which is a lot of parameters to estimate at each step 🫤⏳💀⚰️

That's quantum-probabilistic natural gradient descent (QPNGD): perfect theoretically 🏆, but slow practically ⏳😖
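Here's the natural-gradient update itself, θ ← θ − η G⁻¹∇L, as a minimal sketch with hypothetical callables for the gradient and metric estimators. Since G is symmetric it has n(n+1)/2 distinct entries to estimate, and each step also needs a linear solve, which is where the cost comes from. In the toy usage the loss is an ill-conditioned quadratic whose Hessian doubles as the metric (an idealized "inside the bowl" assumption), so one natural-gradient step lands on the optimum while plain GD is still crawling.

```python
import numpy as np

def natural_gradient_step(params, grad_fn, metric_fn, lr=1.0, damping=0.0):
    """One natural-gradient update: params - lr * G^{-1} grad L.

    grad_fn and metric_fn are hypothetical callables standing in for estimators of
    the loss gradient and of the metric tensor G (e.g. Kubo-Mori) at `params`.
    """
    g = metric_fn(params)                        # n x n symmetric: n(n+1)/2 distinct entries
    g = g + damping * np.eye(len(params))        # optional damping if G is ill-conditioned
    step = np.linalg.solve(g, grad_fn(params))   # solve G x = grad rather than inverting G
    return params - lr * step

# Toy "inside the bowl" case: quadratic loss whose Hessian is used as the metric.
a = np.array([[10.0, 3.0], [3.0, 1.0]])          # eigenvalues ~10.9 and ~0.09
target = np.array([1.0, -2.0])
grad_fn = lambda p: a @ (p - target)
ngd = natural_gradient_step(np.zeros(2), grad_fn, lambda p: a)  # one step, lands on target
gd = np.zeros(2)
for _ in range(10):                              # ten plain GD steps: slow direction barely moves
    gd = gd - 0.09 * grad_fn(gd)
print(ngd, gd)
```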

What if we could have our cake and eat it too? 🤔🎂🏆

The trick is to use the bowl force 🥣🖖 like a rubber band 🪀 with an anchor point, and use gradient descent 📉 as an inner loop to find an NGD-equivalent update. This is Quantum-Probabilistic *Mirror* Descent (QPMD). 👑🦾
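One way to read the rubber-band picture, as a hedged sketch: each outer step fixes an anchor θₜ and minimizes ⟨∇L(θₜ), θ⟩ + D(θₜ, θ)/η with a plain gradient-descent inner loop, where D stands in for the relative entropy between the anchored and candidate model states. If D is locally ½(θ−θₜ)ᵀG(θ−θₜ), setting the gradient to zero gives θ = θₜ − ηG⁻¹∇L(θₜ), the natural-gradient step, without ever forming G. The function names, the finite-difference gradients and this exact form of the objective are our assumptions, not the paper's definition of QPMD.

```python
import numpy as np

def fd_grad(f, x, h=1e-6):
    """Central finite-difference gradient (a stand-in for sampled gradient estimators)."""
    g = np.zeros_like(x)
    for i in range(len(x)):
        e = np.zeros_like(x)
        e[i] = h
        g[i] = (f(x + e) - f(x - e)) / (2 * h)
    return g

def mirror_descent_step(anchor, loss_grad, divergence, eta=0.5, inner_lr=0.2, inner_steps=200):
    """One proximal / mirror-descent update solved with plain gradient descent.

    Minimizes  <grad L(anchor), theta> + divergence(anchor, theta) / eta  over theta;
    the divergence term is the "rubber band" pulling theta back toward the anchor.
    """
    g = loss_grad(anchor)                        # outer gradient, evaluated once at the anchor
    inner = lambda th: g @ th + divergence(anchor, th) / eta
    theta = anchor.copy()
    for _ in range(inner_steps):
        theta = theta - inner_lr * fd_grad(inner, theta)
    return theta

# Usage with a locally-quadratic stand-in for the relative entropy (metric a).
a = np.array([[2.0, 0.5], [0.5, 1.0]])
target = np.array([1.0, -1.0])
loss_grad = lambda th: th - target               # toy loss 0.5 * ||theta - target||^2
divergence = lambda anc, th: 0.5 * (th - anc) @ a @ (th - anc)
anchor = np.array([0.0, 0.0])
new = mirror_descent_step(anchor, loss_grad, divergence, eta=0.5)
ngd = anchor - 0.5 * np.linalg.solve(a, loss_grad(anchor))  # the matching natural-gradient step
print(new, ngd)
```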

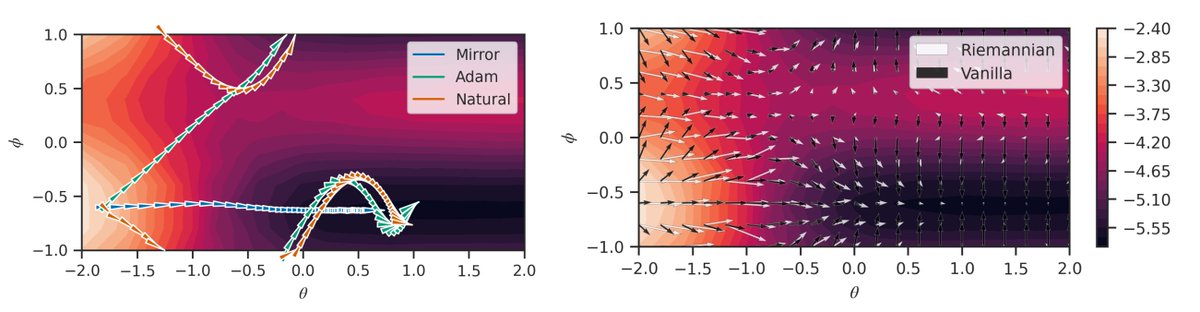

In our numerics, QPMD was *goated* 🐐👑🦾, even compared to full QPNGD (see left plot) 😮

It's a first-order (only needs gradients) method that's asymptotically equivalent to *full* QPNGD 🙌

Correcting GD steps with QPMD yields way better optimization trajectories🎯 (right)

This is where things get nutty 🥜🤓. It turns out our approach uses a particular metric (think 🏉 vs 🏈) called the Kubo-Mori metric, and it is the *only* QML metric that achieves theoretically perfect (Fisher) efficiency! 🤯 See @FarisSbahi's 🧵 for deets 👇

https://twitter.com/FarisSbahi/status/1535099898332385292?s=20&t=kC_EDvUVxSMCCwGPwrdq6w

What's the catch? 🤨 Well, to achieve this perfect efficiency and provable convergence guarantees practically, you need to be in the convex bowl 🥣 near the optimum of the loss landscape 🏔️🏂

Are there scenarios where that happens? 🤔 Yes! Huge number of scenarios of interest!😮

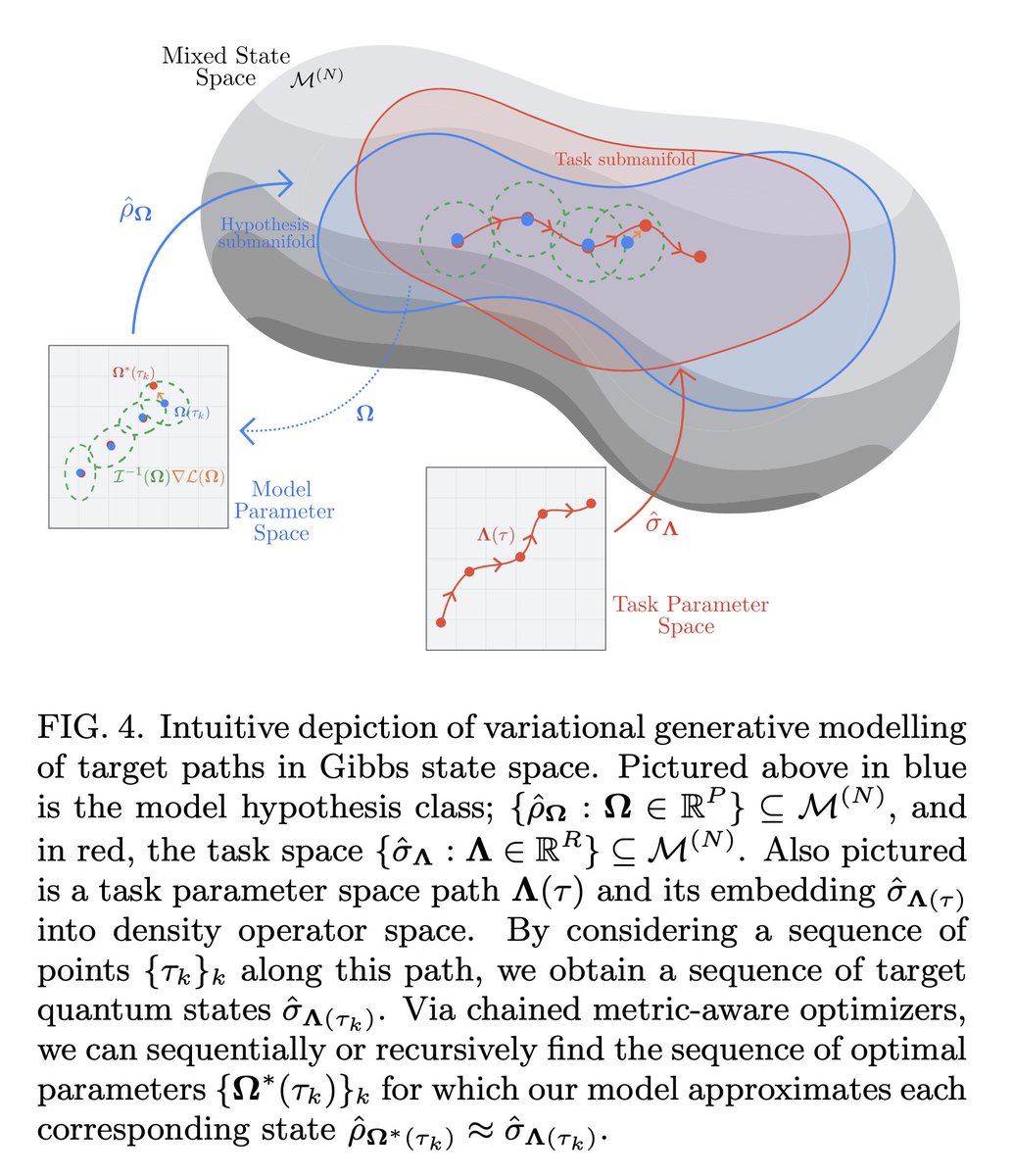

What if you wanted to *evolve* a quantum state continuously? 🏄 Could you just continuously surf the landscape as it shifts under you? 🤙🌊

Yes! You just gotta stay in the bowl 🥣 and ride the wave as the landscape shifts between *tasks* 🤯 a form of geometric transfer learning

You can imagine most tasks as following a *path* in some task space. 🚡🌐 If you have a sequence of close (🤏📏 in 🥔space) tasks along this path, you can use our anchored rubber band (QPMD) to smoothly transfer your parameters from one task to another, chaining the wins 🏆➡️🏆
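A toy version of "chaining the wins", assuming a hypothetical family of quadratic tasks whose optimum slides along an arc (everything here is classical and made up for illustration): warm-starting each task from the previous solution reaches the same tolerance in fewer gradient steps than restarting from scratch. It only shows iteration savings; the bigger benefit the thread describes, staying inside the convex bowl of a non-convex landscape, is something this quadratic toy cannot exhibit.

```python
import numpy as np

def solve(grad_fn, init, lr=0.1, tol=1e-6, max_steps=10_000):
    """Plain gradient descent; returns (solution, number of steps used)."""
    theta = np.array(init, dtype=float)
    for step in range(max_steps):
        g = grad_fn(theta)
        if np.linalg.norm(g) < tol:
            return theta, step
        theta = theta - lr * g
    return theta, max_steps

def chain_tasks(task_grads, init, warm_start=True):
    """Solve a sequence of tasks; warm starting reuses the previous task's solution."""
    theta, total_steps = np.array(init, dtype=float), 0
    for grad_fn in task_grads:
        theta, steps = solve(grad_fn, theta if warm_start else init)
        total_steps += steps
    return theta, total_steps

# Hypothetical task path: quadratic bowls whose minimum slides along an arc.
centers = [3.0 * np.array([np.cos(s), np.sin(s)]) for s in np.linspace(0.0, 1.0, 11)]
tasks = [lambda th, c=c: th - c for c in centers]  # gradient of 0.5 * ||theta - c||^2
warm_theta, warm_steps = chain_tasks(tasks, [0.0, 0.0], warm_start=True)
cold_theta, cold_steps = chain_tasks(tasks, [0.0, 0.0], warm_start=False)
print(warm_steps, cold_steps)
```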

Great! ☺️ But what can you do with this?🧐 Well, you can dream up 🤖⚛️💭 just about any quantum simulation that you can parameterize, and make a quantum simulated "movie" 🎥🎞️⚛️

@Devs_FX fans can appreciate 😏

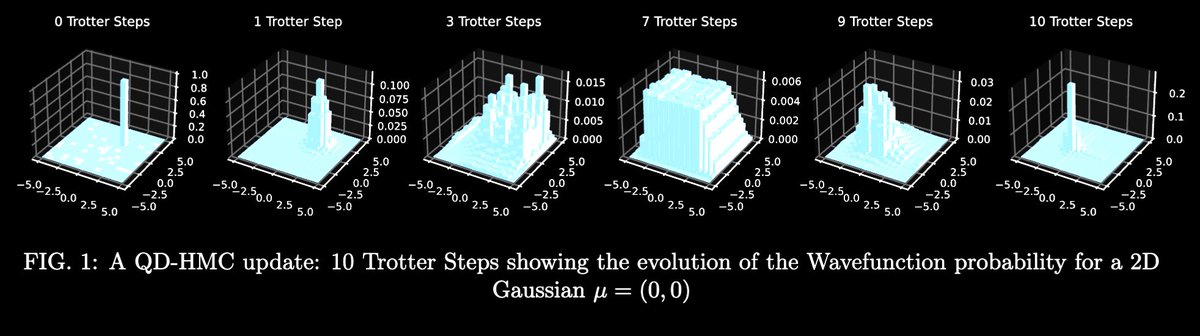

What happens when you try to generatively simulate, for example, time evolution? 🎥⏲️⚛️ Here's a generatively modeled density matrix over time for a magnetic quantum system (the transverse field Ising model) ⚛️🧲
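If you want to poke at the same kind of system, here is a brute-force transverse-field Ising Hamiltonian for a short open chain, plus exact density-matrix evolution ρ(t) = e^{−iHt} ρ e^{iHt}. The sign convention, open boundaries and couplings are our assumptions, not necessarily the paper's setup, and dense 2ⁿ × 2ⁿ matrices limit this to a few qubits.

```python
from functools import reduce
import numpy as np

I2 = np.eye(2)
X = np.array([[0.0, 1.0], [1.0, 0.0]])
Z = np.array([[1.0, 0.0], [0.0, -1.0]])

def op_at(op, site, n):
    """Embed a single-qubit operator at `site` of an n-qubit chain (qubit 0 = leftmost factor)."""
    return reduce(np.kron, [op if k == site else I2 for k in range(n)])

def tfim_hamiltonian(n, j=1.0, h=1.0):
    """Open-chain TFIM, H = -J sum_i Z_i Z_{i+1} - h sum_i X_i (one common convention)."""
    ham = np.zeros((2 ** n, 2 ** n))
    for i in range(n - 1):
        ham -= j * op_at(Z, i, n) @ op_at(Z, i + 1, n)
    for i in range(n):
        ham -= h * op_at(X, i, n)
    return ham

def evolve(rho, ham, t):
    """Exact evolution rho(t) = exp(-iHt) rho exp(iHt) via eigendecomposition of H."""
    w, v = np.linalg.eigh(ham)
    u = (v * np.exp(-1j * w * t)) @ v.conj().T
    return u @ rho @ u.conj().T

# Usage: a few "frames" of a 3-qubit movie starting from |000>.
ham = tfim_hamiltonian(3, j=1.0, h=0.5)
rho0 = np.zeros((8, 8), dtype=complex)
rho0[0, 0] = 1.0
snapshots = [evolve(rho0, ham, t) for t in np.linspace(0.0, 2.0, 5)]
```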

It turns out we can recursively and variationally learn to integrate time dynamics with our QVARTZ method (recursive ↪️ variational 🎛️ quantum ⚛️ time ⏲️ evolution 🏄): evolve the model a bit, then re-learn it. This is a big deal because the quantum circuits 🎼 can remain small 🤏
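A one-qubit toy of the "evolve a bit, then re-learn" loop, under our own assumptions: the model is a Boltzmann-weighted qubit rotated by Rz(φ_z)Ry(φ_y), each evolution step is computed exactly, and re-learning is plain finite-difference gradient descent on D(target ‖ model), warm-started from the previous parameters. It illustrates the recursion only; it says nothing about circuit sizes, and it is not the QVARTZ implementation.

```python
import numpy as np

X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]])
Z = np.array([[1, 0], [0, -1]], dtype=complex)

def herm_fn(a, fn):
    """Apply a scalar function to a Hermitian matrix through its eigenvalues."""
    w, v = np.linalg.eigh(a)
    return (v * fn(w)) @ v.conj().T

def rel_entropy(rho, sigma):
    log = lambda w: np.log(np.clip(w, 1e-15, None))
    return float(np.real(np.trace(rho @ (herm_fn(rho, log) - herm_fn(sigma, log)))))

def rot(pauli, angle):
    """exp(-i * angle * pauli / 2) for a Pauli matrix."""
    return np.cos(angle / 2) * np.eye(2) - 1j * np.sin(angle / 2) * pauli

def model(params):
    """Hypothetical one-qubit QHBM: Boltzmann diag(p_theta) rotated by Rz(phi_z) Ry(phi_y)."""
    theta, phi_y, phi_z = params
    p = np.exp([-theta, theta])
    p = p / p.sum()
    u = rot(Z, phi_z) @ rot(Y, phi_y)
    return u @ np.diag(p) @ u.conj().T

def relearn(target, init, lr=1.0, steps=100, h=1e-5):
    """Re-fit parameters to `target` by gradient descent on D(target || model)."""
    params = np.array(init, dtype=float)
    loss = lambda q: rel_entropy(target, model(q))
    for _ in range(steps):
        grad = np.array([(loss(params + e) - loss(params - e)) / (2 * h)
                         for e in h * np.eye(len(params))])
        params -= lr * grad
    return params

def evolve_and_relearn(params, hamiltonian, dt, n_steps):
    """Recursive loop: evolve the current model state by dt exactly, then re-learn it."""
    step_u = herm_fn(hamiltonian, lambda w: np.exp(-1j * w * dt))
    for _ in range(n_steps):
        target = step_u @ model(params) @ step_u.conj().T
        params = relearn(target, params)  # warm start from the previous parameters
    return params

# Usage: precession under H = X / 2 for total time 2, in 20 steps.
params0 = np.array([-1.0, 0.7, 0.2])
ham = 0.5 * X
final = evolve_and_relearn(params0, ham, dt=0.1, n_steps=20)
u_total = herm_fn(ham, lambda w: np.exp(-1j * w * 2.0))
exact = u_total @ model(params0) @ u_total.conj().T
trace_dist = 0.5 * np.abs(np.linalg.eigvalsh(model(final) - exact)).sum()
print(trace_dist)
```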

What about *imaginary* time evolution, aka simulating a slow cooling process?🔥➡️❄️ We can do that too! Sequentially rather than recursively. We see it's way better to slowly go up in *coldness* (inverse temperature)🏔️🚠 than to try to go straight for a target 🪂 temperature 🔥🥵

We call this approach to imaginary time evolution META-VQT. 😶🌫️🔥 We think it could be a serious disruption for anyone interested even in basic VQE. It's a special case of our geometric transfer learning methods
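The loss on each rung of that coldness ladder is, in the VQT setting, the free energy βTr[ρH] − S(ρ). A quick numerical check (our own sketch, not the paper's code) that it equals the backwards relative entropy to the Gibbs target minus log Z, i.e. D(ρ‖e^{−βH}/Z) − log Z, so minimizing it at a given β means learning that Gibbs state, with minimum value −log Z. As we read the thread, META-VQT would step β upward and warm-start each rung from the previous parameters; that outer loop is not shown, and the random Hamiltonian and state are hypothetical.

```python
import numpy as np

def herm_log(a, eps=1e-15):
    w, v = np.linalg.eigh(a)
    return (v * np.log(np.clip(w, eps, None))) @ v.conj().T

def rel_entropy(rho, sigma):
    """D(rho || sigma) = Tr[rho (log rho - log sigma)]."""
    return float(np.real(np.trace(rho @ (herm_log(rho) - herm_log(sigma)))))

def von_neumann_entropy(rho):
    w = np.clip(np.linalg.eigvalsh(rho), 1e-15, None)
    return float(-(w * np.log(w)).sum())

def vqt_loss(rho, ham, beta):
    """Free-energy objective beta * Tr[rho H] - S(rho)."""
    return beta * float(np.real(np.trace(rho @ ham))) - von_neumann_entropy(rho)

def gibbs_state(ham, beta):
    """Return (exp(-beta H) / Z, log Z), shifting energies for numerical stability."""
    w, v = np.linalg.eigh(ham)
    weights = np.exp(-beta * (w - w.min()))
    log_z = float(np.log(weights.sum()) - beta * w.min())
    return (v * (weights / weights.sum())) @ v.conj().T, log_z

# Usage: a random 2-qubit Hamiltonian and a random full-rank mixed state.
rng = np.random.default_rng(1)
a = rng.normal(size=(4, 4)) + 1j * rng.normal(size=(4, 4))
ham = (a + a.conj().T) / 4
b = rng.normal(size=(4, 4)) + 1j * rng.normal(size=(4, 4))
rho = b @ b.conj().T
rho /= np.trace(rho).real
for beta in (0.5, 1.0, 2.0):  # a short ladder of increasing coldness
    sigma, log_z = gibbs_state(ham, beta)
    print(beta, vqt_loss(rho, ham, beta), rel_entropy(rho, sigma) - log_z)
```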

(FYI imaginary/Euclidean time+coldness 🥶 are all the same thing)
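The one-line reason, in standard notation (β is inverse temperature): substituting t = −iτ turns real-time evolution into imaginary-time evolution, and applying e^{−βH/2} on both sides of the identity (the maximally mixed state, up to normalization) gives the Gibbs state at inverse temperature β.

```latex
e^{-iHt}\Big|_{t = -i\tau} = e^{-\tau H},
\qquad
\sigma_\beta = \frac{e^{-\beta H/2}\, \mathbb{1}\, e^{-\beta H/2}}{\mathrm{Tr}\, e^{-\beta H}}
             = \frac{e^{-\beta H}}{\mathrm{Tr}\, e^{-\beta H}},
\qquad
\beta = \frac{1}{k_B T}.
```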

Phew! 😅😤 That was a lot! 🥵 Tons of new possibilities with these methods... this is just the beginning of the story... are you ready to jump into *the simulation* with us? 🚀🤖🎲⚛️🖖

If so...

"Can I just use this stuff?" 🧑💻👨💻👩💻⚛️🎲🤖 Yes! We released an accompanying open-source library (QHBM lib), now accessible on GitHub 🚀 check it out and start quantum hacking of all sorts of quantum spaces you can dream of! 🤯

github.com/google/qhbm-li…

I could keep going... 🤓😅 ending 🧵

This broad research program was a long road of ~2 years. Huge congrats to the whole team: @FarisSbahi leading the paper w/ @zaqqwerty_ai as lead infra dev, and huge s/o to @dberri18 @geoffrey_roeder Jae @sahilpx as well for all their efforts & help 🏆🫂

@Theteamatx *electrodynamic sheep

*practically

@Theteamatx Here is the arxiv link directly for those who want to save a click

arxiv.org/abs/2206.04663

So many typos upon review 😆🙈 wrote this tweetstorm in a hurry

As an aside: Huge shout-out to my former QML Padawan turned quantum Jedi @FarisSbahi for going HAM 😤 taming the quantum metric zoo ⚛️🌐🗺️📏🦙🦛🦡🦒 and proving many important theoretical results not covered in this thread!

If you don't like reading threads, there's also a recorded talk of mine from a few months back (back at #qhack 2022) on the topics and intuitions of this paper👇

Congrats 🎉👏 You've successfully reached the end of the thread 🥳🏆 before you try to read the paper: 👾🧙♂️it's dangerous to go alone, take this 🗡️

https://twitter.com/quantumVerd/status/1535277628072001541?t=H7_EDiOSD--kGih76g5hWQ&s=19

And finally, when you feel ready 💪 for an even greater adventure 🚀, you can head to arxiv and dive deep into the maths 🤿📃📚✍️

glhf! 🫡 DMs open for Questions! 📲

https://twitter.com/quantumVerd/status/1535257877765578753?t=H7_EDiOSD--kGih76g5hWQ&s=19
