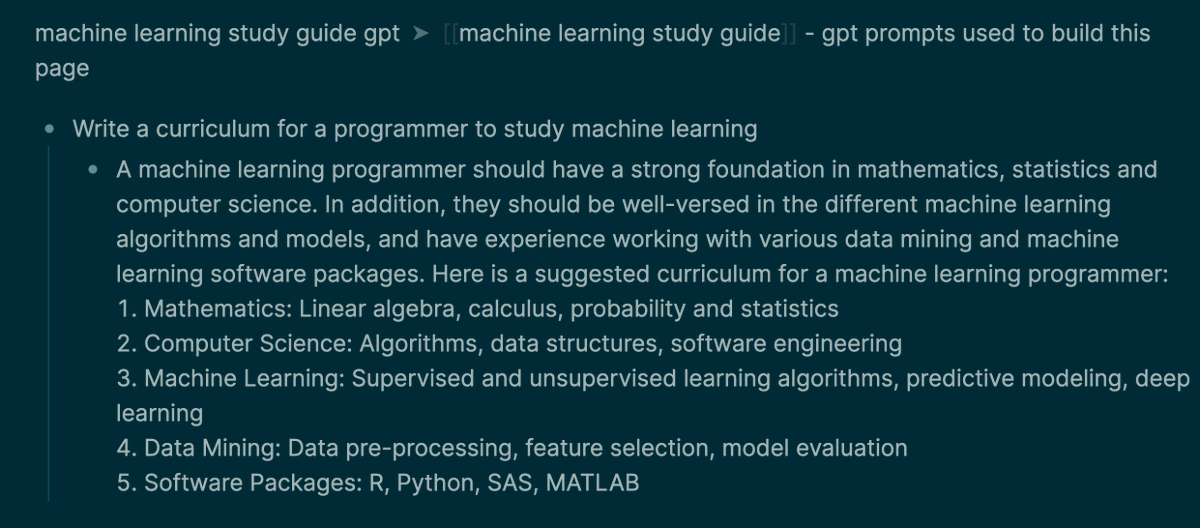

How to use #gpt3 for #gpt3poweredlearning, the way I did for my machine learning study guide:

briansunter.com/graph/#/page/m…

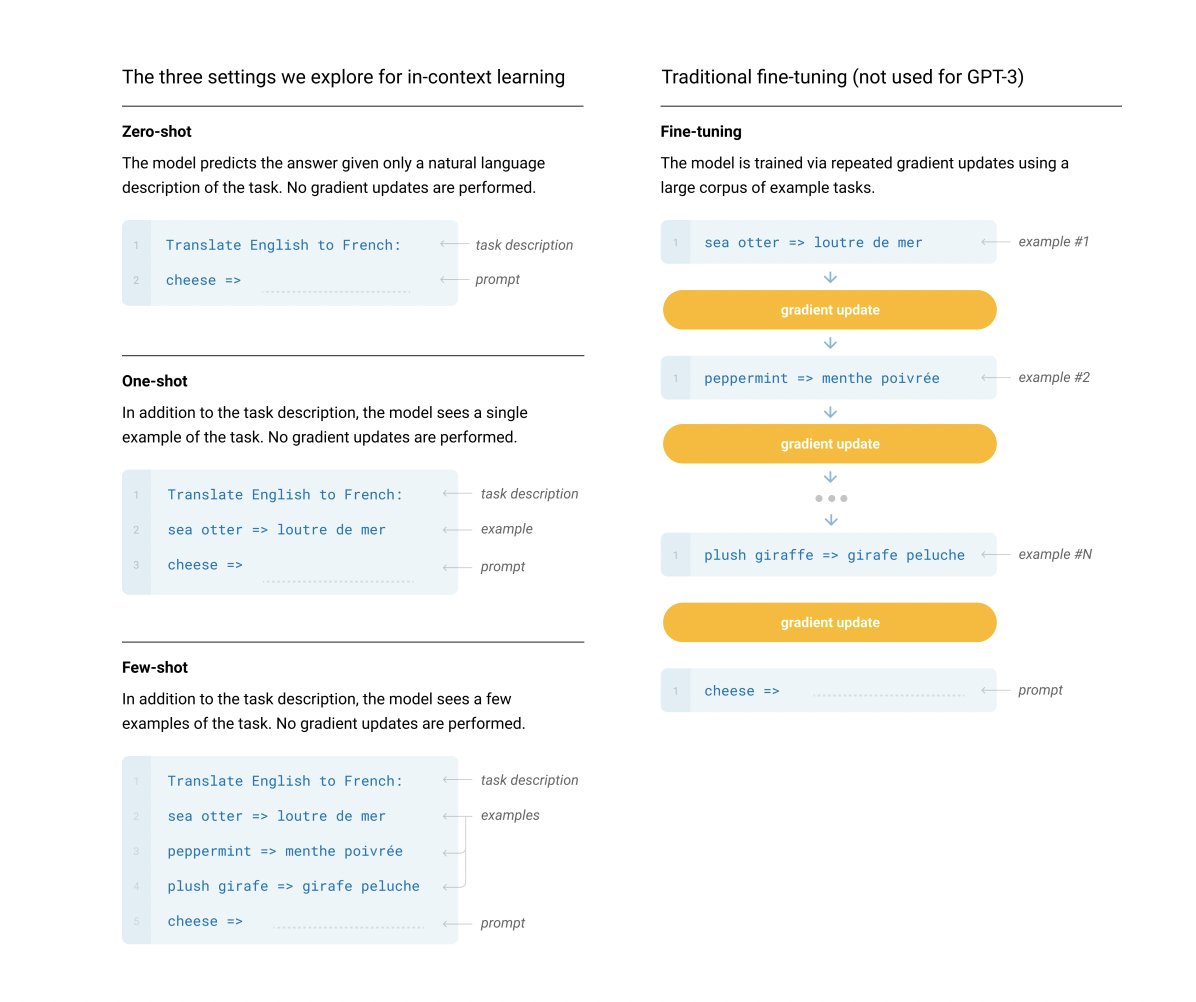

1. Ask it to create a high level study guide:

"Write a curriculum for a ${type of person} to study ${topic}"

2. Ask it to create a study guide for each subtopic.

"Write a curriculum for a ${type of person} to study the most important ${subtopic} concepts for ${topic}"

3. Ask it to write a detailed guide for each subtopic.

"Write an advanced guide for a programmer to study support vector machines for machine learning"

4. Ask follow-up questions about anything in the guide you don't understand. Prompt it to be a "chat bot" if you want shorter, more conversational answers.

"Explain what a 'decision boundary' is to a programmer in the context of machine learning and support vector machines"

5. Clearly separate your own writing and thoughts from what the AI writes.

Ideally keep the prompts separated and "cite" them in your own research.
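
The prompt templates and the "cite your prompts" habit can be sketched in a few lines of Python. This is only a rough illustration, not how the study guide was actually built: the function names, the `PromptRecord` class, and the citation format are all made up for this example, and the step of sending a prompt to GPT-3 is deliberately left out.

```python
from dataclasses import dataclass


def curriculum_prompt(person: str, topic: str) -> str:
    """Step 1: prompt for a high-level study guide."""
    return f"Write a curriculum for a {person} to study {topic}"


def subtopic_prompt(person: str, subtopic: str, topic: str) -> str:
    """Step 2: prompt for a study guide on a single subtopic."""
    return (
        f"Write a curriculum for a {person} to study the most important "
        f"{subtopic} concepts for {topic}"
    )


@dataclass
class PromptRecord:
    """Hypothetical container pairing a prompt with the AI's answer."""
    prompt: str
    response: str


def cite(record: PromptRecord) -> str:
    """Step 5: quote the AI's text and label it with the prompt that produced it,
    so it stays visibly separate from your own notes."""
    quoted = "\n".join(f"> {line}" for line in record.response.splitlines())
    return f'AI-generated (prompt: "{record.prompt}")\n{quoted}'


if __name__ == "__main__":
    prompt = curriculum_prompt("programmer", "machine learning")
    # The response would come from the model; a placeholder stands in here.
    record = PromptRecord(prompt=prompt, response="(model output goes here)")
    print(cite(record))
```

Keeping the prompt next to every quoted answer makes it easy to re-run or refine a prompt later, and to tell at a glance which parts of your notes are yours.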
