The next big breakthrough in AI will come from hardware, not software.

Training giant models like PaLM already require 1000s of chips consuming several MW, and we will probably want to keep scaling these up several orders of magnitude. How can we do it? a 🧵

Training giant models like PaLM already require 1000s of chips consuming several MW, and we will probably want to keep scaling these up several orders of magnitude. How can we do it? a 🧵

All computations done by a neural network are ultimately a series of floating point operations.

To do a floating point operation, two (or three) numbers need to be loaded from memory into a circuit that performs the calculation, and the result needs to be stored back in memory.

To do a floating point operation, two (or three) numbers need to be loaded from memory into a circuit that performs the calculation, and the result needs to be stored back in memory.

Loading those numbers from memory costs an extraordinary amount of energy compared to doing the actual computation itself (1000x).

Electrons need to flow across a wire several cm long, and are dissipating energy as heat all along the way.

Electrons need to flow across a wire several cm long, and are dissipating energy as heat all along the way.

A big advantage of this architecture is that it allows us to do very general types of computations, which is what spurred the great IT revolution of the last few decades. Digitalization can be applied to basically anything we can think of.

But such a general architecture will necessarily not be the most optimized if we are looking at a specific computation type.

If we know in advance what floating point numbers we want to multiply, it doesn’t make much sense storing them on a large memory pool far far away.

If we know in advance what floating point numbers we want to multiply, it doesn’t make much sense storing them on a large memory pool far far away.

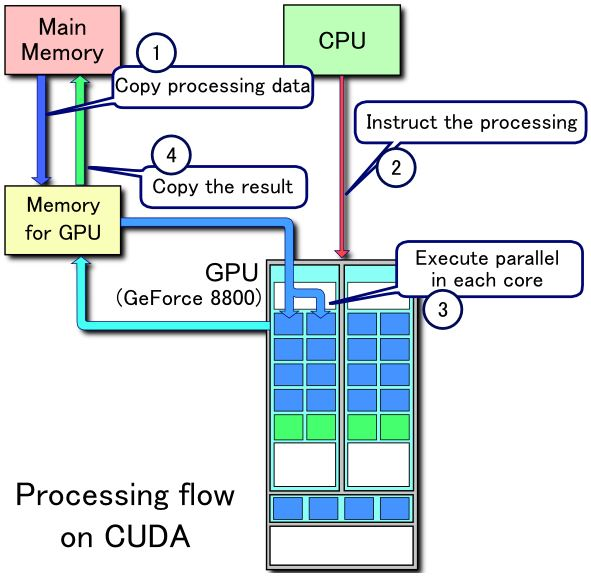

The first step to optimizing this computation is to try to reuse the same values as often as possible, as is done in GPUs, which can load one number and reuse it across several computation units

Systolic arrays are even more efficient, mapping the matrix-matrix multiplication directly to hardware (TPU, Tensor Core), and allowing us to reuse parameters as much as possible.

But these architectures still rely on keeping the parameters on an external memory pool. That means that a lot of time is spent waiting for the numbers to move back and forth from the memory chip.

To make memory transfers faster, we need to increase the memory clock speed, which increases energy consumption even more.

(See HBM3 memory chips next to an H100 core. This chip consumes over 700W)

(See HBM3 memory chips next to an H100 core. This chip consumes over 700W)

Some new startups (Graphcore, Cerebras) are starting to directly design the processing chips with memory and compute mixed together. This reduces the memory transfer overhead to some extent.

However, our brains are way more efficient! The “parameters” in a biological neural network are represented by the strength of synaptic connections, and the computation is done directly on the neuron that receives the signal. Memory and computation happen in the same unit.

Some memory manufacturers are working on in-memory processing systems to truly mix computing and memory in a way that’s much closer to the neuronal processing model.

The tradeoff is that the computations that can be implemented are less general, and to make these chips profitably there needs to be a large, established use-case for them.

At this point, neural network architectures are still evolving quickly and it may create some uncertainty for these manufacturers to settle on a design to mass manufacture. So these kinds of architectures may take a while to mature.

Besides memory locality, there are other factors which could improve efficiency of AI algorithms by orders of magnitude such as sparse computation and analog processing. I’ll cover those later. End of 🧵

• • •

Missing some Tweet in this thread? You can try to

force a refresh