Is it possible that adversarially-trained DNNs are already more robust than the biological neural networks of primate visual cortex? Here is a short thread for our #ICML2022 paper arxiv.org/pdf/2206.11228…. 1/8

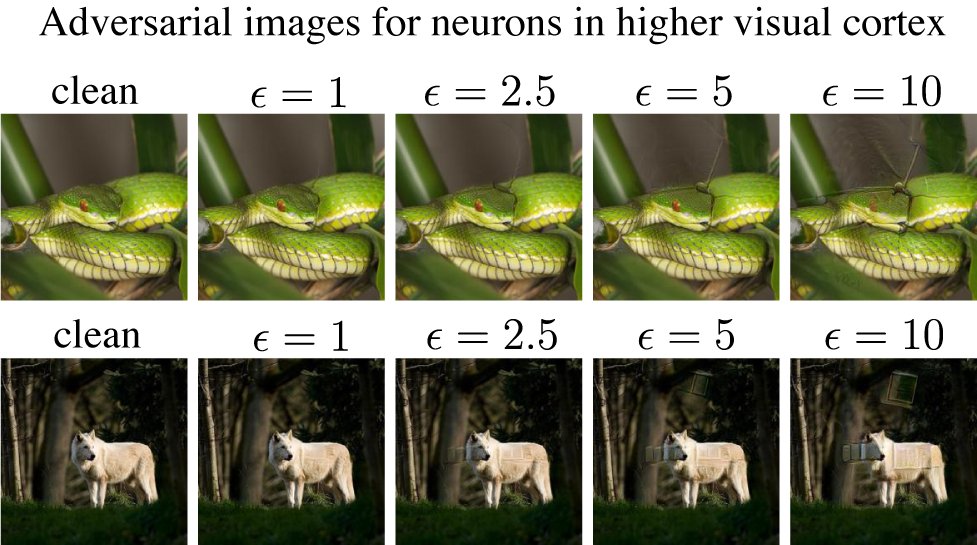

Biological neurons supporting visual recognition in the primate brain are thought to be robust. Using a novel technique, we discovered that neurons are in fact highly susceptible to nearly imperceptible adversarial perturbations. 🙈2/8

So how do biological neurons compare to artificial neurons in DNNs? We find that while biological neurons are less sensitive than units in vanilla DNNs, they are MORE SENSITIVE than units in adversarially trained DNNs. 🤯3/8

All the neurons we recorded are adversarially sensitive (A) and adversarial images can be found near any starting image (B). 4/8

So how is it that primate visual perception seems so robust yet its fundamental units of computation are far more brittle than expected? 5/8

One possibility is that human visual object recognition is actually not robust! We are just fooled into thinking it is robust because we can’t perform a white-box attack on someone’s visual system. 6/8
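For readers new to the term: "white-box" means the attacker can read the model's parameters and follow its gradients. Here is a minimal sketch of one gradient-sign step against a toy sigmoid unit. The unit, its weights, and the helper names (`unit_response`, `white_box_step`) are made up for illustration; this is not the method from the paper, just the general idea of why parameter access makes attacks easy.

```python
import math

def unit_response(weights, bias, image):
    """Toy model unit: a sigmoid of a weighted sum of pixel values."""
    z = sum(w * x for w, x in zip(weights, image)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)

def white_box_step(weights, bias, image, eps):
    """One gradient-sign step that lowers the unit's response.

    White-box: we read the weights directly, which is exactly what
    we cannot do with someone's visual system.
    """
    r = unit_response(weights, bias, image)
    # For a sigmoid unit, d(response)/d(pixel_i) = r * (1 - r) * w_i
    grad = [r * (1.0 - r) * w for w in weights]
    # Move each pixel by at most eps against the gradient, clipped to [0, 1]
    return [min(1.0, max(0.0, x - eps * sign(g))) for x, g in zip(image, grad)]

# Hypothetical toy values, chosen only for illustration
weights = [0.8, -0.5, 0.3, -0.9]
bias = 0.1
image = [0.6, 0.4, 0.7, 0.2]

adv = white_box_step(weights, bias, image, eps=0.05)
before = unit_response(weights, bias, image)
after = unit_response(weights, bias, adv)
print(f"response before: {before:.3f}, after: {after:.3f}")
```

No pixel changes by more than `eps`, yet the response drops, because every pixel is nudged in the direction the gradient says hurts the most.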

Alternatively, there could be an error-correction mechanism at the population level or in a downstream area that decodes object identity. Understanding this may shed some light on how we could build more robust NNs. 7/8

This work was done with the support of @JamesJDiCarlo, in collaboration with the amazing @joeldapello, @gpoleclerc, and @aleks_madry! Come chat with us at #ICML2022! Happy to answer more questions here or in person! 8/8
