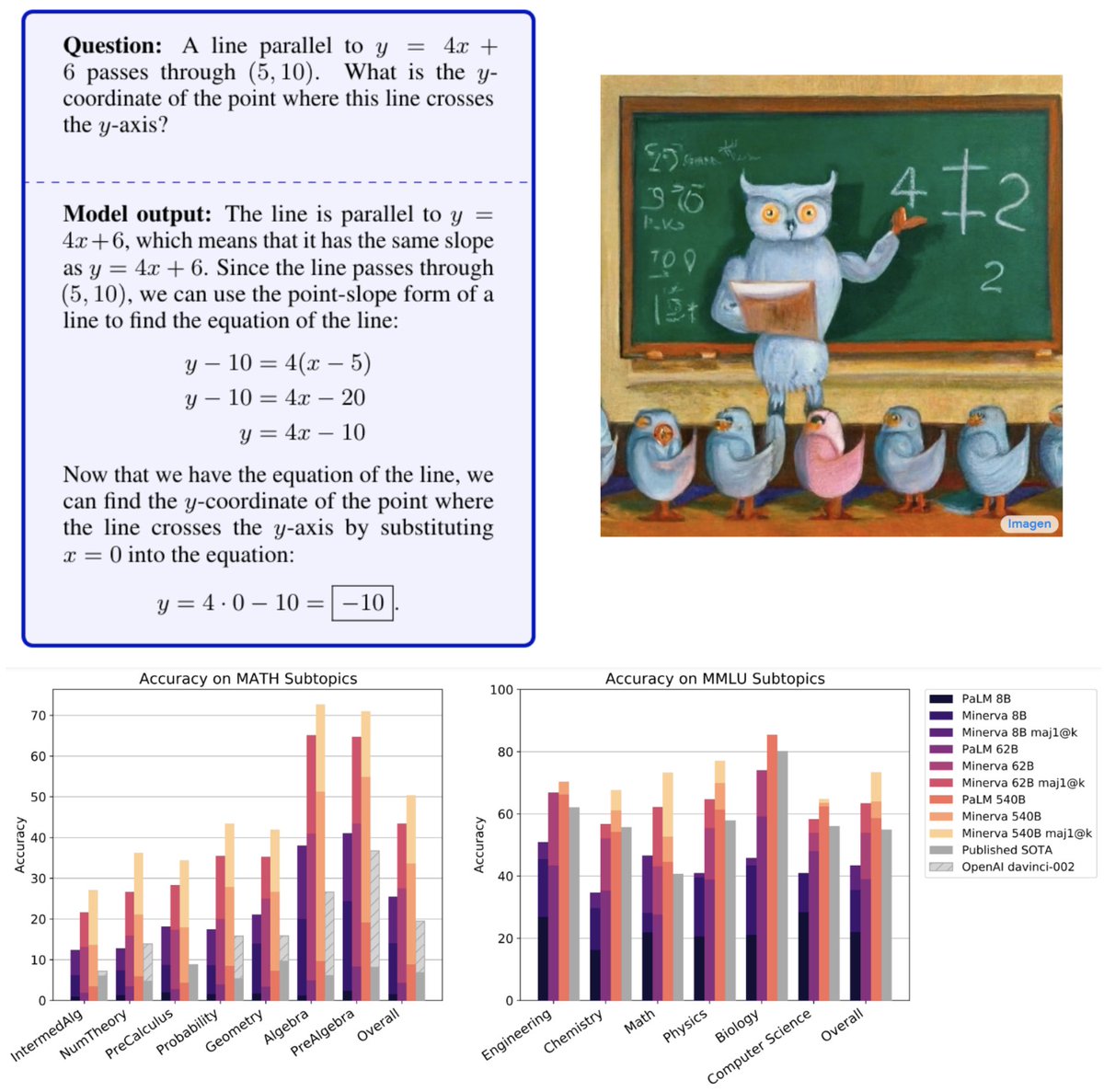

Very excited to present Minerva🦉: a language model capable of solving mathematical questions using step-by-step natural language reasoning.

Combining scale, data, and other techniques dramatically improves performance on the STEM benchmarks MATH and MMLU-STEM. goo.gle/3yGpTN7

Starting from PaLM🌴, Minerva was trained on a large dataset made of webpages with mathematical content and scientific papers. At inference time, we used chain-of-thought/scratchpad and majority voting to boost performance without the assistance of external tools.
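To make the majority-voting step concrete, here is a minimal sketch. It assumes each sampled chain-of-thought solution has already been reduced to a final-answer string; the thread does not say how answers are extracted or how ties are broken, so the function name `majority_vote` and the whitespace normalization are illustrative assumptions, not Minerva's actual pipeline.

```python
from collections import Counter


def majority_vote(answers):
    """Return the most frequent final answer among sampled solutions.

    Assumption: `answers` holds one final-answer string per sampled solution.
    Returns None if no usable answer is present.
    """
    # Normalize lightly so "42" and "42 " count as the same answer.
    cleaned = [a.strip() for a in answers if a is not None and a.strip()]
    if not cleaned:
        return None
    # most_common(1) gives the (answer, count) pair with the highest count;
    # ties go to the answer that was seen first.
    return Counter(cleaned).most_common(1)[0][0]


# Hypothetical final answers from five sampled solutions to one problem.
samples = ["42", "41", "42 ", "42", "7"]
print(majority_vote(samples))  # prints 42
```

The idea is that individual samples may go wrong in different ways, while correct reasoning paths tend to agree on the same final answer.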

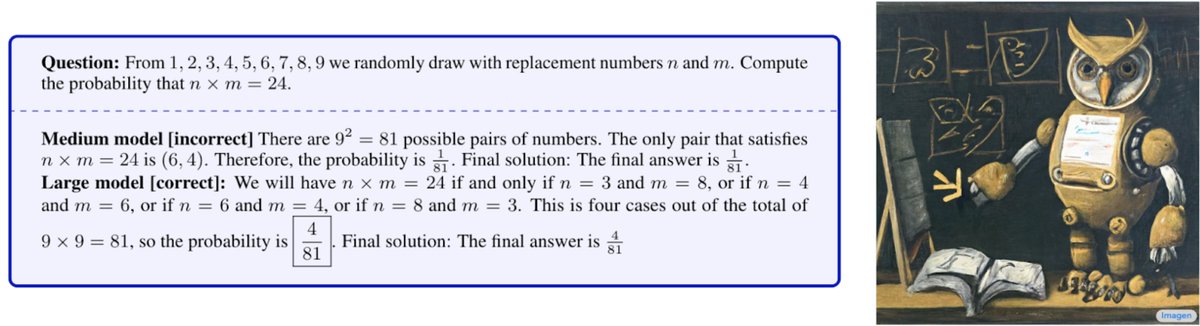

The model's mistakes are easily interpretable. Despite impressive arithmetic skills, it still makes calculation mistakes. We estimated the false positive rate, i.e. how often it reaches the right answer through incorrect reasoning, and found it to be relatively low. More samples: minerva-demo.github.io!

We also evaluated the model on exams: on Poland's 2022 National Math Exam it scored above the national average, it solved more than 80% of GCSE Higher Mathematics problems, and on a variety of undergraduate STEM problems from MIT it solved nearly a third.

Paper: bit.ly/3yuQtIU

Great collaboration with Anders, @dmdohan, @ethansdyer, @hmichalewski, @vinayramasesh, @AmbroseSlone, @cem__anil, Imanol, Theo, @Yuhu_ai_, @bneyshabur, @guygr and @vedantmisra !
