VGG, U-Net, TCN, ... CNNs are powerful but must be tailored to specific problems, data-types, -lenghts & -resolutions.

Can we design a single CNN that works well on all these settings?🤔Yes! Meet the 𝐂𝐂𝐍𝐍, a single CNN that achieves SOTA on several datasets, e.g., LRA!🔥

Can we design a single CNN that works well on all these settings?🤔Yes! Meet the 𝐂𝐂𝐍𝐍, a single CNN that achieves SOTA on several datasets, e.g., LRA!🔥

Accepted at the #ICML2022 #Workshop on #ContinuousTimeMethods.

Joint work with @davidknigge, @_albertgu, @erikjbekkers, @egavves, @jmtomczak & Mark Hoogendoorn.

Paper: arxiv.org/abs/2206.03398

Code: github.com/david-knigge/c…

Slides: app.slidebean.com/p/gk5j826nq7/C…

Joint work with @davidknigge, @_albertgu, @erikjbekkers, @egavves, @jmtomczak & Mark Hoogendoorn.

Paper: arxiv.org/abs/2206.03398

Code: github.com/david-knigge/c…

Slides: app.slidebean.com/p/gk5j826nq7/C…

𝐌𝐚𝐢𝐧 𝐈𝐝𝐞𝐚: Architecture changes are needed to model long range dependencies for signals of different length, res & dims, e.g., pooling, depth, kernel sizes.

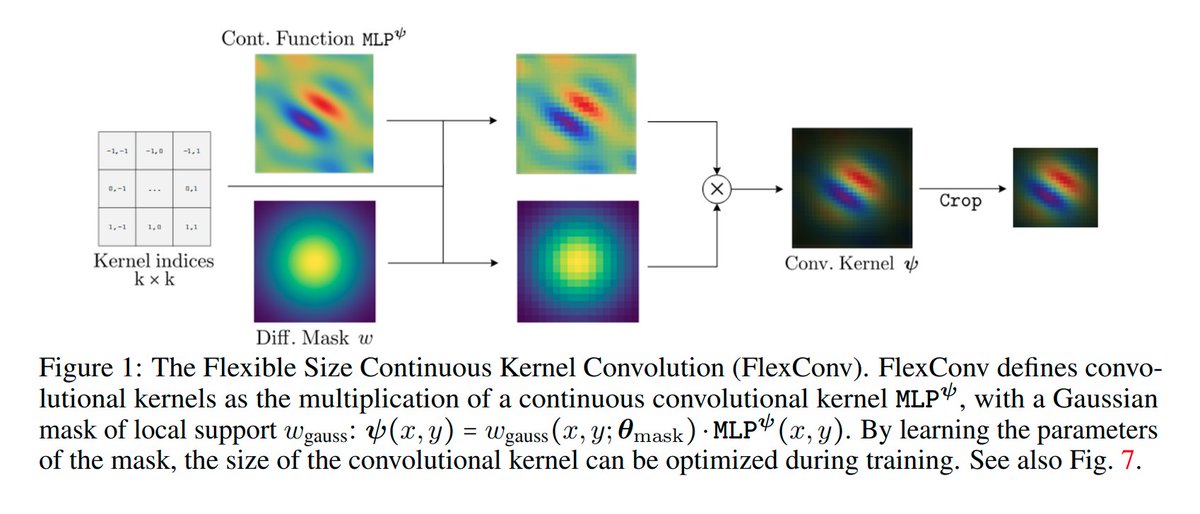

To solve all tasks with a single CNN, it must model long range deps. at every layer: use Continuous Conv Kernels!

To solve all tasks with a single CNN, it must model long range deps. at every layer: use Continuous Conv Kernels!

𝐂𝐨𝐧𝐭𝐢𝐧𝐮𝐨𝐮𝐬 𝐂𝐨𝐧𝐯. 𝐊𝐞𝐫𝐧𝐞𝐥𝐬 allow you to create conv. kernels of arbitrary length and dimensionlity that generalize across resolutions by parameterizing them with a neural net.

𝐒𝟒 𝐯𝐬 𝐂𝐂𝐍𝐍. Inspired by the powerful S4 model (arxiv.org/abs/2111.00396), we use a variation of their residual blocks, which we call S4 Blocks.

However, in contrast to S4, which only works with 1D signals, CCNNs easily model long range dependencies in ND.

However, in contrast to S4, which only works with 1D signals, CCNNs easily model long range dependencies in ND.

𝐑𝐞𝐬𝐮𝐥𝐭𝐬 𝟏𝐃. CCNNs obtain SOTA on several sequential benchmarks, e.g., Long Range Arena, Speech Recognition, 1D img classification, all with a single architecture.

CCNNs are often smaller and simpler than other methods.

CCNNs are often smaller and simpler than other methods.

𝐑𝐞𝐬𝐮𝐥𝐭𝐬 𝟐𝐃. With a single architecture, the CCNN matches & surpasses much deeper CNNs!

𝐋𝐨𝐧𝐠 𝐑𝐚𝐧𝐠𝐞 𝐀𝐫𝐞𝐧𝐚 𝐢𝐧 𝟐𝐃. Some LRA tasks are defined on 2D data. Using this info -not possible for other methods, eg S4- CCNNs easily get much getter results faster!

𝐋𝐨𝐧𝐠 𝐑𝐚𝐧𝐠𝐞 𝐀𝐫𝐞𝐧𝐚 𝐢𝐧 𝟐𝐃. Some LRA tasks are defined on 2D data. Using this info -not possible for other methods, eg S4- CCNNs easily get much getter results faster!

𝐂𝐨𝐧𝐜𝐥𝐮𝐬𝐢𝐨𝐧. The CCNN is a single CNN architecture that works well on several tasks on data with different lenghts, resolutions & dimensionality.

𝐅𝐮𝐭𝐮𝐫𝐞 𝐖𝐨𝐫𝐤. We plan to extend our results to 3D data & other tasks, e.g., segmentation, generative modelling.

𝐅𝐮𝐭𝐮𝐫𝐞 𝐖𝐨𝐫𝐤. We plan to extend our results to 3D data & other tasks, e.g., segmentation, generative modelling.

𝐂𝐨𝐥𝐥𝐚𝐛𝐨𝐫𝐚𝐭𝐢𝐨𝐧𝐬. Would you like to collaborate with us on this project? Ping me!😁We want to have an extensive list of experiments in this project & we are sure we can use your expertise and use case!

𝐀𝐜𝐤𝐧𝐨𝐰𝐥𝐞𝐝𝐠𝐞𝐦𝐞𝐧𝐭𝐬. This is part of my Qualcomm Innovetion Fellowship series. I'd like to thank @QCOMResearch & my mentor @danielewworrall for their support!

Details w.r.t. the fellowship:

qualcomm.com/research/unive…

Details w.r.t. the fellowship:

qualcomm.com/research/unive…

• • •

Missing some Tweet in this thread? You can try to

force a refresh