Neural nets are brittle under domain shift & subpop shift.

We introduce a simple mixup-based method that selectively interpolates datapts to encourage domain-invariance

ICML 22 paper: arxiv.org/abs/2201.00299

w/ @HuaxiuYaoML Yu Wang @zlj11112222 @liang_weixin @james_y_zou (1/3)

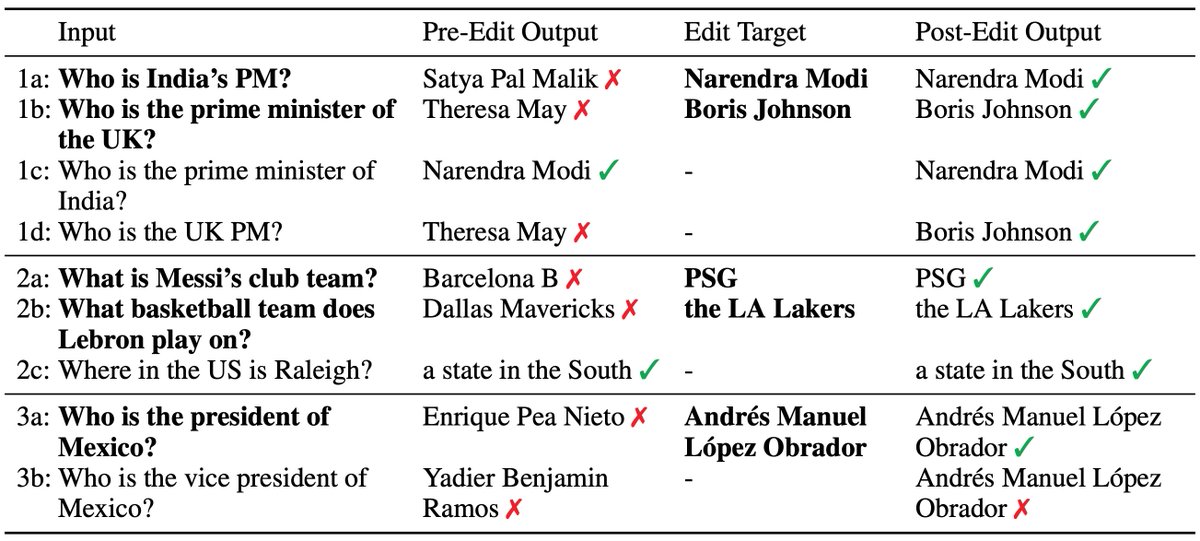

Prior methods encourage domain-invariant *representations*.

This constrains the model's internal representation

By using mixup to interpolate within & across domains, we get domain-invariant *predictions* w/o constraining the model's representation.

Less constraining -> better performance

(2/3)

The method is also quite simple to implement.
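
To give a feel for it, here is a rough NumPy sketch of selective mixup on one batch. It is not the code from the repo: `selective_mixup`, `p_sel`, and `alpha` are names made up for illustration, and the pairing rule (same label but different domain, or same domain but different label) is an assumption about how "selectively" plays out in practice.

```python
import numpy as np

def selective_mixup(x, y, d, rng, alpha=2.0, p_sel=0.5):
    """Mix each example with a selectively chosen partner (illustrative sketch).

    x: (n, features) float inputs, y: (n, classes) one-hot labels,
    d: (n,) integer domain ids, rng: np.random.Generator.
    """
    n = len(x)
    labels = y.argmax(axis=1)
    lam = rng.beta(alpha, alpha)        # one mixing weight for the whole batch
    across = rng.random() < p_sel       # pick one pairing strategy per batch
    partner = np.arange(n)              # fall back to self if no valid partner
    for i in range(n):
        if across:
            # across domains: same label, different domain
            mask = (labels == labels[i]) & (d != d[i])
        else:
            # within a domain: same domain, different label
            mask = (d == d[i]) & (labels != labels[i])
        candidates = np.flatnonzero(mask)
        if candidates.size:
            partner[i] = rng.choice(candidates)
    # standard mixup interpolation of inputs and labels
    x_mix = lam * x + (1 - lam) * x[partner]
    y_mix = lam * y + (1 - lam) * y[partner]
    return x_mix, y_mix
```

You would then train on `(x_mix, y_mix)` with the usual loss on soft labels. Nothing else about the model or its representation changes.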

Code: github.com/huaxiuyao/LISA

#ICML2022 Paper: arxiv.org/abs/2201.00299

WILDS Leaderboard: wilds.stanford.edu/leaderboard/

See Huaxiu's thread for much more!

https://twitter.com/HuaxiuYaoML/status/1478243699771412481

(3/3)
