0/ Introducing TACTiS, a transformer-based model for multivariate probabilistic time series prediction. It 1) supports forecasting, interpolation... 2) supports unaligned and non-uniformly sampled time series, and 3) can scale to hundreds of time series. Curious? Read on to learn more…

1/ The TACTiS model infers the joint distribution of masked time points given observed time points in multivariate time series. It enables probabilistic multivariate forecasting, interpolation, and backcasting according to arbitrary masking patterns specified at inference time.
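To make "arbitrary masking patterns" concrete, here is a minimal sketch of three such patterns as 0/1 arrays. The helper `make_mask` and its arguments are hypothetical names for illustration, not part of the TACTiS code; it follows the thread's convention that 1 means observed and 0 means masked.

```python
import numpy as np

def make_mask(num_series, num_steps, task, span):
    """Return m with m[i, j] = 1 for observed points and 0 for masked ones."""
    m = np.ones((num_series, num_steps), dtype=int)
    if task == "forecast":        # hide the last `span` steps
        m[:, num_steps - span:] = 0
    elif task == "backcast":      # hide the first `span` steps
        m[:, :span] = 0
    elif task == "interpolate":   # hide `span` steps in the middle
        start = (num_steps - span) // 2
        m[:, start:start + span] = 0
    else:
        raise ValueError(f"unknown task: {task}")
    return m

for task in ("forecast", "interpolate", "backcast"):
    # Print the mask of the first series for each task.
    print(task, make_mask(2, 8, task, span=3)[0])
```

Since the mask is just an input, the same trained model could in principle be queried with any of these patterns at inference time.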

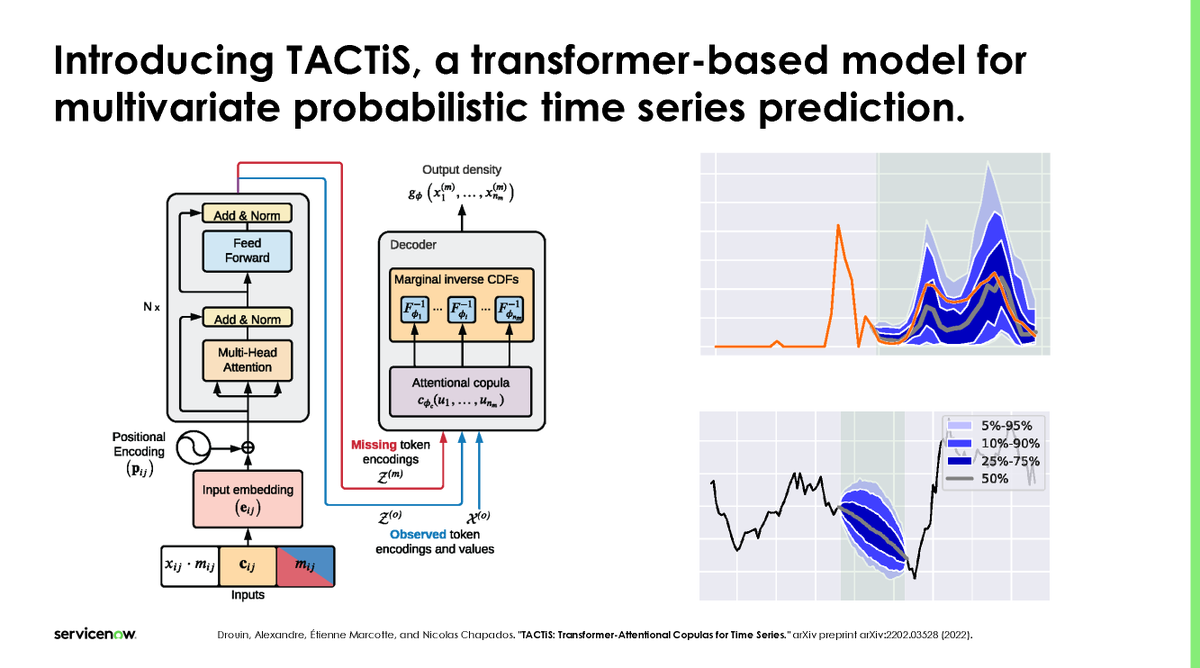

2/ TACTiS is an encoder-decoder model similar to standard transformers. Our main contribution, attentional copulas, belongs to the decoder. However, both components have key features that enable the great flexibility of TACTiS. Let’s dive into these…

3/ Encoder: each point in each time series is treated as a distinct time-stamped token. Some are masked (not observed, i.e. m_ij = 0) and are predicted based on those observed (m_ij = 1). For each point, we can specify covariates c_ij to provide additional context.
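A rough sketch of what "one token per point" could look like for two unaligned series. The `Token` class, the `tokenize` helper, and the `forecast_from` cutoff are hypothetical illustrations, not the actual TACTiS data structures.

```python
from dataclasses import dataclass
from typing import Tuple

@dataclass
class Token:
    series: int        # i: which time series the point belongs to
    timestamp: float   # when the point was measured
    value: float       # x_ij (to be predicted when the token is masked)
    observed: int      # m_ij: 1 if observed, 0 if masked
    covariates: Tuple[float, ...] = ()  # c_ij, optional extra context

def tokenize(series, forecast_from):
    """Flatten all series into one token list; mask points at or after the cutoff."""
    tokens = []
    for i, points in enumerate(series):
        for t, x in points:
            tokens.append(Token(i, t, x, int(t < forecast_from)))
    return tokens

series = [
    [(0.0, 1.2), (1.0, 1.5), (2.0, 1.1), (3.0, 0.9)],  # regularly sampled
    [(0.4, 7.0), (2.7, 6.5)],                          # irregular and unaligned
]
tokens = tokenize(series, forecast_from=2.5)
print(len(tokens), sum(tok.observed for tok in tokens))
```

Because every token carries its own timestamp, the series do not need a shared sampling grid.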

4/ Decoder: learns to parametrize the conditional joint distribution of masked tokens given observed tokens. It captures co-dependencies between variables using a non-parametric copula. Combined with variable-specific marginals, this lets us model arbitrary multivariate distributions (Sklar's theorem).
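For reference, Sklar's theorem in generic notation (the symbols below are standard textbook ones, not taken from the thread): any joint CDF F with marginal CDFs F_1, ..., F_d can be written through a copula C, which is a CDF on the unit cube with uniform marginals. For continuous distributions the joint density factorizes accordingly.

```latex
F(x_1, \dots, x_d) = C\bigl(F_1(x_1), \dots, F_d(x_d)\bigr),
\qquad
f(x_1, \dots, x_d) = c\bigl(F_1(x_1), \dots, F_d(x_d)\bigr) \prod_{k=1}^{d} f_k(x_k)
```

Here c is the copula density and f_k are the marginal densities, so the dependence structure and the marginals can be modeled separately.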

5/ TACTiS defines an implicit copula using an attention mechanism over observed tokens and previously-decoded ones, making it non-parametric. It decodes auto-regressively, similar to NADE. The marginals are defined for each variable using univariate flows.
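As a toy illustration of the decoding order only: sample each u_k in [0, 1] conditionally on the previously decoded ones, then push it through an inverse marginal CDF. Everything here is an assumption for illustration. `toy_bin_probs` is a hypothetical stand-in for the attention network, the binned conditional is one possible parametrization, and the closed-form inverse CDFs replace the learned flows. Unlike the trained model, this toy makes no attempt to keep the later u_k uniform, so it is not a valid copula.

```python
import numpy as np

def toy_bin_probs(previous_u, num_bins):
    """Hypothetical stand-in for the attention network: bin probabilities on [0, 1]."""
    if len(previous_u) == 0:
        return np.full(num_bins, 1.0 / num_bins)          # first variable: uniform
    centers = (np.arange(num_bins) + 0.5) / num_bins
    logits = -8.0 * (centers - np.mean(previous_u)) ** 2  # favour bins near earlier draws
    probs = np.exp(logits - logits.max())
    return probs / probs.sum()

def sample_autoregressive(num_vars, inverse_cdfs, rng, num_bins=20):
    """Decode u_1, u_2, ... one at a time, then map each through its marginal."""
    u = []
    for k in range(num_vars):
        probs = toy_bin_probs(np.array(u), num_bins)
        b = rng.choice(num_bins, p=probs)             # pick a bin given earlier draws
        u.append((b + rng.uniform()) / num_bins)      # uniform position inside the bin
    u = np.array(u)
    x = np.array([inverse_cdfs[k](u[k]) for k in range(num_vars)])
    return u, x

rng = np.random.default_rng(0)
# Illustrative marginals: exponential, uniform on [0, 10], and a power law.
inverse_cdfs = [lambda u: -np.log1p(-u), lambda u: 10.0 * u, lambda u: u ** 2]
u, x = sample_autoregressive(3, inverse_cdfs, rng)
print(u, x)
```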

6/ Decoding proceeds autoregressively, one variable at a time. We show convergence of the scheme to a proper copula.

7/ TACTiS offers state-of-the-art forecasting performance, outperforming previously best-in-class models on real-world datasets with hundreds of time series.

8/ TACTiS performs well across datasets representing a significant cross-section of common use cases.

9/ TACTiS is very flexible and can approximate the Bayesian posterior distribution in time series interpolation.

12/ If you’re attending ICML 2022 in person, come to the Spotlight presentation, Tue 19 Jul 4:20 p.m. – 4:25 p.m. EDT, room 327–329.

14/ Here is the official link to the paper.

Alexandre Drouin, Étienne Marcotte, Nicolas Chapados (2022). TACTiS: Transformer-Attentional Copulas for Time Series. International Conference on Machine Learning (ICML 2022).

proceedings.mlr.press/v162/drouin22a…

Watch the replay of @alexandredrouin presenting the @icmlconf Spotlight Paper for TACTiS: Transformer-Attentional Copulas for Time Series. #ICML2022

slideslive.com/embed/presenta…
