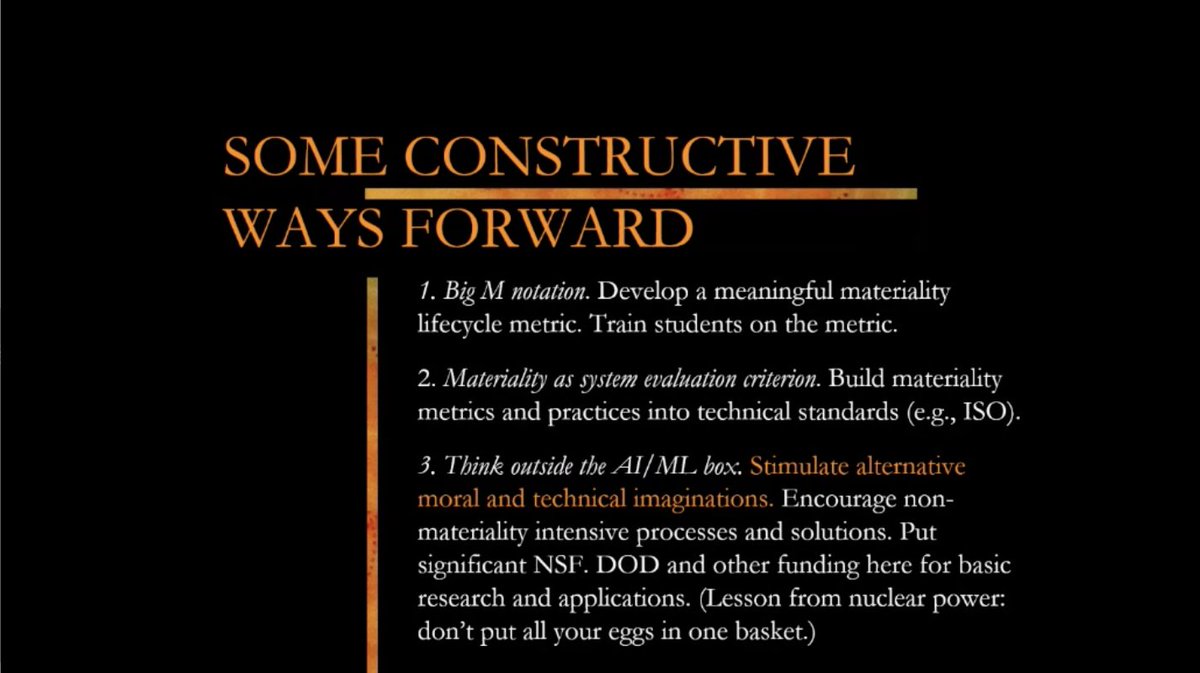

Thinking back to Batya Friedman (of UW's @TechPolicyLab and Value Sensitive Design Lab)'s great keynote at #NAACL2022. She ended with some really valuable ideas for going forward, in these slides:

Here, I really appreciated 3 "Think outside the AI/ML box".

>>

Here, I really appreciated 3 "Think outside the AI/ML box".

>>

As societies and as scientific communities, we are surely better served by exploring multiple paths rather than piling all resources (funding, researcher time & ingenuity) on MOAR DATA, MOAR COMPUTE! Friedman points out that this is *environmentally* urgent as well.

>>

>>

Where above she draws on the lessons of nuclear power (what other robust sources of non-fossil energy would we have now, if we'd spread our search more broadly back then?) here she draws on the lessons of plastics: they are key for some use case (esp medical). >>

Similarly, there may be life-critical or other important cases where AI/ML really is the best bet, and we can decide to use it there, being mindful that we are using something that has impactful materiality and so should be used sparingly.

>>

>>

Finally, I really appreciated this message of responsibility of the public. How we talk about these things matters, because we need to be empowering the public to make good decisions around regulation.

>>

>>

As an example, she gives an alternative visualization of "the cloud" that makes its materiality more apparent (but still feels some steps removed from e.g. the mining operations required to create that equipment).

>>

>>

Friedman's emphasis was on materiality & the environment, but this point holds equally true for the way we communicate about what so-called "AI" does, how it relates to data, etc.

Thanks again to Batya for such a great talk and to #NAACL2022 for bringing her to us!

Thanks again to Batya for such a great talk and to #NAACL2022 for bringing her to us!

• • •

Missing some Tweet in this thread? You can try to

force a refresh