.@Helium, often cited as one of the best examples of a Web3 use case, has received $365M of investment led by @a16z.

Regular folks have also been convinced to spend $250M buying hotspot nodes, in hopes of earning passive income.

The result? Helium's total revenue is $6.5k/month.

Members of the r/helium subreddit have been increasingly vocal about seeing poor Helium returns.

On average, they spent $400-800 to buy a hotspot. They were expecting $100/month, enough to recoup their costs and enjoy passive income.

Then their earnings dropped to only $20/month.

These folks still hold out false hope of a positive ROI. They don't realize that their share of data-usage revenue isn't actually $20/month; it's $0.01/month.

The other $19.99 is a temporary subsidy from investment in growing the network, and speculation on the value of the $HNT token.

Meanwhile, according to Helium network rules, $300M (30M $HNT) per year gets siphoned off by @novalabs_, the corporation behind Helium.

This "revenue" on the books, which comes mainly from retail speculators, is presumably what justified such an aggressive investment by @a16z.

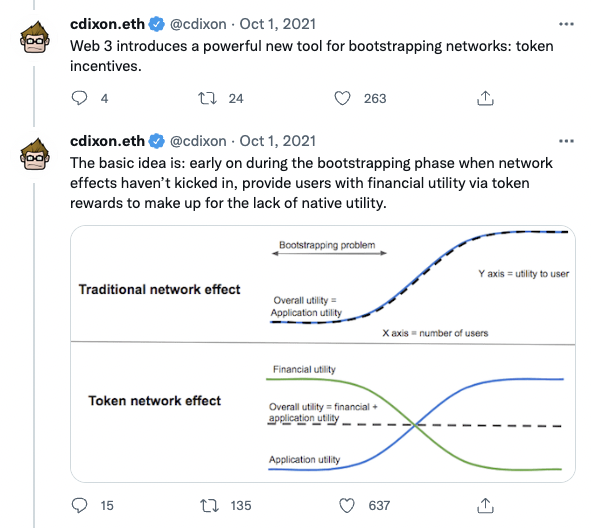

.@cdixon's "mental model" thread on Helium claims that this kind of network can't be built in Web2 because it requires token incentives.

But the facts indicate Web2 *won’t* incentivize Helium because demand is low. Even with a network of 500k hotspots, revenue is nonexistent.

The complete lack of end-user demand for Helium should not have come as a surprise.

A basic LoRaWAN market analysis would have revealed that this was a speculation bubble around a fake, overblown use case.

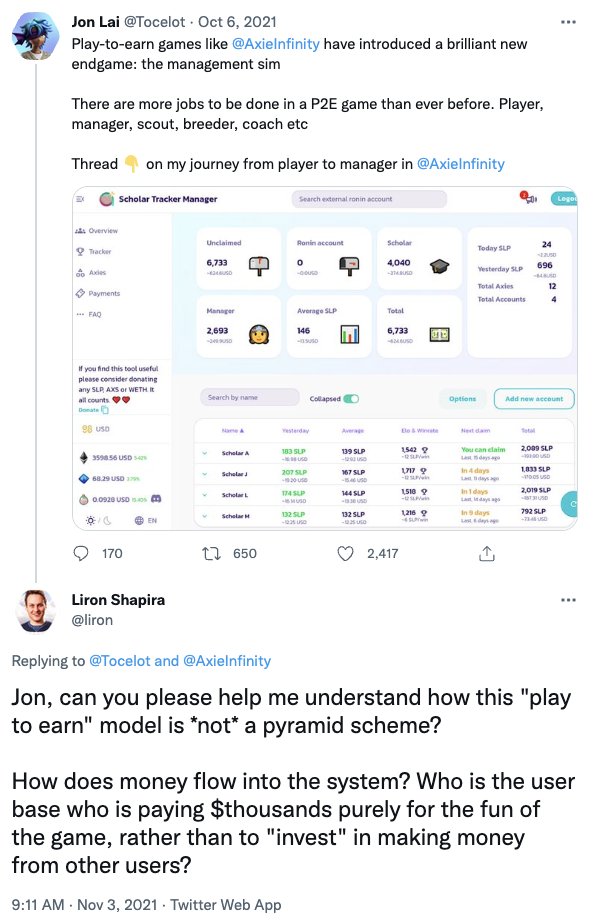

The ongoing Axie Infinity debacle is another case of @a16z's documented thought process being shockingly disconnected from reality: skeptics were vindicated within months, at the expense of unsophisticated end users turned investors.

https://twitter.com/liron/status/1540035327665967105

More generally, I posit the two keys to understanding Web3 are:

1) Beware of easy money schemes

2) Beware of #HollowAbstractions

When proponents like @cdixon promise riches to come via abstract "mental models", we can gently guide them to focus on money flows and use cases.

I posed this question to the @a16z partner involved with Axie Infinity: "How does money flow into the system?"

He blocked me.

The tech community deserves better.

Let's continue to press for answers to simple questions about Web3's money flows and use cases.

Helium leadership responded…

https://twitter.com/liron/status/1552102241259163648