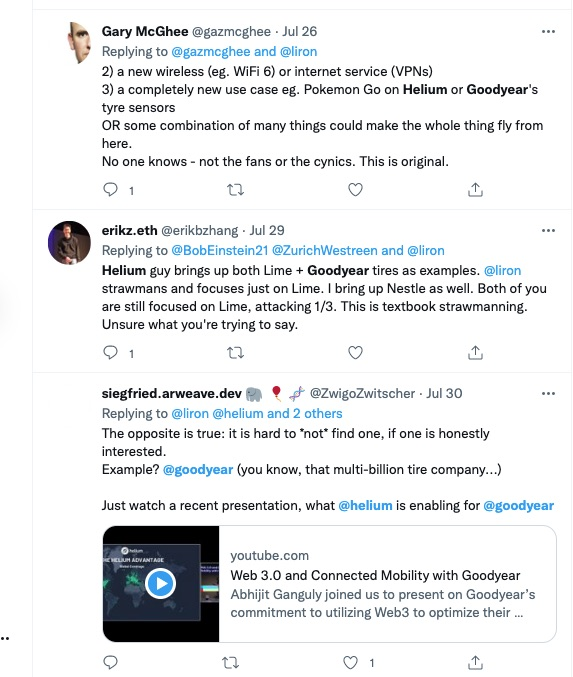

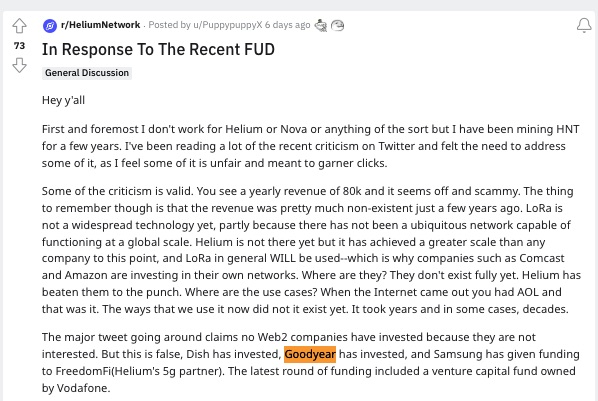

.@Helium supporters have been accusing me of FUD.

To encourage one another to stay positive, they cite exciting corporate partnerships such as... @Goodyear Tire & Rubber.

Maybe I can help them perform a sanity check before they pin their hopes on this promising "customer".

Believers in the Goodyear/Helium partnership envision a future where your vehicle connects to the internet... through its tires.

Inspiring.

I'd hate to burst their bubble, but a Goodyear Ventures investment with the goal to "learn about new mobility" isn't proof of real demand.

To learn more, I watched this presentation by @AbhijitCVC of Goodyear Ventures:

Does Goodyear have a plan for giving tire sensor devices their own internet connection?

Not really, says Abhijit: "Assume we have the right sensors, and we don't yet..."

This slide presents Goodyear's next steps. They're going to "explore use cases", i.e. they don't yet have a clear one.

When you're pinning your hopes on a vague, futuristic-sounding slide from a corporate VC, you're on track to be a #BloatedMVP of Goodyear Blimp proportions.

I'm not spreading gratuitous FUD here. I've just heard enough startup pitches to call out the #HollowAbstractions.

The reality on the ground is that Goodyear has no compelling use case. Neither does Helium's LoRaWAN network to date.

I fixed that tweet for you, @AbhijitCVC.

.@AbhijitCVC thanks for the pic of your team gluing a sensor to a tire, but the key question that remains unvalidated is whether a car's tires should be architected to connect directly to the internet using a decentralized network of LoRa hotspots.

https://twitter.com/AbhijitCVC/status/1554484605201293312
