.@a16z, @Accel and @paradigm looked directly at a blatant Ponzi scheme, Axie Infinity.

They called it “play-to-earn” and invested $311M into its parent company.

Then it collapsed.

How Web3 VCs stumbled into funding a Ponzi. 🧵

First, let’s be clear that Axie really is a Ponzi scheme. To quote @matt_levine's newsletter from last month: “Axie Infinity is a Ponzi scheme”.

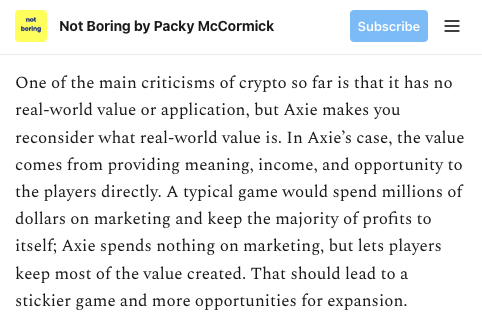

This viral Substack essay by @packyM, published July 19, 2021, is representative of last year’s peak VC hype around Axie: notboring.co/p/infinity-rev…

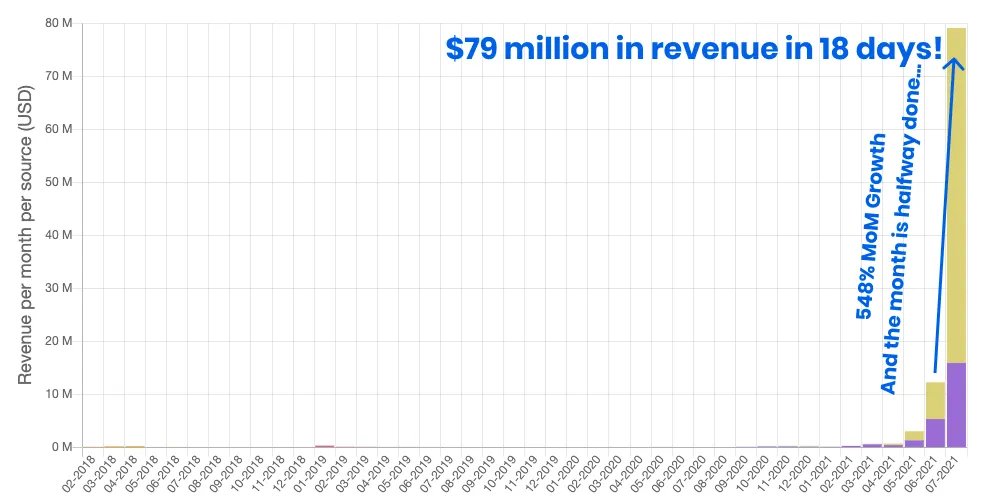

For context, VCs are used to measuring companies by their revenue growth.

Exponential growth is taken as a sign that a startup has discovered a lucrative new business model.

Axie’s revenue growth was off the charts.

Packy was fully in that VC mindset when he attempted to explain Axie’s revenue growth to his readers.

He claimed it was a result of a uniquely blockchain-enabled innovation: “letting players keep most of the value created”.

A similar VC-brain analysis was promulgated by @cdixon.

Chris claimed that Axie’s revenue growth came from the success of innovations like “letting users participate in the financial upside of the community” and “lowering take rates”.

Thanks to VCs' enthusiastic but misguided endorsement of Axie's business model, the term “play-to-earn” (P2E) became a trendy buzzword in the VC community, and more money poured in.

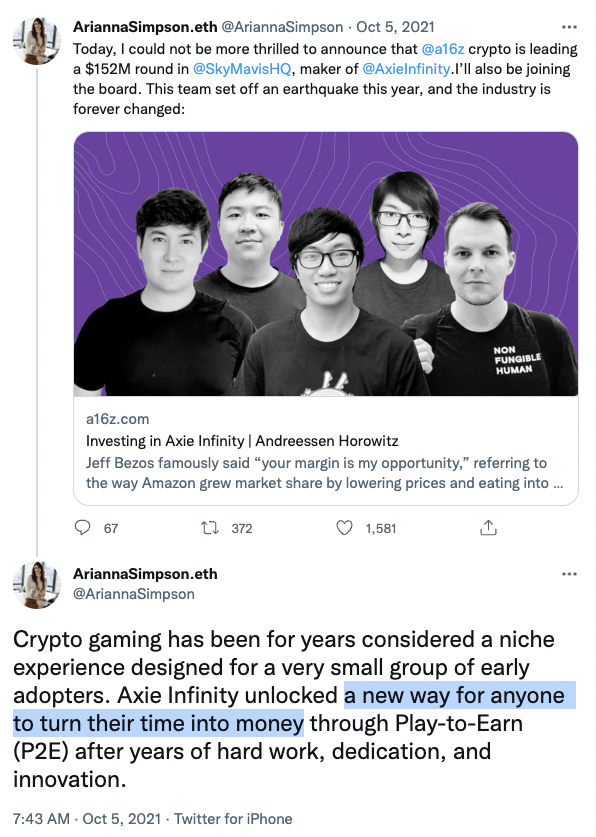

On Oct 5, 2021, @a16z led a $152M funding round in Axie Infinity maker @SkyMavisHQ.

@AriannaSimpson, the partner who joined Sky Mavis’s board, described the game as “a new way for anyone to turn their time into money”.

Packy's Substack post never mentioned or addressed any doubts about whether Axie might be a Ponzi scheme.

He did, however, like a comment arguing that Ponzis are similar to regular businesses.

Multiple comments dating back to July 2021 correctly identified why Axie is a Ponzi. None received a like or reply from Packy.

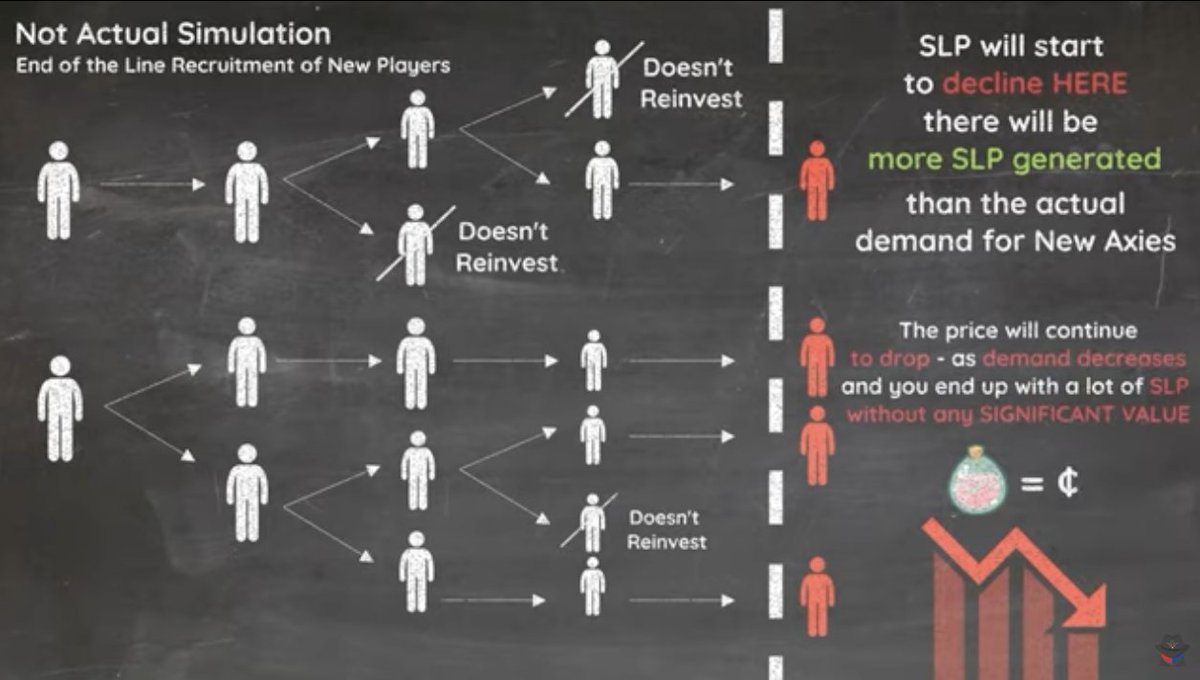

Prior to Packy’s post, others had already caught on to the fact that Axie is structurally a Ponzi scheme.

If any VC had searched “Axie Infinity” on YouTube, they'd have been able to watch this helpful animated explainer that was posted Jul 4, 2021:

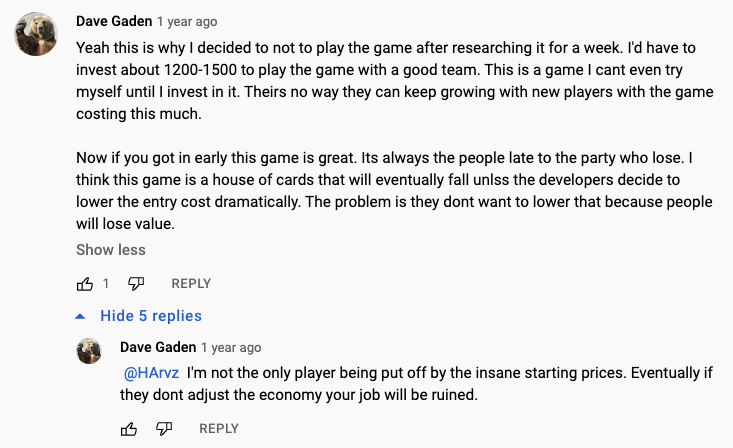

One YouTube commenter, who had been hoping for an opportunity to “play to earn”, decided to steer clear of Axie.

He correctly understood that, despite the potential for large sums of money, he was more likely to *lose* money playing the game than to earn it.

Axie Infinity’s revenue peaked in Aug 2021, just one month after Packy’s post.

The truth is, we've never been looking at the revenue graph of a promising startup. We’ve been looking at the revenue graph of a Ponzi.

After the scheme's inevitable collapse, news organizations reported that thousands of players had been left financially worse off.

But it shouldn’t have been a surprise to any qualified analyst that this Ponzi scheme played out the way Ponzis always do.

What lesson can we take away from Axie’s rise and fall?

Crypto throws a wrench into the usual analysis of a startup’s growth.

Analysts must distinguish positive-sum demand from demand for easy money. Don’t be fooled by what users are saying: even users can’t tell the difference.

I was hoping some VCs would publicly acknowledge last year's errors in judgement.

They simply didn't realize that a Ponzi scheme could put up the same dazzling growth numbers as a high-performing startup.

Recent commenters on Packy's Substack post hoped for a post-mortem too.

By the way, while this thread has largely focused on Packy, it’s only because he’s been one of Axie’s biggest champions. He also advises @a16z Crypto, the largest fund of its kind.

Rest assured, plenty of other VCs were making the same arguments for Axie and play-to-earn gaming.

I recently put together this video to show how @cdixon and @AriannaSimpson are framing the situation.

I'm not seeing any acknowledgement of, or accountability for, the serious flaws in their 2021 Axie analysis, which is disappointing this late in the game.

https://twitter.com/i/status/1540035327665967105

The closest we have to a post-mortem is from @DKThomp’s excellent podcast last month.

How is @packyM reflecting on the decision to fund and hype Axie?

Here’s his answer.

I also highly recommend the full interview: theringer.com/2022/7/26/2327…

Packy’s post-mortem is that “[Axie’s] economics weren’t ready for that kind of usage”, which couldn’t possibly have been predicted in 2017-18.

Really?

The graph that stoked his excitement in Jul ’21 was pure Ponzi.

I’d love to see more accountability from VCs who hyped this.

If you’re still on the fence about whether Web3 is a #HollowAbstraction, consider this question:

If crypto VCs can stumble into funding a Ponzi on the blockchain, where else are they unintentionally misleading everyone?