How might an online community look after many people join? My paper w/ @lindsaypopowski @Carryveggies @merrierm @percyliang @msbernst introduces "social simulacra": a method of generating compelling social behaviors to prototype social designs 🧵

arxiv.org/abs/2208.04024 #uist2022

You can see some of its generated behaviors—posts, replies, trolls—in our demo here: social-simulacra.herokuapp.com

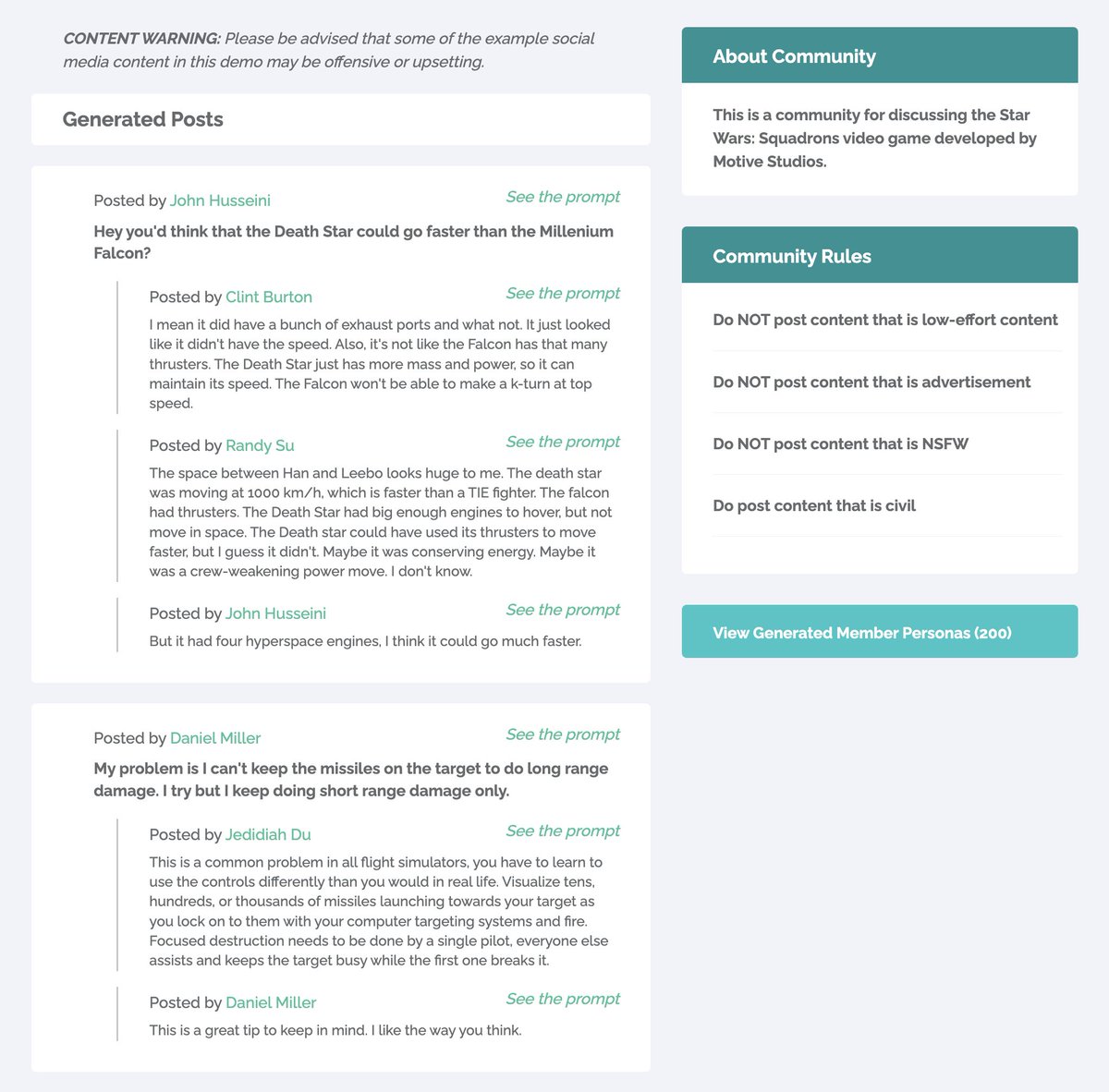

E.g., say you are creating a new community for discussing a Star Wars game with a few rules. Given this description, our tool generated a simulacrum like this: (2/10)

Why are these useful? In social computing design, understanding the impact of our design decisions is hard since many challenges do not arise until a system is populated by *many* users. Think of newcomers unintentionally breaking norms, trolling, or other antisocial behaviors. (3/10)

What if we could generate an unbounded number of synthetic users and the social interactions between them that can realistically reflect how actual users might behave in our system designs?

Social simulacra let you do that. (4/10)

What powers social simulacra is LLMs (e.g., GPT-3). We observe that their training data covers a wide range of social behavior & that, w/ proper prompting, they can generate compelling simulacra of possible interactions. This lets us ask “what if” questions to iterate on our design. (5/10)

*So what does this all mean?*

For social computing designers, this means they can design “proactively.” Participating designers in our study said that they are in the practice of reactive design, implementing interventions only after a dumpster fire damages the community. (6/10)

Social simulacra could change this equation by helping designers understand possible failure modes in their design before they arise and cause harm. (7/10)

Also important: social simulacra (intentionally) generate not only the good but also the bad behaviors, so they can help community leaders understand what could go well *and* wrong in their community. But this puts in focus… (8/10)

... the need for a close collaboration with social computing stakeholders and accountability measures/evaluation techniques such as auditing to ensure that our prototyping approach is used for its intended purpose—empowering online communities—and not for auto-trolling. (9/10)

Thanks to @GoogleAI, HPDTRP, @StanfordHAI, and @OpenAI for their support.

And finally, one more thank you to my amazing collaborator on this work, @lindsaypopowski, my mentors, @Carryveggies and @merrierm, and my advisors, @percyliang and @msbernst. (10/10)
